You Only Look Twice: Rapid Multi-Scale Object Detection In Satellite Imagery

Adam Van Etten

CosmiQ Works, In-Q-Tel

avanetten@iqt.org

ABSTRACT

Detection of small objects in large swaths of imagery is one of the primary problems in satellite imagery analytics. While object detection in ground-based imagery has benefited from research into new deep learning approaches, transitioning such technology to overhead imagery is nontrivial. Among the challenges is the sheer number of pixels and geographic extent per image: a single DigitalGlobe satellite image encompasses $> 64\ \mathrm{km}^2$ and over 250 million pixels. Another challenge is that objects of interest are minuscule (often only $\sim 10$ pixels in extent), which complicates traditional computer vision techniques. To address these issues, we propose a pipeline (You Only Look Twice, or YOLT) that evaluates satellite images of arbitrary size at a rate of $\geq 0.5\ \mathrm{km}^2/\mathrm{s}$. The proposed approach can rapidly detect objects of vastly different scales with relatively little training data over multiple sensors. We evaluate large test images at native resolution, and yield scores of $F_1 > 0.8$ for vehicle localization. We further explore resolution and object size requirements by systematically testing the pipeline at decreasing resolution, and conclude that objects only $\sim 5$ pixels in size can still be localized with high confidence. Code is available at https://github.com/CosmiQ/yolt

KEYWORDS

Computer Vision, Satellite Imagery, Object Detection

1 INTRODUCTION

Computer vision techniques have made great strides in the past few years since the introduction of convolutional neural networks [5] in the ImageNet [13] competition. The availability of large, high-quality labelled datasets such as ImageNet [13], PASCAL VOC [2], and MS COCO [6] has helped spur a number of impressive advances in rapid object detection that run in near real-time; three of the best are Faster R-CNN [12], SSD [7], and YOLO [10, 11]. Faster R-CNN typically ingests $1000 \times 600$ pixel images, whereas SSD uses $300 \times 300$ or $512 \times 512$ pixel input images, and YOLO runs on either $416 \times 416$ or $544 \times 544$ pixel inputs. While the performance of all these frameworks is impressive, none can come remotely close to ingesting the $\sim 16{,}000 \times 16{,}000$ input sizes typical of satellite imagery.
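
To make the size mismatch concrete, the following minimal sketch counts how many non-overlapping square windows of each detector's input size would be needed to cover one square satellite image of roughly 16,000 pixels per side. The function name `windows_needed` and the idealized square, non-overlapping tiling are assumptions made for illustration only; they are not the splitting scheme used by the pipeline.

```python
import math


def windows_needed(image_px: int, window_px: int) -> int:
    """Count non-overlapping square windows needed to cover a square image."""
    per_side = math.ceil(image_px / window_px)
    return per_side * per_side


IMAGE_PX = 16_000  # approximate side length quoted in the text

# Square input sizes quoted in the text for SSD and YOLO.
DETECTOR_INPUTS = {
    "SSD 300": 300,
    "YOLO 416": 416,
    "SSD 512": 512,
    "YOLO 544": 544,
}

total_pixels = IMAGE_PX * IMAGE_PX
print(f"image pixels: {total_pixels:,}")
for name, window_px in DETECTOR_INPUTS.items():
    print(f"{name}: {windows_needed(IMAGE_PX, window_px):,} windows")
```

Under these assumptions a 416-pixel window has to be applied 39 × 39 = 1,521 times to cover a single image, and the image itself holds 256 million pixels, consistent with the "over 250 million pixels" figure in the abstract.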

Of these three frameworks, YOLO has demonstrated the greatest inference speed and the highest score on the PASCAL VOC dataset. The authors also showed that this framework is highly transferable to new domains by demonstrating superior performance to other frameworks (i.e., SSD and Faster R-CNN) on the Picasso Dataset [3] and the People-Art Dataset [1]. Due to the speed, accuracy, and flexibility of YOLO, we accordingly leverage this system as the inspiration for our satellite imagery object detection framework.

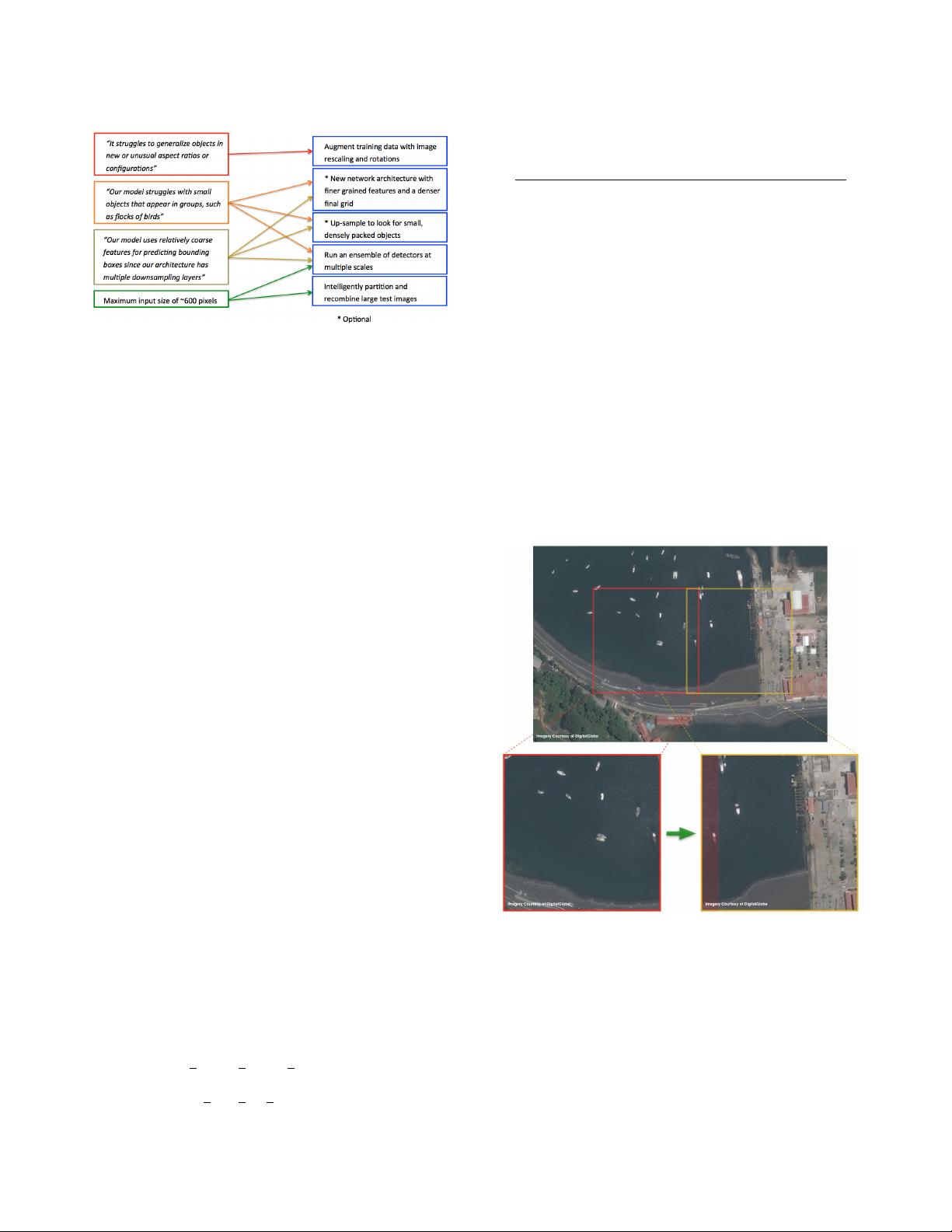

The application of deep learning methods to traditional object detection pipelines is non-trivial for a variety of reasons. The unique aspects of satellite imagery necessitate algorithmic contributions to address challenges related to the spatial extent of foreground target objects, complete rotation invariance, and a large-scale search space. Excluding implementation details, algorithms must adjust for:

Small spatial extent. In satellite imagery, objects of interest are often very small and densely clustered, rather than the large and prominent subjects typical in ImageNet data. In the satellite domain, resolution is typically defined as the ground sample distance (GSD), which describes the physical size of one image pixel. Commercially available imagery varies from 30 cm GSD for the sharpest DigitalGlobe imagery to 3-4 meter GSD for Planet imagery. This means that for small objects such as cars, each object will be only $\sim 15$ pixels in extent even at the highest resolution.

Complete rotation invariance. Objects viewed from overhead can have any orientation (e.g., ships can have any heading between 0 and 360 degrees, whereas trees in ImageNet data are reliably vertical).

Training example frequency. There is a relative dearth of training data (though efforts such as SpaceNet¹ are attempting to ameliorate this issue).

Ultra high resolution. Input images are enormous (often hundreds of megapixels), so simply downsampling to the input size required by most algorithms (a few hundred pixels) is not an option (see Figure 1).
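
The relationship between GSD and object size, and the reason whole-image downsampling fails, can be checked with a few lines of arithmetic. This is an illustrative sketch only: the 4.5 m car length is an assumed typical value (chosen because it matches the roughly 15 pixel figure at 30 cm GSD), and the helper name `extent_in_pixels` is hypothetical.

```python
def extent_in_pixels(object_m: float, gsd_m: float) -> float:
    """Approximate object extent in pixels for a given ground sample distance."""
    return object_m / gsd_m


CAR_LENGTH_M = 4.5   # assumption: typical car length, not stated in the text
IMAGE_PX = 16_000    # approximate image side length quoted in the introduction
NETWORK_PX = 416     # one of the YOLO input sizes quoted in the introduction

car_px_30cm = extent_in_pixels(CAR_LENGTH_M, 0.30)  # sharpest imagery
car_px_3m = extent_in_pixels(CAR_LENGTH_M, 3.0)     # coarse end of the range

# Downsampling the whole image to the network input size shrinks every
# object by the same linear factor.
shrink_factor = IMAGE_PX / NETWORK_PX
car_px_downsampled = car_px_30cm / shrink_factor

print(f"car at 30 cm GSD: {car_px_30cm:.1f} px")
print(f"car at 3 m GSD:   {car_px_3m:.1f} px")
print(f"shrink factor:    {shrink_factor:.1f}x")
print(f"car after downsampling the full image: {car_px_downsampled:.2f} px")
```

With these assumed numbers, a car that spans about 15 pixels at native resolution would occupy less than one pixel after the full image is downsampled to a 416-pixel input, which is why evaluation at native resolution is needed.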

The contribution in this work specifically addresses each of these issues separately, while leveraging the relatively constant distance from sensor to object, which is well known and is typically $\sim 400$ km. This, coupled with the nadir-facing sensor, results in a consistent pixel size of objects.

Section 2 details in further depth the challenges faced by standard algorithms when applied to satellite imagery. The remainder of this work is broken up to describe the proposed contributions as follows. To address small, dense clusters, Section 3.1 describes a new, finer-grained network architecture. Sections 3.2 and 3.3 detail our method for splitting, evaluating, and recombining large test images of arbitrary size at native resolution. With regard to rotation invariance and small labelled training dataset sizes, Section 4 describes data augmentation and size requirements. Finally, the performance of the algorithm is discussed in detail in Section 6.
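
As a rough illustration of what splitting a large image into windows and recombining results involves, the sketch below generates overlapping window coordinates and maps a detection box from window coordinates back to full-image coordinates. It is a generic sliding-window sketch, not the method of Sections 3.2 and 3.3: the 15% default overlap and the names `window_origins`, `split`, and `to_global` are assumptions made for illustration, and a full recombination step would also need to merge duplicate detections in the overlap regions (for example with non-maximum suppression), which is omitted here.

```python
from typing import List, Tuple

Box = Tuple[int, int, int, int]  # (x_min, y_min, x_max, y_max)


def window_origins(length: int, window: int, overlap: float) -> List[int]:
    """Start offsets along one axis so that windows cover [0, length)."""
    if length <= window:
        return [0]
    stride = max(1, int(window * (1 - overlap)))
    origins = list(range(0, length - window, stride))
    origins.append(length - window)  # last window sits flush with the edge
    return origins


def split(width: int, height: int, window: int = 416,
          overlap: float = 0.15) -> List[Box]:
    """Window boxes in full-image coordinates, row by row."""
    return [
        (x, y, min(x + window, width), min(y + window, height))
        for y in window_origins(height, window, overlap)
        for x in window_origins(width, window, overlap)
    ]


def to_global(box: Box, window_box: Box) -> Box:
    """Shift a detection from window coordinates to full-image coordinates."""
    x_off, y_off = window_box[0], window_box[1]
    return (box[0] + x_off, box[1] + y_off, box[2] + x_off, box[3] + y_off)


if __name__ == "__main__":
    tiles = split(1000, 800, window=400, overlap=0.5)
    print(len(tiles), "windows; first:", tiles[0], "last:", tiles[-1])
    print(to_global((10, 20, 30, 40), tiles[-1]))
```

Placing the final window flush with the image edge, rather than padding, keeps every window the same size whenever the image is at least one window wide, at the cost of a larger overlap for the last row and column.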

¹ https://aws.amazon.com/public-datasets/spacenet/

arXiv:1805.09512v1 [cs.CV] 24 May 2018