Efficient Deep Learning: A Survey on Making Deep Learning Models Smaller, Faster, and Better

GAURAV MENGHANI, Google Research, USA

Deep Learning has revolutionized the elds of computer vision, natural language understanding, speech recog-

nition, information retrieval and more. However, with the progressive improvements in deep learning models,

their number of parameters, latency, resources required to train, etc. have all have increased signicantly.

Consequently, it has become important to pay attention to these footprint metrics of a model as well, not just

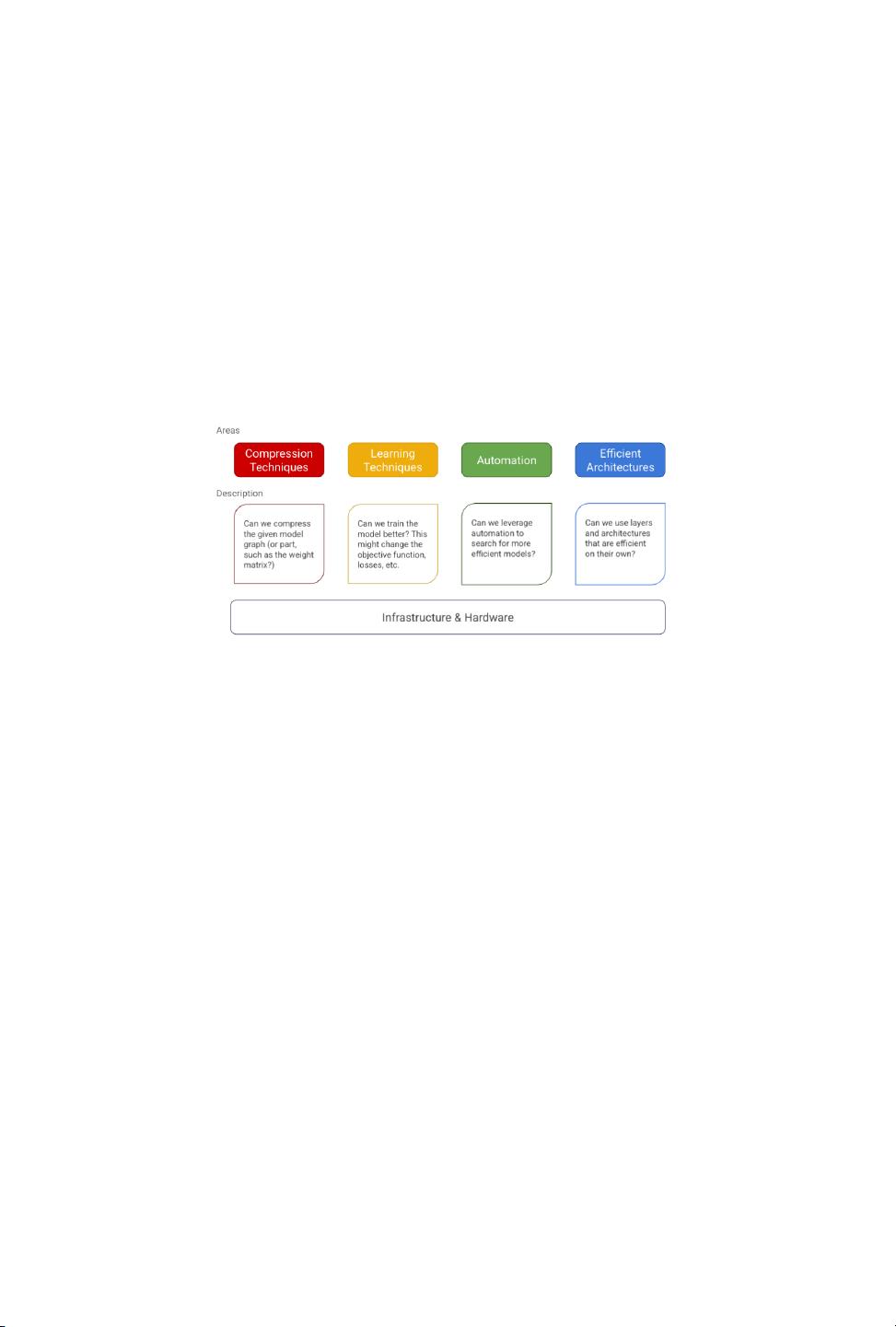

its quality. We present and motivate the problem of eciency in deep learning, followed by a thorough survey

of the ve core areas of model eciency (spanning modeling techniques, infrastructure, and hardware) and the

seminal work there. We also present an experiment-based guide along with code, for practitioners to optimize

their model training and deployment. We believe this is the rst comprehensive survey in the ecient deep

learning space that covers the landscape of model eciency from modeling techniques to hardware support.

Our hope is that this survey would provide the reader with the mental model and the necessary understanding

of the eld to apply generic eciency techniques to immediately get signicant improvements, and also equip

them with ideas for further research and experimentation to achieve additional gains.

ACM Reference Format:

Gaurav Menghani. 2021. Efficient Deep Learning: A Survey on Making Deep Learning Models Smaller, Faster, and Better. 1, 1 (June 2021), 43 pages. https://doi.org/10.1145/nnnnnnn.nnnnnnn

1 INTRODUCTION

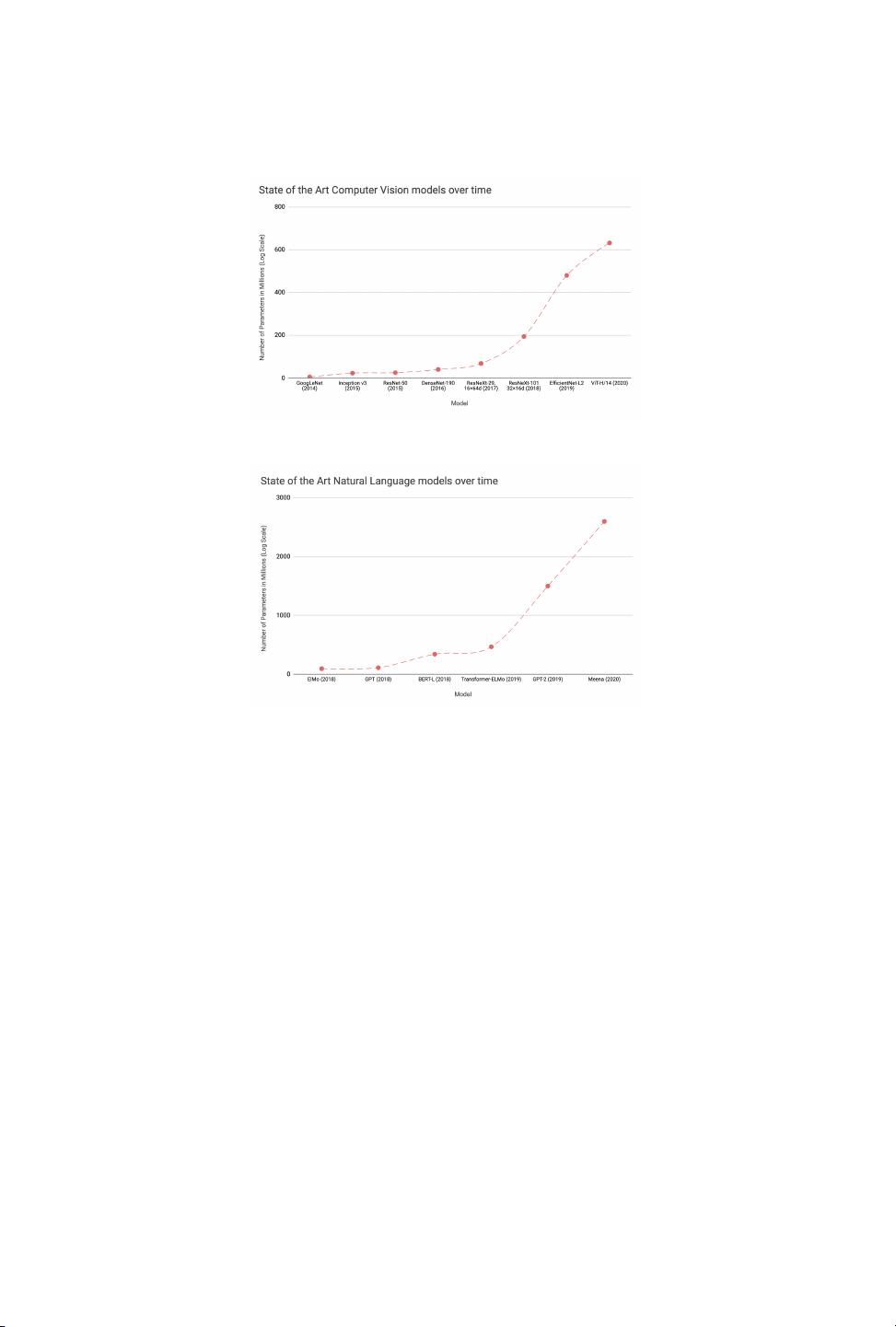

Deep Learning with neural networks has been the dominant methodology of training new machine learning models for the past decade. Its rise to prominence is often attributed to the ImageNet competition [45] in 2012. That year, a University of Toronto team submitted a deep convolutional network (AlexNet [92], named after the lead developer Alex Krizhevsky) that performed 41% better than the next best submission. As a result of this trailblazing work, there was a race to create deeper networks with an ever-increasing number of parameters and complexity. Several model architectures such as VGGNet [141], Inception [146], ResNet [73], etc. successively beat previous records at ImageNet competitions in the subsequent years, while also increasing in their footprint (model size, latency, etc.).

This effect has also been noted in natural language understanding (NLU), where the Transformer [154] architecture, based primarily on attention layers, spurred the development of general-purpose language encoders like BERT [47], GPT-3 [26], etc. BERT specifically beat the prior state of the art on 11 NLU benchmarks when it was published. GPT-3 has also been used in several places in the industry via its API. The common aspect amongst these domains is the rapid growth in the model footprint (refer to Figure 1), and the cost associated with training and deploying them.

Since deep learning research has been focused on improving the state of the art, progressive improvements on benchmarks like image classification, text classification, etc. have been correlated with an increase in the network complexity, number of parameters, the amount of training resources

Author’s address: Gaurav Menghani, gmenghani@google.com, Google Research, Mountain View, California, USA, 95054.

Permission to make digital or hard copies of all or part of this work for personal or classroom use is granted without fee provided that copies are not made or distributed for profit or commercial advantage and that copies bear this notice and the full citation on the first page. Copyrights for components of this work owned by others than ACM must be honored. Abstracting with credit is permitted. To copy otherwise, or republish, to post on servers or to redistribute to lists, requires prior specific permission and/or a fee. Request permissions from permissions@acm.org.

© 2021 Association for Computing Machinery.

XXXX-XXXX/2021/6-ART $15.00

https://doi.org/10.1145/nnnnnnn.nnnnnnn

, Vol. 1, No. 1, Article . Publication date: June 2021.

arXiv:2106.08962v1 [cs.LG] 16 Jun 2021
