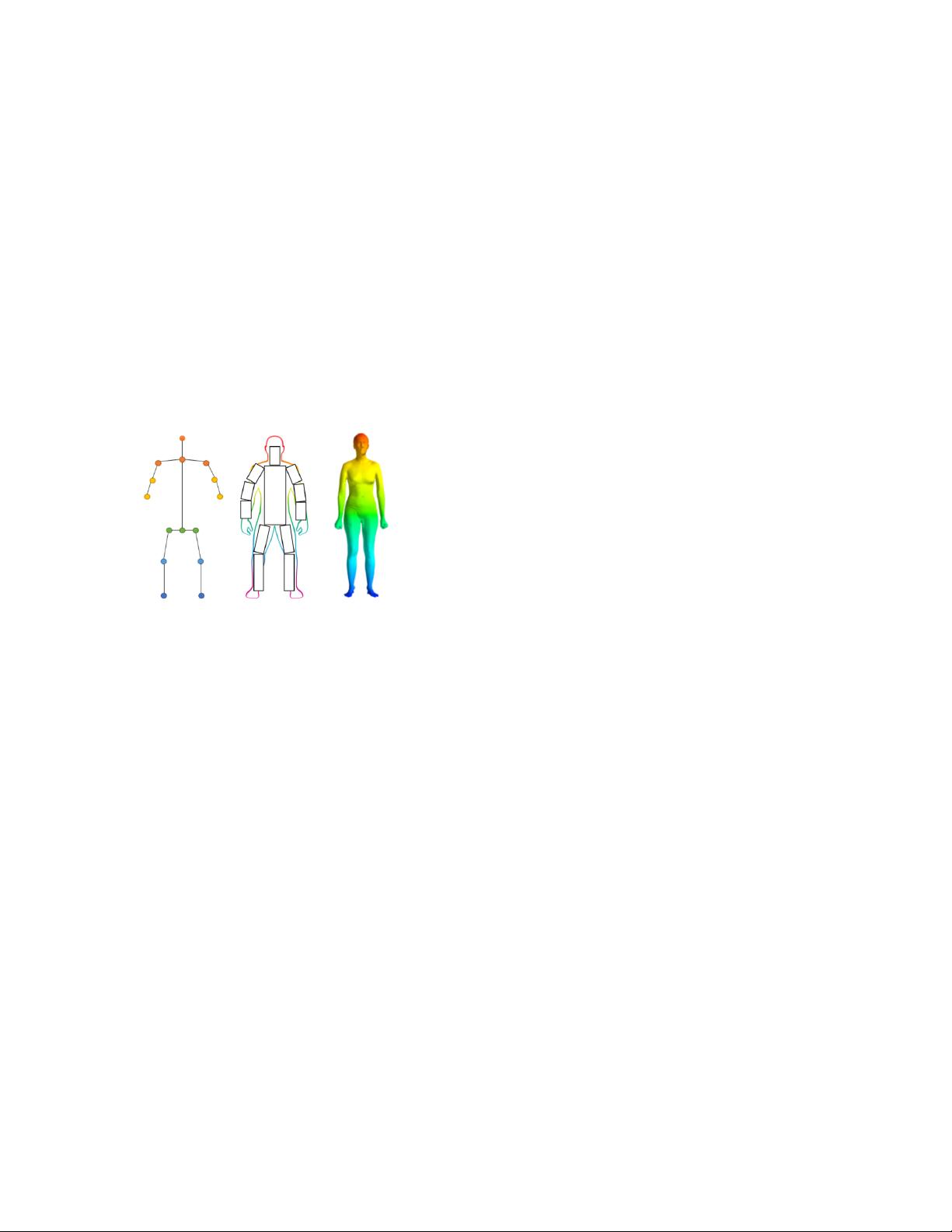

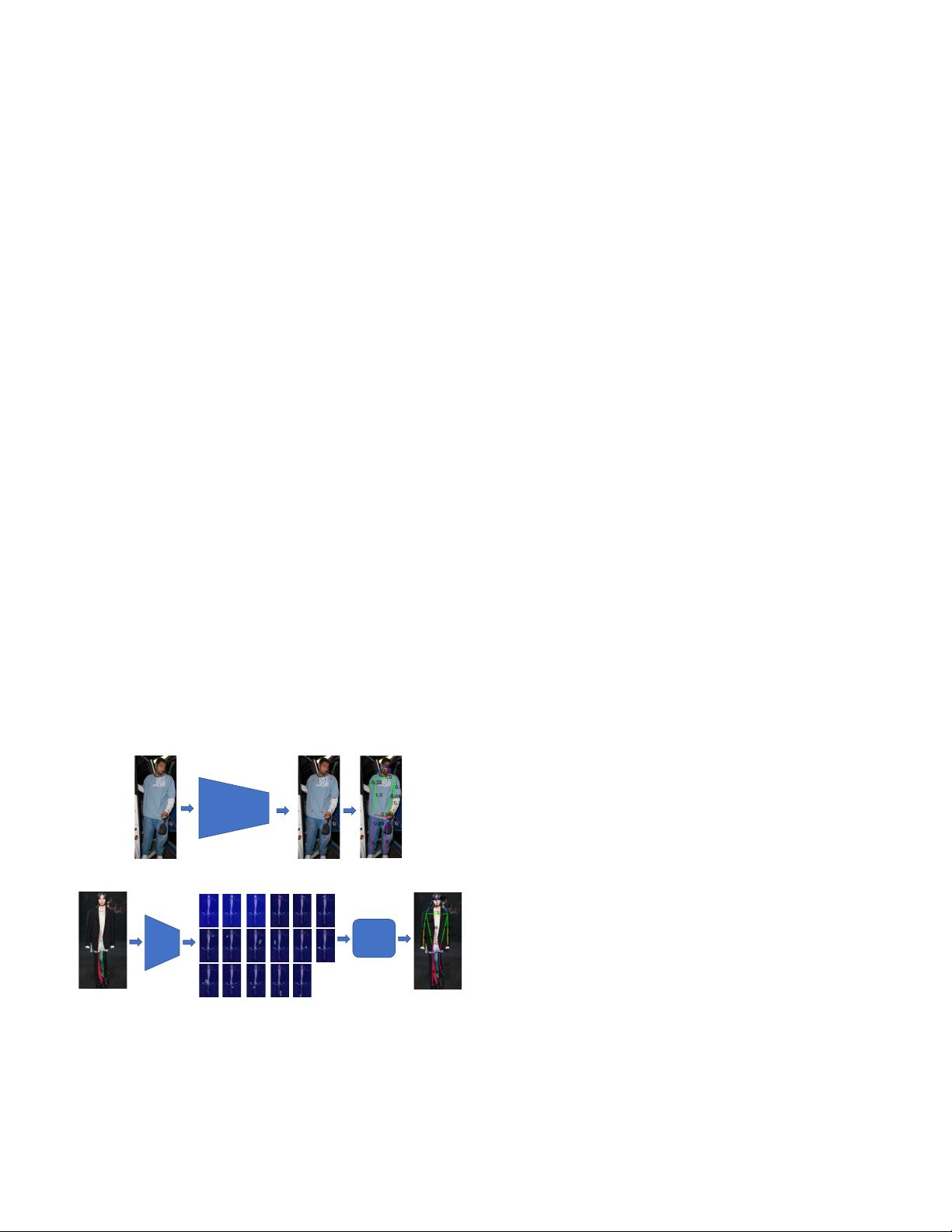

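Human pose estimation (HPE) is a classic problem in computer vision. It has moved from traditional methods to deep learning methods and has attracted a great deal of attention over the past decade. The goal of HPE is to locate human body parts in input data such as images and videos and to build a representation of the body, such as a skeleton. The technique is used in many areas, including human-computer interaction, motion analysis, augmented reality (AR), and virtual reality (VR).

With the rapid progress of deep learning, deep-learning-based HPE solutions have reached very high performance. Challenges remain, however, including insufficient training data, depth ambiguity, and occlusion. The recently published survey summarized here aims to give a comprehensive overview by systematically analyzing and comparing deep-learning-based 2D and 3D HPE solutions, organized by input data and inference procedure. It covers more than 240 research papers published since 2014, along with 2D and 3D HPE datasets and evaluation metrics.

To better understand how deep learning is applied to human pose estimation, the key points can be grouped as follows:

1. Fundamentals of human pose estimation. HPE analyzes visual data (such as photos or video frames) to identify and locate the parts of the human body, including the limbs, head, and torso. The positions of these parts are used to build a model called the human skeleton, a simplified representation that captures the body's pose and motion.
2. 2D versus 3D pose estimation. HPE comes in two-dimensional (2D) and three-dimensional (3D) forms. 2D pose estimation locates body parts in the image plane of a picture or video frame, while 3D pose estimation goes further and recovers their positions in three-dimensional space. 3D estimation has to account for the depth of each body part and usually involves harder mathematical and computational problems than 2D estimation.
3. The role of deep learning. Deep learning techniques, especially convolutional neural networks (CNNs) and recurrent neural networks (RNNs), have become central to effective HPE. These methods learn feature representations automatically from large amounts of annotated data, and the learned features are finer-grained and more robust than the hand-crafted features used by traditional methods.
4. The importance of training data. Powerful as they are, deep learning methods depend on large, high-quality training datasets. In pose estimation, high-quality annotated datasets are essential for training effective models, and a lack of such data limits how far model performance can improve.
5. Depth ambiguity and occlusion. A major challenge in HPE is depth ambiguity: the same 2D pose in an image can correspond to several different configurations in 3D space. Occlusion also matters: when part of the body is hidden by another body part or by an external object, estimation accuracy suffers.
6. Application areas. HPE is widely used in human-computer interaction, motion analysis, augmented reality, virtual reality, and other areas. In human-computer interaction, for example, pose estimation can be used to understand and predict a user's body movements so that a computer system can respond to the user's intent.
7. Datasets and evaluation metrics. The paper describes commonly used datasets and metrics for evaluating HPE methods. These give researchers a common standard for measuring and comparing methods, which helps drive progress in the field.
8. Future research directions and challenges. The survey summarizes current results and discusses possible future research directions. It also points out challenges that deep-learning-based HPE still has to overcome, such as generalization, real-time performance, and adaptability to different scenes and populations.

Driven by deep learning, human pose estimation has grown into an active research field with far-reaching impact on many applications. As the technology advances and new challenges emerge, researchers can be expected to make further innovative breakthroughs in this area.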
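The depth ambiguity described in item 5 can be illustrated with a small sketch. It is not taken from the survey: it assumes a simple pinhole camera, and the focal length, principal point, and joint coordinates are hypothetical values chosen for illustration. It shows that two different 3D joint positions lying on the same camera ray project to the same 2D pixel, so a single image cannot tell them apart.

```python
import math


def project(point, focal_length=1000.0, cx=320.0, cy=240.0):
    """Pinhole projection of a camera-space 3D point (x, y, z) to a pixel (u, v).

    focal_length, cx, and cy are assumed intrinsics, not values from the survey.
    """
    x, y, z = point
    if z <= 0:
        raise ValueError("point must be in front of the camera (z > 0)")
    return (focal_length * x / z + cx, focal_length * y / z + cy)


def scale_along_ray(point, factor):
    """Move a point along the ray from the camera center through that point."""
    return tuple(factor * c for c in point)


# Hypothetical wrist position in metres, in camera coordinates.
wrist_near = (0.2, -0.1, 2.0)
# A different 3D position, 1.5 times farther away on the same ray.
wrist_far = scale_along_ray(wrist_near, 1.5)

u_near, v_near = project(wrist_near)
u_far, v_far = project(wrist_far)

# Both candidates land on the same pixel (up to floating-point rounding).
same_pixel = math.isclose(u_near, u_far) and math.isclose(v_near, v_far)
print("near:", (u_near, v_near))
print("far: ", (u_far, v_far))
print("same 2D location:", same_pixel)
```

Because every joint has this one-dimensional family of possible depths, a 3D method needs extra cues such as limb-length priors, multiple views, or temporal context to choose among them.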


- 行走的瓶子Yolo (2023-07-29): This survey examines recent deep-learning-based human pose estimation in detail and offers a comprehensive research perspective.
- 小小二-yan (2023-07-29): The authors systematically introduce the annotated datasets and evaluation metrics. This is important background that helps readers understand the field in more depth.
- lowsapkj (2023-07-29): The paper discusses the strengths and weaknesses of existing methods in detail, which helps readers make informed choices in practice.
- WaiyuetFung (2023-07-29): The paper comprehensively summarizes how different deep learning methods are applied to human pose estimation and is very beginner-friendly.
- 丽龙 (2023-07-29): The authors give fair and insightful comments on the field's development trends, offering inspiration for future research.
