
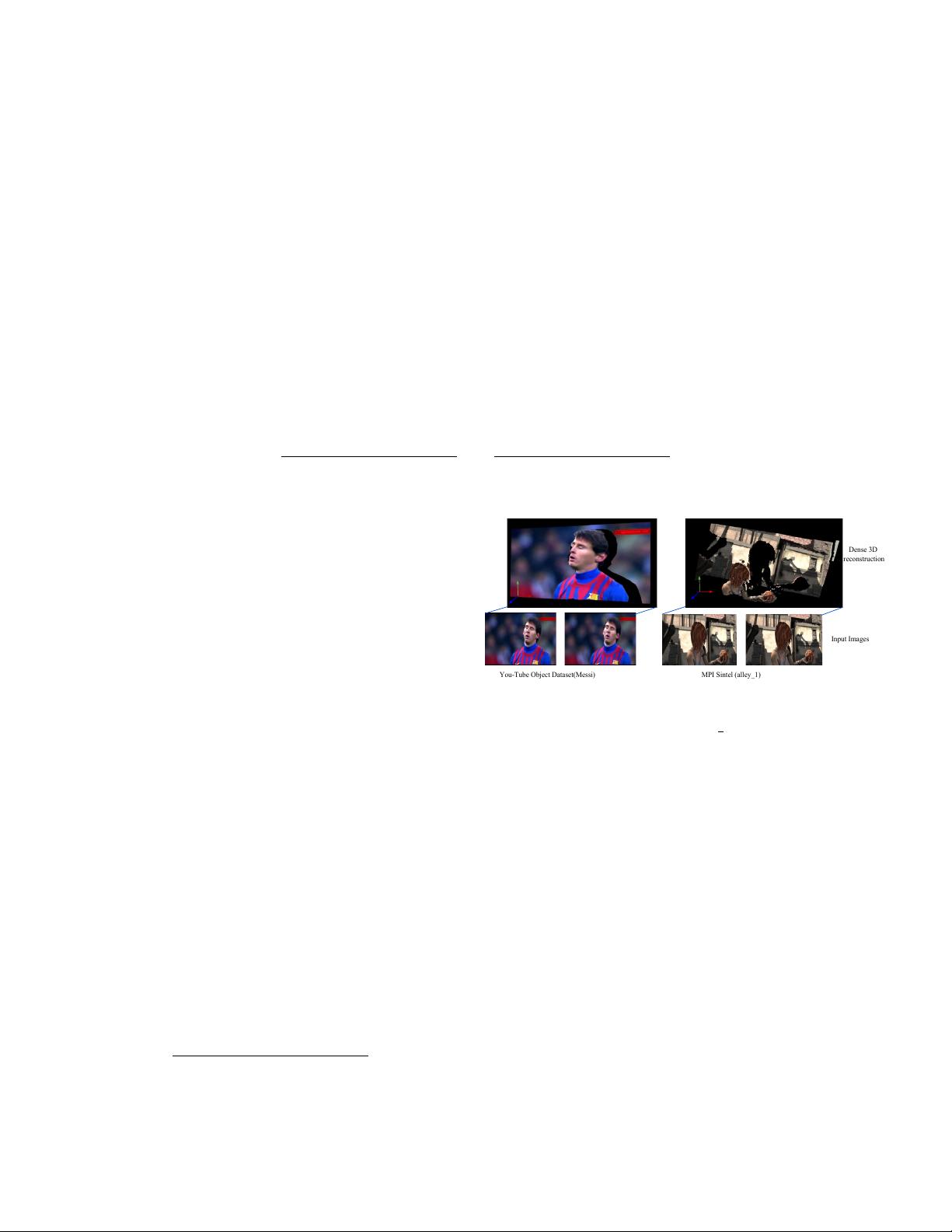

Recently, Ranftl et al. [1] proposed a three-step approach to obtain a dense 3D reconstruction of a general dynamic scene from two consecutive perspective frames. Concretely, it performs object-level motion segmentation, followed by per-object 3D reconstruction, and finally resolves the scale ambiguity. In a general dynamic setting, however, densely segmenting rigidly moving objects or parts is not trivial, and inferring motion models for deforming shapes becomes even more challenging. Furthermore, object-level segmentation builds upon the assumption of multiple rigid motions and therefore fails to describe more general scenarios, such as “when the objects themselves are deforming”. Consequently, 3D reconstruction algorithms that depend on object-level motion segmentation suffer in such scenes.

Motivated by these limitations, we propose a unified approach that neither performs any object-level motion segmentation nor assumes any prior knowledge about the type of scene rigidity, yet is still able to recover a scale-consistent dense reconstruction of a complex dynamic scene. Our formulation naturally encapsulates a solution to the inherent scale ambiguity of perspective structure from motion, which is a very challenging problem in general. We show that by using two prior assumptions, one about the 3D scene and one about the deformation, we can effectively pin down the unknown relative scales and obtain a globally consistent dense 3D reconstruction of a dynamic scene from its two perspective views. The two basic assumptions we use about the dynamic scene are:

1) The dynamic scene can be approximated by a collection of piecewise planar surfaces, each having its own rigid motion.

2) The deformation of the scene between two frames is locally rigid but globally as-rigid-as-possible.
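Assumption 1 has a convenient two-view consequence that is standard in multi-view geometry: a planar patch moving rigidly induces a homography between the two images. The sketch below assumes a plane written as n^T X = d in first-camera coordinates, a rigid motion X′ = R X + t, and shared intrinsics K; all numeric values are hypothetical, and this is an illustration rather than the authors' implementation. It also shows why each patch's scale is ambiguous: scaling t and d by the same factor leaves the homography, and hence the observed flow, unchanged.

```python
import numpy as np

def plane_homography(K, R, t, n, d):
    """Homography induced by the plane n^T X = d (first-camera coordinates)
    whose points move rigidly as X' = R X + t."""
    H = K @ (R + np.outer(t, n) / d) @ np.linalg.inv(K)
    return H / H[2, 2]  # a homography is only defined up to scale

def rot_y(theta):
    """Rotation about the camera's y-axis by theta radians."""
    c, s = np.cos(theta), np.sin(theta)
    return np.array([[c, 0.0, s], [0.0, 1.0, 0.0], [-s, 0.0, c]])

# Hypothetical intrinsics, motion and plane for a single superpixel.
K = np.array([[700.0, 0.0, 320.0], [0.0, 700.0, 240.0], [0.0, 0.0, 1.0]])
R = rot_y(0.05)
t = np.array([0.2, 0.0, 0.1])
n = np.array([0.0, 0.0, 1.0])  # fronto-parallel plane Z = d
d = 5.0

H1 = plane_homography(K, R, t, n, d)

# Sanity check: H maps the projection of a plane point in frame 1
# to the projection of the rigidly moved point in frame 2.
X = np.array([0.5, -0.3, d])  # lies on the plane Z = d
x1 = K @ X
x2 = K @ (R @ X + t)
x2_pred = H1 @ x1
print(np.allclose(x2_pred / x2_pred[2], x2 / x2[2]))  # True

# Scale ambiguity: scaling depth and translation together by any s > 0
# yields the same homography, so two views alone cannot fix s per patch.
s = 3.7
H2 = plane_homography(K, R, s * t, n, s * d)
print(np.allclose(H1, H2))  # True
```

Because only the ratio t/d enters the homography, each superpixel reconstructed in isolation is known only up to its own scale factor.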

• Piece-wise planar model: Our method models a dynamic scene as a collection of piece-wise planar regions. Given two perspective images I (reference image) and I′ (next image) of a general dynamic scene, our method first over-segments the reference image into superpixels. This collection of superpixels is assumed to approximate the dynamic scene in the projective space. It can be argued that modeling a dynamic scene per pixel would be more compelling; however, modeling the scene with planar regions makes the problem computationally tractable for optimization or inference [25], [26].

• Locally-rigid and globally as-rigid-as-possible: We implicitly assume that each local plane undergoes a rigid motion. Supposing that every individual superpixel corresponds to a small planar patch moving rigidly in 3D space, and that dense optical flow between the frames is given, we can estimate the patch's location in 3D using a rigid reconstruction pipeline [8], [27]. However, since the relative scales of these patches are not determined by the per-patch reconstructions, the patches float in 3D space as an unorganized superpixel soup. Under the assumption that the change between the frames is regular or smooth rather than arbitrary, the scene can be assumed to change as rigidly as possible globally. Using this intuition, our method finds for each superpixel an appropriate scale under which the entire set of superpixels can be assembled (glued) together coherently, forming a piece-wise smooth surface, as if playing the game of “3D jigsaw puzzle”. Hence, we call our method the “SuperPixel Soup” algorithm (see Fig. 2 for a conceptual visualization).

Fig. 2: Reconstructing a 3D surface from a soup of un-scaled superpixels via solving a 3D Superpixel Jigsaw puzzle problem.
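A much-simplified, continuous stand-in for the gluing step can make the jigsaw intuition concrete. Assuming each pair of neighboring superpixels provides unscaled depths for points on their shared boundary, one can ask for per-superpixel scales s_i with s_i z_i ≈ s_j z_j; in log-space this becomes a linear least-squares problem. The helper `glue_scales` and its input format are hypothetical: it ignores the as-rigid-as-possible motion term and the discrete optimization used by the method, and it pins one superpixel's scale to 1 because a single global scale remains unobservable from two views.

```python
import numpy as np

def glue_scales(num_patches, boundary_obs, anchor=0):
    """Hypothetical helper: estimate one positive scale per superpixel so
    that neighboring patches agree on the depth of shared boundary points.

    boundary_obs: iterable of (i, j, z_i, z_j), where z_i and z_j are the
    unscaled depths that patches i and j assign to the same boundary point.
    Returns scales with scales[anchor] == 1, since the global scale is
    unobservable. Assumes the patch adjacency graph is connected.
    """
    rows, rhs = [], []
    for i, j, z_i, z_j in boundary_obs:
        # s_i * z_i = s_j * z_j  <=>  log s_i - log s_j = log z_j - log z_i
        row = np.zeros(num_patches)
        row[i], row[j] = 1.0, -1.0
        rows.append(row)
        rhs.append(np.log(z_j) - np.log(z_i))
    # Gauge constraint: fix the anchor patch's log-scale to 0.
    gauge = np.zeros(num_patches)
    gauge[anchor] = 1.0
    rows.append(gauge)
    rhs.append(0.0)
    log_s, *_ = np.linalg.lstsq(np.array(rows), np.array(rhs), rcond=None)
    return np.exp(log_s)

# Synthetic example: three patches reconstructed with unknown scales.
true_s = np.array([1.0, 2.0, 0.5])
obs = []
for i, j, depth in [(0, 1, 4.0), (1, 2, 6.0), (0, 2, 5.0), (0, 1, 4.5)]:
    # Each patch reports the boundary depth divided by its own scale.
    obs.append((i, j, depth / true_s[i], depth / true_s[j]))

scales = glue_scales(3, obs)
print(scales)  # matches true_s up to floating-point error
```

The log parameterization keeps every scale positive and turns the agreement constraint into a linear one; with noisy depths, the least-squares solution averages the disagreements along each shared boundary.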

In this paper, we show that the aforementioned assumptions can faithfully model most real-world dynamic scenarios. Furthermore, we encapsulate these assumptions in a simple optimization problem, which is solved using a combination of continuous and discrete optimization algorithms [28], [29], [30]. We demonstrate the benefit of our approach on benchmark datasets such as KITTI [31], MPI Sintel [24], and Virtual KITTI [32]. The statistical comparison shows that our algorithm outperforms many state-of-the-art methods by a significant margin.

2 RELATED WORKS

The solution to SfM has undergone prodigious development since its inception [2]. Even after such remarkable development, the choice of algorithm still depends on the complexity of the object motion and the environment. In this work, we use the idea of (local) rigidity to solve dense reconstruction of a general dynamic scene. The concept of rigidity is not new to the structure-from-motion problem [2], [33] and has been effectively applied as a global constraint to solve large-scale reconstruction problems [18]. The idea of global rigidity has also been exploited to solve structure and motion over multiple frames via a factorization approach [10].

The literature on structure from motion and its treatment of different scenarios is very extensive. Consequently, for brevity, we only discuss previous works of direct relevance to dynamic 3D reconstruction from monocular images. The linear low-rank model has been used for dense non-rigid reconstruction. Kumar et al. [34], [35] and Garg et al. [36] solved the task with an orthographic camera model, assuming that feature matches across multiple frames are given as input. Fayad et al. [37] recovered deformable surfaces with a quadratic approximation, again from multiple frames. Taylor et al. [38] proposed a piecewise rigid solution using locally-rigid SfM to reconstruct a soup of rigid triangles.
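The linear low-rank model mentioned above can be illustrated numerically. In a common formulation, the shape at frame f is a linear combination of K basis shapes, so under an orthographic camera the 2F×P matrix of centered point tracks factors into a 2F×3K motion matrix times a 3K×P basis matrix and has rank at most 3K. The sizes and random data below are hypothetical, translation is omitted by assuming centered tracks, and the code only checks this rank property; it is not a reimplementation of any of the cited methods.

```python
import numpy as np

rng = np.random.default_rng(0)
F, P, num_bases = 10, 50, 2  # frames, points, shape bases (hypothetical)

bases = rng.standard_normal((num_bases, 3, P))  # each basis shape is 3 x P
blocks = []
for _ in range(F):
    coeffs = rng.standard_normal(num_bases)
    shape = np.tensordot(coeffs, bases, axes=1)  # 3 x P shape at this frame
    # QR of a Gaussian matrix gives an orthonormal 3 x 3 matrix; its first
    # two rows serve as an orthographic camera for this frame.
    Q, _ = np.linalg.qr(rng.standard_normal((3, 3)))
    blocks.append(Q[:2] @ shape)  # 2 x P projected (centered) tracks
W = np.vstack(blocks)  # 2F x P measurement matrix

print(W.shape, np.linalg.matrix_rank(W))  # (20, 50) 6, i.e. 3 * num_bases
```

Setting `num_bases = 1` recovers the rigid case, where the centered measurement matrix has rank at most 3.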

Although the method of Taylor et al. [38] is conceptually similar to ours, there are major differences:

1) We achieve two-view dense reconstruction, while [38] relies on multiple views (N ≥ 4).

2) We use a perspective camera model, while they rely on an orthographic camera model.

3) We solve the scale-indeterminacy issue, which is an inherent ambiguity of 3D reconstruction under perspective projection; the method of Taylor et al. [38] does not suffer from this ambiguity, but at the cost of being restricted to the orthographic camera model.

Recently, Russell et al. [39] and Ranftl et al. [1] used object-level segmentation for dense dynamic 3D reconstruction. In
