IEEE TRANSACTIONS ON ROBOTICS, VOL. 28, NO. 1, FEBRUARY 2012 61

Visual-Inertial-Aided Navigation for High-Dynamic

Motion in Built Environments Without

Initial Conditions

Todd Lupton and Salah Sukkarieh

Abstract—In this paper, we present a novel method to fuse ob-

servations from an inertial measurement unit (IMU) and visual

sensors, such that initial conditions of the inertial integration,

including gravity estimation, can be recovered quickly and in a

linear manner, thus removing any need for special initialization

procedures. The algorithm is implemented using a graphical simul-

taneous localization and mapping like approach that guarantees

constant time output. This paper discusses the technical aspects

of the work, including observability and the ability for the system

to estimate scale in real time. Results are presented of the system,

estimating the platforms position, velocity, and attitude, as well as

gravity vector and sensor alignment and calibration on-line in a

built environment. This paper discusses the system setup, describ-

ing the real-time integration of the IMU data with either stereo or

monocular vision data. We focus on human motion for the pur-

poses of emulating high-dynamic motion, as well as to provide a

localization system for future human–robot interaction.

Index Terms—Field robots, localization, search-and-rescue

robots, sensor fusion.

I. INTRODUCTION

T

HE motivation of this paper was inspired by the need to

develop a human-mounted localization system for first re-

sponse units, such as fire fighters, counter-terrorism groups, and

search-and-rescue operators. The system had to keep track of

the location of the operator in an unknown building in real

time. The system could not use any purpose built localiza-

tion infrastructure and had to provide information that could

be shared with the future incorporation of robotic systems in the

mission.

A human-mounted localization system for first responders

operating in built environments poses a number of difficulties.

There is the lack of (or poor) global positioning system (GPS)

signals, unreliable magnetic readings, high-dynamic motion,

Manuscript received September 7, 2010; revised April 28, 2011; accepted

September 21, 2011. Date of publication November 29, 2011; date of current

version February 9, 2012. This paper was recommended for publication by

Associate Editor J. Neira and Editor D. Fox upon evaluation of the reviewers’

comments. This work was supported in part by the Australian Research Council

(ARC) Centre of Excellence programme, funded by the ARC and the New South

Wales State Government, and by ARC Discovery under Grant DP0665439.

T. Lupton was with The University of Sydney, Sydney, N.S.W. 2006,

Australia. He is now with Silverbrook Research, Balmain, N.S.W. 2041,

Australia (e-mail: tlup8791@uni.sydney.edu.au).

S. Sukkarieh is with The University of Sydney, Sydney, N.S.W. 2006,

Australia (e-mail: salah@acfr.usyd.edu.au).

Color versions of one or more of the figures in this paper are available online

at http://ieeexplore.ieee.org.

Digital Object Identifier 10.1109/TRO.2011.2170332

and the lack of any internal localization infrastructure. A local-

ization system would, thus, need to use whatever features were

available in the environment, but the closeness of objects in the

buildings, as well as the presence of walls, means that landmark

features may only be observable for short periods of time. The

system must also be able to quickly initialize and handle periods

when few or no external landmarks are observed.

Cameras can work with landmarks of opportunity that al-

ready exist in the environment; therefore, no external infras-

tructure is required. Indoor Simultaneous Localization and Map-

ping (SLAM) using a single camera has been developed in the

past [4]; however, this implementation had to operate at a high

frame rate, i.e., up to 200 Hz [9], and even then, only slow dy-

namics and certain restricted motions could be used. In addition,

as a single camera only provides bearing observations, special-

ized landmark initialization techniques are required as range is

not immediately observable, and the scale of the environment

and, therefore, the motions cannot be determined.

The use of a stereo camera pair for SLAM [17], [18] or visual

odometry [11] gives very promising results for this kind of

application. In addition to providing more constrained motion

estimates, the true map scale can be observed and scale drift over

the trajectory is eliminated. The close proximity to landmarks

and visually rich environments contribute greatly to its success.

The main shortcoming of a system that relies solely on visual

observations is that it will fail when sufficient visual landmarks

are not observable, even if for just a short period of time. This

could easily be the case for human-mounted systems that are

considered in this paper. For example, loss of distinct visual

features can occur in dark areas of buildings, due to motion

blur, if smoke is present, or if the cameras are very close to

blank walls such as in narrow corridors or staircases.

The use of an inertial measurement unit (IMU) could help

in these momentary periods where visual observations may not

be available [19]. Even a low-cost IMU can observe the high

dynamics of such a system and can constrain the estimated lo-

cation for short periods once properly initialized. The difficulty

in using an IMU in these applications is one of obtaining proper

initialization.

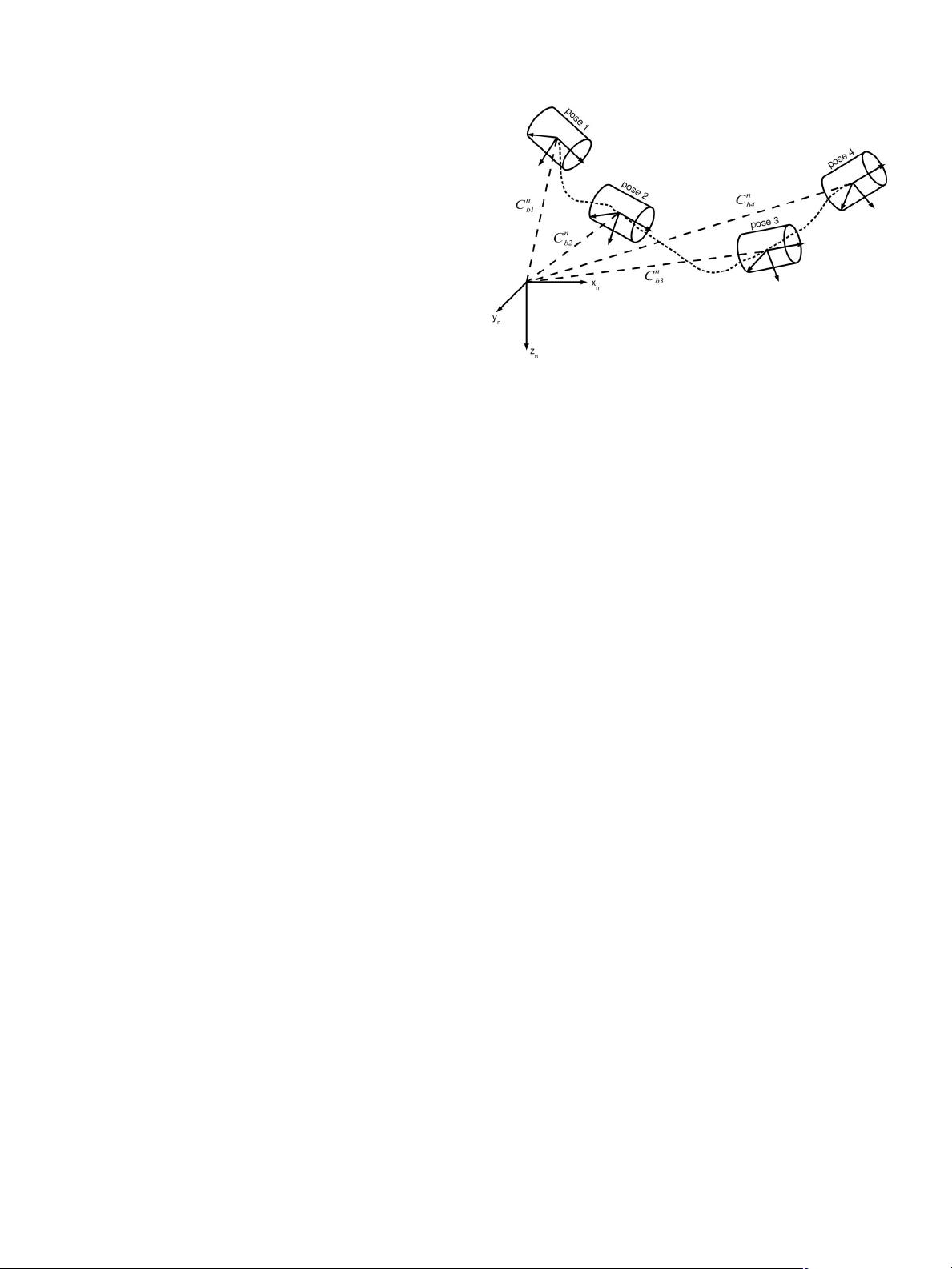

In this paper, the development and analysis of a novel system

to process and fuse inertial observations with observations from

other body frame sensors, such as cameras, is presented. This

system is inspired by the idea that inertial observations can

be integrated in an arbitrary frame between poses to form a

single pseudo-observation, as presented in [16]. This core idea is

expanded in this paper with integration into a graphical filter that

1552-3098/$26.00 © 2011 IEEE

我的内容管理

展开

我的内容管理

展开

我的资源

快来上传第一个资源

我的资源

快来上传第一个资源

我的收益 登录查看自己的收益

我的收益 登录查看自己的收益 我的积分

登录查看自己的积分

我的积分

登录查看自己的积分

我的C币

登录后查看C币余额

我的C币

登录后查看C币余额

我的收藏

我的收藏  我的下载

我的下载  下载帮助

下载帮助

前往需求广场,查看用户热搜

前往需求广场,查看用户热搜

信息提交成功

信息提交成功