
Benchmarking Foundation Models with Language-Model-as-an-Examiner

Yushi Bai¹∗, Jiahao Ying²∗, Yixin Cao², Xin Lv¹, Yuze He¹, Xiaozhi Wang¹, Jifan Yu¹, Kaisheng Zeng¹, Yijia Xiao³, Haozhe Lyu⁴, Jiayin Zhang¹, Juanzi Li¹, Lei Hou¹✉

¹ Tsinghua University, Beijing, China
² Singapore Management University, Singapore
³ University of California, Los Angeles, CA, USA
⁴ Beijing University of Posts and Telecommunications, Beijing, China

Abstract

Numerous benchmarks have been established to assess the performance of foundation models on open-ended question answering, which serves as a comprehensive test of a model’s ability to understand and generate language in a manner similar to humans. Most of these works focus on proposing new datasets; however, we see two main issues within previous benchmarking pipelines, namely testing leakage and evaluation automation. In this paper, we propose a novel benchmarking framework, Language-Model-as-an-Examiner, where the LM serves as a knowledgeable examiner that formulates questions based on its knowledge and evaluates responses in a reference-free manner. Our framework allows for effortless extensibility, as various LMs can be adopted as the examiner and the questions can be constantly updated given more diverse trigger topics. For a more comprehensive and equitable evaluation, we devise three strategies: (1) We instruct the LM examiner to generate questions across a multitude of domains to probe for broad knowledge acquisition, and to raise follow-up questions for a more in-depth assessment. (2) Upon evaluation, the examiner combines both scoring and ranking measurements, providing reliable results that align closely with human annotations. (3) We additionally propose a decentralized Peer-examination method to address the biases of a single examiner. Our data and benchmarking results are available at: http://lmexam.xlore.cn.

1 Introduction

Recently, many large foundation models [1], such as ChatGPT [2], LLaMA [3], and PaLM [4], have emerged with impressive general intelligence and have assisted billions of users worldwide. They can generate human-like responses to a wide variety of user questions. However, their answers are not always trustworthy, e.g., due to hallucination [5]. To understand the strengths and weaknesses of foundation models, various benchmarks have been established [6, 7, 8, 9, 10].

Nevertheless, we see two main hurdles in existing benchmarking methods, as summarized below. (1)

Testing leakage. Along with increasing tasks and corpus involved in pre-training, the answer to the

testing sample may have been seen and the performance is thus over-estimated. (2) Evaluation au-

tomation. Evaluating machine-generated texts is a long-standing challenge. Thus, researchers often

convert the tasks into multi-choice problems to ease the quantitative analysis. This is clearly against

real scenarios — as user-machine communications are mostly open-ended Question Answering (QA)

or freeform QA [

11

]. On the other hand, due to the existence of a vast number of valid “good”

answers, it is impossible to define one or several groundtruth, making similarity-based matching

∗

Equal contribution

37th Conference on Neural Information Processing Systems (NeurIPS 2023) Track on Datasets and Benchmarks.

LM as an

Examiner

Q: Which machine learning

algorithm is mainly used for

classification problems?

Q: Who is considered the

“father” of hip-hop?

Domain: ML

Domain: Hip-Hop

Knowledge breadth

Knowledge depth

Foundation models

A neural

network

Decision

Tree

SVM

FQ: What are the

advantages of

Decision Tree

for classification?

Decision Tree

is more stable.

FQ: How do SVM

algorithm handle

non-linearly

separable data?

By using kernel

functions…

Flan-T5 Vicuna ChatGPT

Rank

Score

Evaluation

Centralized

Evaluation

Decentralized

Evaluation

1

2

3

4

2 has the most

fluent answer

2 has the least

helpful answer

a b

a examines

b on its Q,

vice versa

Fa i r n e ss

Wo r k l o a d

Reliable

Peer-examination

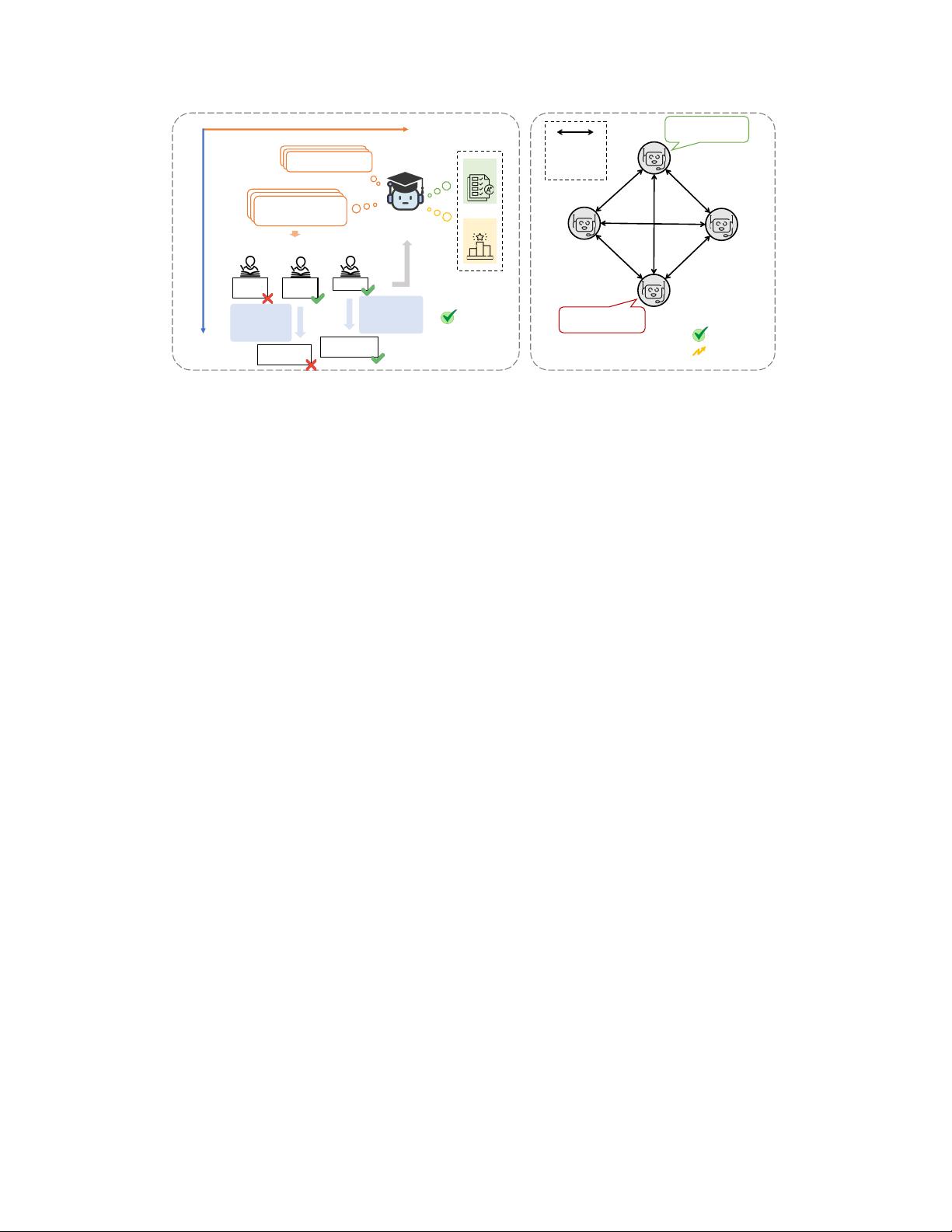

Figure 1: Overview of our benchmarking method. The left part shows the use of language model

as an examiner. The examiner generates questions from various domains, allowing it to probe for

comprehensive understanding (knowledge breadth) as well as deep specialization (knowledge depth)

through follow-up questions (FQs). It then scores and ranks other models’ responses according to its

understanding of the subject, providing a reliable evaluation. The right part presents peer-examination,

a novel decentralized method that provides fairer evaluation results, which potentially demands higher

workload of running multiple LM examiners, compared to running a single LM examiner.

measurements (e.g., Exact Match, ROUGE-L [

12

], and BERTScore [

13

]) ineffective [

11

,

14

,

15

].

Therefore, recent works employ a well-trained evaluator language model (LM) to assess answer quality in a reference-free manner [16, 17, 18]. However, using an LM as an evaluator also presents a problem: what if the evaluator hallucinates and makes wrong judgments during assessment?

As an attempt, our pilot study uses GPT-4 [19] to evaluate the correctness of LLaMA [3] on Natural Questions [20], where a non-negligible 18 out of 100 judgments are incorrect (cases in Appendix A). We attribute this mainly to the evaluator’s own inadequate knowledge of the questions. A straightforward solution is to use the LM not just as an evaluator that assesses responses, but as a knowledgeable examiner that also formulates the questions, which guarantees that it thoroughly understands what it is judging. This also naturally addresses the testing leakage issue, since new questions can be generated periodically. Yet relying on a single centralized examiner can hardly be considered fair, especially when evaluating the examiner itself: a man who is his own lawyer has a fool for his client.

In this paper, we propose a novel benchmarking framework, Language-Model-as-an-Examiner, to assess current foundation models while mitigating the aforementioned issues. Herein, the language model acts as a knowledgeable examiner that poses questions based on its inherent knowledge and evaluates other models on their responses. We devise three strategies to alleviate potential bias:

• Increasing Knowledge Breadth and Depth. In terms of breadth, we select as many diverse domains as possible from a predefined taxonomy to generate questions. In terms of depth, to probe models deeply within a specific subfield, we propose a multi-round setting where the evaluator mimics an interviewer, posing more sophisticated follow-up questions based on the interviewee model’s preceding responses. We release our dataset, LMExamQA, which is constructed using GPT-4 [19] as the examiner.
• Reliable Evaluation Measurement. We explore two evaluation metrics, Likert scale scoring and ranking, which together offer a more comprehensive evaluation result. The results from both metrics correlate closely with human annotations, significantly outperforming all previous metrics.
• Peer-examination Mechanism. To avoid the potential bias arising from a single model as examiner, we propose a decentralized evaluation setting where all participating models are invited to serve as examiners and assess each other.

In experiments, we apply our benchmarking pipeline to 8 popular foundation models and obtain fruitful results. We also demonstrate that peer-examination can generate a more diverse set of questions for knowledge probing and balance the biases of individual evaluator models, ultimately leading to a more equitable evaluation outcome.


2 Related Work

Benchmarks for Foundation Models. Various benchmarks have been proposed to assess foundation models on open-ended question answering, since it is the most natural setting for user-machine interaction in real scenarios. Prominent examples include MS MARCO [21], SQuAD [22, 23], Natural Questions [20], WebQuestions [24], and OpenBookQA [25]. In contrast, only a limited number of datasets feature long-form QA. One widely recognized example is ELI5 [26], which comprises questions that require lengthy descriptive and explanatory answers. One notable limitation of these benchmarks is their reliance on human curation and annotation, which inherently constrains their scalability. Our approach, by comparison, uses LMs to construct datasets, offering the advantage of effortless extensibility.

Automating NLG Evaluation. To evaluate machine-generated responses to questions, several automatic metrics have been adopted, including the F1 score, Exact Match (EM), BLEU [27], ROUGE [12], and METEOR [28]. However, each metric has its own shortcomings, resulting in large discrepancies between measured and actual performance [14, 29, 30].
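To make this shortcoming concrete, the following is a minimal sketch of Exact Match and token-level F1, assuming SQuAD-style normalization (lowercasing, stripping punctuation and English articles). The two answer strings are our own illustrative examples, not data from the paper: a correct but longer answer receives an EM of 0 and a low F1 against a short reference.

```python
import re
import string
from collections import Counter


def normalize(text: str) -> list[str]:
    """Lowercase, drop punctuation and English articles, then split on whitespace."""
    text = text.lower()
    text = "".join(ch for ch in text if ch not in string.punctuation)
    text = re.sub(r"\b(a|an|the)\b", " ", text)
    return text.split()


def exact_match(prediction: str, reference: str) -> float:
    """1.0 if the normalized token sequences are identical, else 0.0."""
    return float(normalize(prediction) == normalize(reference))


def token_f1(prediction: str, reference: str) -> float:
    """Harmonic mean of token precision and recall (bag-of-words overlap)."""
    pred, ref = normalize(prediction), normalize(reference)
    overlap = sum((Counter(pred) & Counter(ref)).values())
    if overlap == 0:
        return 0.0
    precision = overlap / len(pred)
    recall = overlap / len(ref)
    return 2 * precision * recall / (precision + recall)


# Illustrative strings: the prediction is correct but phrased more fully.
reference = "Silicon"
prediction = "Most chips are built on silicon wafers."
print(exact_match(prediction, reference))         # 0.0
print(round(token_f1(prediction, reference), 3))  # 0.25
```

A correct free-form answer is penalized simply for being phrased differently from the reference, which is the failure mode that motivates reference-free evaluation.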

To address these issues, well-trained LMs are used in NLG evaluation [31, 32, 33, 34]. One mainstream line of previous methods is reference-based, deriving the similarity between the candidate and the reference with an LM. Prominent metrics in this class include MoverScore [35] and BERTScore [13]. These metrics measure distributional similarity rather than lexical overlap [36], making them appropriate for contexts that require more flexible generation. Recent studies [16, 17, 18, 37, 38, 39, 40, 41] have demonstrated that large language models (LLMs), such as ChatGPT [2], can conduct NLG evaluations in a reference-free manner. They can rate a candidate text (or perform a comparative assessment of two candidates) based on a specified evaluation aspect, displaying a high correlation with human assessments in tasks such as summarization and story generation [42, 43]. In these studies, the evaluations primarily focus on lexical quality aspects of the generated text, such as coherence and fluency. However, their capability to evaluate crucial aspects of a QA response, including factual correctness and information comprehensiveness, remains uncertain. Moreover, a single evaluator inevitably brings bias to the assessment [17]. Our work aims to resolve these issues by leveraging the LM not just as an evaluator but also as an examiner, which assesses the performance of other models through self-generated questions, and by deploying multiple LM examiners to ensure a balanced evaluation.

3 Methodology

In this section, we discuss the methodology of Language-Model-as-an-Examiner, including the LMExamQA dataset construction, the evaluation metric design, and the peer-examination pipeline.

3.1 Dataset Construction

Question Generation towards Knowledge Breadth. We employ a language model (LM) as an

examiner that generates diversifying and high-quality questions across various domains. To ensure

wide coverage of knowledge, we choose the Google Trends Categories

2

as the domain taxonomy,

and randomly select

n

domains from it. For each domain, we prompt the LM to generate

m

distinct

questions. Our designed prompt (shown in Appendix B) is formulated to ensure that the generated

questions possess three essential characteristics: diversified question forms, varied cognitive levels,

and most importantly, assurance that the LM has a comprehensive understanding of the knowledge

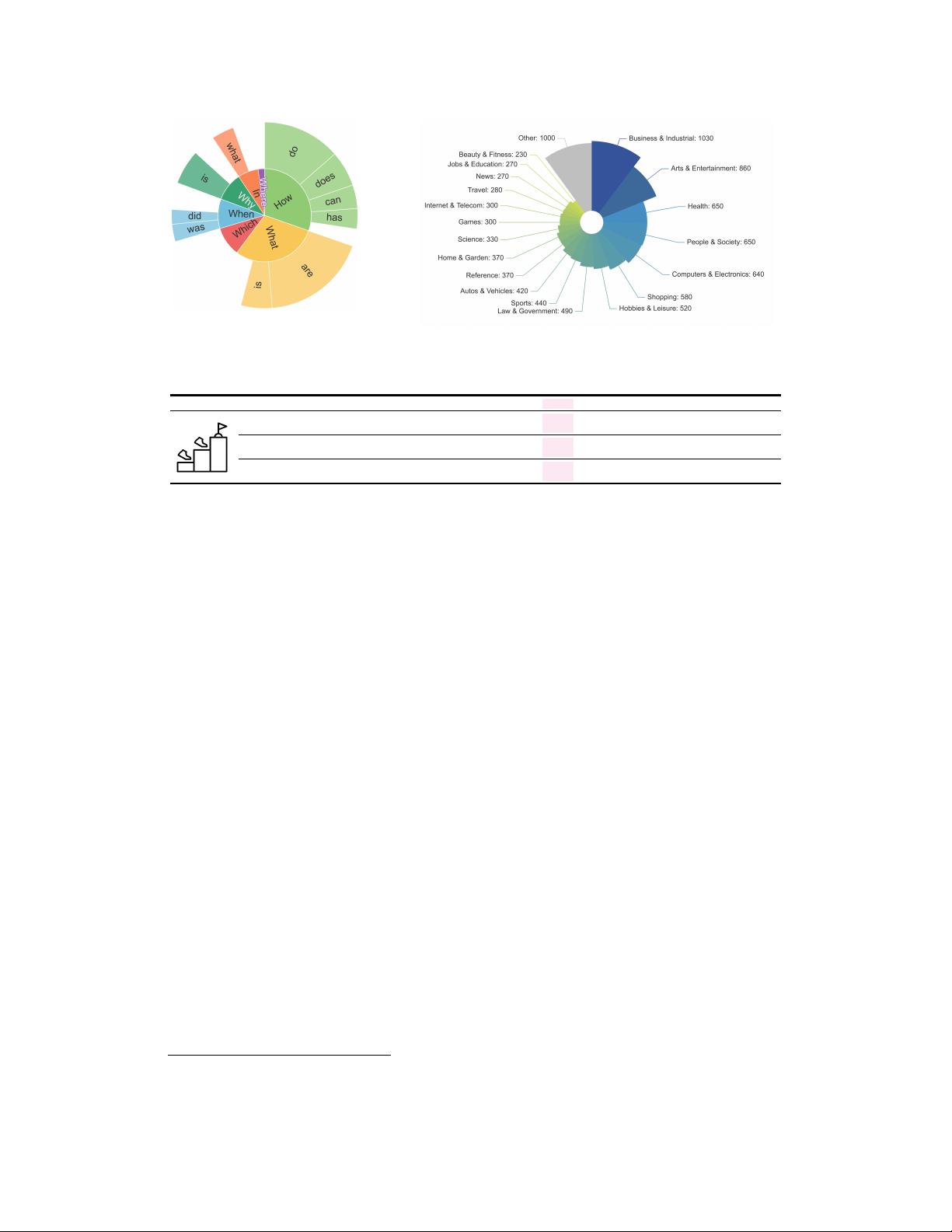

surrounding the question it poses. Figure 2 shows the distribution of question forms based on their

interrogative words, and the distribution of question domains. According to Bloom’s taxonomy [

44

],

we divide the questions into 3 categories based on their required cognitive levels, from low to

high-level, namely knowledge memorization, knowledge comprehension, and knowledge analysis:

• Knowledge memorization. Questions at this level require recognition or recollection of certain entities and attributes, such as a person, location, or time.
• Knowledge comprehension. These questions involve demonstrating an understanding of particular instances or concepts, such as “What is . . . ”, “Why . . . ”, and “How . . . ”.
• Knowledge analysis. Questions of this type require more advanced cognitive skills and typically ask about the impact, comparison, or advantages and disadvantages of a given topic.

² https://github.com/pat310/google-trends-api/wiki/Google-Trends-Categories.

Figure 2: Statistics of generated questions in LMExamQA. (a) Question word distribution. (b) Question domain distribution.

| Level | MS [21] | SQuAD2.0 [23] | NQ [20] | ELI5 [26] | Ours | Example question in our dataset |
|---|---|---|---|---|---|---|
| Analysis | 1% | 4% | 3% | 0% | 35% | What are the potential short and long-term impacts of divorce on children? |
| Comprehension | 4% | 13% | 19% | 100% | 43% | How does towing capacity affect a truck’s performance and what factors influence its maximum towing limit? |
| Memorization | 95% | 83% | 78% | 0% | 22% | Which international organization publishes the World Economic Outlook report? |

Table 1: Proportions of questions at each cognitive level. MS and NQ are short for MS MARCO and Natural Questions. We also list an example question from LMExamQA for each category.
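The breadth-oriented generation step described at the start of this subsection (sample n domains from the taxonomy, then ask the examiner for m distinct questions per domain) can be sketched as follows. This is a minimal sketch under stated assumptions: `ask_examiner` is a hypothetical callable standing in for a call to the examiner LM, the prompt string is a placeholder rather than the paper's Appendix B prompt, and the domain names are illustrative.

```python
import random
from typing import Callable


def generate_questions(
    taxonomy: list[str],
    n: int,
    m: int,
    ask_examiner: Callable[[str], list[str]],
    seed: int = 0,
) -> dict[str, list[str]]:
    """Sample n distinct domains and collect up to m unique questions for each."""
    rng = random.Random(seed)
    questions: dict[str, list[str]] = {}
    for domain in rng.sample(taxonomy, n):  # sampling without replacement
        # Placeholder prompt (hypothetical); the paper's real prompt is in its Appendix B.
        prompt = f"Generate {m} distinct questions about the domain: {domain}"
        generated = ask_examiner(prompt)
        # dict.fromkeys drops duplicate questions while preserving their order.
        questions[domain] = list(dict.fromkeys(generated))[:m]
    return questions


def fake_examiner(prompt: str) -> list[str]:
    """Toy stand-in for the examiner LM: returns canned, partly duplicated questions."""
    domain = prompt.rsplit(": ", 1)[-1]
    return [
        f"What is a key idea in {domain}?",
        f"What is a key idea in {domain}?",
        f"Why does {domain} matter?",
        f"How has {domain} changed over time?",
    ]


taxonomy = ["Machine Learning", "Hip-Hop", "Finance", "Health", "Travel"]  # illustrative
for domain, qs in generate_questions(taxonomy, n=3, m=2, ask_examiner=fake_examiner).items():
    print(domain, qs)
```

Deduplicating before truncating to m keeps the "m distinct questions" requirement even when the examiner repeats itself.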

Using GPT-4 to categorize the questions in LMExamQA and in previous open-ended QA datasets into the three levels³, we obtain the distribution over the 3 cognitive levels listed in Table 1, along with an example of each type of question. Compared with previous datasets, LMExamQA achieves a more balanced distribution across these 3 levels, thus providing a means of quantifying foundation models’ proficiency at each cognitive level. Furthermore, LMExamQA includes a larger proportion of questions at higher cognitive levels, particularly the analysis level, indicating a greater level of challenge.
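As a minimal sketch, level proportions like those in Table 1, and a simple percent-agreement check like the human/GPT-4 comparison in footnote 3, could be computed from per-question labels as follows. The function names and the toy labels are hypothetical and are not LMExamQA data.

```python
from collections import Counter

LEVELS = ("memorization", "comprehension", "analysis")


def level_distribution(labels: list[str]) -> dict[str, float]:
    """Percentage of questions at each cognitive level, rounded to one decimal."""
    if not labels:
        raise ValueError("need at least one label")
    unknown = set(labels) - set(LEVELS)
    if unknown:
        raise ValueError(f"unexpected labels: {sorted(unknown)}")
    counts = Counter(labels)
    return {level: round(100 * counts[level] / len(labels), 1) for level in LEVELS}


def percent_agreement(human: list[str], model: list[str]) -> float:
    """Share of questions (in %) on which the human and model labels coincide."""
    if len(human) != len(model) or not human:
        raise ValueError("label lists must be non-empty and of equal length")
    return 100 * sum(h == m for h, m in zip(human, model)) / len(human)


# Toy labels for illustration only.
gpt4_labels = ["analysis", "comprehension", "comprehension", "memorization"]
human_labels = ["analysis", "comprehension", "memorization", "memorization"]
print(level_distribution(gpt4_labels))
# {'memorization': 25.0, 'comprehension': 50.0, 'analysis': 25.0}
print(percent_agreement(human_labels, gpt4_labels))  # 75.0
```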

To verify the reliability of the LM examiner as an evaluator on these questions, we employ it to produce a groundtruth answer with the prompt, “Answer the questions accurately and completely, without providing additional details.” Upon evaluation by human experts on a random selection of 100 questions, the answers offered by the LM exhibit a 100% accuracy rate, demonstrating mastery over the questions it generates.

Multi-round Follow-up Question Generation towards Knowledge Depth. To further probe a model’s comprehension of a topic in depth, we develop an evaluation procedure involving multiple rounds of follow-up inquiries, drawing inspiration from the interview process. We use the LM examiner to construct a series of follow-up inquiries (the prompt is shown in Appendix B). These follow-up questions are specifically tailored to delve deeper into the concepts presented in the model-generated answers from the previous round. As the follow-up questions depend on the model’s generated answers, we only ask follow-up questions for correctly answered queries (as determined by the LM examiner) and calculate the proportion of correct responses in the subsequent round. We limit the total number of rounds to k in order to minimize the topic deviation that might occur during longer sessions. Note that we only provide the interviewee model with the follow-up question as input, rather than the full “exam history”⁴, since most models are not capable of multi-round conversations. We show an example of a follow-up question to Flan-T5 [48]:

Question: Which material is primarily used to manufacture semiconductor devices?
Flan-T5: Silicon ✓
Follow-up Question: What are the advantages of using silicon as the primary material for semiconductor devices?
Flan-T5: Silicon is a nonrenewable resource, and it is the most abundant element on Earth. ✗

³ We manually label 100 of the questions in LMExamQA and find a high agreement (85%) between human and GPT-4 annotations.

⁴ It is important to note that our approach is essentially different from conversational QA [45, 46, 47], which places greater emphasis on evaluating the model’s comprehension of the conversational context.
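The multi-round procedure in this subsection can be sketched as a loop, assuming three hypothetical callables: `answer` (the interviewee, which sees only the current question and no exam history), `judge` (the examiner's correctness decision), and `follow_up` (the examiner's follow-up generator). In this sketch only correctly answered questions advance, and the loop stops after k rounds; the toy stand-ins at the bottom are illustrative only.

```python
from typing import Callable


def follow_up_accuracy(
    seed_questions: list[str],
    answer: Callable[[str], str],
    judge: Callable[[str, str], bool],
    follow_up: Callable[[str, str], str],
    k: int,
) -> list[float]:
    """Fraction of correct answers in each round, for at most k rounds.

    Round 1 asks the seed questions; every later round only asks follow-ups
    to questions that were answered correctly in the round before.
    """
    accuracies: list[float] = []
    current = list(seed_questions)
    for _ in range(k):
        if not current:  # nothing was answered correctly, so no follow-ups remain
            break
        next_round: list[str] = []
        correct = 0
        for question in current:
            reply = answer(question)  # the interviewee sees only this question
            if judge(question, reply):
                correct += 1
                next_round.append(follow_up(question, reply))
        accuracies.append(correct / len(current))
        current = next_round
    return accuracies


# Toy stand-ins: each follow-up appends "+", and the toy judge fails questions
# that start with "q4" or that have already been followed up twice.
def toy_answer(question: str) -> str:
    return question


def toy_judge(question: str, reply: str) -> bool:
    return not question.startswith("q4") and question.count("+") < 2


def toy_follow_up(question: str, reply: str) -> str:
    return question + "+"


print(follow_up_accuracy(["q1", "q2", "q3", "q4"], toy_answer, toy_judge, toy_follow_up, k=3))
# [0.75, 1.0, 0.0]
```

Because each round's denominator is the number of questions that survived the previous round, the per-round figures measure depth conditional on earlier success, matching the "proportion of correct responses in the subsequent round" described above.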

3.2 Evaluation Metrics

Several methodologies are commonly employed to elicit human-like evaluation from LMs, most prominently Likert scale scoring [16, 17, 41] and pairwise comparison [38, 41]. For the purposes of our benchmark, we incorporate both Likert scale scoring and a variant of pairwise comparison, namely ranking.

Likert scale scoring functions as an absolute evaluative measure, where the evaluator assigns scores to a given response along predefined dimensions. We establish four distinct dimensions on our dataset: (1) Accuracy. This assesses the extent to which the provided response accurately answers the question. (2) Coherence. This evaluates the logical structure and organization of the response and the degree to which it can be comprehended by non-specialists. (3) Factuality. This examines whether the response contains factual inaccuracies. (4) Comprehensiveness. This gauges whether the response encompasses multiple facets of the question, thus providing a thorough answer. Each of these dimensions is scored on a scale of 1 to 3, from worst to best. We also ask the evaluator to provide an overall score from 1 to 5, based on the scores assigned to the previous 4 dimensions. This score serves as an indicator of the overall quality of the answer.
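A minimal sketch of a record for one Likert judgment follows, enforcing the ranges above (1 to 3 per dimension, 1 to 5 overall), plus per-model averaging. The class name, field names, and the averaging step are our assumptions; the overall score is taken as given by the evaluator rather than computed from the four dimensions.

```python
from dataclasses import dataclass, fields

DIMENSIONS = ("accuracy", "coherence", "factuality", "comprehensiveness")


@dataclass(frozen=True)
class LikertScore:
    """One evaluator judgment: four dimensions on 1-3 plus an overall score on 1-5."""
    accuracy: int
    coherence: int
    factuality: int
    comprehensiveness: int
    overall: int  # assigned by the evaluator; not derived from the four dimensions here

    def __post_init__(self) -> None:
        for name in DIMENSIONS:
            value = getattr(self, name)
            if not 1 <= value <= 3:
                raise ValueError(f"{name} must be in 1..3, got {value}")
        if not 1 <= self.overall <= 5:
            raise ValueError(f"overall must be in 1..5, got {self.overall}")


def average_scores(scores: list[LikertScore]) -> dict[str, float]:
    """Mean of every field across a model's judged answers."""
    if not scores:
        raise ValueError("need at least one score")
    return {
        f.name: sum(getattr(s, f.name) for s in scores) / len(scores)
        for f in fields(LikertScore)
    }


# Two made-up judgments for one model.
judgments = [LikertScore(3, 3, 3, 2, 5), LikertScore(2, 3, 2, 1, 3)]
print(average_scores(judgments))
# {'accuracy': 2.5, 'coherence': 3.0, 'factuality': 2.5, 'comprehensiveness': 1.5, 'overall': 4.0}
```

Validating ranges at construction time catches malformed evaluator outputs before they silently skew a model's averages.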

On the other hand, pairwise comparison operates as a relative evaluation method and is often more discerning than scoring. In this process, evaluators are given two responses and tasked with determining which is superior, taking into account their accuracy, coherence, factuality, and comprehensiveness. Given n contestant models, we implement a merge sort algorithm to rank the n responses, which requires O(n log n) pairwise comparisons.
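The ranking step can be sketched as a merge sort whose only comparison primitive is a pairwise judgment. Here `better` is a hypothetical stand-in for a prompt asking the examiner which of two responses is superior; in the usage example it is a toy function over made-up quality scores for anonymous responses. The counter lets one check that the number of judge calls stays within the O(n log n) bound.

```python
from typing import Callable, TypeVar

T = TypeVar("T")


def merge_sort_rank(items: list[T], better: Callable[[T, T], bool]) -> tuple[list[T], int]:
    """Rank items best-first using only pairwise judgments.

    better(a, b) returns True if the judge prefers a over b.
    Returns the ranking together with the number of judge calls made.
    """
    calls = 0

    def sort(seq: list[T]) -> list[T]:
        nonlocal calls
        if len(seq) <= 1:
            return seq
        mid = len(seq) // 2
        left, right = sort(seq[:mid]), sort(seq[mid:])
        merged: list[T] = []
        i = j = 0
        while i < len(left) and j < len(right):
            calls += 1
            if better(right[j], left[i]):
                merged.append(right[j])
                j += 1
            else:  # on a tie or a left win, keep the left item first (stable)
                merged.append(left[i])
                i += 1
        merged.extend(left[i:])
        merged.extend(right[j:])
        return merged

    return sort(list(items)), calls


# Toy judge over made-up quality scores for five anonymous responses.
quality = {"A": 3, "B": 5, "C": 1, "D": 4, "E": 2}
ranking, calls = merge_sort_rank(list(quality), lambda a, b: quality[a] > quality[b])
print(ranking)  # ['B', 'D', 'A', 'E', 'C']
print(calls)
```

One practical property of merge sort in this setting: each pair of responses is compared at most once, and termination never depends on the judge being consistent, so even a non-transitive LM judge still yields a complete ranking.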

3.3 Decentralized Evaluation: Peer-Examination

We introduce a novel decentralized method that incorporates multiple models to serve as examiners,

namely Peer-examination (illustrated in the right part of Figure 1), since relying only on one cen-

tralized model as the examiner introduces the following potential drawbacks to the benchmarking

process. (1) Coverage of generated questions: The examiner may not have a holistic understanding

of certain domain knowledge. As a result, the examiner may struggle to propose questions that

examine in detail on these areas, which in turn renders the scope of generated questions insufficient.

(2) Potential bias during evaluation: The model itself may have a bias during evaluation. The bias

can manifest as a preference for certain types of responses or a predisposition towards perspectives

irrelevant to the quality of the responses, such as response length or linguistic style. For example,

[

17

] shows that GPT-4 [

19

] prefers ChatGPT [

2

] summaries compared to human-written summaries.

Such biases may result in unfair ranking assessment outcomes.

To mitigate these issues, during peer-examination each model is separately assigned the role of examiner. As examiners, they are responsible for posing questions and evaluating the answers provided by the other models. We then combine the evaluation results from all of these models by voting to obtain a final result. This approach leverages the collective expertise and diverse perspectives of all models to improve the coverage of questions as well as ensure fairer assessments.
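The text specifies only that the examiners' results are combined by voting. As one possible instantiation (our assumption, not necessarily the scheme used in the paper), the sketch below applies a Borda count to per-examiner rankings, where each examiner ranks only the other models. The model names M1 to M4 are hypothetical.

```python
from collections import defaultdict


def borda_aggregate(rankings: dict[str, list[str]]) -> list[tuple[str, int]]:
    """Combine per-examiner rankings (best first) into one ranking via a Borda count.

    rankings maps each examiner to its ranking of the *other* models. A model at
    position i in a ranking of length L receives L - 1 - i points from that examiner.
    """
    points: dict[str, int] = defaultdict(int)
    for examiner, ranking in rankings.items():
        if examiner in ranking:
            raise ValueError(f"{examiner} must not rank its own answers")
        for position, model in enumerate(ranking):
            points[model] += len(ranking) - 1 - position
    # Highest total first; ties broken alphabetically so the output is deterministic.
    return sorted(points.items(), key=lambda item: (-item[1], item[0]))


# Hypothetical peer-examination among four models.
rankings = {
    "M1": ["M2", "M3", "M4"],
    "M2": ["M1", "M3", "M4"],
    "M3": ["M1", "M2", "M4"],
    "M4": ["M1", "M2", "M3"],
}
print(borda_aggregate(rankings))
# [('M1', 6), ('M2', 4), ('M3', 2), ('M4', 0)]
```

Because each model is excluded only from its own examiner's list, every model appears in the same number of rankings of equal length, so the Borda totals are directly comparable.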

4 Experiments

To demonstrate the effectiveness of our Language-Model-as-an-Examiner framework, we first employ GPT-4 [19] as the examiner for a centralized evaluation, since it exhibits a broad understanding of knowledge [9, 49, 50] and a precise judgment ability [16, 17]. In peer-examination, we additionally employ Claude (Claude-instant) [51], ChatGPT [2], Bard [52], and Vicuna-13B [38] as LM examiners.
