
Natural Language Reinforcement Learning (NLRL) Framework: Application and Implementation in MDP Tasks


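Summary: This paper proposes a new reinforcement learning framework, Natural Language Reinforcement Learning (NLRL), which recasts the traditional mathematical formulation in natural language and uses the capabilities of large language models (LLMs) to strengthen decision-making. The authors describe in detail how to redefine key concepts of traditional RL, such as task objectives, policies, value functions, the Bellman equation, and generalized policy iteration, so that they match the way humans express themselves in natural language. The experiments demonstrate the effectiveness and efficiency of the method on tabular Markov decision processes (MDPs), while addressing the low sample efficiency, poor interpretability, and sparse supervision signals of traditional RL. Intended audience: researchers and practitioners with basic knowledge of natural language processing and machine learning, especially readers interested in reinforcement learning and large language models. Use cases and goals: (1) combine natural language representation with reinforcement learning to improve decision-making; (2) improve the interpretability and transparency of the decision-making process; (3) reduce the number of samples needed and improve learning efficiency. Other notes: although the NLRL framework shows promising initial results, it currently has limitations, such as hallucination in large language models; future work will address these issues and extend the scope of the experiments.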


Preprint. Work in progress.

NATURAL LANGUAGE REINFORCEMENT LEARNING

Xidong Feng¹∗, Ziyu Wan²∗, Mengyue Yang¹, Ziyan Wang³, Girish A. Koushik⁴, Yali Du³, Ying Wen², Jun Wang¹

¹University College London, ²Shanghai Jiao Tong University, ³King’s College London, ⁴University of Surrey

ABSTRACT

Reinforcement Learning (RL) has shown remarkable abilities in learning policies for decision-making tasks. However, RL is often hindered by issues such as low sample efficiency, lack of interpretability, and sparse supervision signals. To tackle these limitations, we take inspiration from the human learning process and introduce Natural Language Reinforcement Learning (NLRL), which innovatively combines RL principles with natural language representation. Specifically, NLRL redefines RL concepts like task objectives, policy, value function, Bellman equation, and policy iteration in natural language space. We present how NLRL can be practically implemented with the latest advancements in large language models (LLMs) like GPT-4. Initial experiments over tabular MDPs demonstrate the effectiveness, efficiency, and also interpretability of the NLRL framework.

1 INTRODUCTION

Reinforcement Learning (RL) constructs a mathematical framework that encapsulates key decision-making elements. It quantifies the objectives of tasks through the concept of cumulative rewards, formulates policies with probability distributions, expresses value functions via mathematical expectations, and models environment dynamics through state transition and reward functions. This framework effectively converts the policy learning problem into an optimization problem.

Despite the remarkable achievements of RL in recent years, significant challenges still underscore the framework’s limitations. For example, RL suffers from the sample efficiency problem: RL algorithms are task-agnostic and do not leverage any prior knowledge, requiring large-scale and extensive sampling to develop an understanding of the environment. RL also lacks interpretability. Despite the superhuman performance of models like AlphaZero (Silver et al., 2017) in mastering complex games such as Go, the underlying strategic logic of their decision-making processes remains elusive, even to professional players. In addition, the supervision signal of RL is a one-dimensional scalar value, which is much sparser than the signal in traditional supervised learning over information-rich datasets such as texts and images. This is also one of the reasons for the instability of RL training (Zheng et al., 2023; Andrychowicz et al., 2020).

These limitations drive us to a new framework inspired by the human learning process. Instead of mathematically modeling decision-making components as RL algorithms do, humans tend to conduct relatively vague operations in natural language. First, natural language equips humans with text-based prior knowledge, which largely increases the sample efficiency when learning new tasks. Second, humans possess the unique ability to articulate their explicit strategic reasoning and thoughts in natural language before deciding on their actions, making their process fully interpretable by others, even if it’s not always the most effective approach for task completion. Third, natural language data contains information about thinking, analysis, evaluation, and future planning. It can provide signals with high information density, far surpassing that found in the reward signals of traditional RL.

Inspired by the human learning process, we propose Natural Language Reinforcement Learning (NLRL), a new RL paradigm that innovatively combines the traditional RL concepts and natural language representation. By transforming key RL components, such as task objectives, policies, value functions, the Bellman equation, and generalized policy iteration (GPI) (Sutton & Barto, 2018), into their natural language equivalents, we harness the intuitive power of language to encapsulate complex decision-making processes. This transformation is made possible by leveraging recent breakthroughs in large language models (LLMs), which possess human-like ability to understand, process, and generate language-based information. Our initial experiments over tabular MDPs validate the effectiveness, efficiency, and interpretability of our NLRL framework.

∗ Equal Contribution. Correspondence to xidong.feng.20@ucl.ac.uk.

arXiv:2402.07157v2 [cs.CL] 14 Feb 2024

2 PRELIMINARY OF REINFORCEMENT LEARNING

Reinforcement Learning models the decision-making problem as a Markov Decision Process (MDP), defined by the state space $\mathcal{S}$, action space $\mathcal{A}$, probabilistic transition function $P: \mathcal{S} \times \mathcal{A} \times \mathcal{S} \to [0, 1]$, discount factor $\gamma \in [0, 1)$ and reward function $r: \mathcal{S} \times \mathcal{A} \to [-R_{\max}, R_{\max}]$. The goal of RL is to learn a policy $\pi: \mathcal{S} \times \mathcal{A} \to [0, 1]$, which gives the probability of action $a$ given the state $s$: $\pi(a \mid s) = \Pr(A_t = a \mid S_t = s)$. In decision-making tasks, the optimal policy maximizes the expected discounted cumulative reward: $\mathbb{E}_{\pi}\left[\sum_{t=0}^{\infty} \gamma^{t} r(s_t, a_t)\right]$.

The state-action and state value functions are two key concepts that evaluate states and actions as proxies for the RL objective: $Q_{\pi}(s_t, a_t) = \mathbb{E}_{(s,a)_{t+1:\infty} \sim P_{\pi}}\left[\sum_{i=t}^{\infty} \gamma^{i-t} r(s_i, a_i) \mid s_t, a_t\right]$ and $V_{\pi}(s_t) = \mathbb{E}_{(s,a)_{t+1:\infty} \sim P_{\pi}}\left[\sum_{i=t}^{\infty} \gamma^{i-t} r(s_i, a_i) \mid s_t\right]$, where $P_{\pi}$ is the trajectory distribution given the policy $\pi$ and the transition dynamics $P$.

Given the definition of $V_{\pi}(s_t)$, the relationship between the values of temporally adjacent states can be derived as the Bellman expectation equation. Here is a one-step Bellman expectation equation:

$$V_{\pi}(s_t) = \mathbb{E}_{a_t \sim \pi_{\theta}}\Big[r(s_t, a_t) + \gamma\, \mathbb{E}_{s_{t+1} \sim p(s_{t+1} \mid s_t, a_t)}\left[V_{\pi}(s_{t+1})\right]\Big], \quad \forall s_t \in \mathcal{S} \tag{1}$$

A similar equation can also be derived for $Q_{\pi}(s, a)$. Given these basic RL definitions and equations, we can illustrate how policy evaluation and policy improvement are conducted in GPI.
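As a quick numeric check of Equ. 1, take state b of the grid-world in Fig. 1, where the policy is uniform over four moves and the figure lists Q(b, Move Left) = 1, Q(b, Move Right) = 0, Q(b, Move Up) = 0 and Q(b, Move Down) = -1. The step below assumes the standard identity that the state value is the policy-weighted average of the state-action values, which the text does not state explicitly:

```latex
V_{\pi}(b) = \sum_{a} \pi(a \mid b)\, Q_{\pi}(b, a)
           = \tfrac{1}{4}\bigl(1 + 0 + 0 - 1\bigr) = 0
```

This matches the value 0 = V(b) = 1/4(1+0+0-1) shown in the figure.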

Policy Evaluation. The target of the policy evaluation process is to estimate the state value function $V_{\pi}(s)$ or the state-action value function $Q_{\pi}(s, a)$. For simplicity, we only use $V_{\pi}(s)$ in the following illustration. Two common value function estimation methods are the Monte Carlo (MC) estimate and the Temporal-Difference (TD) estimate (Sutton, 1988). The MC estimate leverages Monte Carlo sampling over $K$ trajectories to construct an unbiased estimate: $V_{\pi}(s_t) \approx \frac{1}{K} \sum_{n=1}^{K} \left[\sum_{i=t}^{\infty} \gamma^{i-t} r(s_i^n, a_i^n)\right]$. The TD estimate relies on the temporal relationship shown in Equ. 1 to construct an estimate: $V_{\pi}(s_t) \approx \frac{1}{K} \sum_{n=1}^{K} \left[r(s_t, a_t^n) + \gamma V_{\pi}(s_{t+1}^n)\right]$, which can be seen as bootstrapping from the next-state value function.
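The two estimators can be compared side by side in a few lines. The following is a minimal sketch, not the authors' code: the function names, the state labels and the sample data are all hypothetical, and the value table passed to the TD estimate is assumed to be given.

```python
from typing import Dict, List, Tuple


def mc_estimate(reward_sequences: List[List[float]], gamma: float) -> float:
    """Average the discounted return over K sampled reward sequences."""
    returns = [
        sum((gamma ** i) * r for i, r in enumerate(rewards))
        for rewards in reward_sequences
    ]
    return sum(returns) / len(returns)


def td_estimate(
    samples: List[Tuple[float, str]], value: Dict[str, float], gamma: float
) -> float:
    """Average r + gamma * V(s') over K one-step samples (reward, next_state)."""
    targets = [r + gamma * value[s_next] for r, s_next in samples]
    return sum(targets) / len(targets)


# Hypothetical samples starting from one state (not data from the paper).
rollout_rewards = [[0.0, 0.0, 1.0], [0.0, -1.0]]  # rewards along two full rollouts
one_step = [(0.0, "a"), (-1.0, "d")]              # (reward, next state) pairs
current_values = {"a": 1.0, "d": 0.0}             # assumed current value table

mc_value = mc_estimate(rollout_rewards, gamma=0.9)
td_value = td_estimate(one_step, current_values, gamma=0.9)
```

The MC estimate needs complete rollouts but no value table, while the TD estimate needs only one-step samples plus the current estimate of the next-state values.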

Policy Improvement. The policy improvement process aims to update and improve the policy according to the result of policy evaluation. Specifically, it replaces the old policy $\pi_{\text{old}}$ with a new one $\pi_{\text{new}}$ so that the expected return increases: $V_{\pi_{\text{new}}}(s_0) \geq V_{\pi_{\text{old}}}(s_0)$. In environments with small, discrete action spaces, such improvements can be achieved by greedily choosing the action that maximizes $Q_{\pi_{\text{old}}}(s, a)$ at each state:

$$\pi_{\text{new}}(\cdot \mid s) = \arg\max_{\bar{\pi}(\cdot \mid s) \in \mathcal{P}(\mathcal{A})} \mathbb{E}_{a \sim \bar{\pi}}\left[Q_{\pi_{\text{old}}}(s, a)\right], \quad \forall s \in \mathcal{S} \tag{2}$$
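For a tabular problem, the maximization in Equ. 2 is attained by a deterministic policy that picks the highest-valued action in each state. A minimal sketch follows, using the Q values listed for state b in Fig. 1; the function name and the dictionary layout are assumptions made for illustration.

```python
from typing import Dict


def greedy_improvement(q_values: Dict[str, Dict[str, float]]) -> Dict[str, str]:
    """For each state, pick the action with the largest Q value.

    Ties are broken in favour of the action listed first.
    """
    return {
        state: max(action_values, key=action_values.get)
        for state, action_values in q_values.items()
    }


# Q values for state b as listed in Fig. 1.
q_old = {
    "b": {"Move Left": 1.0, "Move Right": 0.0, "Move Up": 0.0, "Move Down": -1.0}
}
new_policy = greedy_improvement(q_old)
```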

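Another improvement method involves applying policy gradient ascent (Sutton et al., 1999). It parameterizes the policy $\pi_{\theta}$ with $\theta$. The analytical policy gradient can be derived as follows:

$$\nabla_{\theta} V(\pi_{\theta})\big|_{\theta=\theta_{\text{old}}} = \mathbb{E}_{(s,a) \sim P_{\pi_{\theta_{\text{old}}}}}\left[\nabla_{\theta} \log \pi_{\theta}(a \mid s)\, Q_{\pi_{\theta_{\text{old}}}}(s, a)\Big|_{\theta=\theta_{\text{old}}}\right]. \tag{3}$$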

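By choosing a relatively small step-size $\alpha > 0$ to conduct gradient ascent, $\theta_{\text{new}} = \theta + \alpha \nabla_{\theta} V_{\pi_{\theta}}(s_0)\big|_{\theta=\theta_{\text{old}}}$, we can guarantee the policy improvement: $V_{\pi_{\text{new}}}(s_0) \geq V_{\pi_{\text{old}}}(s_0)$.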
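To make the gradient step concrete, the sketch below applies Equ. 3 to the simplest possible case: a single state with a softmax policy over four actions and fixed action values. This setting, the function names and the numbers are all assumptions chosen for illustration. For a softmax policy the expectation in Equ. 3 has the closed form $\pi_k (Q_k - V)$ for the $k$-th parameter, which is what `policy_gradient` computes.

```python
import math
from typing import List


def softmax(theta: List[float]) -> List[float]:
    """Numerically stable softmax over action preferences."""
    m = max(theta)
    exps = [math.exp(t - m) for t in theta]
    z = sum(exps)
    return [e / z for e in exps]


def expected_value(theta: List[float], q: List[float]) -> float:
    """V = sum_a pi(a) * Q(a) for a single-state problem."""
    return sum(p * qa for p, qa in zip(softmax(theta), q))


def policy_gradient(theta: List[float], q: List[float]) -> List[float]:
    """E_a[grad log pi(a) * Q(a)], which equals pi_k * (Q_k - V) for softmax."""
    pi = softmax(theta)
    v = sum(p * qa for p, qa in zip(pi, q))
    return [p * (qa - v) for p, qa in zip(pi, q)]


def ascent_step(theta: List[float], q: List[float], alpha: float) -> List[float]:
    """One gradient ascent update with step size alpha."""
    grad = policy_gradient(theta, q)
    return [t + alpha * g for t, g in zip(theta, grad)]


theta_old = [0.0, 0.0, 0.0, 0.0]   # uniform policy
q_fixed = [1.0, 0.0, 0.0, -1.0]    # assumed fixed action values
theta_new = ascent_step(theta_old, q_fixed, alpha=0.1)
```

In this toy setting the update shifts probability toward the first action and away from the last, so the expected value does not decrease for a small step.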

3 NATURAL LANGUAGE REINFORCEMENT LEARNING

In contrast to the precise statistical models used in traditional RL, humans typically frame all elements, including task objectives, value evaluations, and strategic policies, in the form of natural language. This section aims to mimic how humans navigate decision-making tasks using natural language, aligning it with the concepts, definitions, and equations in traditional RL. Due to the inherent ambiguity of natural language, the equations presented here are not strictly derived from mathematical definitions. They are instead analogical and based on empirical insights into the original RL concepts. We leave rigorous theory for future work.


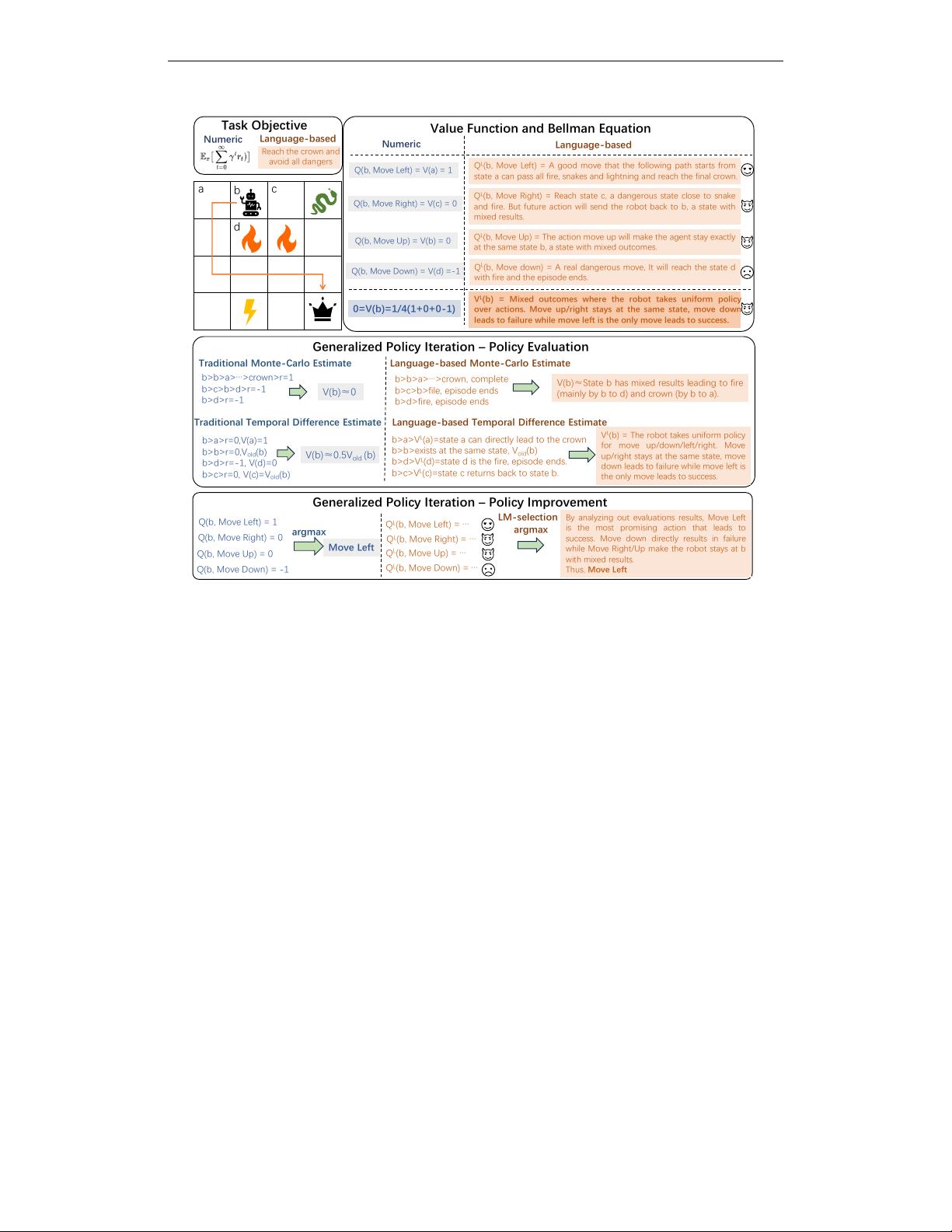

[Figure 1 panels. Task Objective: “Reach the crown and avoid all dangers”. Value Function and Bellman Equation: numeric values Q(b, ·) and V(b) shown beside their language-based counterparts Q^L(b, ·) and V^L(b) for grid states a, b, c, d. Generalized Policy Iteration, Policy Evaluation: numeric versus language-based Monte-Carlo and Temporal-Difference estimates of the value of state b. Generalized Policy Iteration, Policy Improvement: numeric argmax versus language-based LM-selection, both choosing Move Left.]

Figure 1: An illustrative grid-world MDP showing how NLRL and traditional RL differ in task objective, value function, Bellman equation, and generalized policy iteration. In this grid-world, the robot needs to reach the crown and avoid all dangers. We assume the robot’s policy takes the optimal action at each non-terminal state, except at state b, where it follows a uniformly random policy.

3.1 DEFINITIONS

We start with definitions and equations in natural language RL that model human behavior. Fig. 1 provides illustrative examples covering most of the concepts discussed in this section.

Text-based MDP: To conduct RL in natural language space, we first need to convert the traditional MDP into a text-based MDP, which uses text and language descriptions to represent the MDP’s basic concepts, including state, action, and environment feedback (state transitions and reward).
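One simple way to picture the conversion is a function that renders a single transition as a sentence. The sketch below is only an illustration: the `TextTransition` class, its field names and the sentence template are hypothetical and are not taken from the paper.

```python
from dataclasses import dataclass


@dataclass
class TextTransition:
    """One environment step, with states and actions already given as text."""
    state: str
    action: str
    reward: float
    next_state: str
    done: bool


def describe(t: TextTransition) -> str:
    """Render the step (state, action, feedback) as a natural language sentence."""
    outcome = "the episode ends" if t.done else "the episode continues"
    return (
        f"From state {t.state}, the agent takes action '{t.action}', "
        f"receives reward {t.reward:g}, and reaches state {t.next_state}; {outcome}."
    )


step_text = describe(TextTransition("b", "move down", -1.0, "d", True))
```

A text rollout is then just the sequence of such sentences, which is the form of input the language value functions in the rest of this section operate on.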

Language Task instruction: Humans usually define a natural language task instruction $T_L$, like “reaching the goal” or “opening the door”. We then denote by $F$ a human metric that measures the completeness of the task instruction given the trajectory description $D_L(\tau_{\pi})$, where $D_L$ is a language descriptor that transforms the trajectory distribution $\tau_{\pi}$ into its corresponding language description $D_L(\tau_{\pi})$. Based on this notation, the objective of NLRL is reformulated as

$$\max_{\pi} F\left(D_L(\tau_{\pi}), T_L\right) \tag{4}$$

That is, we optimize the policy so that the language description of the trajectory distribution $\tau_{\pi}$ shows high completeness of the task instruction.

Language Policy: Instead of directly modeling action probability, humans determine the action by strategic thoughts, logical reasoning, and planning. Thus, we represent the language policy as $\pi_L(a, c \mid s)$, which first generates such a thought process $c$, then the final action probability $\pi(a \mid s)$.

Language Value Function: Similar to the definition of $Q$ and $V$ in traditional RL, humans leverage a language value function, relying on natural language evaluation to assess the policy’s effectiveness. The language state value function $V^L_{\pi}$ and language state-action value function $Q^L_{\pi}$ are defined as:

$$Q^L_{\pi}(s_t, a_t) = D\left((s, a)_{t+1:\infty} \sim P_{\pi} \mid s_t, a_t, T_L\right), \quad V^L_{\pi}(s_t) = D\left(a_t, (s, a)_{t+1:\infty} \sim P_{\pi} \mid s_t, T_L\right) \tag{5}$$


Given the current state $s_t$ or state-action pair $(s_t, a_t)$, $Q^L_{\pi}$ and $V^L_{\pi}$ use language descriptions instead of scalar values to describe how effective the policy is at achieving the task objective $T_L$. Compared with traditional scalar-based values, the language value functions are intuitively richer in information and more interpretable. They can represent the evaluation results from different perspectives, including the underlying logic and thoughts, predictions and analysis of future outcomes, comparisons among different actions, etc.

Language Bellman Equation: In the Bellman equation, the state evaluation value $V(s_t)$ can be decomposed into two parts. The first is the intermediate changes, which include the immediate action $a_t$, reward $r_t$, and next state $s_{t+1}$. The second is the state evaluation $V(s_{t+1})$ over the next state. Based on this decomposition, we introduce the language Bellman equation, Equ. 6:

$$V^L_{\pi}(s_t) = G_1^{a_t, s_{t+1} \sim P_{\pi}}\Big(G_2\big(d(a_t, r(s_t, a_t), s_{t+1}),\; V^L_{\pi}(s_{t+1})\big)\Big), \quad \forall s_t \in \mathcal{S} \tag{6}$$

where $d(a_t, r(s_t, a_t), s_{t+1})$ is the language description of the intermediate changes, and $G_1$ and $G_2$ serve as two information aggregation functions. Specifically, $G_2$ mimics the addition operation ‘+’ in the original Bellman equation, aggregating information from the descriptions of intermediate changes and the future evaluation given $a_t$ and $s_{t+1}$. $G_1$ takes the role of the expectation operation $\mathbb{E}$, aggregating information from different $(a_t, s_{t+1})$ pairs sampled from the trajectory distribution $P_{\pi}$.

3.2 LANGUAGE GENERALIZED POLICY ITERATION

Given these definitions and equations, we now describe how language GPI is conducted. See Fig. 1 for illustrative examples of language GPI.

3.2.1 LANGUAGE POLICY EVALUATION

Language policy evaluation aims to estimate the language value functions $V^L_{\pi}$ and $Q^L_{\pi}$ for each state. We present how two classical estimates, MC and TD, work in language policy evaluation.

Language Monte-Carlo Estimate. Starting from the state $s_t$, the MC estimate is conducted over text rollouts (i.e., $K$ full trajectories $\{a_t, (s, a)_{t+1:\infty}\}$) given the policy $\pi$. Since we cannot take the average operation in language space, we instead leverage the language aggregator/descriptor $G_1$ to aggregate information over finite trajectories, approximating the expected evaluation:

$$V^L_{\pi}(s_t) \approx G_1\left(\left\{a_t^n, (s, a)^n_{t+1:\infty}\right\}_{n=1}^{K}\right) \tag{7}$$

Language Temporal-Difference Estimate. The TD estimate mainly relies on the one-step language Bellman equation illustrated in Equ. 6. Similar to the MC estimate, we aggregate $K$ one-step samples to approximate the expected evaluation:

$$V^L_{\pi}(s_t) \approx G_1\left(\left\{G_2\left(d(s_t, a_t^n, r(s_t, a_t^n), s_{t+1}^n),\; V^L_{\pi}(s_{t+1}^n)\right)\right\}_{n=1}^{K}\right), \quad \forall s_t \in \mathcal{S} \tag{8}$$
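The structure of Equ. 7 and Equ. 8 can be sketched with plain string functions standing in for the aggregators. This is an illustration under stated assumptions, not the paper's implementation: in NLRL the roles of $G_1$ and $G_2$ would be played by LLM prompts, whereas `stub_g1` and `stub_g2` below are hypothetical deterministic stand-ins, and all texts are made up.

```python
from typing import Callable, Dict, List, Tuple

G1 = Callable[[List[str]], str]   # aggregates K pieces of text into one evaluation
G2 = Callable[[str, str], str]    # fuses a one-step description with a next-state evaluation


def language_mc_estimate(rollout_texts: List[str], g1: G1) -> str:
    """Equ. 7 in spirit: aggregate K full text rollouts with G1."""
    return g1(rollout_texts)


def language_td_estimate(
    samples: List[Tuple[str, str]], value_text: Dict[str, str], g1: G1, g2: G2
) -> str:
    """Equ. 8 in spirit: fuse each one-step description with V^L(next state), then aggregate."""
    fused = [g2(change, value_text[next_state]) for change, next_state in samples]
    return g1(fused)


def stub_g2(change: str, next_evaluation: str) -> str:
    """Hypothetical stand-in for G2: simple concatenation."""
    return f"{change} Next: {next_evaluation}"


def stub_g1(items: List[str]) -> str:
    """Hypothetical stand-in for G1: drop duplicates, keep order, join."""
    return " | ".join(dict.fromkeys(items))


value_text = {"a": "leads to the crown", "d": "fire, episode ends"}
samples = [("b->a.", "a"), ("b->d.", "d"), ("b->a.", "a")]
td_text = language_td_estimate(samples, value_text, stub_g1, stub_g2)
mc_text = language_mc_estimate(["b>a>crown", "b>d>fire", "b>a>crown"], stub_g1)
```

Passing the aggregators in as callables keeps the estimator logic separate from whichever model or prompt performs the aggregation.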

The language MC estimate is free from estimation “bias” as it directly uses samples from complete trajectories. However, the MC method is prone to high “variance” given the significant variations in long-term future steps. Such variability makes it challenging for the language aggregator $G_1$ in Equ. 7 to efficiently extract crucial information from diverse trajectories. In contrast, while the inaccuracy of the next-state evaluation $V^L_{\pi}(s_{t+1})$ can introduce estimation “bias” into the TD estimate, it effectively reduces “variance” by discarding future variations. $G_1$ and $G_2$ are only required to conduct simple one-step information aggregation with limited variations.¹

3.2.2 LANGUAGE POLICY IMPROVEMENT

Similar to traditional policy improvement, language policy improvement aims to select actions that maximize the human task completeness function $F$:

$$\pi_{\text{new}}(\cdot \mid s) = \arg\max_{\bar{\pi}(\cdot \mid s) \in \mathcal{P}(\mathcal{A})} F\left(Q^L_{\pi_{\text{old}}}(s, a), T_L\right), \quad \forall s \in \mathcal{S} \tag{9}$$

¹ We use quotes for “bias” and “variance” to indicate that we draw on their conceptual essence, not their strict statistical definitions, to clarify concepts in NLRL.


As we mentioned, $F$ is typically a human measurement of task completeness, which is hard to quantify and therefore hard to maximize with an argmax operation. Considering that $F$ largely depends on human prior knowledge, instead of mathematically optimizing it, we leverage the language analysis process $I$ to conduct policy optimization and select actions:

$$\pi_{\text{new}}(\cdot \mid s),\; c = I\left(Q^L_{\pi_{\text{old}}}(s, a), T_L\right), \quad \bar{\pi}(\cdot \mid s) \in \mathcal{P}(\mathcal{A}), \quad \forall s \in \mathcal{S} \tag{10}$$

Language policy improvement conducts strategic analysis $C$ to generate the thought process $c$ and determine the most promising action as the new policy $\pi_{\text{new}}(\cdot \mid s)$. This analysis is mainly based on a human-like judgment of the correlation between the language evaluation $Q^L_{\pi_{\text{old}}}(s, a)$ and the task objective $T_L$.
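The improvement step of Equ. 10 can be sketched as a function that shows the language evaluations and the task instruction to an analyzer and parses back a thought process and an action. Everything concrete here is an assumption made for illustration: the prompt wording, the `ACTION:` reply convention and the `stub_analyzer` function (a fixed-reply stand-in for an LLM call) are hypothetical and do not come from the paper.

```python
from typing import Callable, Dict, Tuple


def language_policy_improvement(
    q_text: Dict[str, str], task: str, analyze: Callable[[str], str]
) -> Tuple[str, str]:
    """Return (chosen action, thought process c) from language evaluations Q^L."""
    evaluations = "\n".join(f"- {a}: {text}" for a, text in q_text.items())
    prompt = (
        f"Task: {task}\nEvaluations of each action:\n{evaluations}\n"
        "Explain your reasoning, then finish with 'ACTION: <action name>'."
    )
    reply = analyze(prompt)
    # Split on the last marker so the reasoning may itself mention actions.
    thought, marker, action = reply.rpartition("ACTION:")
    action = action.strip()
    if not marker or action not in q_text:
        raise ValueError(f"could not parse a valid action from: {reply!r}")
    return action, thought.strip()


def stub_analyzer(prompt: str) -> str:
    """Hypothetical stand-in for an LLM call; always answers the same way."""
    return "Move Left is the only move that leads to success.\nACTION: Move Left"


q_language = {
    "Move Left": "A good move; the following path reaches the crown.",
    "Move Down": "A dangerous move; reaches the fire and the episode ends.",
}
action, thought = language_policy_improvement(
    q_language, "Reach the crown and avoid all dangers", stub_analyzer
)
```

Rejecting replies that do not name a listed action keeps the new policy inside the action set, which loosely mirrors the constraint that the policy is a distribution over $\mathcal{A}$ in Equ. 10.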

3.3 PRACTICAL IMPLEMENTATION WITH LARGE LANGUAGE MODELS

Section 3 demonstrates the philosophy of NLRL: translating key RL concepts into their natural language counterparts. To implement these concepts in practice, we require a model that can understand, process, and generate language information. Large language models, trained on large-scale corpora of human language and knowledge, are a natural choice to help mimic human behaviors and implement these language RL components.

LLMs as policy ($\pi_L$). Many works have adopted LLMs as the decision-making agent (Wang et al., 2023a; Feng et al., 2023a; Christianos et al., 2023; Yao et al., 2022) with a chain-of-thought process (Wei et al., 2022b). With proper instructions, LLMs can use natural language to describe the underlying thoughts behind their chosen action, akin to human strategic thinking.

LLMs as information extractor and aggregator ($G_1$, $G_2$) for concepts. LLMs can be powerful information summarizers (Zhang et al., 2023), extractors (Xu et al., 2023), and aggregators that help us fuse intermediate changes and future language evaluations for language value function estimates. One core issue is to determine which kind of information we want our LLMs to extract and aggregate. Inspired by work in interpretable RL (Das et al., 2023; Sreedharan et al., 2020; Schut et al., 2023; Hayes & Shah, 2017), we believe the concept can be the core. We adopt the view of Das et al. (2023) that a concept is a general, task-objective-oriented, high-level abstraction grounded in human domain knowledge. For example, in the shortest path-finding problem, the path distance and the available path sets are two concepts that are (1) high-level abstractions of the trajectories, predefined in human prior knowledge, (2) generally applicable over different states, and (3) directly relevant to the final task objective. Given these motivations, LLMs will try to aggregate and extract domain-specific concepts to form the value target information. Such concepts can be predefined by human prior knowledge or proposed by the LLMs themselves.

LLMs as value function approximator ($D_L$, $Q^L$, $V^L$). The key idea of value function approximation (Sutton et al., 1999) is to represent the value function with a parameterized function instead of a table. Today, deep RL typically uses neural networks that take the state as input and output a one-dimensional scalar value. For NLRL, the language value function approximation of $D_L$, $Q^L$, $V^L$ can be naturally handled by (multi-modal) LLMs. LLMs can take in features of the task’s state, such as low-dimensional statistics, text, and images, and output the corresponding language value judgments and descriptions. To train the LLMs, we use the concept extractor/aggregator mentioned above to form MC or TD estimates (Sec. 3.2.1), which can be used to fine-tune the LLMs into better language critics.

LLMs as policy improvement operator ($I$). With the chain-of-thought process and human prior knowledge, LLMs are well suited to determining the most promising action $\pi_{\text{new}}(\cdot \mid s)$ by conducting language analysis $c$ over the correlation between the language evaluation $Q^L_{\pi_{\text{old}}}(s, a)$ and the task objective $T_L$. The underlying idea also aligns with some recent works (Kwon et al., 2023; Rocamonde et al., 2023) that leverage LLMs or vision-language models as the reward, since they can accurately model the correlation.

3.4 DISCUSSIONS OVER OTHER RL CONCEPTS

To illustrate the versatility of the framework, we show several examples of how other fundamental

RL concepts can be framed into NLRL.

TD-λ (Sutton, 1988). Equ. 6 considers the one-step decomposition of the value function, or in

the context of traditional RL, the TD(1) situation. A natural extension is to conduct an n-step
