ChartLlama Paper


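### ChartLlama Paper: Key Points

#### 1. Background and Purpose

**Background:**

With advances in artificial intelligence, and in natural language processing (NLP) in particular, multi-modal large language models have shown strong capabilities on many vision-language tasks. On domain-specific data, however, and on chart understanding in particular, these models often fall short. The main reason is the lack of high-quality multi-modal instruction-tuning datasets.

**Purpose:**

The paper aims to improve chart understanding and generation by creating a high-quality multi-modal instruction-tuning dataset. The dataset is built with GPT-4 through a multi-step data generation process designed to keep it both high-quality and diverse.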

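#### 2. Introduction to ChartLlama

**Definition:**

ChartLlama is a multi-modal large language model designed for chart understanding and generation. It can handle complex chart structures and supports chart-to-text description, chart-to-chart conversion, chart editing, and related tasks.

**Core capabilities:**

1. **Chart description**: generates a detailed text description of a given chart.

2. **Chart extraction**: extracts specific information or data from a chart.

3. **Chart to table**: converts the data shown in a chart into tabular form.

4. **Chart to chart**: converts a chart of one type into another, for example a bar chart into a pie chart.

5. **Text to chart**: generates a chart from a text description.

6. **Chart editing**: applies edits such as changing the chart background or removing grid lines.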

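#### 3. Dataset Creation and Training

**Dataset creation:**

To train ChartLlama, the authors propose a GPT-4-based method for building a high-quality multi-modal instruction-tuning dataset. The method has three main stages:

1. **Chart data generation**: GPT-4 produces tabular data, either from scratch given characteristics such as theme, distribution, and trend, or by referencing existing chart datasets.

2. **Chart figure generation**: GPT-4 writes plotting code (for example with Matplotlib) that renders the data as charts of various types.

3. **Instruction data generation**: GPT-4 writes chart descriptions and question-answer pairs, which are combined with the original or modified chart code to form the instruction-tuning corpus.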

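**Model training:**

Training and evaluation proceed as follows:

1. **Base model**: ChartLlama builds on LLaVA-1.5, an existing multi-modal LLM.

2. **Instruction tuning**: the model is trained on the multi-modal instruction-tuning dataset described above so that it can handle chart-related tasks.

3. **Evaluation**: the model is evaluated on the ChartQA, Chart-to-text, and Chart-extraction benchmarks, and on the authors' own chart dataset, which covers new chart and task types.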

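#### 4. Applications and Outlook

**Applications:**

ChartLlama could be applied in a range of settings, including but not limited to:

- **Business intelligence**: helping companies quickly understand complex data reports and market trends.

- **Education**: supporting the creation of teaching materials and helping students understand statistical data.

- **Journalism**: automatically analyzing and explaining data charts to improve the quality and efficiency of reporting.

- **Research**: helping researchers quickly interpret experimental results and scientific data.

**Outlook:**

As the technology matures, ChartLlama may find use in more fields. Possible research directions include model scalability, multilingual support, and more advanced chart understanding and generation. Making the model easier and more natural to use is another likely research topic.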

ChartLlama: A Multimodal LLM for Chart Understanding and Generation

Yucheng Han 1,2∗, Chi Zhang 2∗†, Xin Chen 2, Xu Yang 3, Zhibin Wang 2, Gang Yu 2, Bin Fu 2, Hanwang Zhang 1

1 Nanyang Technological University, 2 Tencent, 3 Southeast University

1 {yucheng002, hanwangzhang}@ntu.edu.sg

2 {johnczhang, shingxchen, billzbwang, skicyyu, brianfu}@tencent.com

3 xuyang_palm@seu.edu.cn

https://tingxueronghua.github.io/ChartLlama/

Figure 1. Capability demonstration of ChartLlama. An instruction-tuning dataset is created based on our proposed data generation pipeline. We train ChartLlama on this dataset and achieve the abilities shown in the figure. [Panels: Q&A, chart description, chart extraction (converting a figure into a table), chart-to-chart (de-rendering a chart image to Python code using Matplotlib), text-to-chart, and chart editing (for example, changing the background to white and removing the grid lines), with more chart types and abilities indicated.]

Abstract

Multi-modal large language models have demonstrated impressive performance on most vision-language tasks. However, such models generally lack the ability to understand domain-specific data, particularly when it comes to interpreting chart figures. This is mainly due to the lack of relevant multi-modal instruction-tuning datasets. In this article, we create a high-quality instruction-tuning dataset leveraging GPT-4. We develop a multi-step data generation process in which different steps are responsible for generating tabular data, creating chart figures, and designing instruction-tuning data separately. Our method's flexibility enables us to generate diverse, high-quality instruction-tuning data consistently and efficiently while maintaining a low resource expenditure. Additionally, it allows us to incorporate a wider variety of chart and task types not yet featured in existing datasets. Next, we introduce ChartLlama, a multi-modal large language model that we've trained using our created dataset. ChartLlama outperforms all prior methods in ChartQA, Chart-to-text, and Chart-extraction evaluation benchmarks. Additionally, ChartLlama significantly improves upon the baseline in our specially compiled chart dataset, which includes new chart and task types. The results of ChartLlama confirm the value and huge potential of our proposed data generation method in enhancing chart comprehension.

∗ Equal contributions. Work was done when Yucheng Han was a Research Intern at Tencent.

† Corresponding Author.

arXiv:2311.16483v1 [cs.CV] 27 Nov 2023

1. Introduction

In the past year, the field of artificial intelligence has undergone remarkable advancements. A key highlight is the emergence of large language models (LLMs) like GPT-4 [23]. These models [3, 24, 29–31, 35] have demonstrated a remarkable capability to comprehend and generate intricate textual data, opening doors to a myriad of applications in both academia and industry. Taking this progress a step further, the introduction of GPT-4V [33] marked another milestone. It endows LLMs with the ability to interpret visual information, essentially providing them with vision. As a result, they can now extract and analyze data from images, marking a significant evolution in the capacities of these models.

However, despite the achievements and potential of models like GPT-4V, the details of GPT-4V's architecture remain undisclosed. This opacity has raised questions within the academic world about the best practices for designing multi-modal LLMs. Notably, pioneering research initiatives like LLaVA [17, 18] and MiniGPT [4, 40] provide insightful directions in this regard. Their findings suggest that by incorporating visual encoders into existing LLMs and then fine-tuning them on multi-modal instruction-tuning datasets, LLMs can be effectively transformed into multi-modal LLMs. It is noteworthy that these multi-modal datasets are typically derived from established benchmarks, which offers a cost-effective way to accumulate the data required for instruction tuning.

Datasets grounded on established benchmarks, such as COCO [13], have significantly enhanced the ability of multi-modal LLMs to interpret everyday photographs. However, when confronted with specialized visual representations, such as charts, these models reveal a noticeable limitation [16, 33]. Charts are important visual instruments that translate complex data sets into digestible visual narratives, playing a crucial role in facilitating understanding, shaping insights, and efficiently conveying information. Their pervasive presence, from academic publications to corporate presentations, underscores the need to enhance the capability of multi-modal LLMs to interpret charts. Gathering instruction-tuning data specifically for charts, however, presents several challenges. These typically stem from two areas: understanding and generation. An effective chart understanding model should be capable of extracting and summarizing data from various types of charts and making predictions based on this information.

However, most existing datasets [8, 20–22] only support simple question answering or captioning, primarily because they lack detailed chart information and annotations that provide a high-level understanding of the raw data. Their heavy dependence on manual annotation of charts gathered by web crawlers also limits their quality. Thus, previous annotation methods could only produce chart datasets of lower quality with less comprehensive annotations. Compared with chart understanding, generating chart figures is a more challenging task for the model because existing deep-learning-based generation methods [26, 27] struggle to create accurate images from instructions. Using Python code to generate charts seems promising, but it requires corresponding code annotations to supervise the model. Most charts obtained from the web lack detailed annotations, which makes it difficult to annotate their generation code and, in turn, to supervise models in code generation. Together, these issues impede the model's ability to learn chart understanding and generation jointly.

To address this, we introduce an adaptive and innovative data collection approach exclusively tailored to chart understanding and generation. At the heart of our methodology is the strategic employment of GPT-4's robust linguistic and coding capabilities, which facilitate the creation of rich multi-modal datasets. This innovative integration not only optimizes data accuracy but also ensures its wide-ranging diversity. Specifically, our method comprises three main phases:

1) Chart Data Generation. Our strategy for data collection stands out for its flexibility. Rather than limiting data collection to conventional data sources such as the web or existing datasets, we harness the power of GPT-4 to produce synthesized data. By providing specific characteristics such as topics, distributions, and trends, we guide GPT-4 to produce data that is both diverse and precise.

2) Chart Figure Generation. Subsequently, GPT-4's commendable coding skills are utilized to script chart plots using open-source libraries such as Matplotlib, given the data and function documentation. The result is a collection of meticulously rendered charts that span various forms, each accurately representing its underlying data.

3) Instruction Data Generation. Beyond chart rendering, GPT-4 is further employed to interpret and narrate chart content, ensuring a holistic understanding. It is prompted to construct relevant question-answer pairs correlating with the charts. This results in a comprehensive instruction-tuning corpus, amalgamating the narrative texts, question-answer pairs, and source or modified code of the charts.
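As a rough illustration of how the three phases fit together, the sketch below represents one synthetic sample as a table, a Matplotlib script that draws it, and a few instruction pairs. Everything here is an assumption made for illustration: the `ChartSample` class, the `make_plot_code` and `make_instructions` helpers, and the task labels are hypothetical, and the hand-written functions stand in for what the paper delegates to GPT-4. The table values and the two questions are taken from the Figure 3 example.

```python
import json
from dataclasses import dataclass, field


@dataclass
class ChartSample:
    """One synthetic sample: the table, the script that draws it, and Q&A pairs."""
    table: dict          # phase 1 output: column name -> list of values
    plot_code: str = ""  # phase 2 output: Matplotlib source that renders the chart
    instructions: list = field(default_factory=list)  # phase 3 output


def make_plot_code(table: dict, x_col: str, title: str) -> str:
    """Phase 2 stand-in: emit a Matplotlib script for a line chart of the table."""
    lines = [
        "import matplotlib.pyplot as plt",
        f"x = {table[x_col]!r}",
        "fig, ax = plt.subplots(figsize=(10, 6))",
    ]
    for name, values in table.items():
        if name != x_col:
            lines.append(f"ax.plot(x, {values!r}, label={name!r}, marker='o')")
    lines += [
        f"ax.set_xlabel({x_col!r})",
        f"ax.set_title({title!r})",
        "ax.legend(loc='upper right')",
        "fig.savefig('chart.png')",
    ]
    return "\n".join(lines)


def make_instructions(sample: ChartSample) -> list:
    """Phase 3 stand-in: pair each instruction with an answer derived from the sample."""
    return [
        {"task": "chart-to-table",  # hypothetical task label
         "question": "Extract the raw data from the given chart.",
         "answer": json.dumps(sample.table)},
        {"task": "chart-to-chart",  # hypothetical task label
         "question": "Redraw the given chart figure.",
         "answer": sample.plot_code},
    ]


table = {"Year": [2010, 2011], "Amazon": [500, 600], "Siberian": [200, 300]}
sample = ChartSample(table=table)
sample.plot_code = make_plot_code(
    table, "Year", "Comparison of Amazon Rainforest and Siberian Taiga Area")
sample.instructions = make_instructions(sample)

# Syntax check only: the generated script is compiled, not executed, so nothing is drawn.
compile(sample.plot_code, "<chart>", "exec")
print(len(sample.instructions))
```

Because the table and the plotting code are known for every sample, answers to extraction, redrawing, and editing instructions can be derived from them directly instead of being annotated by hand.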

A standout feature of our methodology is its flexibility, which diminishes the potential for bias while simultaneously offering scalability. Building on this robust methodology, we've crafted a benchmark dataset, which is made available for public access. This dataset stands out, not only for its superior quality but also its unparalleled diversity. A comparative analysis of our benchmark against existing datasets can be viewed in Table 1. To showcase the superiority of our benchmark, we introduced a multi-modal Large Language Model (LLM) named ChartLlama trained with our established benchmarks. Our extensive experiments evaluated on multiple existing benchmark datasets show that our model outperforms previous methods with remarkable advantages and considerably less training data. Additionally, ChartLlama is equipped with several unique capabilities, including the ability to support a wider range of chart types, infer across multiple charts, undertake chart de-rendering tasks, and even edit chart figures.

| Datasets | #Chart type | #Chart figure | #Instruction tuning data | #Task type |
|---|---|---|---|---|
| ChartQA [20] | 3 | 21.9K | 32.7K | 1 |
| PlotQA [22] | 3 | 224K | 28M | 1 |
| Chart-to-text [8] | 6 | 44K | 44K | 1 |
| Unichart [21] | 3 | 627K | 7M | 3 |
| StructChart [32] | 3 | 9K | 9K | 1 |
| ChartLlama | 10 | 11K | 160K | 7 |

Table 1. Dataset statistics. Thanks to the flexibility of our data construction method, our proposed dataset supports a wider range of chart types and tasks. We can generate more diverse instruction-tuning data based on specific requirements.
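To put the Table 1 figures side by side, the short calculation below divides each dataset's instruction-tuning sample count by its chart-figure count. It uses only the rounded numbers printed in the table, so the ratios are approximate; the helper name `instructions_per_figure` is introduced here for illustration.

```python
# Figures copied from Table 1: (chart figures, instruction-tuning samples).
datasets = {
    "ChartQA": (21.9e3, 32.7e3),
    "PlotQA": (224e3, 28e6),
    "Chart-to-text": (44e3, 44e3),
    "Unichart": (627e3, 7e6),
    "StructChart": (9e3, 9e3),
    "ChartLlama": (11e3, 160e3),
}


def instructions_per_figure(figures: float, instructions: float) -> float:
    """Average number of instruction-tuning samples attached to one chart figure."""
    return instructions / figures


ratios = {name: instructions_per_figure(*row) for name, row in datasets.items()}

# Print the datasets from the highest to the lowest ratio.
for name, ratio in sorted(ratios.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{name:<14}{ratio:8.1f}")
```

By these numbers, ChartLlama attaches roughly 14.5 instruction samples to each chart figure, second only to the template-generated PlotQA, while using the second-fewest figures of the six datasets.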

Our main contributions are summarized as follows:

• We introduce a novel multi-modal data collection approach specifically designed for chart understanding and generation. The proposed data collection method boasts superior flexibility and scalability, enabling easy migration to different types of charts and various tasks.

• Through our innovative data collection approach, we create a benchmark dataset that stands out in terms of both quality and diversity. We make this dataset publicly available to catalyze further advancements in the field.

• We develop ChartLlama, a multi-modal LLM that not only surpasses existing models on various existing benchmarks but also possesses a diverse range of unique chart understanding and generation capabilities.

2. Related work

2.1. Large Language Model

The series of LLM models, such as GPT-3.5 [24] and GPT-

4 [23], have demonstrated remarkable reasoning and con-

versational capabilities, which have garnered widespread

attention in the academic community. Following closely,

a number of open-source LLM [1, 3, 30, 31, 35] models

emerged, among which Llama [30] and Llama 2 [31] are no-

table representatives. With extensive pre-training on large-

scale datasets and carefully designed instruction datasets,

these models have also showcased similar understanding

and conversational abilities. Subsequently, a series of works

have been developed, aiming to achieve specific function-

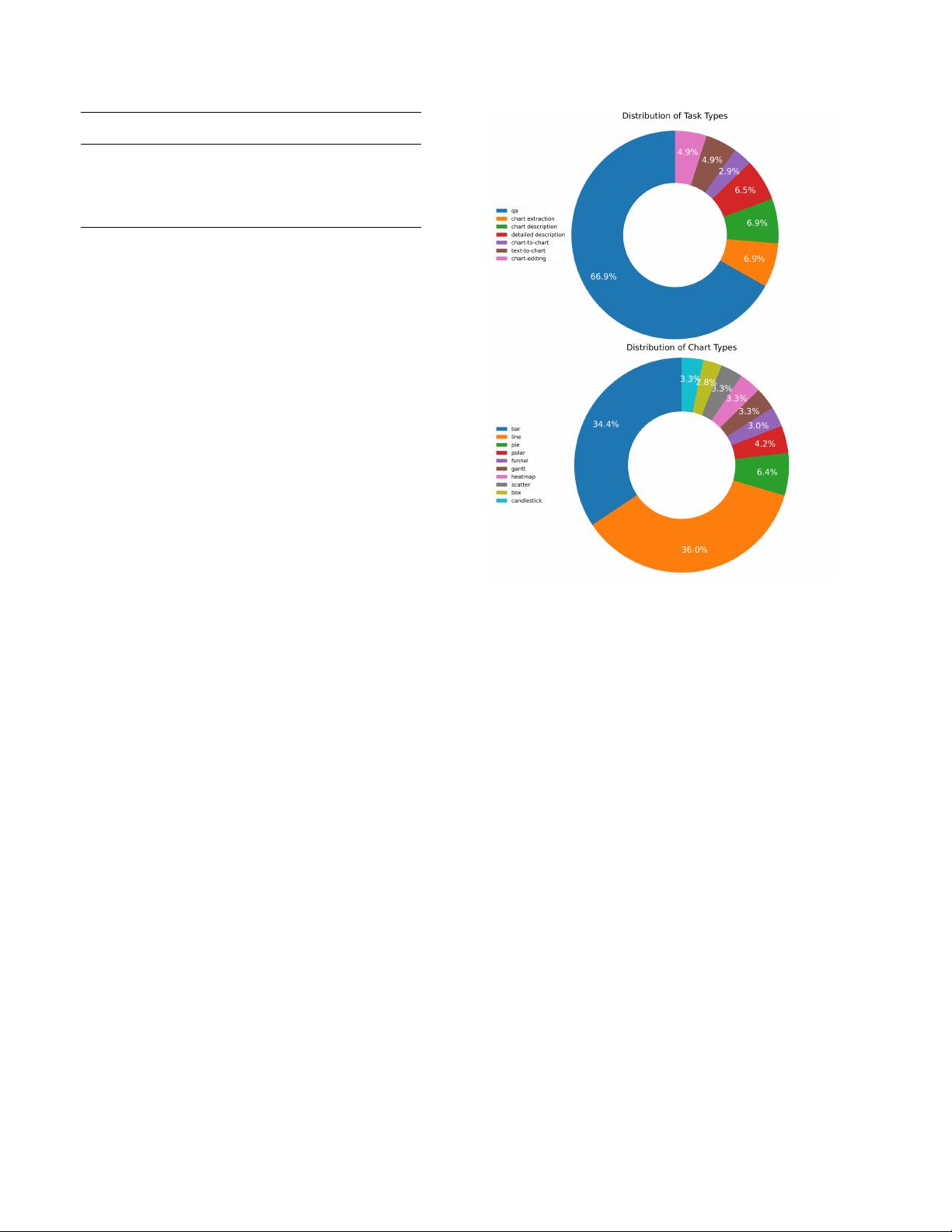

Figure 2. Distributions of different types of data in our dataset.

The top and bottom pie charts show the distribution of task types

and chart types, respectively. (The illustration is generated by our

proposed ChartLlama.)

alities by leveraging efficient supervised fine-tuning algo-

rithms based on the Llama series. Among these influen-

tial works, Alpaca [28] and Vicuna [39] stand out, with Vi-

cuna’s framework serving as the cornerstone for subsequent

multi-modal works.

2.2. Multi-modal Large Language Model

Concurrently, the academic community has witnessed a surge of development in multi-modal LLMs [2, 4, 7, 10–12, 17, 18, 34, 36–38, 40] built upon existing open-source models. Earlier efforts in this domain, such as LLaVA [18], MiniGPT [4], BLIP2 [11], and mPLUG-Owl [34], left significant room for improvement in both performance and functionality. With further exploration of training strategies and an increase in dataset scale, the performance of newer models has steadily improved, reaching levels comparable to GPT-4V on specific evaluation metrics. Notably, LLaVA-1.5 [17], an iterative version of LLaVA, has gained popularity as a baseline due to its user-friendly training framework, superior performance, and data efficiency. Our work is also based on LLaVA-1.5.


Figure 3. Pipeline of our data generation method. The innovative data generation process we proposed consists of three important steps relying on GPT-4. The dataset generated using this process exhibits significant advantages compared to previous datasets in terms of data diversity, quality, the number of chart types, and the variety of tasks. ChartLlama, which is trained on this dataset, has the ability to perform various tasks based on the design of the instruction-tuning data. [Stages shown: Stage 1, Chart Data Generation (input: theme, trend, and other characteristics; output: raw tabular data with a detailed description of the data); Stage 2, Chart Figure Generation (input: the data description, the raw data from Stage 1, and in-context examples; output: a detailed description of the chart and the generated figure); Stage 3, Instruction Data Generation (input: the descriptions and raw data; output: instruction-tuning data covering Q&A, raw data extraction, chart redrawing, drawing from raw data, and chart editing).]

2.3. Chart Understanding

In evaluations such as the report of GPT-4V [33] and HallusionBench [16], it is evident that current multi-modal LLMs still struggle with complex chart-related problems. There are already some datasets [8, 20, 22] available for evaluating models' chart understanding capabilities, mainly divided into two categories, each with its own advantages and disadvantages. One category measures through simple question-and-answer tasks, such as ChartQA [20], which has high-quality questions and answers annotated by humans, and PlotQA [22], which has lower-quality questions and answers generated through templates. The advantage of these datasets lies in their large scale and the ability to generate them through templates. However, their limitations include the difficulty in ensuring the quality of questions and answers, as well as a tendency to focus too much on simple questions about the data in the charts. The other category converts charts into textual descriptions, with Chart-to-text [8] being a representative work in this field. The charts and annotations in these datasets are derived from the real world, ensuring higher quality and encouraging models to delve deeper into the trends and meanings behind the charts. However, the corresponding drawbacks include more noise in the textual annotations and an over-reliance on the BLEU-4 metric.

Previous works focusing on chart understanding tasks can be divided into two main kinds of approaches. One kind uses a single model to understand the charts and answer questions in natural language, for example, [15, 21]. The other kind, such as [14, 32], first utilizes a model to convert the charts into structured data and then analyzes and answers questions based on the structured data using existing large models. In our work, we primarily explore the former kind, aiming to leverage a single model to complete the entire process of chart understanding.

3. Method

In this section, we detail our unique approach to chart understanding and generation. Our method involves three interconnected steps: data collection, chart figure generation, and instruction data generation. We illustrate this process in Fig. 3. These steps are detailed in the following subsections.

3.1. Chart Data Generation

Our primary goal in chart data collection is to collect diverse and high-quality data. We employ two main strategies for this purpose:

1) Data Generation from Scratch Using GPT-4. To collect a diverse and high-quality dataset, we initially generate tabular data from scratch using GPT-4. We instruct GPT-4 to create data tables based on specific themes, distributions, and other characteristics, such as the size of the dataset in terms of rows and columns. This process ensures the creation of data with known and controlled characteristics, which can be essential for generating reliable instruction-answer pairs. Moreover, by managing these characteristics, we can intentionally minimize bias, leading to a more balanced dataset.

2) Synthesizing Data from Existing Chart Datasets. Our second strategy is to synthesize data by referencing existing chart datasets. These datasets already encompass a range of topics and characteristics, providing a solid base for data generation. By prompting GPT-4 with these datasets, we guide it to generate reasonable data that complements its existing knowledge base. This method adds variety to our dataset and improves its overall quality.
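The first strategy, specifying themes, trends, and table size up front, can be pictured as filling a prompt template with controlled characteristics. The sketch below is a minimal illustration only: the template wording, the `build_prompts` helper, and the chosen table size are assumptions, not the prompts used in the paper, and the call that would send each prompt to GPT-4 is omitted. The two themes and two trends are the examples shown in Figure 3.

```python
import itertools

# Example characteristics taken from Figure 3; real pools would be much larger.
THEMES = ["Global average temperature", "Daily traffic"]
TRENDS = ["Rapid increase", "Slow increase"]

# Hypothetical prompt wording, written for this sketch.
PROMPT_TEMPLATE = (
    "Generate a data table in CSV format.\n"
    "Theme: {theme}\n"
    "Trend: {trend}\n"
    "Size: {rows} rows and {cols} columns.\n"
    "Return only the CSV."
)


def build_prompts(themes, trends, rows, cols):
    """Return one prompt per (theme, trend) combination."""
    return [
        PROMPT_TEMPLATE.format(theme=theme, trend=trend, rows=rows, cols=cols)
        for theme, trend in itertools.product(themes, trends)
    ]


prompts = build_prompts(THEMES, TRENDS, rows=8, cols=3)
print(len(prompts))
print(prompts[0])
```

Enumerating the combinations explicitly is one simple way to keep the characteristics of each generated table known in advance, which is the property the text relies on for producing reliable instruction-answer pairs and for balancing the dataset.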

Generating diverse data at scale using the LLM is not an easy task. When the prompt is designed improperly, the model tends to generate repetitive and meaningless data that deviates from the distribution of real-world data and thus
