Going deeper with convolutions

Christian Szegedy

Google Inc.

Wei Liu

University of North Carolina, Chapel Hill

Yangqing Jia

Google Inc.

Pierre Sermanet

Google Inc.

Scott Reed

University of Michigan

Dragomir Anguelov

Google Inc.

Dumitru Erhan

Google Inc.

Vincent Vanhoucke

Google Inc.

Andrew Rabinovich

Google Inc.

Abstract

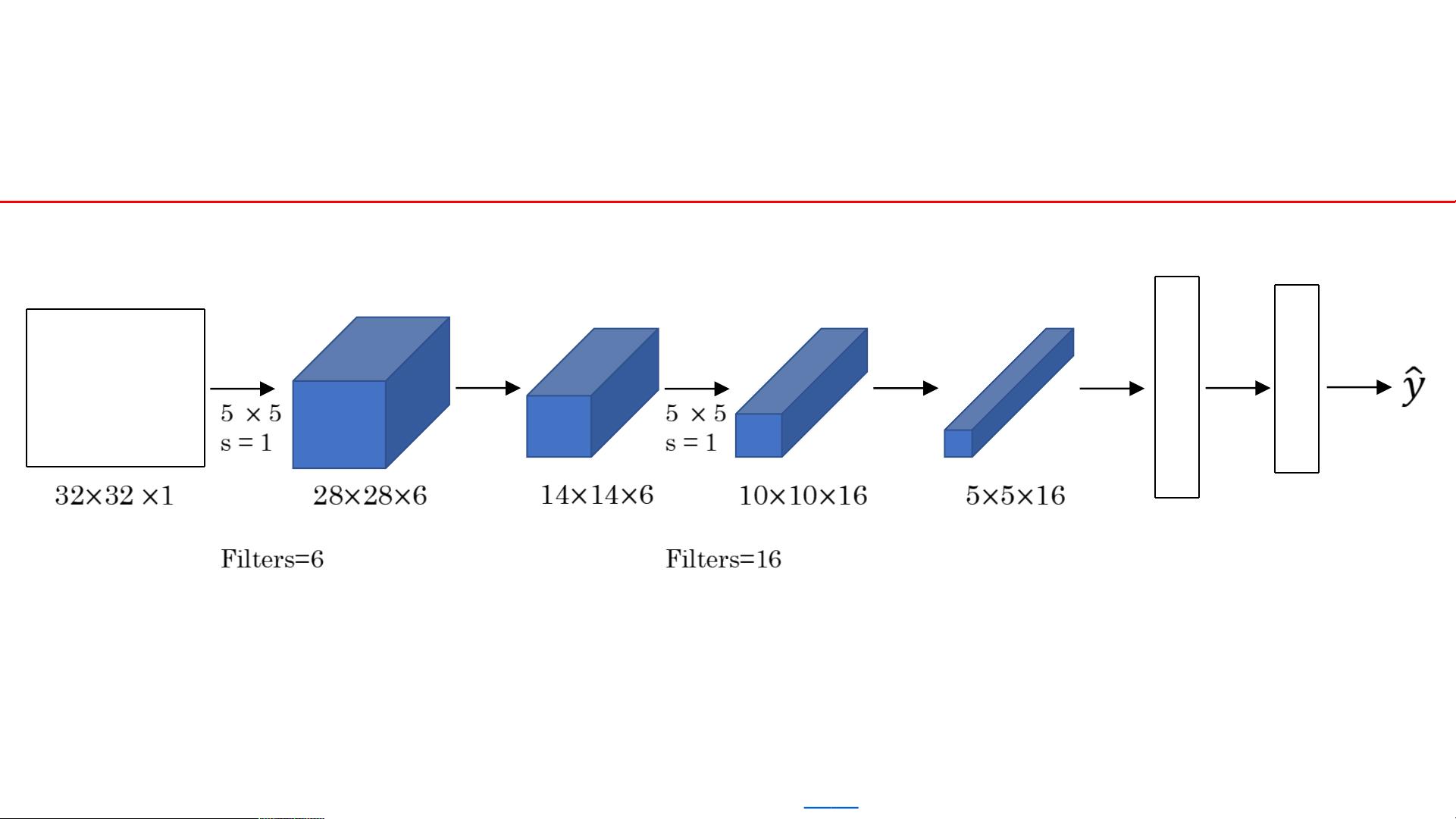

We propose a deep convolutional neural network architecture codenamed Inception, which was responsible for setting the new state of the art for classification and detection in the ImageNet Large-Scale Visual Recognition Challenge 2014 (ILSVRC14). The main hallmark of this architecture is the improved utilization of the computing resources inside the network. This was achieved by a carefully crafted design that allows for increasing the depth and width of the network while keeping the computational budget constant. To optimize quality, the architectural decisions were based on the Hebbian principle and the intuition of multi-scale processing. One particular incarnation used in our submission for ILSVRC14 is called GoogLeNet, a 22-layer deep network, the quality of which is assessed in the context of classification and detection.

1 Introduction

In the last three years, mainly due to the advances of deep learning, and more concretely convolutional networks [10], the quality of image recognition and object detection has been progressing at a dramatic pace. One encouraging piece of news is that most of this progress is not just the result of more powerful hardware, larger datasets and bigger models, but mainly a consequence of new ideas, algorithms and improved network architectures. For example, the top entries in the ILSVRC 2014 competition used no new data sources besides the classification dataset of the same competition for detection purposes. Our GoogLeNet submission to ILSVRC 2014 actually uses 12× fewer parameters than the winning architecture of Krizhevsky et al. [9] from two years ago, while being significantly more accurate. The biggest gains in object detection have not come from the utilization of deep networks alone or bigger models, but from the synergy of deep architectures and classical computer vision, like the R-CNN algorithm by Girshick et al. [6].

Another notable factor is that with the ongoing traction of mobile and embedded computing, the efficiency of our algorithms, especially their power and memory use, gains importance. It is noteworthy that the considerations leading to the design of the deep architecture presented in this paper included this factor rather than having a sheer fixation on accuracy numbers. For most of the experiments, the models were designed to stay within a computational budget of 1.5 billion multiply-adds at inference time, so that they do not end up being a purely academic curiosity, but could be put to real-world use, even on large datasets, at a reasonable cost.
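To make the notion of a multiply-add budget concrete, the following minimal sketch counts the multiply-adds of dense 2-D convolutions and compares the total against the 1.5 billion figure quoted above. The helper name `conv_multiply_adds` and the two layer shapes are illustrative assumptions, not the actual GoogLeNet configuration; bias terms, pooling and non-convolutional layers are ignored.

```python
# Sketch only: the layer shapes below are hypothetical and are not taken
# from the paper; they merely illustrate how a budget check could work.

BUDGET_MULTIPLY_ADDS = 1_500_000_000  # inference-time budget quoted in the text


def conv_multiply_adds(out_h, out_w, in_channels, out_channels, kernel_h, kernel_w):
    """Multiply-adds of one dense 2-D convolution (bias ignored).

    Each output value needs in_channels * kernel_h * kernel_w multiply-adds,
    and there are out_h * out_w * out_channels output values.
    """
    per_output = in_channels * kernel_h * kernel_w
    num_outputs = out_h * out_w * out_channels
    return per_output * num_outputs


# Two hypothetical convolutional layers (assumed shapes).
layers = [
    dict(out_h=112, out_w=112, in_channels=3, out_channels=64, kernel_h=7, kernel_w=7),
    dict(out_h=56, out_w=56, in_channels=64, out_channels=192, kernel_h=3, kernel_w=3),
]

total = sum(conv_multiply_adds(**layer) for layer in layers)
within_budget = total <= BUDGET_MULTIPLY_ADDS

print(f"total multiply-adds: {total:,}")
print(f"within budget: {within_budget}")
```

Summing such per-layer counts over a whole network gives a rough inference cost that can be compared with the budget before any training is done.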

arXiv:1409.4842v1 [cs.CV] 17 Sep 2014