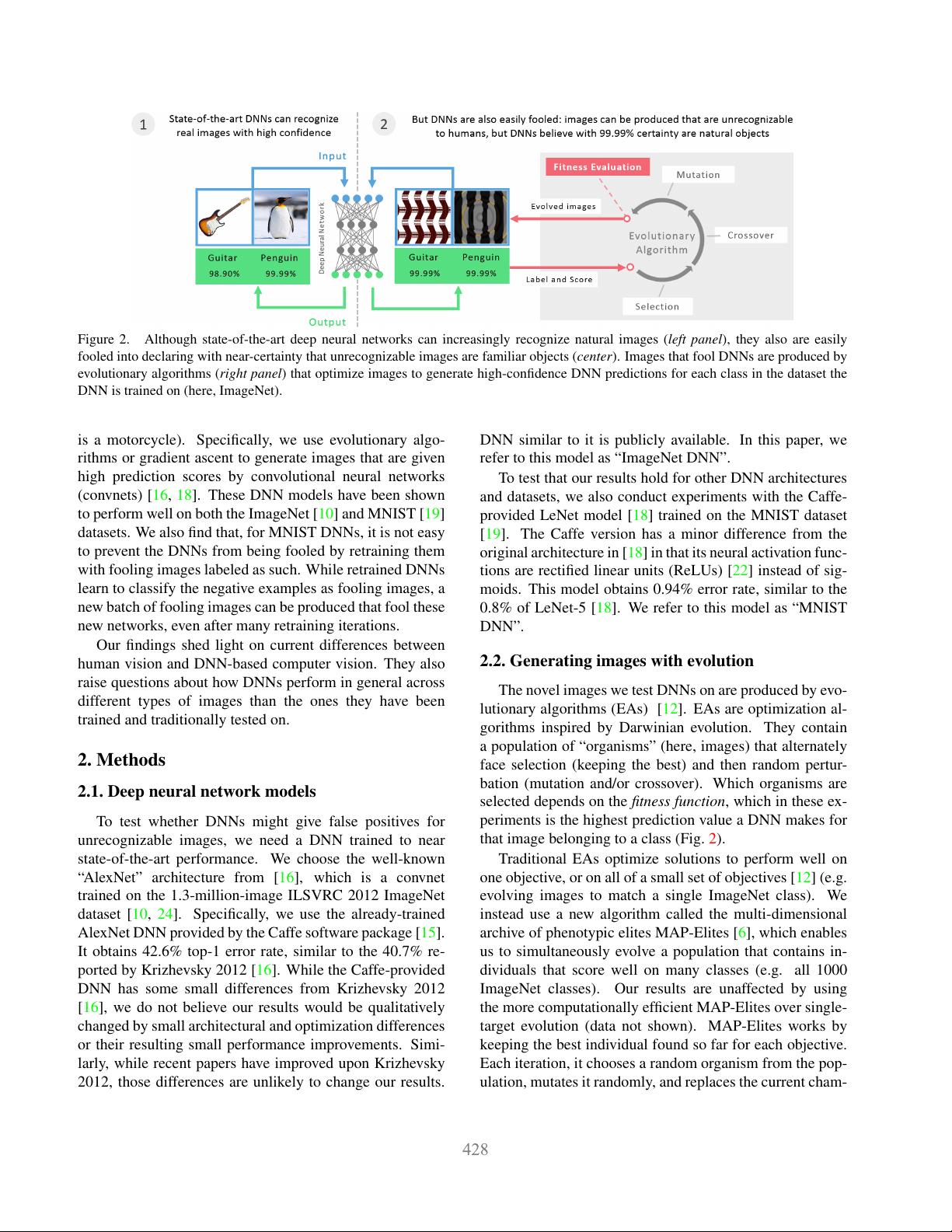

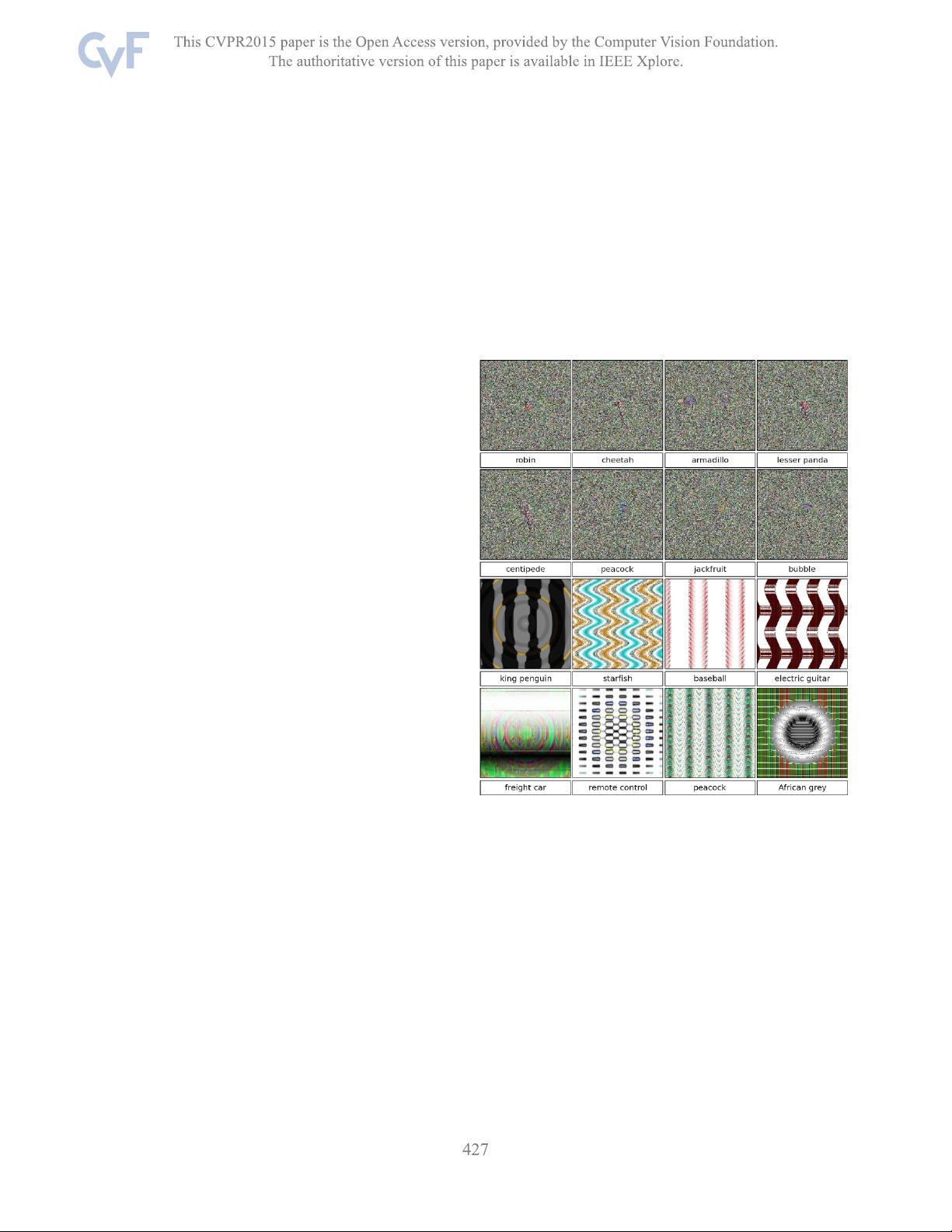

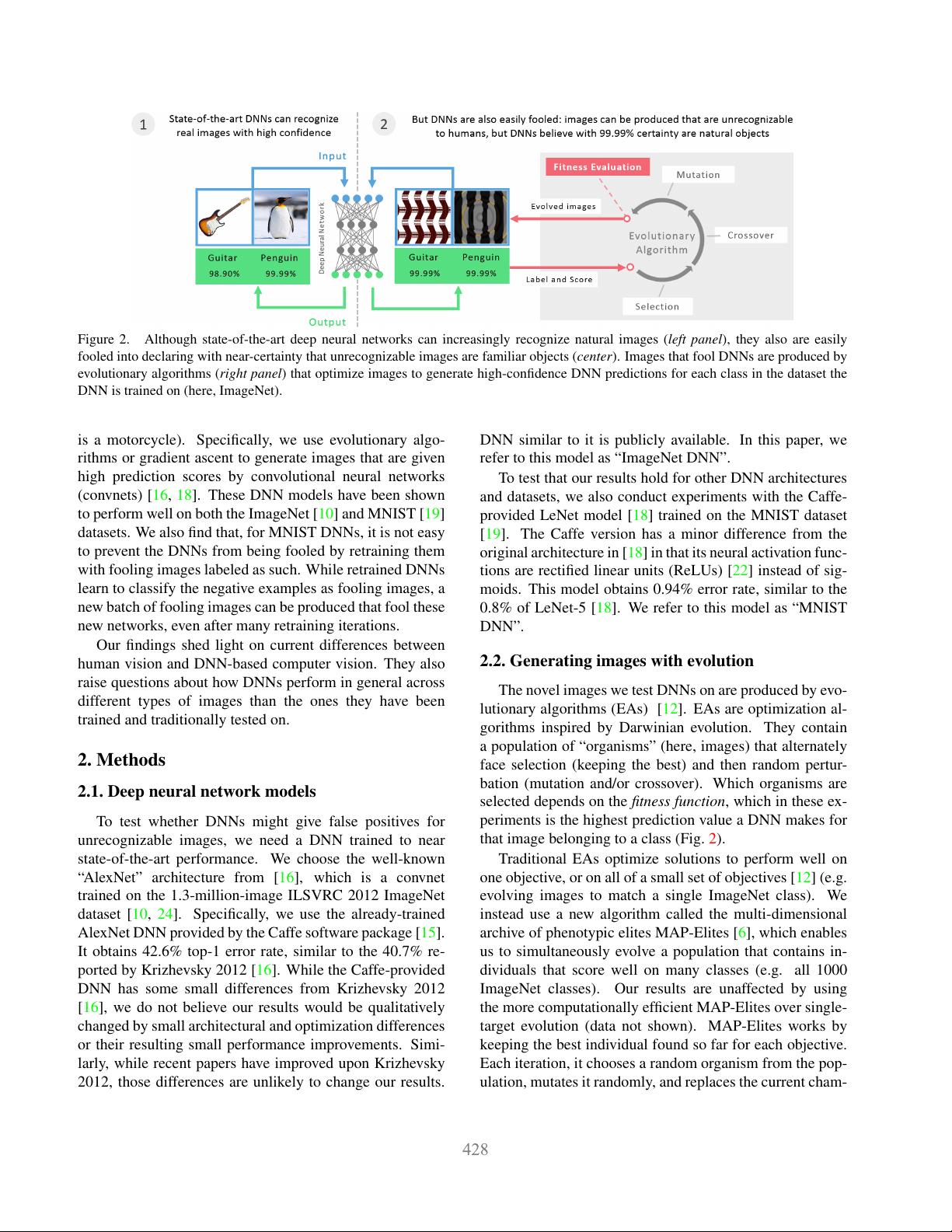

Figure 2. Although state-of-the-art deep neural networks can increasingly recognize natural images (left panel), they also are easily fooled into declaring with near-certainty that unrecognizable images are familiar objects (center). Images that fool DNNs are produced by evolutionary algorithms (right panel) that optimize images to generate high-confidence DNN predictions for each class in the dataset the DNN is trained on (here, ImageNet).

is a motorcycle). Specifically, we use evolutionary algorithms or gradient ascent to generate images that are given high prediction scores by convolutional neural networks (convnets) [16, 18]. These DNN models have been shown to perform well on both the ImageNet [10] and MNIST [19] datasets. We also find that, for MNIST DNNs, it is not easy to prevent the DNNs from being fooled by retraining them with fooling images labeled as such. While retrained DNNs learn to classify the negative examples as fooling images, a new batch of fooling images can be produced that fool these new networks, even after many retraining iterations.

Our findings shed light on current differences between human vision and DNN-based computer vision. They also raise questions about how DNNs perform in general across different types of images than the ones they have been trained and traditionally tested on.

2. Methods

2.1. Deep neural network models

To test whether DNNs might give false positives for unrecognizable images, we need a DNN trained to near state-of-the-art performance. We choose the well-known “AlexNet” architecture from [16], which is a convnet trained on the 1.3-million-image ILSVRC 2012 ImageNet dataset [10, 24]. Specifically, we use the already-trained AlexNet DNN provided by the Caffe software package [15]. It obtains a 42.6% top-1 error rate, similar to the 40.7% reported by Krizhevsky 2012 [16]. While the Caffe-provided DNN has some small differences from Krizhevsky 2012 [16], we do not believe our results would be qualitatively changed by small architectural and optimization differences or their resulting small performance improvements. Similarly, while recent papers have improved upon Krizhevsky 2012, those differences are unlikely to change our results. We chose AlexNet because it is widely known and a trained DNN similar to it is publicly available. In this paper, we refer to this model as “ImageNet DNN”.
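For readers unfamiliar with the metric quoted above, the following is a minimal sketch of how a top-1 error rate can be computed from a matrix of class scores. The function name `top1_error` and the toy scores and labels are illustrative assumptions, not part of the Caffe package or of the evaluation code used for the reported numbers.

```python
import numpy as np

def top1_error(scores, labels):
    """Fraction of examples whose highest-scoring class is not the true label.

    scores: array of shape (n_examples, n_classes), one row of class scores per image.
    labels: array of shape (n_examples,), the true class index of each image.
    """
    predictions = np.argmax(scores, axis=1)  # top-1 predicted class per image
    return float(np.mean(predictions != labels))

# Toy data (made up for illustration): 4 images, 3 classes.
scores = np.array([
    [0.7, 0.2, 0.1],
    [0.1, 0.8, 0.1],
    [0.3, 0.3, 0.4],
    [0.6, 0.3, 0.1],
])
labels = np.array([0, 1, 2, 1])
error = top1_error(scores, labels)  # only the last image is misclassified
```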

To test that our results hold for other DNN architectures and datasets, we also conduct experiments with the Caffe-provided LeNet model [18] trained on the MNIST dataset [19]. The Caffe version has a minor difference from the original architecture in [18] in that its neural activation functions are rectified linear units (ReLUs) [22] instead of sigmoids. This model obtains a 0.94% error rate, similar to the 0.8% of LeNet-5 [18]. We refer to this model as “MNIST DNN”.

2.2. Generating images with evolution

The novel images we test DNNs on are produced by evolutionary algorithms (EAs) [12]. EAs are optimization algorithms inspired by Darwinian evolution. They contain a population of “organisms” (here, images) that alternately face selection (keeping the best) and then random perturbation (mutation and/or crossover). Which organisms are selected depends on the fitness function, which in these experiments is the highest prediction value a DNN makes for that image belonging to a class (Fig. 2).
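The selection-and-mutation loop described above can be sketched as follows. This is a simplified illustration under stated assumptions, not the algorithm or code used in our experiments: `toy_classifier` is a hypothetical stand-in for a trained DNN (a softmax over a fixed random linear map), the truncation selection scheme, the mutation operator, and all parameter values are chosen only for illustration, and crossover is omitted.

```python
import numpy as np

def toy_classifier(image, weights):
    """Hypothetical stand-in for a DNN: softmax over a fixed linear map."""
    logits = weights @ image.ravel()
    logits = logits - logits.max()  # subtract the max for numerical stability
    exp = np.exp(logits)
    return exp / exp.sum()

def fitness(image, weights):
    """Highest prediction value the classifier assigns to any class."""
    return float(toy_classifier(image, weights).max())

def mutate(image, rng, rate=0.1, scale=0.1):
    """Perturb a random subset of pixels with Gaussian noise, clipped to [0, 1]."""
    mask = rng.random(image.shape) < rate
    noise = rng.normal(0.0, scale, size=image.shape)
    return np.clip(image + mask * noise, 0.0, 1.0)

def evolve(weights, rng, pop_size=20, generations=50, shape=(8, 8)):
    """Alternate selection (keep the best half) and mutation of the survivors."""
    population = [rng.random(shape) for _ in range(pop_size)]
    history = []  # best fitness seen at the start of each generation
    for _ in range(generations):
        population.sort(key=lambda img: fitness(img, weights), reverse=True)
        survivors = population[: pop_size // 2]            # selection
        history.append(fitness(survivors[0], weights))
        children = [mutate(img, rng) for img in survivors]  # random perturbation
        population = survivors + children
    best = max(population, key=lambda img: fitness(img, weights))
    return best, history

rng = np.random.default_rng(0)
weights = rng.normal(size=(10, 64))  # 10 made-up classes, 8x8 images
best, history = evolve(weights, rng)
```

Because the survivors are carried over unchanged, the best fitness in this sketch never decreases from one generation to the next. With a real DNN, `toy_classifier` would be replaced by a forward pass of the trained network.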

Traditional EAs optimize solutions to perform well on one objective, or on all of a small set of objectives [12] (e.g. evolving images to match a single ImageNet class). We instead use a new algorithm called the multi-dimensional archive of phenotypic elites (MAP-Elites) [6], which enables us to simultaneously evolve a population that contains individuals that score well on many classes (e.g. all 1000 ImageNet classes). Our results are unaffected by using the more computationally efficient MAP-Elites over single-target evolution (data not shown). MAP-Elites works by keeping the best individual found so far for each objective. Each iteration, it chooses a random organism from the population, mutates it randomly, and replaces the current champion for any objective if the new individual has higher fit-
