
Deep Neural Networks for YouTube Recommendations

Paul Covington, Jay Adams, Emre Sargin

Google

Mountain View, CA

{pcovington, jka, msargin}@google.com

ABSTRACT

YouTube represents one of the largest scale and most sophisticated industrial recommendation systems in existence. In this paper, we describe the system at a high level and focus on the dramatic performance improvements brought by deep learning. The paper is split according to the classic two-stage information retrieval dichotomy: first, we detail a deep candidate generation model and then describe a separate deep ranking model. We also provide practical lessons and insights derived from designing, iterating and maintaining a massive recommendation system with enormous user-facing impact.

Keywords

recommender system; deep learning; scalability

1. INTRODUCTION

YouTube is the world’s largest platform for creating, sharing and discovering video content. YouTube recommendations are responsible for helping more than a billion users discover personalized content from an ever-growing corpus of videos. In this paper we will focus on the immense impact deep learning has recently had on the YouTube video recommendations system. Figure 1 illustrates the recommendations on the YouTube mobile app home.

Recommending YouTube videos is extremely challenging from three major perspectives:

• Scale: Many existing recommendation algorithms proven to work well on small problems fail to operate on our scale. Highly specialized distributed learning algorithms and efficient serving systems are essential for handling YouTube’s massive user base and corpus.

• Freshness: YouTube has a very dynamic corpus, with many hours of video uploaded per second. The recommendation system should be responsive enough to model newly uploaded content as well as the latest actions taken by the user. Balancing new content with well-established videos can be understood from an exploration/exploitation perspective.

• Noise: Historical user behavior on YouTube is inherently difficult to predict due to sparsity and a variety of unobservable external factors. We rarely obtain the ground truth of user satisfaction and instead model noisy implicit feedback signals. Furthermore, metadata associated with content is poorly structured, without a well-defined ontology. Our algorithms need to be robust to these particular characteristics of our training data.

Figure 1: Recommendations displayed on YouTube mobile app home.

Permission to make digital or hard copies of part or all of this work for personal or classroom use is granted without fee provided that copies are not made or distributed for profit or commercial advantage and that copies bear this notice and the full citation on the first page. Copyrights for third-party components of this work must be honored. For all other uses, contact the owner/author(s).

RecSys ’16, September 15-19, 2016, Boston, MA, USA

© 2016 Copyright held by the owner/author(s).

ACM ISBN 978-1-4503-4035-9/16/09.

DOI: http://dx.doi.org/10.1145/2959100.2959190

In conjunction with other product areas across Google, YouTube has undergone a fundamental paradigm shift towards using deep learning as a general-purpose solution for nearly all learning problems. Our system is built on Google Brain [4], which was recently open-sourced as TensorFlow [1]. TensorFlow provides a flexible framework for experimenting with various deep neural network architectures using large-scale distributed training. Our models learn approximately one billion parameters and are trained on hundreds of billions of examples.

In contrast to the vast amount of research in matrix factorization methods [19], there is relatively little work using deep neural networks for recommendation systems. Neural networks are used for recommending news in [17], citations in [8] and review ratings in [20]. Collaborative filtering is formulated as a deep neural network in [22] and as autoencoders in [18]. Elkahky et al. used deep learning for cross-domain user modeling [5]. In a content-based setting, van den Oord et al. used deep neural networks for music recommendation [21].

The paper is organized as follows: a brief system overview is presented in Section 2. Section 3 describes the candidate generation model in more detail, including how it is trained and used to serve recommendations. Experimental results will show how the model benefits from deep layers of hidden units and additional heterogeneous signals. Section 4 details the ranking model, including how classic logistic regression is modified to train a model predicting expected watch time (rather than click probability). Experimental results will show that hidden layer depth is helpful as well in this situation. Finally, Section 5 presents our conclusions and lessons learned.

2. SYSTEM OVERVIEW

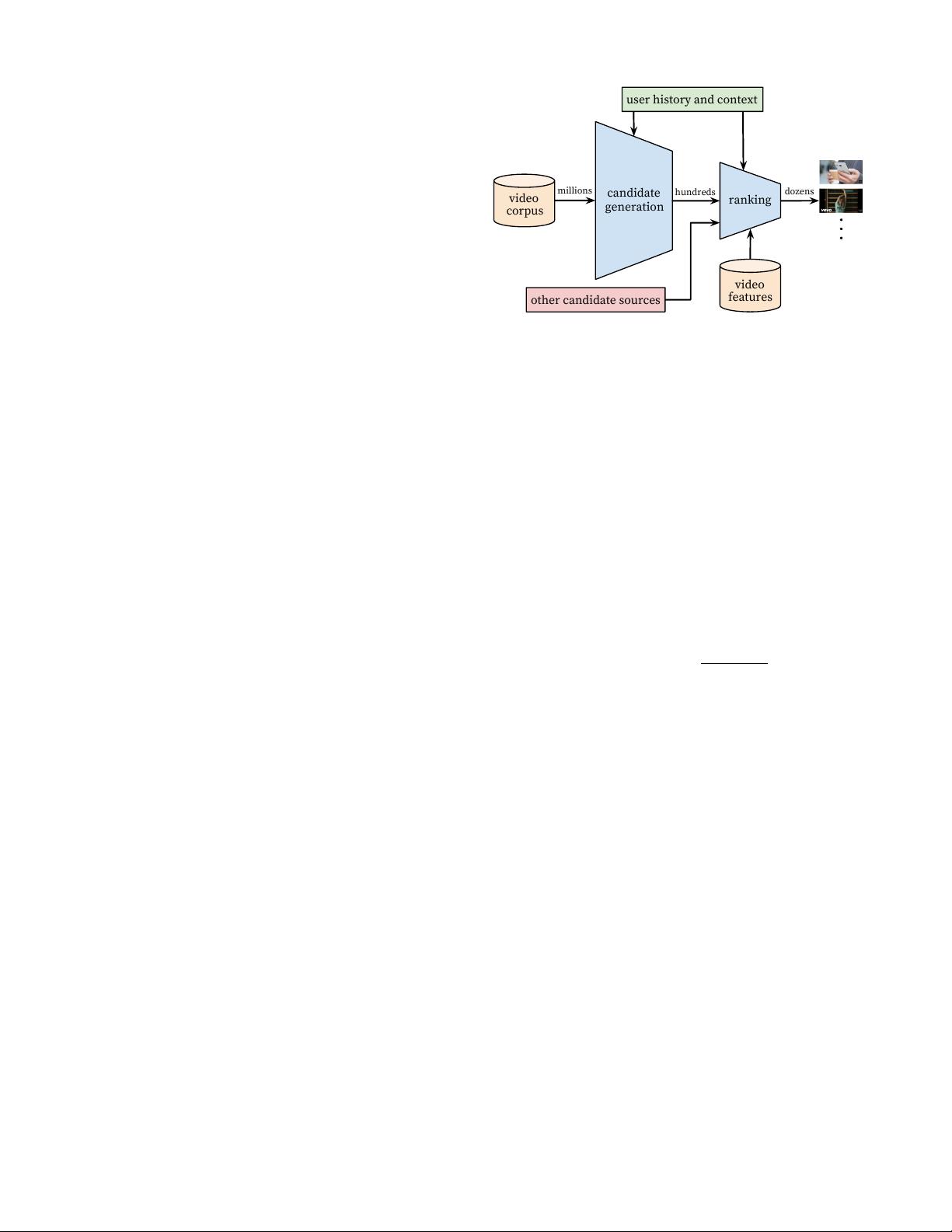

The overall structure of our recommendation system is illustrated in Figure 2. The system is composed of two neural networks: one for candidate generation and one for ranking. The candidate generation network takes events from the user’s YouTube activity history as input and retrieves a small subset (hundreds) of videos from a large corpus. These candidates are intended to be generally relevant to the user with high precision. The candidate generation network only provides broad personalization via collaborative filtering. The similarity between users is expressed in terms of coarse features such as IDs of video watches, search query tokens and demographics.

Presenting a few “best” recommendations in a list requires a fine-level representation to distinguish relative importance among candidates with high recall. The ranking network accomplishes this task by assigning a score to each video according to a desired objective function using a rich set of features describing the video and user. The highest scoring videos are presented to the user, ranked by their score.

The two-stage approach to recommendation allows us to make recommendations from a very large corpus (millions) of videos while still being certain that the small number of videos appearing on the device are personalized and engaging for the user. Furthermore, this design enables blending candidates generated by other sources, such as those described in an earlier work [3].
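A minimal sketch of the two-stage funnel, assuming (for illustration only) that candidate generation scores videos by an inner product between a user vector and video embeddings, and that the ranking stage is an arbitrary scoring function. The names `generate_candidates`, `rank` and `recommend` and all toy numbers are hypothetical; this is not the production system.

```python
import heapq
from typing import Callable, Dict, Iterable, List, Sequence


def dot(a: Sequence[float], b: Sequence[float]) -> float:
    """Inner product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))


def generate_candidates(user_vec: Sequence[float],
                        corpus: Dict[str, Sequence[float]],
                        k: int) -> List[str]:
    """Stage 1 (assumed): keep the k videos whose embeddings score highest
    against the user vector. Brute force here; at corpus scale an approximate
    nearest-neighbor index would likely be needed instead."""
    return heapq.nlargest(k, corpus, key=lambda vid: dot(user_vec, corpus[vid]))


def rank(candidates: Iterable[str],
         score_fn: Callable[[str], float],
         n: int) -> List[str]:
    """Stage 2: score every candidate with a richer model and keep the top n."""
    return sorted(candidates, key=score_fn, reverse=True)[:n]


def recommend(user_vec: Sequence[float],
              corpus: Dict[str, Sequence[float]],
              score_fn: Callable[[str], float],
              other_sources: Iterable[str] = (),
              k: int = 100,
              n: int = 10) -> List[str]:
    candidates = generate_candidates(user_vec, corpus, k)
    # Blend in candidates from other sources, dropping duplicates but keeping order.
    merged = list(dict.fromkeys([*candidates, *other_sources]))
    return rank(merged, score_fn, n)


# Toy usage with made-up 2-d embeddings and made-up ranking scores.
corpus = {"a": [1.0, 0.0], "b": [0.0, 1.0], "c": [0.7, 0.7], "d": [-1.0, 0.0]}
user = [1.0, 0.2]
ranking_scores = {"a": 3.0, "b": 1.0, "c": 5.0, "d": 0.5, "x": 4.0}
print(generate_candidates(user, corpus, k=2))  # ['a', 'c']
print(recommend(user, corpus, ranking_scores.__getitem__,
                other_sources=["x"], k=2, n=2))  # ['c', 'x']
```

Keeping the stages separate is what lets the ranking model be expensive per video: it only sees the few retrieved candidates plus any blended-in sources, never the whole corpus.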

During development, we make extensive use of offline metrics (precision, recall, ranking loss, etc.) to guide iterative improvements to our system. However, for the final determination of the effectiveness of an algorithm or model, we rely on A/B testing via live experiments. In a live experiment, we can measure subtle changes in click-through rate, watch time, and many other metrics that measure user engagement. This is important because live A/B results are not always correlated with offline experiments.
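The paper does not say how precision and recall are computed offline. One common convention, assumed here, evaluates a ranked list at a cutoff k against a held-out set of videos the user later watched; the function name and inputs are illustrative.

```python
from typing import Iterable, Sequence, Tuple


def precision_recall_at_k(recommended: Sequence[str],
                          relevant: Iterable[str],
                          k: int) -> Tuple[float, float]:
    """Precision@k and recall@k for a single user's ranked recommendation list.

    recommended: ranked video ids produced by the model (best first).
    relevant:    held-out video ids the user actually watched (assumed ground truth).
    """
    if k <= 0:
        raise ValueError("k must be positive")
    relevant_set = set(relevant)
    hits = sum(1 for vid in recommended[:k] if vid in relevant_set)
    precision = hits / k
    recall = hits / len(relevant_set) if relevant_set else 0.0
    return precision, recall


p, r = precision_recall_at_k(["a", "b", "c", "d"], ["b", "d", "e"], k=2)
print(p, round(r, 3))  # 0.5 0.333
```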

3. CANDIDATE GENERATION

During candidate generation, the enormous YouTube corpus is winnowed down to hundreds of videos that may be relevant to the user. The predecessor to the recommender described here was a matrix factorization approach trained under rank loss [23]. Early iterations of our neural network model mimicked this factorization behavior with shallow networks that only embedded the user’s previous watches. From this perspective, our approach can be viewed as a nonlinear generalization of factorization techniques.

Figure 2: Recommendation system architecture demonstrating the “funnel” where candidate videos are retrieved and ranked before presenting only a few to the user. [Diagram labels: video corpus (millions) → candidate generation → (hundreds) → ranking → (dozens); other inputs: user history and context, other candidate sources, video features.]
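To make the "nonlinear generalization" remark concrete, the sketch below contrasts a linear, factorization-style user vector with one produced by ReLU layers over the same input. How the shallow networks combined previous watches is not stated here, so averaging the watch embeddings is an assumption, as are all names and numbers.

```python
from typing import Dict, List, Sequence

Vector = List[float]
Matrix = List[List[float]]


def matvec(m: Matrix, x: Sequence[float]) -> Vector:
    return [sum(w * xi for w, xi in zip(row, x)) for row in m]


def relu(x: Sequence[float]) -> Vector:
    return [max(0.0, xi) for xi in x]


def average_watches(watches: Sequence[str], emb: Dict[str, Vector]) -> Vector:
    # Assumption for illustration: the watch history is summarized by
    # averaging the embeddings of the watched videos.
    dim = len(emb[watches[0]])
    return [sum(emb[w][d] for w in watches) / len(watches) for d in range(dim)]


def factorization_user_vector(watches: Sequence[str], emb: Dict[str, Vector]) -> Vector:
    """Shallow, factorization-like user vector: a linear function of the watches."""
    return average_watches(watches, emb)


def deep_user_vector(watches: Sequence[str], emb: Dict[str, Vector],
                     layers: List[Matrix]) -> Vector:
    """Nonlinear generalization: feed the same input through ReLU hidden layers.
    The last layer is left linear so u can take any value in R^N."""
    h = average_watches(watches, emb)
    for i, w in enumerate(layers):
        h = matvec(w, h)
        if i < len(layers) - 1:
            h = relu(h)
    return h


emb = {"a": [1.0, 0.0], "b": [0.0, 1.0]}
identity = [[1.0, 0.0], [0.0, 1.0]]
# A single identity layer recovers the factorization-style vector.
print(deep_user_vector(["a", "b"], emb, [identity]))  # [0.5, 0.5]
print(factorization_user_vector(["a", "b"], emb))     # [0.5, 0.5]
# A hidden ReLU layer makes the mapping nonlinear.
mixer = [[1.0, -1.0], [-1.0, 1.0]]
print(deep_user_vector(["a"], emb, [mixer, identity]))  # [1.0, 0.0]
```

Without the ReLU, the same two layers would map watch "a" to [1.0, -1.0]; the clipped result is the kind of mapping a purely linear factorization cannot express.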

3.1 Recommendation as Classification

We pose recommendation as extreme multiclass classification where the prediction problem becomes accurately classifying a specific video watch $w_t$ at time $t$ among millions of videos $i$ (classes) from a corpus $V$ based on a user $U$ and context $C$,

$$P(w_t = i \mid U, C) = \frac{e^{v_i u}}{\sum_{j \in V} e^{v_j u}}$$

where $u \in \mathbb{R}^N$ represents a high-dimensional “embedding” of the user, context pair and the $v_j \in \mathbb{R}^N$ represent embeddings of each candidate video. In this setting, an embedding is simply a mapping of sparse entities (individual videos, users etc.) into a dense vector in $\mathbb{R}^N$. The task of the deep neural network is to learn user embeddings $u$ as a function of the user’s history and context that are useful for discriminating among videos with a softmax classifier.
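The softmax above can be evaluated directly for a toy corpus. This sketch assumes plain Python lists as embeddings and made-up values; it is a literal transcription of the formula, not a training procedure.

```python
import math
from typing import Dict, Sequence


def softmax_over_corpus(u: Sequence[float],
                        video_emb: Dict[str, Sequence[float]]) -> Dict[str, float]:
    """P(w_t = i | U, C) = exp(v_i . u) / sum_{j in V} exp(v_j . u) for every video i in V."""
    logits = {vid: sum(a * b for a, b in zip(v, u)) for vid, v in video_emb.items()}
    # Subtracting the largest logit before exponentiating avoids overflow and
    # cancels between numerator and denominator, so probabilities are unchanged.
    top = max(logits.values())
    exps = {vid: math.exp(z - top) for vid, z in logits.items()}
    total = sum(exps.values())
    return {vid: e / total for vid, e in exps.items()}


# Toy 2-d embeddings (made up); u would come from the user's history and context.
u = [1.0, 0.0]
videos = {"a": [1.0, 0.0], "b": [0.0, 1.0], "c": [-1.0, 0.0]}
probs = softmax_over_corpus(u, videos)
print({vid: round(p, 3) for vid, p in probs.items()})  # {'a': 0.665, 'b': 0.245, 'c': 0.09}
```

Every evaluation touches all |V| video embeddings in the denominator, which is what makes the full softmax expensive with millions of classes.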

Although explicit feedback mechanisms exist on YouTube (thumbs up/down, in-product surveys, etc.), we use the implicit feedback [16] of watches to train the model, where a user completing a video is a positive example. This choice is based on the orders of magnitude more implicit user history available, allowing us to produce recommendations deep in the tail where explicit feedback is extremely sparse.
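As a rough illustration of turning watch logs into implicit-feedback labels, the sketch below keeps only completed watches as positives. The `WatchEvent` record, its fields and the `completion_ratio` threshold are hypothetical; the text only says that completing a video counts as a positive example.

```python
from dataclasses import dataclass
from typing import Iterable, List, Tuple


@dataclass(frozen=True)
class WatchEvent:
    """Hypothetical watch-log record; the field names are illustrative only."""
    user_id: str
    video_id: str
    watched_seconds: float
    duration_seconds: float


def positive_examples(events: Iterable[WatchEvent],
                      completion_ratio: float = 1.0) -> List[Tuple[str, str]]:
    """Return (user_id, video_id) pairs where the user completed the video.

    completion_ratio is a hypothetical threshold: 1.0 requires the whole video
    to be watched; lower values would count near-complete watches as positives.
    """
    return [
        (e.user_id, e.video_id)
        for e in events
        if e.duration_seconds > 0
        and e.watched_seconds >= completion_ratio * e.duration_seconds
    ]


log = [
    WatchEvent("u1", "a", watched_seconds=300, duration_seconds=300),
    WatchEvent("u1", "b", watched_seconds=30, duration_seconds=300),
    WatchEvent("u2", "a", watched_seconds=310, duration_seconds=300),
]
print(positive_examples(log))  # [('u1', 'a'), ('u2', 'a')]
```

Only positives come out of this step; the negatives are supplied by the candidate sampling discussed under Efficient Extreme Multiclass.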

Efficient Extreme Multiclass

To efficiently train such a model with millions of classes, we rely on a technique to sample negative classes from the background distribution (“candidate sampling”) and then correct for this sampling via importance weighting [10]. For each example, the cross-entropy loss is minimized for the true label and the sampled negative classes. In practice, several thousand negatives are sampled, corresponding to a speedup of more than 100 times over traditional softmax. A popular alternative approach is hierarchical softmax [15], but we weren’t