A tutorial on support vector regression 203

Note that the conditions in Theorem 7 are only necessary but

not sufficient. The rules stated above can be useful tools for

practitioners both for checking whether a kernel is an admissible

SV kernel and for actually constructing new kernels. The general

case is given by the following theorem.

Theorem 8 (Schoenberg 1942). A kernel of dot-product type

k(x, x

) = k(x, x

) defined on an infinite dimensional Hilbert

space, with a power series expansion

k(t) =

∞

n=0

a

n

t

n

(27)

is admissible if and only if all a

n

≥ 0.

A slightly weaker condition applies for finite dimensional

spaces. For further details see Berg, Christensen and Ressel

(1984) and Smola,

´

Ov´ari and Williamson (2001).

2.4. Examples

In Sch¨olkopf, Smola and M¨uller (1998b) it has been shown, by

explicitly computing the mapping, that homogeneous polyno-

mial kernels k with p ∈ N and

k(x, x

) =x, x

p

(28)

are suitable SV kernels (cf. Poggio 1975). From this observation

one can conclude immediately (Boser, Guyon and Vapnik 1992,

Vapnik 1995) that kernels of the type

k(x, x

) = (x, x

+c)

p

(29)

i.e. inhomogeneous polynomial kernels with p ∈ N, c ≥ 0 are

admissible, too: rewrite k as a sum of homogeneous kernels and

apply Corollary 3. Another kernel, that might seem appealing

due to its resemblance to Neural Networks is the hyperbolic

tangent kernel

k(x, x

) = tanh(ϑ +κx, x

). (30)

By applying Theorem 8 one can check that this kernel does

not actually satisfy Mercer’s condition (Ovari 2000). Curiously,

the kernel has been successfully used in practice; cf. Scholkopf

(1997) for a discussion of the reasons.

Translation invariant kernels k(x, x

) = k(x − x

) are

quite widespread. It was shown in Aizerman, Braverman and

Rozono´er (1964), Micchelli (1986) and Boser, Guyon and Vap-

nik (1992) that

k(x, x

) = e

−

x −x

2

2σ

2

(31)

is an admissible SV kernel. Moreover one can show (Smola

1996, Vapnik, Golowich and Smola 1997) that (1

X

denotes the

indicator function on the set X and ⊗the convolution operation)

k(x, x

) = B

2n+1

(x − x

) with B

k

:=

k

i=1

1

[

−

1

2

,

1

2

]

(32)

B-splines of order 2n +1, defined by the 2n +1 convolution of

the unit inverval, are also admissible. We shall postpone further

considerations to Section 7 where the connection to regulariza-

tion operators will be pointed out in more detail.

3. Cost functions

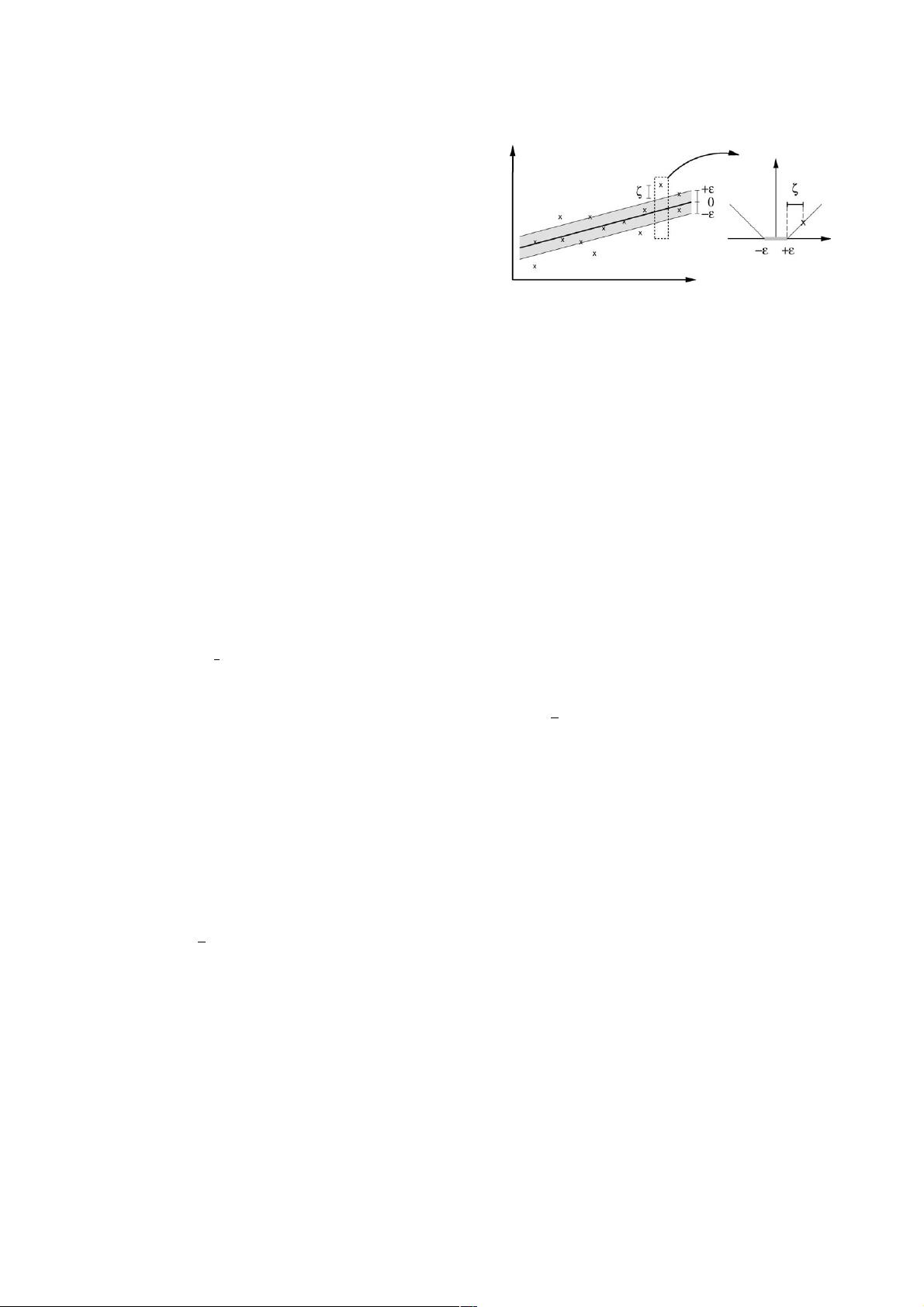

So far the SV algorithm for regression may seem rather strange

and hardly related to other existing methods of function esti-

mation (e.g. Huber 1981, Stone 1985, H¨ardle 1990, Hastie and

Tibshirani 1990, Wahba 1990). However, once cast into a more

standard mathematical notation, we will observe the connec-

tions to previous work. For the sake of simplicity we will, again,

only consider the linear case, as extensions to the nonlinear one

are straightforward by using the kernel method described in the

previous chapter.

3.1. The risk functional

Let us for a moment go back to the case of Section 1.2. There, we

had some training data X :={(x

1

, y

1

),...,(x

, y

)}⊂X × R.

We will assume now, that this training set has been drawn iid

(independent and identically distributed) from some probabil-

ity distribution P(x, y). Our goal will be to find a function f

minimizing the expected risk (cf. Vapnik 1982)

R[ f ] =

c(x, y, f (x))dP(x, y) (33)

(c(x, y, f (x)) denotes a cost function determining how we will

penalize estimation errors) based on the empirical data X.Given

that we do not know the distribution P(x, y)wecanonly use

X for estimating a function f that minimizes R[ f ]. A possi-

bleapproximation consists in replacing the integration by the

empirical estimate, to get the so called empirical risk functional

R

emp

[ f ]:=

1

i=1

c(x

i

, y

i

, f (x

i

)). (34)

A first attempt would be to find the empirical risk minimizer

f

0

:= argmin

f ∈H

R

emp

[ f ] for some function class H .However,

if H is very rich, i.e. its “capacity” is very high, as for instance

when dealing with few data in very high-dimensional spaces,

this may not be a good idea, as it will lead to overfitting and thus

bad generalization properties. Hence one should add a capacity

control term, in the SV case w

2

,which leads to the regularized

risk functional (Tikhonov and Arsenin 1977, Morozov 1984,

Vapnik 1982)

R

reg

[ f ]:= R

emp

[ f ] +

λ

2

w

2

(35)

where λ>0isasocalled regularization constant. Many

algorithms like regularization networks (Girosi, Jones and

Poggio 1993) or neural networks with weight decay networks

(e.g. Bishop 1995) minimize an expression similar to (35).