This article has been accepted for inclusion in a future issue of this journal. Content is final as presented, with the exception of pagination.

IEEE TRANSACTIONS ON CYBERNETICS

Rotational Invariant Dimensionality Reduction Algorithms

Zhihui Lai, Yong Xu, Member, IEEE, Jian Yang, Linlin Shen, and David Zhang, Fellow, IEEE

Abstract—A common intrinsic limitation of traditional subspace learning methods is their sensitivity to outliers and to image variations of the object, since they use the $L_2$ norm as the metric. In this paper, a series of methods based on the $L_{2,1}$-norm are proposed for linear dimensionality reduction. Since the $L_{2,1}$-norm based objective function is robust to image variations, the proposed algorithms can perform robust image feature extraction for classification. We use different ideas to design different algorithms and obtain a unified rotational invariant (RI) dimensionality reduction framework, which extends the well-known graph embedding algorithm framework to a more generalized form. We provide comprehensive analyses to show the essential properties of the proposed algorithm framework. This paper indicates that the optimization problems have global optimal solutions when all the orthogonal projections of the data space are computed and used. Experimental results on popular image datasets indicate that the proposed RI dimensionality reduction algorithms can obtain competitive performance compared with previous $L_2$ norm based subspace learning algorithms.

Index Terms—Dimensionality reduction, image classification, image feature extraction, rotational invariant (RI) subspace learning.
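The claim that the $L_{2,1}$-norm is rotational invariant can be checked numerically. The sketch below is illustrative only. It assumes one common convention: the residual or data matrix is stored as a list of column vectors (one per sample), and $\|E\|_{2,1}$ is the sum of the column Euclidean norms. The helper names `l21_norm`, `l1_norm`, and `rotate2d` are hypothetical and do not come from the paper. Applying the same orthogonal transform to every column leaves the $L_{2,1}$-norm unchanged, whereas the entrywise $L_1$ norm changes.

```python
import math

def l21_norm(columns):
    """L2,1-norm (assumed column convention): sum of the Euclidean norms of the columns."""
    return sum(math.sqrt(sum(v * v for v in col)) for col in columns)

def l1_norm(columns):
    """Entrywise L1 norm: sum of the absolute values of all entries."""
    return sum(abs(v) for col in columns for v in col)

def rotate2d(col, theta):
    """Multiply a 2-D column vector by the rotation matrix R(theta)."""
    c, s = math.cos(theta), math.sin(theta)
    x, y = col
    return [c * x - s * y, s * x + c * y]

# A hypothetical 2 x 3 matrix stored as three column vectors (one per sample).
E = [[3.0, 4.0], [1.0, 0.0], [0.0, -2.0]]

# Apply the same orthogonal transform (a 45-degree rotation) to every column.
theta = math.pi / 4
E_rot = [rotate2d(col, theta) for col in E]

print("L2,1:", l21_norm(E), l21_norm(E_rot))
print("L1:  ", l1_norm(E), l1_norm(E_rot))
```

In this example, the $L_{2,1}$-norm is 8 both before and after the rotation, up to floating-point error. The $L_1$ norm drops from 10 to $7\sqrt{2} \approx 9.90$. This shows that only the former is invariant to a rotation of the data space.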

I. INTRODUCTION

FEATURE extraction and dimensionality reduction methods have received much attention in the past several decades.

Manuscript received May 23, 2015, revised November 11, 2015; accepted May 24, 2016. This work was supported in part by the Natural Science Foundation of China under Grant 61573248, Grant 61203376, Grant 61375012, Grant 61272050, Grant 61362031, Grant 61332011, and Grant 61370163, in part by the General Research Fund of Research Grants Council of Hong Kong under Project 531708, in part by the Science Foundation of Guangdong Province under Grant 2014A030313556, and in part by the Shenzhen Municipal Science and Technology Innovation Council under Grant JCYJ20150324141711637. This paper was recommended by Associate Editor P. Tino.

Z. Lai is with the College of Computer Science and Software Engineering, Shenzhen University, Shenzhen 518060, China, and also with the Hong Kong Polytechnic University, Hong Kong (e-mail: lai_zhi_hui@163.com).

Y. Xu is with the Bio-Computing Research Center and Key Laboratory of Network Oriented Intelligent Computation, Shenzhen Graduate School, Harbin Institute of Technology, Shenzhen 518055, China (e-mail: yongxu@ymail.com).

J. Yang is with the School of Computer Science, Nanjing University of Science and Technology, Nanjing 210094, China (e-mail: csjyang@njust.edu.cn).

L. Shen is with the College of Computer Science and Software Engineering, Shenzhen University, Shenzhen 518060, China (e-mail: llshen@szu.edu.cn).

D. Zhang is with the Biometrics Research Centre, Department of Computing, Hong Kong Polytechnic University, Hong Kong (e-mail: csdzhang@comp.polyu.edu.hk).

Color versions of one or more of the figures in this paper are available online at http://ieeexplore.ieee.org.

Digital Object Identifier 10.1109/TCYB.2016.2578642

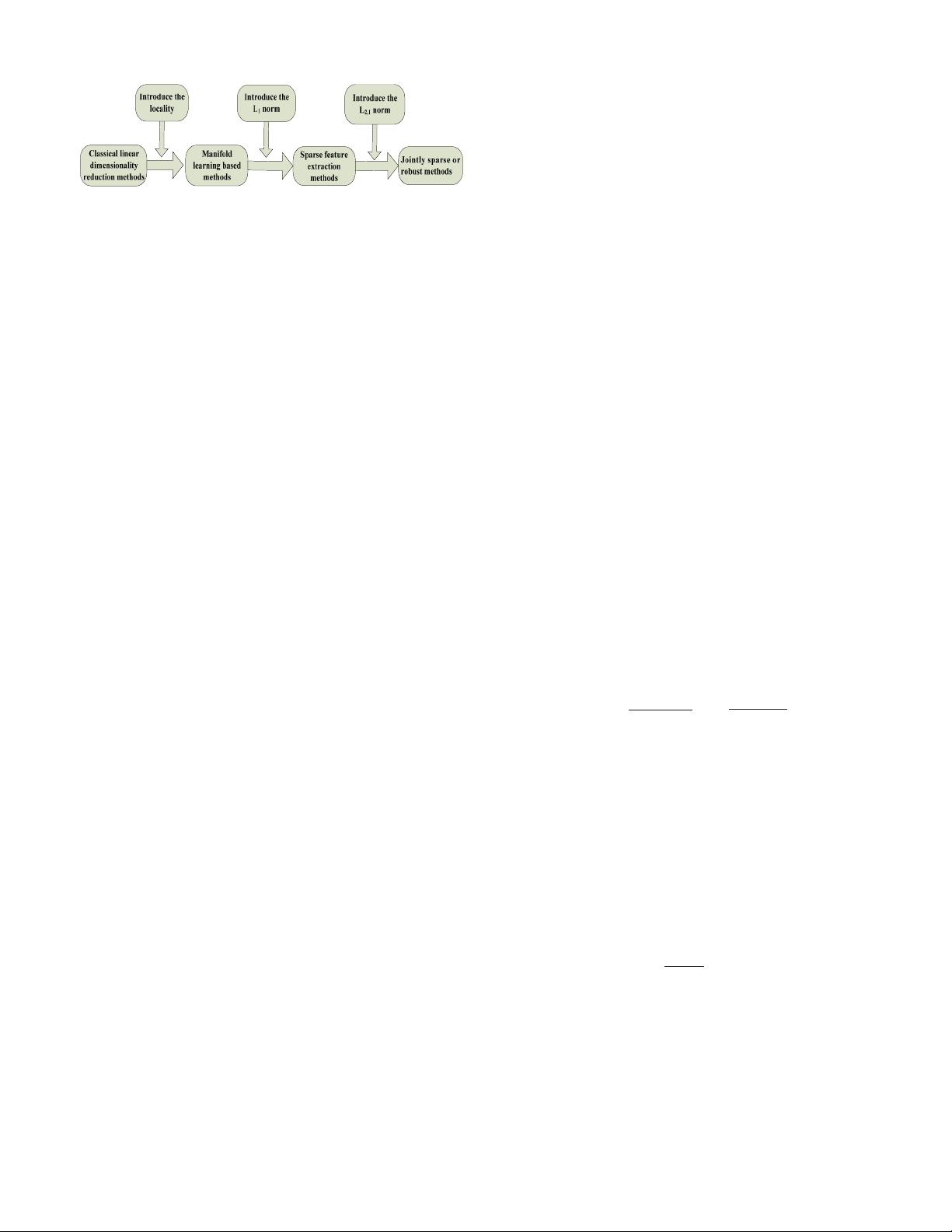

The classical linear dimensionality reduction methods, such as principal component analysis (PCA) [1]–[3] and linear discriminant analysis (LDA) [4] with its variations [5], [6], are widely used in pattern recognition, computer vision, and data mining. It is known that these classical methods (i.e., PCA and LDA) focus only on the global structure of a dataset in dimensionality reduction. With the fast development of manifold learning based techniques [7]–[10], the local geometric structure has been taken into account in designing different linear dimensionality reduction methods. For example, locality preserving projection (LPP, also called Laplacianfaces) [11] and orthogonal LPP [12] were proposed for face recognition. Yan et al. [13] proposed a unified graph embedding framework for linear and nonlinear dimensionality reduction, and marginal Fisher analysis (MFA) and its extension [14] were proposed for face and gait feature extraction.

All the above methods, however, use the $L_2$ or Frobenius norm based metric to characterize the scatter of the dataset; thus, these methods are sensitive to outliers. Recently, other measures such as the $L_1$ norm have been widely explored due to their robustness in different applications. For example, the $L_1$ norm was used in sparse regression [15]–[17], sparse representation classifier design [18], [19], subspace learning [20]–[25], sparse subspace learning [26], [27], and sparse coding for image representation [28]. In addition, the sparse $L_1$ graph was also used in subspace learning, spectral clustering [29], and label propagation [30]. However, one drawback of these $L_1$ norm based methods is that the $L_1$ norm terms are used only for regularization, while the $L_2$ or Frobenius norm terms still dominate the optimization problems. Thus, these methods remain sensitive to outliers to a certain extent in dimensionality reduction.
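The outlier-sensitivity argument can be made concrete by measuring how much of the total loss a single outlier accounts for. The sketch compares two losses. The first is the squared Frobenius norm, the sum of squared residual norms. The second is the $L_{2,1}$-norm, the sum of unsquared residual norms, under the same assumed column convention. The residual values are made up for illustration, and the function names are hypothetical; none of this code comes from the paper.

```python
import math

def col_norm(col):
    """Euclidean (L2) norm of one residual vector."""
    return math.sqrt(sum(v * v for v in col))

def squared_frobenius(columns):
    """Sum of squared column norms, i.e., the squared Frobenius norm."""
    return sum(col_norm(c) ** 2 for c in columns)

def l21(columns):
    """Sum of unsquared column norms, i.e., the L2,1-norm (column convention)."""
    return sum(col_norm(c) for c in columns)

# Hypothetical residuals: nine inliers with unit norm and one outlier with norm 10.
residuals = [[0.6, 0.8] for _ in range(9)] + [[6.0, 8.0]]
outlier = residuals[-1]

# Fraction of each total loss contributed by the single outlier.
share_fro = col_norm(outlier) ** 2 / squared_frobenius(residuals)
share_l21 = col_norm(outlier) / l21(residuals)

print(f"outlier share of squared Frobenius loss: {share_fro:.3f}")  # 0.917
print(f"outlier share of L2,1 loss: {share_l21:.3f}")                # 0.526
```

Squaring amplifies large residuals. As a result, the one outlier contributes about 92% of the squared Frobenius loss but only about 53% of the $L_{2,1}$ loss. It therefore tends to have less influence on a projection that minimizes the latter.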

Although various $L_1$ norm based subspace learning methods, such as those in [25] and [31]–[34], have shown promising performance, these methods still have some unsolved problems. For example, some of them have very high computational costs in computing the (local) optimal solutions, and the theoretical relation between the optimal solutions of $L_1$ norm based methods and those of the traditional/classical methods remains unclear. Recently, a new measure called the rotational invariant (RI) $L_1$ norm, or $L_{2,1}$ norm, has attracted much attention in pattern recognition and computer vision [35], and in multitask learning and tensor factorization [36]. Previous studies show that pure $L_{2,1}$ norm based regression is more robust than $L_1$ norm regression in pattern recognition [37]–[39], and thus it has been widely used in

2168-2267 © 2016 IEEE. Personal use is permitted, but republication/redistribution requires IEEE permission. See http://www.ieee.org/publications_standards/publications/rights/index.html for more information.
