Seed-Guided Topic Model for Document Filtering and Classification

CHENLIANG LI and SHIQIAN CHEN, Wuhan University, China

JIAN XING, Hithink RoyalFlush Information Network Co., Ltd, China

AIXIN SUN, Nanyang Technological University, Singapore

ZONGYANG MA, Microsoft (China) Co., Ltd, China

One important necessity is to filter out the irrelevant information and organize the relevant information into meaningful categories. However, developing text classifiers often requires a large number of labeled documents as training examples. Manually labeling documents is costly and time-consuming. More importantly, it becomes unrealistic to know all the categories covered by the documents beforehand. Recently, a few methods have been proposed to label documents by using a small set of relevant keywords for each category, known as dataless text classification. In this article, we propose a seed-guided topic model for dataless text filtering and classification (named DFC). Given a collection of unlabeled documents, and for each specified category a small set of seed words that are relevant to the semantic meaning of the category, DFC filters out the irrelevant documents and classifies the relevant documents into the corresponding categories through topic influence. DFC models two kinds of topics: category-topics and general-topics. Also, there are two kinds of category-topics: relevant-topics and irrelevant-topics. Each relevant-topic is associated with one specific category, representing its semantic meaning. The irrelevant-topics represent the semantics of the unknown categories covered by the document collection. And the general-topics capture the global semantic information. DFC assumes that each document is associated with a single category-topic and a mixture of general-topics. A novelty of the model is that DFC learns the topics by exploiting the explicit word co-occurrence patterns between the seed words and regular words (i.e., non-seed words) in the document collection. A document is then filtered, or classified, based on its posterior category-topic assignment. Experiments on two widely used datasets show that DFC consistently outperforms the state-of-the-art dataless text classifiers for both classification with filtering and classification without filtering. In many tasks, DFC can also achieve comparable or even better classification accuracy than the state-of-the-art supervised learning solutions. Our experimental results further show that DFC is insensitive to the tuning parameters. Moreover, we conduct a thorough study about the impact of seed words for existing dataless text classification techniques. The results reveal that it is not using more seed words but the document coverage of the seed words for the corresponding category that affects the dataless classification performance.

CCS Concepts: • Information systems → Document topic models; Clustering and classification;

Additional Key Words and Phrases: Topic model, dataless classification, document filtering

ACM Reference format:

Chenliang Li, Shiqian Chen, Jian Xing, Aixin Sun, and Zongyang Ma. 2018. Seed-Guided Topic Model for Document Filtering and Classification. ACM Trans. Inf. Syst. 37, 1, Article 9 (December 2018), 37 pages. https://doi.org/10.1145/3238250

This article is an extended version of Reference [28], a paper presented at the 25th International ACM CIKM Conference (Indianapolis, IN, Oct. 24–28, 2016).

This research was supported by National Natural Science Foundation of China (Grant No. 61502344), Natural Science Foundation of Hubei Province (Grant No. 2017CFB502), Natural Scientific Research Program of Wuhan University (Grants No. 2042017kf0225 and No. 2042016kf0190), Academic Team Building Plan for Young Scholars from Wuhan University (Grant No. Whu2016012) and Singapore Ministry of Education Academic Research Fund Tier 2 (Grant No. MOE2014-T2-2-066).

Authors’ addresses: C. Li (corresponding author) and S. Chen, Wuhan University, School of Cyber Science and Engineering, Bayi Road, Wuhan, Hubei, 430075, China; emails: {cllee, sqchen}@whu.edu.cn; J. Xing, Hithink RoyalFlush Information Network Co., Ltd, Hangzhou, Zhejiang, 310023, China; email: xingjian@myhexin.com; A. Sun, Nanyang Technological University, School of Computer Science and Engineering, 639798, Singapore; email: axsun@ntu.edu.sg; Z. Ma, Microsoft (China) Co., Ltd, Soochow, 215123, China; email: mzyone@gmail.com.

Permission to make digital or hard copies of all or part of this work for personal or classroom use is granted without fee provided that copies are not made or distributed for profit or commercial advantage and that copies bear this notice and the full citation on the first page. Copyrights for components of this work owned by others than ACM must be honored. Abstracting with credit is permitted. To copy otherwise, or republish, to post on servers or to redistribute to lists, requires prior specific permission and/or a fee. Request permissions from permissions@acm.org.

© 2018 ACM 1046-8188/2018/12-ART9 $15.00

https://doi.org/10.1145/3238250

ACM Transactions on Information Systems, Vol. 37, No. 1, Article 9. Publication date: December 2018.

1 INTRODUCTION

With the advance of information technology, the tremendous amount of textual information generated every day is far beyond what people can manage manually. The recent prevalence of social media further exacerbates this information overload, because rich information about various kinds of events, user opinions, and daily life activities is generated at an unprecedented speed. This timely information breeds new and dynamic information needs everywhere. For example, a data analyst might need to track an emerging event by using a few relevant keywords [36]. A data-driven company often needs to conduct a focused and deep analysis on the documents of the specified categories. Within these semantic applications, one fundamental task is to filter out irrelevant information and organize relevant information into meaningful topical categories.

During the past decade, text classiers have become important tools in managing and analyz-

ing large document collections. Text classication refers to the task of assigning category labels

to documents based on their semantics. Due to its wide usage, text classication has been studied

intensively for many years. Existing solutions are mainly based on supervised learning techniques

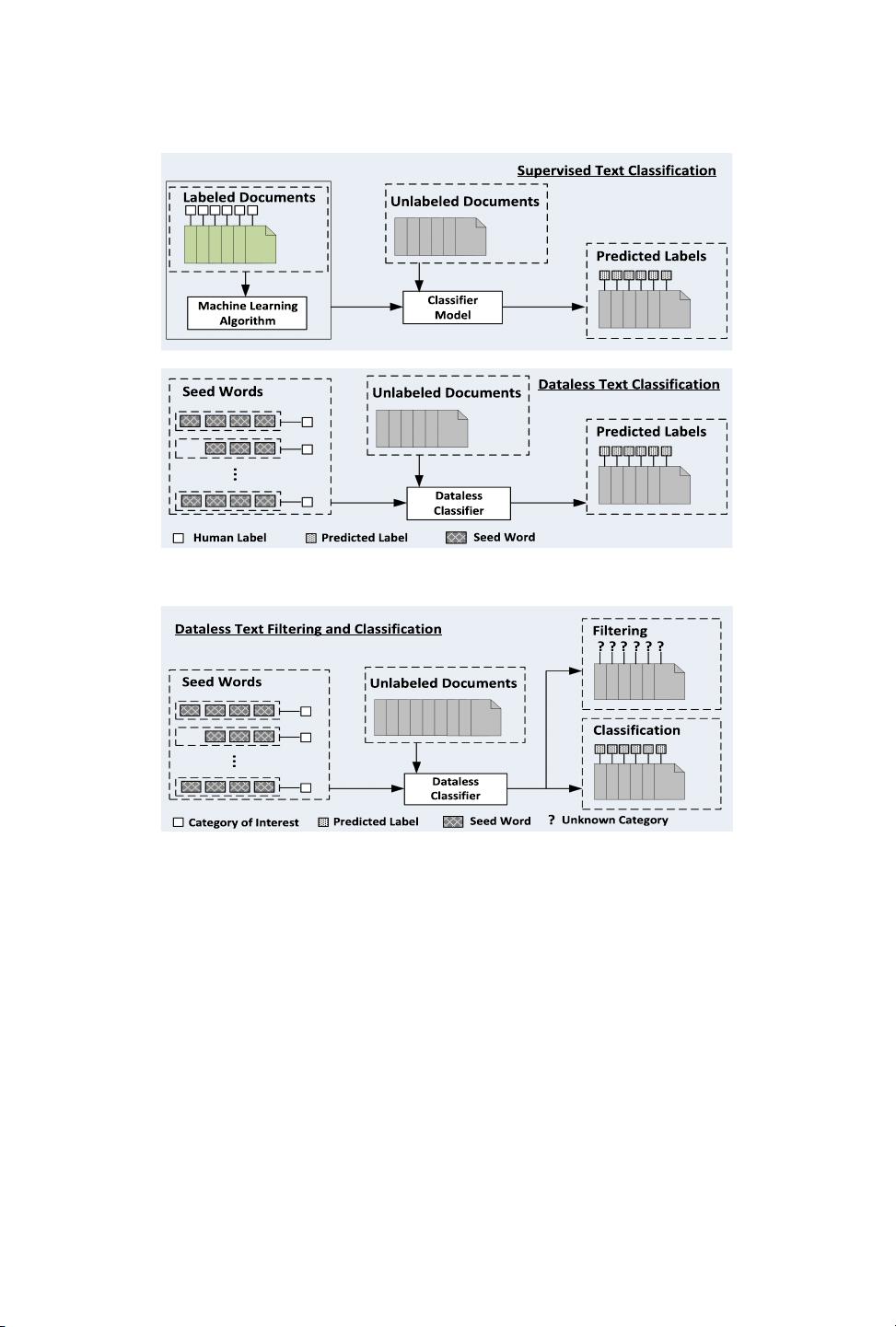

that require tremendous human eort in annotating documents as labeled examples, as shown in

the upper part of Figure 1. To reduce the labeling eort, many semi-supervised algorithms have

been proposed for text classication [7, 34]. Considering the diversity of the documents in many

applications, constructing a relatively small training set required by the semi-supervised algo-

rithms remains very expensive. Recently, a number of dataless text classication methods have

been proposed [7, 9, 13, 14, 18, 21, 22, 28, 29, 38]. Instead of using labeled documents as training

examples, dataless methods only require a small set of relevant words for each category or label-

ing the topics learned from a standard LDA model [3], to build text classiers. As illustrated in the

lower part of Figure 1, dataless classiers do not require labeled documents, which saves a lot of

human eorts. It has been reported that a speed-up of up to ve times can be achieved to build a

dataless text classier with indistinguishable performance to a supervised classier, by assuming

that labeling a word is ve times faster than labeling a document [14]. These promising results sug-

gest that dataless text classication is a practical alternative to the supervised approaches, when

constructing the training documents is not an easy task. More importantly, the labeled documents

produced by a dataless classier can also be used as training examples to learn supervised text

classiers if necessary [28]. However, these existing dataless classication techniques do not con-

sider document ltering. That is, we like to retrieve all the documents relevant to a specied set

of categories from a given document collection, and organize these relevant documents into the

corresponding categories. With the existing dataless classiers, we need to provide all the cate-

gories and the corresponding seed words covered by the document collection. Unfortunately, it is

often unrealistic to foresee all possible categories covered by a document collection, since the doc-

uments streamed in are likely to cover dynamic topics. In an extreme case, the number of possible

categories covered by documents could be potentially limitless.

Fig. 1. Supervised vs. dataless text classification.

Fig. 2. Illustration for dataless filtering and classification.

In this study, we aim to devise a dataless algorithm for the task of filtering and classifying documents into categories of interest. Figure 2 provides an illustration for this dataless filtering and classification task. Specifically, given a document collection of $D$ documents and $C$ categories of interest, where each category $c$ is defined by a small set of seed words $S_c$, the task is to filter out the documents irrelevant to any of the $C$ categories, and to classify the relevant documents into $C$ categories, without using any labeled documents. We also call this task dataless classification with filtering.
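To make the inputs and outputs of this task concrete, the following is a minimal sketch of a naive seed-matching baseline. It is not DFC: it only illustrates the interface (unlabeled documents plus a seed-word set per category in, a category label or "filtered out" per document). The function names, the toy tokenizer, and the example documents are all hypothetical.

```python
from typing import Dict, List, Optional, Set


def tokenize(text: str) -> List[str]:
    """Lowercase whitespace tokenizer; a stand-in for real preprocessing."""
    return [tok.strip(".,;:!?\"'()") for tok in text.lower().split()]


def seed_match_label(doc: str, seeds: Dict[str, Set[str]]) -> Optional[str]:
    """Return the category whose seed words occur most often in `doc`,
    or None (document filtered out) when no seed word occurs at all."""
    tokens = tokenize(doc)
    counts = {cat: sum(tok in words for tok in tokens) for cat, words in seeds.items()}
    best = max(counts, key=counts.get)
    return best if counts[best] > 0 else None


# Hypothetical seed words S_c for C = 2 categories of interest.
seeds = {"automobile": {"car", "engine"}, "finance": {"stock", "bank"}}
docs = [
    "The car has a new engine.",
    "The bank raised its stock forecast.",
    "The recipe needs two eggs.",
]
labels = [seed_match_label(d, seeds) for d in docs]
print(labels)  # ['automobile', 'finance', None]
```

A baseline like this misses every relevant document that happens to contain no seed word, which is the gap that learning from word co-occurrence is meant to close.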

Human beings can quickly learn to distinguish whether a document belongs to a category, based on several relevant keywords about the category. This is because people can learn to build the relevance among the representative words of the category. For example, a person can successfully identify the word “wheel” as relevant to the category automobile after browsing several documents in that category, even if she does not know the meaning of the word “wheel.” The underlying reason is the high co-occurrence between “wheel” and other relevant words like “cars” and “engines.” This relevance learning process is analogous to the unsupervised topic inference process of the standard LDA [3], a probabilistic topic model (PTM) that implicitly infers the hidden topics from the documents based on the higher-order word co-occurrence patterns [40]. However, conventional PTMs like PLSA and LDA are unsupervised techniques that implicitly infer the hidden topics based on word co-occurrences [3, 23]. It is difficult or even infeasible to filter and classify documents in such a purely unsupervised manner.

Inspired by the recent success of the PTM-based dataless text classification techniques [9, 21, 22, 28], in this article, we propose a seed-guided topic model for dataless text filtering and classification (DFC). Given a collection of unlabeled documents, DFC is able to achieve the goal of filtering and classification by taking only a few semantically relevant words for each category of interest (called “seed words”). To enable document filtering, we model two sets of category-topics: relevant-topics and irrelevant-topics. A one-to-one correspondence between relevant-topics and categories of interest is made. That is, each relevant-topic is associated with one specific category of interest, and vice versa. The relevant-topic is assumed to represent the meaning of that category.¹ Since the documents relevant to the categories of interest could comprise a limited proportion of the whole collection, irrelevant-topics are expected to model other categories covered by the irrelevant documents. In our earlier work [28], we proposed a topic-model-based dataless classification technique (named STM) that uses a set of general-topics to model the general semantics of the whole document collection. Although the modeling of general and specific aspects of documents was studied previously for information retrieval [8], it had been overlooked for dataless text classification in previous studies [9, 21, 22]. Our earlier work has shown that this model setting is beneficial for dataless classification performance. Following this modeling strategy, in DFC, we also utilize a set of general-topics to represent the general semantic information. The task of filtering and classification is achieved by associating each document with a single category-topic² and a mixture of general-topics. The posterior category-topic assignment is then used to label the document as a category of interest, or an irrelevant one. DFC also subsumes STM under some particular parameter settings. This means that DFC is also able to conduct dataless text classification without filtering.
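The final labeling step described above can be sketched as follows. This is only an illustration of how a posterior over category-topics might be turned into a label; the layout of the posterior vector (relevant-topics first, irrelevant-topics after) and the function name are assumptions made for this example, not details taken from the article.

```python
from typing import List, Optional, Sequence


def label_from_posterior(
    posterior: Sequence[float], categories: List[str]
) -> Optional[str]:
    """Map a document's posterior over category-topics to a label.

    Assumed layout: the first len(categories) entries are relevant-topics
    (one per category of interest); the remaining entries are
    irrelevant-topics. Returns None when an irrelevant-topic has the
    highest posterior, i.e. the document is filtered out.
    """
    best = max(range(len(posterior)), key=lambda k: posterior[k])
    return categories[best] if best < len(categories) else None


categories = ["automobile", "finance"]
# Two relevant-topics followed by two irrelevant-topics.
print(label_from_posterior([0.7, 0.2, 0.1, 0.0], categories))  # automobile
print(label_from_posterior([0.1, 0.2, 0.6, 0.1], categories))  # None
```

With zero irrelevant-topics the function can never return None, which mirrors the remark that the model can also perform classification without filtering.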

Seeking useful supervision from the seed words to precisely infer category-topics and general-topics is vital to the efficacy of DFC. In other words, precise relevance estimation between a word and a category-topic via a small set of seed words is crucial for DFC. It is worth underlining that no seed word can be provided for any irrelevant-topic, because the possible semantic categories covered by a document collection are unknown beforehand. However, precise inference of the irrelevant-topics is essential for guaranteeing classification performance. Without any supervision from corresponding seed words, it is hard for the model to identify the irrelevant-topics successfully, leading to inferior classification performance. Here, we devise a simple but effective mechanism to identify a set of pseudo seed words for each irrelevant-topic. Specifically, we resort to using standard LDA to extract the hidden topics for the document collection in an unsupervised manner. Then, a relevance measure is proposed to calculate the distance between each LDA hidden topic and all the seed words provided for the relevant-topics. After a heuristic procedure to filter out noisy LDA hidden topics, the top topical words from the least relevant LDA hidden topics are then considered as the pseudo seed words for the irrelevant-topics.
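The selection of pseudo seed words can be sketched roughly as below. The relevance measure and the noise-filtering heuristic are not specified in this part of the article, so the sketch substitutes a simple assumption: a topic's relevance is the probability mass it places on the given seed words, and noise filtering is skipped entirely. The topic-word distributions are taken as plain dictionaries that some LDA implementation is assumed to have produced; all names and numbers are hypothetical.

```python
from typing import Dict, List, Set


def pseudo_seed_words(
    topics: List[Dict[str, float]],
    seed_words: Set[str],
    num_irrelevant: int,
    top_n: int,
) -> List[List[str]]:
    """Pick the top words of the topics least related to the seed words."""

    def relevance(topic: Dict[str, float]) -> float:
        # Assumed measure: probability mass the topic puts on seed words.
        return sum(topic.get(w, 0.0) for w in seed_words)

    # Topics with the smallest relevance are treated as irrelevant-topics.
    least_relevant = sorted(topics, key=relevance)[:num_irrelevant]
    result = []
    for topic in least_relevant:
        ranked = sorted(topic, key=topic.get, reverse=True)
        # Real seed words are excluded from the pseudo seed list.
        result.append([w for w in ranked if w not in seed_words][:top_n])
    return result


# Toy topic-word distributions standing in for LDA output.
topics = [
    {"car": 0.5, "engine": 0.3, "wheel": 0.2},
    {"recipe": 0.6, "egg": 0.3, "car": 0.1},
    {"match": 0.5, "goal": 0.4, "engine": 0.1},
]
print(pseudo_seed_words(topics, {"car", "engine"}, num_irrelevant=2, top_n=2))
# [['recipe', 'egg'], ['match', 'goal']]
```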

¹ Category and relevant-topic are considered equivalent and exchangeable in this work when the context has no ambiguity.

² A category-topic refers to either a relevant-topic or an irrelevant-topic in this work when the context is for DFC.

In contrast to the existing dataless classification methods that simply exploit the semantic guidance provided by the seed words in an implicit way (i.e., the word co-occurrence information), we adopt an explicit strategy to estimate the relevance between a word and a category-topic, and also the initial category-topic distribution for each document. The estimated relevance is then utilized to supervise the topic learning process of DFC. In particular, we investigate two mechanisms (i.e., Doc-Rel and Topic-Rel) to estimate the probability of a word being generated by a category-topic, by measuring its correlations to the (pseudo) seed words of that category-topic based on either document-level word co-occurrence or topical-level word co-occurrence information. We call the words that are generated by a category-topic category words.
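As a rough illustration of document-level co-occurrence, the sketch below scores a word against each category by how often it appears in the same documents as that category's seed words. The exact Doc-Rel formula is not given in this part of the article, so the measure used here (the average over seed words of the fraction of the word's documents that also contain the seed, normalized across categories) is an assumption chosen for simplicity, and all names and data are hypothetical.

```python
from typing import Dict, List, Set


def doc_level_relevance(
    docs: List[Set[str]], seeds: Dict[str, Set[str]], word: str
) -> Dict[str, float]:
    """Estimate how strongly `word` relates to each category from
    document-level co-occurrence with that category's seed words.
    Each seed set is assumed to be non-empty."""
    with_word = [d for d in docs if word in d]
    scores = {}
    for cat, seed_set in seeds.items():
        if not with_word:
            scores[cat] = 0.0
            continue
        # Assumed measure: average over seeds of P(seed in doc | word in doc).
        per_seed = [sum(s in d for d in with_word) / len(with_word) for s in seed_set]
        scores[cat] = sum(per_seed) / len(per_seed)
    total = sum(scores.values())
    # Normalize across categories so non-zero scores sum to one.
    return {c: (v / total if total > 0 else 0.0) for c, v in scores.items()}


# Each document is represented as its set of distinct words.
docs = [
    {"car", "wheel", "engine"},
    {"car", "wheel"},
    {"stock", "bank", "wheel"},
    {"stock", "bank"},
]
seeds = {"automobile": {"car", "engine"}, "finance": {"stock", "bank"}}
relevance = doc_level_relevance(docs, seeds, "wheel")
print(relevance)  # "automobile" receives the larger share
```

In this toy collection "wheel" co-occurs with automobile seeds more often than with finance seeds, so it would be treated as a likely category word for automobile.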

In summary, DFC conducts document filtering and classification in a weakly supervised manner, just as humans do in learning to classify documents with just a few words: (i) first, identify the highly relevant documents based on the given seed words of a category; (ii) then, based on these highly relevant documents, collectively identify the category words in addition to the seed words; (iii) next, use both the seed words and the category words to find new relevant documents and new category words. The last step repeats until a global equilibrium is reached.

We conduct extensive experiments on two datasets, Reuters-10 and -20 Newsgroup, and compare DFC with state-of-the-art dataless text classifiers and supervised learning solutions. In terms of classification accuracy measured by $F_1$, our experimental results show that DFC outperforms all the dataless competitors in almost all the tasks and performs better than the supervised classifiers sLDA and SVM in many tasks, for both classification with filtering and classification without filtering. We also conduct a comprehensive performance evaluation to analyze the impact of parameter settings in DFC. The results show that the proposed DFC is robust across a broad range of parameter values, indicating its superiority in real scenarios.

We need to emphasize that all existing dataless text classification techniques rely solely on the weak supervision provided by the seed words. That is, the quality of seed words is a crucial factor in classification performance. It is intuitive that fewer seed words could carry less semantic information for the classification. However, a study on seed words in the paradigm of dataless text classification is still missing. Here, an open question naturally arises: Will using more seed words lead to better classification accuracy? If the answer to this question is no, then what criterion should we use to build a set of seed words for a category? In this work, we conduct a thorough study with the aim of answering these two questions. We find that using more seed words may not produce better classification accuracy. Also, we empirically observe that the more relevant documents a set of seed words covers under a category, the better the classification accuracy that can be obtained. In an extreme case, given two seed words for a category, no performance gain could be obtained by using both seed words over using either one, if the two words always appear together in documents. That is, it is not the number of seed words that matters, but the document coverage for that category. In summary, the main contributions of this article are listed as follows:

(1) We propose and formalize a new task of dataless text filtering and classification. To the best of our knowledge, this is the first work to classify documents into relevant categories of interest, and filter out irrelevant documents in a dataless manner. To enable precise inference of irrelevant-topics, we propose a novel mechanism to identify the pseudo seed words for irrelevant-topics in an unsupervised manner.

(2) DFC does not solely rely on the implicit word co-occurrence patterns to guide the category inference process. Instead, we introduce two mechanisms to estimate the probability of a word being generated by a category-topic. The estimation is based on the explicit word co-occurrence patterns derived from the document collection.

(3) We empirically study the impact of seed words for dataless text classification techniques. Our results suggest that using more seed words may not lead to better classification accuracy. Instead, the document coverage of the selected seed words correlates positively with the classification accuracy.
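The notion of document coverage in contribution (3) can be illustrated with a small sketch. Here coverage is assumed to mean the fraction of a category's documents that contain at least one of the seed words; the article's precise definition may differ, and the function name and toy documents are hypothetical.

```python
from typing import List, Set


def document_coverage(docs: List[Set[str]], seed_words: Set[str]) -> float:
    """Fraction of documents that contain at least one seed word."""
    if not docs:
        return 0.0
    return sum(1 for d in docs if d & seed_words) / len(docs)


# Documents of one category, each represented as its set of distinct words.
category_docs = [
    {"car", "engine", "wheel"},
    {"car", "engine", "road"},
    {"truck", "wheel"},
    {"truck", "road"},
]
print(document_coverage(category_docs, {"car"}))            # 0.5
print(document_coverage(category_docs, {"car", "engine"}))  # 0.5
print(document_coverage(category_docs, {"car", "truck"}))   # 1.0
```

In this toy data "car" and "engine" always appear together, so adding "engine" to the seed set leaves coverage unchanged, whereas adding "truck" reaches documents that "car" alone misses. This is the extreme case described in the text: a larger seed set helps only when it covers more of the category's documents.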
