algorithms for multiview depth video coding based on imbalanced bit rate allocation among different regions [18]. Zhang and Shao respectively proposed depth video coding algorithms based on distortion analyses [19,20].

Accurate and consistent depth video is the foundation of high compression performance in depth video coding. In general, depth acquisition methods include depth extraction from computer graphics content [21], depth from structured light [22], Kinect sensors [23], depth camera systems [24,25] and depth estimation software [26]. Because Kinect sensors rely on the structured light technique, their depth images suffer from temporal flickering, noise, holes, and edges that are inconsistent between the depth and color images [23]. In depth camera systems, depth video is captured based on the time-of-flight principle [24,25]. The captured depth maps may be inconsistent with the scene because of ambient light noise, motion artifacts, specular reflections, and so on. In addition, depth cameras are too expensive to use on a large scale. So far, depth estimation software has remained the practical alternative for depth map acquisition [26]. However, depth video obtained by depth estimation software usually contains scattered, irregular noise, so it is inaccurate and inconsistent. Its temporal and spatial correlation is therefore weak, which decreases compression performance.

In order to improve encoding and rendering performance, many depth video processing algorithms [27–34] have been proposed. Mueller et al. produced accurate depth video for artifact-free virtual view synthesis by combining hybrid recursive matching with motion estimation, cross-bilateral post-processing and mutual depth map fusion [27]. Min et al. presented a weighted mode filtering method that enhances temporal consistency and addresses the flickering problem in virtual views [28]. Nguyen et al. suppressed coding artifacts over object boundaries using weighted mode filtering [29]. Ekmekcioglu et al. proposed a content-adaptive enhancement method based on median filtering to enforce the coherence of depth maps across the spatial, temporal and inter-view dimensions [30]. Kim et al. presented a series of processing steps to solve critical problems of depth video captured by depth cameras [31]; one of these steps enhances temporal consistency with an algorithm based on motion estimation. Zhao et al. proposed a depth no-synthesis-error (D-NOSE) model and presented a related smoothing scheme for depth video coding [32]. Fu et al. proposed a temporal enhancement algorithm for depth video based on adaptive temporal filtering [33]. In our previous work, depth video was enhanced by temporal pixel classification and smoothing [34].

However, the depth video processing algorithms [27–34] do not consider the perception of the human visual system (HVS), and thus leave room for improvement. Zhao et al. proposed a binocular just-noticeable-difference model to measure the perceptible distortion of binocular vision for stereoscopic images [35]. Silva et al. experimentally derived a just noticeable depth difference (JNDD) model [36] and applied it to depth video preprocessing [37]. Jung proposed a modified JNDD model that considers size consistency and then used it for depth sensation enhancement [38]. The JNDD models are built from subjective tests on stereoscopic displays. Hence, they are display-dependent and not suitable for estimating the range of depth distortion in virtual view rendering. In this study, we propose a just noticeable rendering distortion (JNRD) model and apply it to spatial and temporal correlation enhancement. Different from other distortion models [35,36,38], the JNRD model is built on the perceptible distortion of the virtual view. First, the JNRD model is formulated by combining the geometric displacement in DIBR with a just noticeable distortion (JND) model that reflects human visual perception. Then, the spatial and temporal correlation of depth video is enhanced using the JNRD model. Finally, the proposed algorithm is evaluated in three aspects: compression ratio, and the objective and subjective quality of the virtual view. The experimental results show that the compression performance of the processed depth video is improved in comparison with both the original depth video and the depth video processed by other algorithms, while the quality of the virtual view is maintained.
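The geometric-displacement component of the JNRD idea can be illustrated with the DIBR relations commonly used for multiview test material. The sketch below assumes a 1D parallel camera arrangement, 8-bit depth levels quantized linearly in inverse depth between `z_near` and `z_far`, a focal length `f` in pixels and a baseline `L`. Under these assumptions the horizontal shift is $d = fL/Z$ with $1/Z = (v/255)(1/Z_{near} - 1/Z_{far}) + 1/Z_{far}$, so a depth-level error $\Delta v$ shifts a rendered pixel by $\Delta d = fL\,\Delta v\,(1/Z_{near} - 1/Z_{far})/255$, independent of $v$. All function names, the camera parameters and the `tolerance_px` threshold are hypothetical; the paper's actual JNRD formulation, including how the JND model enters, is defined in Section 3 and is not reproduced here.

```python
import math

def inverse_depth(v, z_near, z_far):
    """Map an 8-bit depth level v (255 = nearest plane) to 1/Z (assumed quantization)."""
    return (v / 255.0) * (1.0 / z_near - 1.0 / z_far) + 1.0 / z_far

def disparity(v, focal_px, baseline, z_near, z_far):
    """Horizontal DIBR shift d = f * L / Z for 1D parallel cameras, in pixels."""
    return focal_px * baseline * inverse_depth(v, z_near, z_far)

def shift_per_level(focal_px, baseline, z_near, z_far):
    """Pixel displacement caused by a one-level depth error.

    d is linear in v, so this value is the same for every depth level.
    """
    return focal_px * baseline * (1.0 / z_near - 1.0 / z_far) / 255.0

def max_tolerable_depth_error(tolerance_px, focal_px, baseline, z_near, z_far):
    """Largest integer depth-level error whose shift stays within tolerance_px.

    tolerance_px is a HYPOTHETICAL stand-in for a perceptual (JND-based) bound.
    """
    return math.floor(tolerance_px / shift_per_level(focal_px, baseline, z_near, z_far))

# Illustrative, made-up camera parameters.
f, L, zn, zf = 1020.0, 0.1, 1.0, 2.0
print(disparity(255, f, L, zn, zf))   # shift of the nearest plane
print(disparity(0, f, L, zn, zf))     # shift of the farthest plane
print(max_tolerable_depth_error(1.1, f, L, zn, zf))
```

With these parameters one depth level moves a rendered pixel by about 0.2 pixels, so a 1.1-pixel tolerance would admit depth errors of up to 5 levels. The point of the sketch is only that a pixel-domain tolerance in the virtual view maps onto a range of admissible depth values.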

The rest of this paper is organized as follows. Section 2 describes the spatial and temporal correlation problem of depth video and presents the overall block diagram of the proposed algorithm. Section 3 describes the JNRD model. Sections 4 and 5 present the spatial and temporal enhancement algorithms in detail. Experimental results are given in Section 6. Finally, conclusions are drawn in Section 7.

2. Proposed depth video correlation enhancement algorithm

2.1. Problem description

Depth video is inconsistent along spatial and temporal direc-

tions because of the limitations of depth video capture technolo-

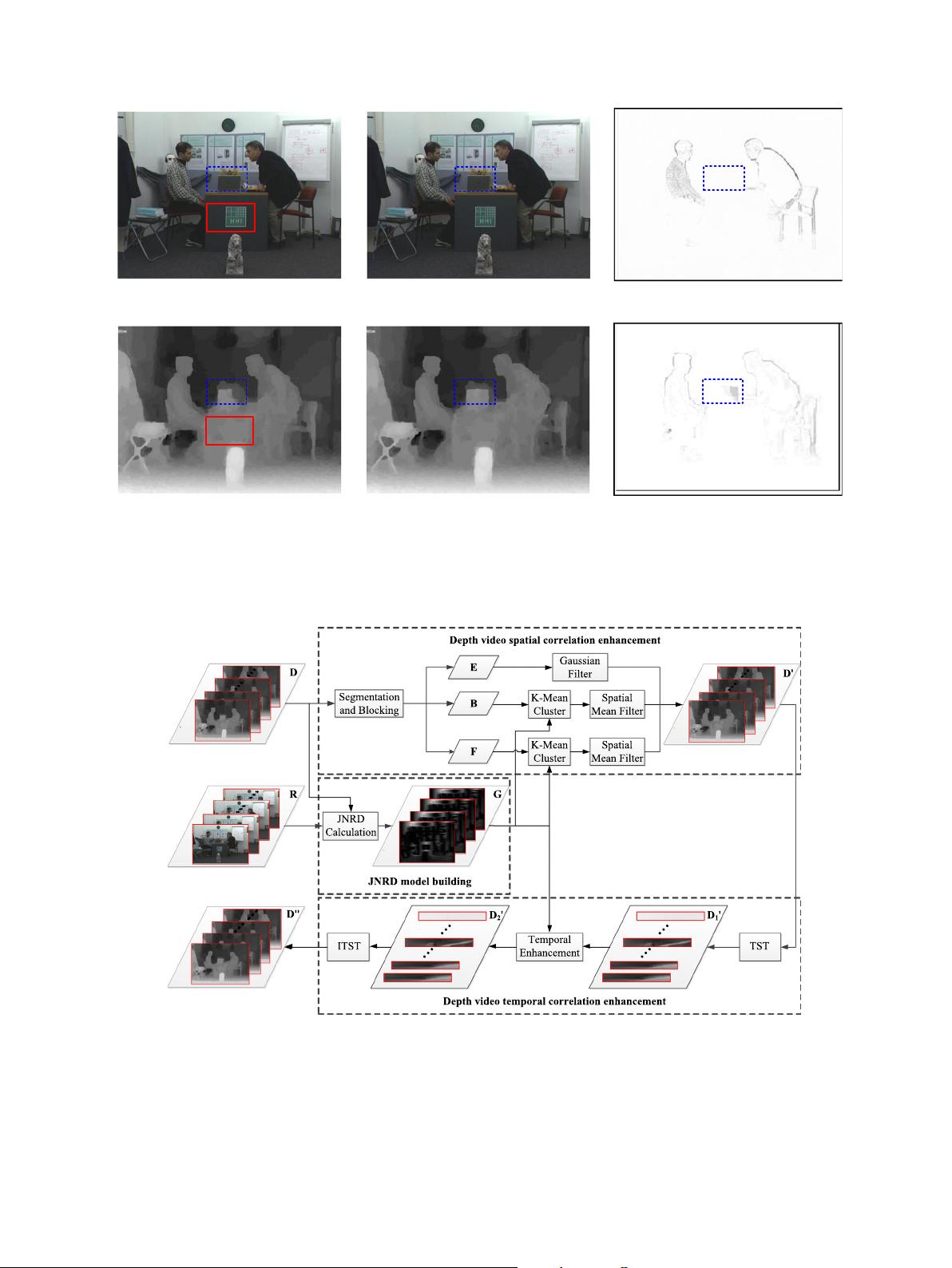

gies. Fig. 1 shows the frames and frame difference of the color

video and the corresponding depth video in ‘Leave Laptop’

sequence. The frame S

i

T

j

denotes the frame in the ith view at the

jth time instant in the video sequence. Fig. 1(a), (b), (d), and (e)

are the frames S

10

T

35

and S

10

T

36

of color video, and the associated

depth frames, Fig. 1(c) and (f) are the texture and the depth frame

difference images between frames S

10

T

35

and S

10

T

36

where black

means larger difference. The scene in the red rectangular region

of the color video is nearly at the same imaging plane. Correspond-

ingly, the depth value in the corresponding region should be nearly

the same. However, the depth value in the corresponding region is

not consistent with the corresponding color video. Depth inconsis-

tency decreases the spatial correlation of depth video.
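The spatial inconsistency described above can be quantified by measuring how much the depth values vary inside a region whose color content lies at nearly one imaging plane. A minimal sketch in plain Python, assuming frames are stored as lists of rows of 8-bit depth values; the function name `region_depth_spread`, the rectangle convention and the sample patch are hypothetical, and the actual coordinates of the red rectangle in Fig. 1 are not given in the text.

```python
from statistics import mean, pstdev

def region_depth_spread(frame, top, left, height, width):
    """Return (mean, population std, max - min) of depth values in a rectangle.

    For a region that lies on (nearly) one imaging plane, a consistent depth
    map should give a small std and range; large values flag inconsistency.
    """
    values = [frame[r][c]
              for r in range(top, top + height)
              for c in range(left, left + width)]
    return mean(values), pstdev(values), max(values) - min(values)

# Hypothetical 3x4 depth patch: left two columns flat, right two columns noisy.
depth = [
    [100, 100, 100, 130],
    [100, 100,  90, 100],
    [100, 100, 100, 115],
]
print(region_depth_spread(depth, 0, 0, 3, 2))  # flat part: zero spread
print(region_depth_spread(depth, 0, 2, 3, 2))  # noisy part: range of 40 levels
```

Standard deviation and range are only simple proxies chosen for illustration; they say nothing about where the inconsistency comes from, only that the region is not as flat as its color content suggests.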

Depth video inconsistency also decreases temporal correlation. In the scene in Fig. 1, only the men and the chair move slightly. Hence, apart from the men and the chair, nearly the entire frame difference image of the color video is light, which reflects content consistency along the temporal direction. In contrast, some areas in the frame difference image of the depth video, e.g., the blue rectangular region in Fig. 1(f), are dark, which indicates temporal inconsistency.
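Frame difference images like Fig. 1(c) and (f) can be produced from the absolute per-pixel difference of two consecutive frames. The sketch below assumes frames are lists of rows of 8-bit values, and it assumes the display simply inverts the difference so that black marks the largest change (the exact scaling used for the figure is not stated). The helper names and the change threshold are hypothetical.

```python
def frame_difference(prev, curr):
    """Absolute per-pixel difference |curr - prev| between two frames."""
    return [[abs(c - p) for p, c in zip(row_p, row_c)]
            for row_p, row_c in zip(prev, curr)]

def to_display(diff, max_value=255):
    """Invert (and clip) so that black (0) marks the largest differences."""
    return [[max_value - min(d, max_value) for d in row] for row in diff]

def changed_fraction(diff, threshold):
    """Fraction of pixels whose difference exceeds a (hypothetical) threshold."""
    flat = [d for row in diff for d in row]
    return sum(d > threshold for d in flat) / len(flat)

# Hypothetical 2x2 depth patches taken from a static background region.
prev = [[80, 80], [80, 80]]
curr = [[80, 82], [110, 80]]
diff = frame_difference(prev, curr)
print(diff)                       # [[0, 2], [30, 0]]
print(to_display(diff))           # [[255, 253], [225, 255]]
print(changed_fraction(diff, 5))  # 0.25
```

In a region that does not move, a temporally consistent depth map should give a changed fraction close to zero; a large value in such a region corresponds to the dark areas described for Fig. 1(f).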

Consequently, depth video inconsistency eventually deteriorates encoding performance, because spatial and temporal correlation is the theoretical basis for the high compression efficiency of video signals.
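One simple way to make "spatial and temporal correlation" measurable is the Pearson correlation between each pixel and its right-hand neighbor (spatial) or its co-located pixel in the next frame (temporal). A minimal sketch, assuming frames are lists of rows of numbers; the function names and sample frames are hypothetical, and a correlation coefficient is only a rough proxy, since an encoder's actual bit rate also depends on its prediction structure and quantization.

```python
import math

def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    vx = sum((x - mx) ** 2 for x in xs)
    vy = sum((y - my) ** 2 for y in ys)
    if vx == 0 or vy == 0:
        return float("nan")  # undefined for a constant signal
    return cov / math.sqrt(vx * vy)

def spatial_correlation(frame):
    """Correlation between each pixel and its right-hand neighbor."""
    left = [v for row in frame for v in row[:-1]]
    right = [v for row in frame for v in row[1:]]
    return pearson(left, right)

def temporal_correlation(prev, curr):
    """Correlation between co-located pixels of two consecutive frames."""
    a = [v for row in prev for v in row]
    b = [v for row in curr for v in row]
    return pearson(a, b)

ramp = [[0, 10, 20, 30], [10, 20, 30, 40]]   # smooth, consistent depth
noisy = [[30, 0, 20, 10], [40, 10, 20, 30]]  # same values, scrambled
print(spatial_correlation(ramp))             # 1.0
print(temporal_correlation(ramp, ramp))      # 1.0
print(temporal_correlation(ramp, noisy))     # close to zero
```

Predictive coding exploits exactly this kind of redundancy: the higher the correlation, the smaller the prediction residual an encoder has to transmit.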

2.2. Proposed depth video correlation enhancement algorithm

To improve the compression performance of depth video, a new spatial and temporal correlation enhancement algorithm is proposed in this paper. Fig. 2 shows the block diagram of the proposed algorithm, which consists of three parts: JNRD model building, depth video spatial correlation enhancement, and depth video temporal correlation enhancement. The JNRD model is the basis for both depth video spatial and temporal correlation enhancement. In Fig. 2, $G$ is the JNRD of the corresponding depth video; $R$, $D$ and $D''$ are the color video, the original depth video and the processed depth video, respectively; $E$, $F$ and $B$ are the edge, foreground and background regions of the depth video, respectively; and $D'$, $D_1'$, and $D_2'$ are intermediate processing results of the depth video.

In the proposed algorithm, the JNRD model of the depth video is built first. Then, the depth video undergoes spatial correlation enhancement followed by temporal correlation enhancement. JNRD model building and the spatial and temporal correlation enhancements are detailed in Sections 3, 4 and 5, respectively.
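The processing order described in Section 2.2 can be summarized as a small pipeline skeleton. This is a sketch of the data flow only: the three stage functions are passed in as callables, and the stubs used here are hypothetical placeholders that merely record the call order. They do not implement the JNRD model or the enhancement algorithms of Sections 3 to 5, and the skeleton does not attempt to map the intermediate results $D'$, $D_1'$ and $D_2'$ or the regions $E$, $F$, $B$, whose exact roles are defined in Fig. 2.

```python
from typing import Callable, List, Sequence

Frame = List[List[int]]

def enhance_depth_video(
    color: Sequence[Frame],
    depth: Sequence[Frame],
    build_jnrd: Callable,
    spatial_enhance: Callable,
    temporal_enhance: Callable,
) -> List[Frame]:
    """Run the three stages in the order described in the text.

    color = R, depth = D; the returned frames play the role of D''.
    g = G, the JNRD of the depth video, guides both enhancement stages.
    """
    g = build_jnrd(color, depth)                     # JNRD model building
    d_spatial = spatial_enhance(color, depth, g)     # spatial correlation step
    return temporal_enhance(color, d_spatial, g)     # temporal correlation step

# Hypothetical placeholder stages that only record the call order.
trace = []

def jnrd_stub(color, depth):
    trace.append("JNRD")
    return None

def spatial_stub(color, depth, g):
    trace.append("spatial")
    return depth

def temporal_stub(color, depth, g):
    trace.append("temporal")
    return list(depth)

out = enhance_depth_video([[[0]]], [[[128]]], jnrd_stub, spatial_stub, temporal_stub)
print(trace)  # ['JNRD', 'spatial', 'temporal']
```

Passing the stages in as functions keeps the ordering (JNRD first, then spatial, then temporal enhancement, with $G$ shared by both) separate from the details of each stage.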

310 Z. Peng et al. / J. Vis. Commun. Image R. 33 (2015) 309–322
