that are not specific to the training data. The last layers do extract complex and abstract features, but the closer they are to the final layer, the less extra information they contain about the training set, compared with the final (output) layer.

We formalize the threat model in all these settings, and exploit the privacy vulnerabilities of the stochastic gradient descent (SGD) algorithm to design our white-box inference attack. Each data point in the training set influences many of the model parameters, through the SGD algorithm, to minimize its contribution to the learning loss. The local gradient of the loss on a target data record, with respect to a given parameter, indicates how much and in which direction the parameter needs to be changed to fit the model to the data record. To minimize the expected loss of the model, the SGD algorithm repeatedly updates the model parameters in a direction that drives the gradient of the loss over the whole training dataset toward zero. Therefore, each training data sample will leave a distinguishable footprint on the local gradients of the loss function over the model’s parameters.
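The footprint described above can be illustrated on a toy problem. The following is a minimal sketch, not the attack itself: it assumes a linear model with squared loss and more parameters than training records, trained with plain per-sample SGD. The names `per_sample_grad` and `grad_norms`, the dimensions, and the learning rate are all illustrative choices.

```python
import numpy as np

rng = np.random.default_rng(0)
d, n = 50, 20                      # assumption: more parameters than training records
X_in = rng.normal(size=(n, d))     # members (used for training)
y_in = rng.normal(size=n)
X_out = rng.normal(size=(n, d))    # non-members (never seen during training)
y_out = rng.normal(size=n)

def per_sample_grad(w, x, y):
    """Gradient of the squared loss 0.5 * (w.x - y)^2 w.r.t. w for one record."""
    return (w @ x - y) * x

# Plain per-sample SGD over the members only.
w = np.zeros(d)
lr = 0.01
for epoch in range(300):
    for i in rng.permutation(n):
        w -= lr * per_sample_grad(w, X_in[i], y_in[i])

def grad_norms(w, X, y):
    """Norm of the local loss gradient for every record in (X, y)."""
    return np.array([np.linalg.norm(per_sample_grad(w, x, t)) for x, t in zip(X, y)])

member_norms = grad_norms(w, X_in, y_in)
nonmember_norms = grad_norms(w, X_out, y_out)
print("mean gradient norm, members:    ", member_norms.mean())
print("mean gradient norm, non-members:", nonmember_norms.mean())
```

In this toy setting SGD drives the local gradients of the training records toward zero, while records outside the training set keep a visibly larger gradient. Deep models will not show such a clean gap, which is why the attack learns from the full gradient vector rather than from a single norm.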

We design our inference attack by using the gradient vector of the loss on the target data point, taken over all parameters, as the main feature for the attack model. We design an architecture for our attack model that processes extracted features from different layers separately, and then aggregates them to extract membership information. In the cases where the adversary does not have samples from the target training set to train its inference model, we train the attack model in an unsupervised manner. We train auto-encoders to compute a membership information embedding for any data. We then use a clustering algorithm, on the target dataset, to separate members from non-members based on their membership information embedding.
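The clustering step of the unsupervised variant can be sketched as follows. This is only an illustration under stated assumptions: the embeddings are synthetic stand-ins for the auto-encoder output, `two_means` is a hypothetical minimal k-means helper, and the rule that the cluster closer to the origin holds the members is an assumption made for this example.

```python
import numpy as np

def two_means(Z, iters=50):
    """Minimal 2-cluster k-means; Z has shape (num_points, embedding_dim)."""
    # Deterministic init: the points with the smallest and the largest norm.
    norms = np.linalg.norm(Z, axis=1)
    centers = np.stack([Z[norms.argmin()], Z[norms.argmax()]]).astype(float)
    for _ in range(iters):
        # Distance of every point to both centers, then nearest-center assignment.
        dists = np.linalg.norm(Z[:, None, :] - centers[None, :, :], axis=2)
        labels = dists.argmin(axis=1)
        for k in range(2):
            if np.any(labels == k):
                centers[k] = Z[labels == k].mean(axis=0)
    return labels, centers

# Synthetic placeholder embeddings (assumption): members sit near the origin.
rng = np.random.default_rng(1)
emb_members = rng.normal(loc=0.1, scale=0.05, size=(30, 2))
emb_nonmembers = rng.normal(loc=1.0, scale=0.05, size=(30, 2))
Z = np.vstack([emb_members, emb_nonmembers])

labels, centers = two_means(Z)
# Assumed decision rule: the cluster whose center has the smaller norm is "member".
member_cluster = np.linalg.norm(centers, axis=1).argmin()
predicted_member = labels == member_cluster
print("predicted members:", int(predicted_member.sum()), "of", len(Z))
```

No membership labels are used anywhere in this sketch; the attacker only needs a rule for deciding which of the two clusters corresponds to the training set.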

To show the effectiveness of our white-box inference attack, we evaluate the privacy of pre-trained and publicly available state-of-the-art models on the CIFAR100 dataset. We had no influence on training these models. Our results show that the DenseNet model, which is the best model on CIFAR100 with 82% test accuracy, is not very vulnerable to black-box attacks (with a 54.5% inference attack accuracy, where 50% is the baseline for random guessing). However, the white-box membership inference attack obtains a considerably higher accuracy of 74.3%. This shows that even well-generalized deep models leak a significant amount of information about their training data, and are vulnerable to white-box membership inference attacks.

In federated learning, we show that a curious parameter server or even a participant can perform alarmingly accurate membership inference attacks against other participants. For the DenseNet model on CIFAR100, a local participant can achieve a membership inference accuracy of 72.2%, even though it only observes aggregate updates through the parameter server. Also, the curious central parameter server can achieve a 79.2% inference accuracy, as it receives the individual parameter updates from all participants. In federated learning, the repeated parameter updates of the models over different epochs on the same underlying training set are a key factor in boosting the inference attack accuracy.

As the adversary’s contributions (i.e., parameter updates) can influence the victim’s parameters, in the federated learning setting, the adversary can actively exploit SGD to leak even more information about the participants’ training data. We design an active attack that performs gradient ascent on a set of target data points before uploading and updating the global parameters. This magnifies the presence of data points in others’ training sets, because SGD reacts by abruptly reducing the gradient on the target data points if they are members. This leads to a 76.7% inference accuracy for an adversarial participant, and a significant 82.1% accuracy for an active inference attack by the central server. By isolating a participant during parameter updates, the central attacker can boost its accuracy to 87.3% on the DenseNet model.
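The mechanism behind the active attack can be sketched on a toy problem. The example below is an assumption-laden illustration, not the evaluated attack: it uses a linear model with squared loss, a single victim, and hypothetical names (`local_sgd`, `gamma`, `w_shared`). The adversary applies gradient ascent on two target records, one inside and one outside the victim's training set, and then observes how the victim's local SGD reacts.

```python
import numpy as np

rng = np.random.default_rng(2)
d, n = 50, 20
X_victim = rng.normal(size=(n, d))                 # victim's private training set
y_victim = rng.normal(size=n)
x_member, y_member = X_victim[0], y_victim[0]      # target that IS in the set
x_non, y_non = rng.normal(size=d), rng.normal()    # target that is NOT in the set

def grad(w, x, y):
    """Gradient of the squared loss 0.5 * (w.x - y)^2 w.r.t. w for one record."""
    return (w @ x - y) * x

def local_sgd(w, X, y, lr=0.01, epochs=300):
    """Per-sample SGD on (X, y), starting from w and returning a new vector."""
    w = w.copy()
    for _ in range(epochs):
        for i in range(len(y)):
            w -= lr * grad(w, X[i], y[i])
    return w

# Partially trained shared model (two epochs), as in the middle of training.
w_shared = local_sgd(np.zeros(d), X_victim, y_victim, epochs=2)

# Adversary: gradient ASCENT on both targets before sharing the parameters.
gamma = 0.01                                       # hypothetical ascent rate
w_adv = w_shared.copy()
for x, y in [(x_member, y_member), (x_non, y_non)]:
    w_adv += gamma * grad(w_adv, x, y)

# Victim: ordinary local SGD on its own data, starting from the tampered model.
w_next = local_sgd(w_adv, X_victim, y_victim)

g_member_before = np.linalg.norm(grad(w_adv, x_member, y_member))
g_member_after = np.linalg.norm(grad(w_next, x_member, y_member))
g_non_after = np.linalg.norm(grad(w_next, x_non, y_non))
print("member target:     gradient norm", g_member_before, "->", g_member_after)
print("non-member target: gradient norm after the victim update", g_non_after)
```

In this sketch the victim's update pushes the gradient on the member target back toward zero, while nothing in the victim's data corrects the non-member target, so its gradient stays large. That contrast is the signal the active attacker is looking for.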

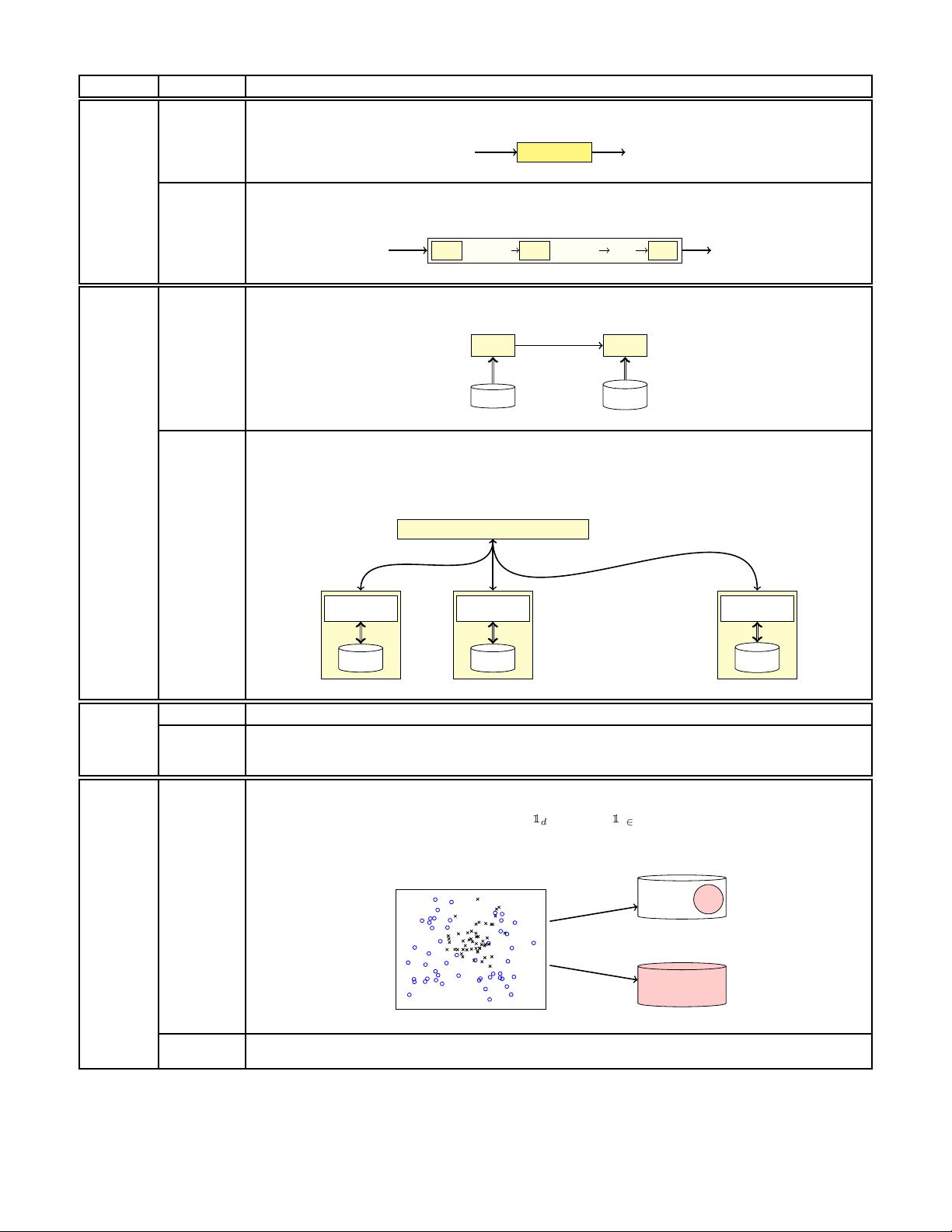

II. INFERENCE ATTACKS

We use membership inference attacks to measure information leakage through deep learning algorithms and models about training data. There are many different scenarios in which data is used for training models, and there are many different ways the attacker can observe the deep learning process. In Table I, we cover the major criteria to categorize the attacks. This includes attack observations, assumptions about the adversary’s knowledge, the target training algorithm, and the mode of the attack based on the adversary’s actions. In this section, we discuss different attack scenarios as well as the techniques we use to exploit deep learning algorithms. We also describe the architecture of our attack model, and how the adversary computes the membership probability.

A. Attack Observations: Black-box vs. White-box Inference

The adversary’s observations of the deep learning algorithm are what constitute the inputs for the inference attack.

Black-box. In this setting, the adversary’s observation is limited to the output of the model on arbitrary inputs. For any data point x, the attacker can only obtain f(x; W). The parameters of the model W and the intermediate steps of the computation are not accessible to the attacker. This is the setting of machine-learning-as-a-service platforms. Membership inference attacks against black-box models have already been designed; they exploit the statistical differences between a model’s predictions on its training set versus unseen data [6].
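To make the black-box setting concrete, the sketch below guesses membership from the model output alone. It is not the attack of [6]; it swaps in a deliberately simple loss-threshold rule, and the output probabilities, labels, and threshold are hypothetical values chosen for illustration.

```python
import numpy as np

def cross_entropy(probs, labels):
    """Per-record cross-entropy computed from the model's output probabilities."""
    eps = 1e-12  # avoids log(0)
    return -np.log(probs[np.arange(len(labels)), labels] + eps)

def black_box_guess(probs, labels, threshold):
    """Guess 'member' when the loss on the model's output is below the threshold."""
    return cross_entropy(probs, labels) < threshold

# Hypothetical outputs f(x; W) for four queried records over three classes.
probs = np.array([[0.97, 0.02, 0.01],
                  [0.05, 0.90, 0.05],
                  [0.40, 0.35, 0.25],
                  [0.30, 0.30, 0.40]])
labels = np.array([0, 1, 0, 1])

guesses = black_box_guess(probs, labels, threshold=0.5)
print(guesses)
```

The rule uses nothing but f(x; W) and the true label, which is all a black-box adversary observes. It relies on the model being more confident on training records than on unseen data, so it weakens as the model generalizes better.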

White-box. In this setting, the attacker obtains the model f(x; W), including its parameters, which are needed for prediction. Thus, for any input x, in addition to its output, the attacker can compute all the intermediate computations of the model. That is, the adversary can compute any function over W and x given the model. The most straightforward functions are the outputs of the hidden layers, h_i(x), on the input x. As a simple extension, the attacker can extend black-box membership inference attacks (which are limited to the model’s output) to the outputs of all activation functions of the model. However, this does not necessarily contain all the useful information for membership inference. Notably, the model output and activation functions could generalize if the model is well regularized. Thus, there might not be much
