[Fig. 2 legend: Visual tracking datasets and challenges; Developing CNN-based methods; Developing RNN-based methods; Developing SNN-based methods; Developing RL- and GAN-based methods; Developing custom NN-based methods]

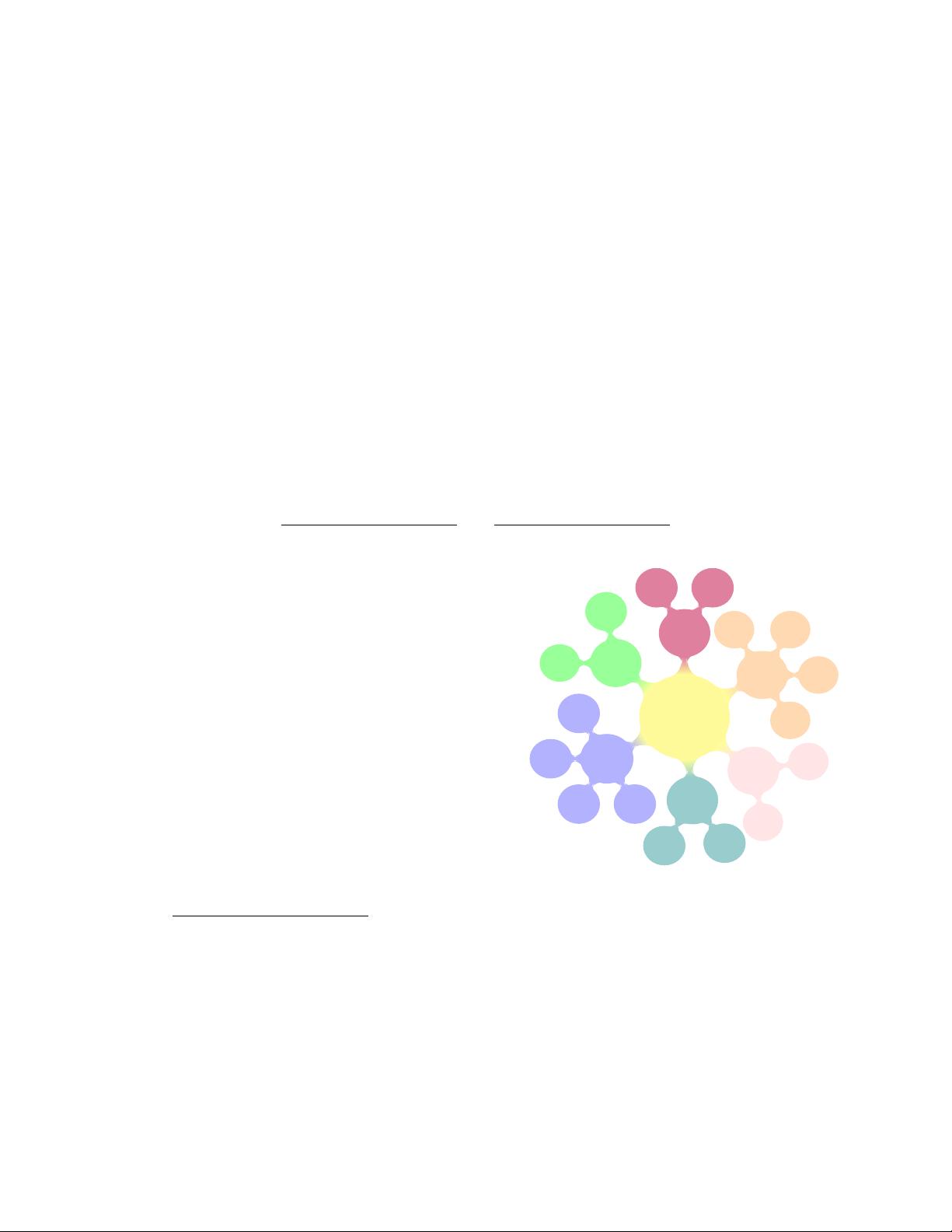

Fig. 2: Timeline of deep visual tracking methods.

2015: Exploring and studying deep features to exploit them in traditional methods.

2016: Offline training/fine-tuning of DNNs for visual tracking; employing Siamese networks for real-time tracking; integrating DNNs into traditional frameworks.

2017: Incorporating temporal and contextual information; investigating various offline training schemes on large-scale image/video datasets.

2018: Studying different learning and search strategies; designing more sophisticated architectures for the visual tracking task.

2019: Investigating deep detection and segmentation approaches for visual tracking; taking advantage of deeper backbone networks.

(CNNs) were initially the dominant networks, a broad range of architectures, such as Siamese neural networks (SNNs), recurrent neural networks (RNNs), auto-encoders (AEs), generative adversarial networks (GANs), and custom neural networks, is currently being investigated. Fig. 2 presents a brief history of the development of deep visual trackers in recent years. State-of-the-art DL-based visual trackers differ in characteristics such as the exploited deep architecture, backbone network, learning procedure, training datasets, network objective, network output, types of exploited deep features, CPU/GPU implementation, programming language and framework, and speed.

In addition, several visual tracking benchmark datasets have been proposed in the past few years for training and evaluating visual tracking methods. Despite their differing properties, some of these benchmark datasets share common video sequences. Therefore, this paper provides a comparative study of DL-based methods, their benchmark datasets, and evaluation metrics to help the visual tracking community develop more advanced methods.

Existing visual tracking surveys can be roughly classified into two main categories: those reviewing methods from before the DL revolution in computer vision and those reviewing methods from after it. Surveys in the first category [47]–[50] mainly review traditional methods based on classical object and motion representations and then examine their pros and cons systematically, experimentally, or both. Given the significant progress of DL-based visual trackers, the methods reviewed in these papers are now outdated. Surveys in the second category [51]–[53], on the other hand, review only a limited number of deep visual trackers. The papers [51] and [52] (two versions of the same paper) categorize 81 and 93 handcrafted and deep visual trackers, respectively, into correlation filter trackers and non-correlation filter trackers, and then apply a further classification based on architectures and tracking mechanisms. These papers study fewer than 40 DL-based methods, and only to a limited extent. Although [54] specifically investigates the network branches, layers, and training aspects of nine SNN-based methods, it does not include state-of-the-art SNN-based trackers (e.g., [55]–[57]) or custom networks that partially exploit SNNs (e.g., [58]). The last review paper [53] categorizes 43 DL-based methods according to their structure, function, and training, and then experimentally compares 16 DL-based visual trackers with various handcrafted visual tracking methods. From the structure perspective, the 43 methods are divided into 34 CNN-based (ten CNN-Matching and 24 CNN-Classification), five RNN-based, and four other architecture-based methods (e.g., AE). From the network function perspective, these methods are categorized as either feature extraction networks (FENs) or end-to-end networks (EENs). FENs exploit models pre-trained on other tasks, whereas EENs are further classified by their outputs, namely object score, confidence map, and bounding box (BB). From the network training perspective, these methods are categorized into the NP-OL, IP-NOL, IP-OL, VP-NOL, and VP-OL categories, where NP, IP, VP, OL, and NOL stand for no pre-training, image pre-trained, video pre-trained, online learning, and no online learning, respectively.
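To make the taxonomy of [53] concrete, the following minimal Python sketch encodes its structure-perspective counts and training-perspective labels as plain data. All names (`PRETRAINING`, `ONLINE`, `describe`, `total`, and so on) are illustrative assumptions, not part of [53]. The sketch checks that the reported structure counts sum to 43 and shows that the five training categories cover every pairing of a pre-training option with an online-learning option except NP-NOL.

```python
from itertools import product

# Training-perspective abbreviations as defined in [53].
PRETRAINING = {"NP": "no pre-training", "IP": "image pre-trained", "VP": "video pre-trained"}
ONLINE = {"OL": "online learning", "NOL": "no online learning"}

# The five training categories listed in [53].
TRAINING_CATEGORIES = ["NP-OL", "IP-NOL", "IP-OL", "VP-NOL", "VP-OL"]

# Structure-perspective counts reported for the 43 methods in [53].
STRUCTURE_COUNTS = {
    "CNN": {"CNN-Matching": 10, "CNN-Classification": 24},
    "RNN": 5,
    "Other (e.g., AE)": 4,
}


def describe(label: str) -> str:
    """Expand a training-category label such as 'IP-OL' into words."""
    pre, online = label.split("-")
    return f"{PRETRAINING[pre]}, {ONLINE[online]}"


def total(counts) -> int:
    """Sum a count that may be a plain int or a nested dict of counts."""
    if isinstance(counts, int):
        return counts
    return sum(total(v) for v in counts.values())


# Every (pre-training, online-learning) pair. The pair absent from [53]'s list
# is NP-NOL; presumably (an interpretation, not stated in [53]) because such a
# tracker would involve no learning at all.
all_pairs = {f"{p}-{o}" for p, o in product(PRETRAINING, ONLINE)}
missing = all_pairs - set(TRAINING_CATEGORIES)

if __name__ == "__main__":
    for label in TRAINING_CATEGORIES:
        print(label, "->", describe(label))
    print("missing pair:", missing)
    print("CNN-based:", total(STRUCTURE_COUNTS["CNN"]), "| all methods:", total(STRUCTURE_COUNTS))
```

Treating each training label as a (pre-training, online-learning) pair in this way makes it easy to see which combinations a taxonomy leaves out, and the nested counts make the 34 + 5 + 4 = 43 breakdown explicit.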

Despite these efforts, no comprehensive study has extensively categorized DL-based trackers, their motivations, and their solutions to different problems while also experimentally analyzing the best methods in different challenging scenarios. Motivated by these concerns, the main goal of this survey is to fill this gap and to investigate the main open problems and future directions. The main differences between this survey and prior ones are described as follows.

Differences to Prior Surveys: In contrast to the currently available review papers, this paper focuses solely on 129 state-of-the-art DL-based visual tracking methods published in major image processing and computer vision conferences and journals. These methods include HCFT [59], DeepSRDCF [60], FCNT [61], CNN-SVM [62], DPST [63], CCOT [64], GOTURN [65], SiamFC [66], SINT [67], MDNet [68], HDT [69], STCT [70], RPNT [71], DeepTrack [72], CNT [73], CF-CNN [74], TCNN [75], RDLT [76], PTAV [77], [78], CREST [79], UCT/UCT-Lite [80], DSiam/DSiamM [81], TSN [82], WECO [83], RFL [84], IBCCF [85], DTO [86], SRT [87], R-FCSN [88], GNET [89], LST [90], VRCPF [91], DCPF [92], CFNet [93], ECO [94], DeepCSRDCF [95], MCPF [96], BranchOut [97], DeepLMCF [98], Obli-RaFT [99], ACFN [100], SANet [101], DCFNet/DCFNet2 [102], DET [103], DRN [104], DNT [105], STSGS [106], TripletLoss [107], DSLT [108], UPDT [109], ACT [110], DaSiamRPN [111], RT-MDNet [112], StructSiam [113], MMLT [114], CPT [115], STP [116], Siam-MCF [117], Siam-BM [118], WAEF [119], TRACA [120], VITAL [121], DeepSTRCF [122], SiamRPN [123], SA-Siam [124], FlowTrack [125], DRT [126], LSART [127], RASNet [128], MCCT [129], DCPF2 [130], VDSR-SRT [131], FCSFN [132], FRPN2T-Siam [133], FMFT [134], IMLCF [135], TGGAN [136], DAT [137], DCTN [138], FPRNet [139], HCFTs [140], adaDDCF [141], YCNN [142], DeepHPFT [143], CFCF [144], CFSRL [145], P2T [146], DCDCF [147], FICFNet [148], LCTdeep [149], HSTC [150], DeepFWDCF [151], CF-FCSiam [152], MGNet [153], ORHF [154], ASRCF [155], ATOM [156], C-RPN [157], GCT [158], RPCF [159], SPM [160], SiamDW [56], SiamMask [57], SiamRPN++ [55], TADT [161], UDT [162], DiMP [163], ADT [164], CODA [165], DRRL [166], SMART [167], MRCNN [168], MM [169], MTHCF [170], AEPCF [171], IMM-DFT [172], TAAT [173], DeepTACF [174], MAM [175], ADNet [176], [177], C2FT [178], DRL-IS [179], DRLT [180], EAST [181], HP [182], P-Track [183], RDT [184], and SINT++ [58].

The trackers include 73 CNN-based, 35 SNN-based, 15

custom-based (including AE-based, reinforcement learning

(RL)-based, and combined networks), three RNN-based,

and three GAN-based methods. One major contribution and
