
Comprehensive survey of deep learning in remote sensing: theories, tools, and challenges for the community

John E. Ball, Derek T. Anderson, Chee Seng Chan

John E. Ball, Derek T. Anderson, Chee Seng Chan, “Comprehensive survey of deep learning in remote sensing: theories, tools, and challenges for the community,” J. Appl. Remote Sens. 11(4), 042609 (2017), doi: 10.1117/1.JRS.11.042609.


John E. Ball (a,*), Derek T. Anderson (a), and Chee Seng Chan (b)

(a) Mississippi State University, Department of Electrical and Computer Engineering, Mississippi State, Mississippi, United States

(b) University of Malaya, Faculty of Computer Science and Information Technology, Kuala Lumpur, Malaysia

Abstract. In recent years, deep learning (DL), a rebranding of neural networks (NNs), has risen to the top in numerous areas, such as computer vision (CV), speech recognition, and natural language processing. Although remote sensing (RS) poses a number of unique challenges, primarily related to sensors and applications, it inevitably draws on many of the same theories as CV, e.g., statistics, fusion, and machine learning, to name a few. This means that the RS community should not only be aware of advancements such as DL but also be among the leading researchers in this area. Herein, we provide the most comprehensive survey of state-of-the-art RS DL research. We also review recent developments in the DL field that can be applied to RS. In particular, we address theories, tools, and challenges for the RS community. Specifically, we focus on unsolved challenges and opportunities as they relate to (i) inadequate data sets, (ii) human-understandable solutions for modeling physical phenomena, (iii) big data, (iv) nontraditional heterogeneous data sources, (v) DL architectures and learning algorithms for spectral, spatial, and temporal data, (vi) transfer learning, (vii) an improved theoretical understanding of DL systems, (viii) high barriers to entry, and (ix) training and optimizing DL systems.

© The Authors. Published by SPIE under a Creative Commons Attribution 3.0 Unported License. Distribution or reproduction of this work in whole or in part requires full attribution of the original publication, including its DOI. [DOI: 10.1117/1.JRS.11.042609]

Keywords: remote sensing; deep learning; hyperspectral; multispectral; big data; computer vision.

Paper 170464SS received Jun. 3, 2017; accepted for publication Aug. 16, 2017; published online Sep. 23, 2017.

1 Introduction

In recent years, deep learning (DL) has led to leaps, rather than incremental gains, in fields such as computer vision (CV), speech recognition, and natural language processing, to name a few. The irony is that DL, essentially another name for neural networks (NNs), is an age-old branch of artificial intelligence that has been resurrected due to factors such as algorithmic advancements, high-performance computing, and big data. The idea of DL is simple: instead of a human manually designing the system, the machine learns the features, and it is usually very good at decision making (e.g., classification). The RS field draws from core theories such as physics, statistics, fusion, and machine learning, to name a few. Therefore, the RS community should be aware of, and at the leading edge of, advancements such as DL. The aim of this paper is to provide resources with respect to theory, tools, and challenges for the RS community. Specifically, we focus on unsolved challenges and opportunities as they relate to (i) inadequate data sets, (ii) human-understandable solutions for modeling physical phenomena, (iii) big data, (iv) nontraditional heterogeneous data sources, (v) DL architectures and learning algorithms for spectral, spatial, and temporal data, (vi) transfer learning, (vii) an improved theoretical understanding of DL systems, (viii) high barriers to entry, and (ix) training and optimizing DL systems.

*Address all correspondence to: John E. Ball, E-mail: jeball@ece.msstate.edu

REVIEW

Journal of Applied Remote Sensing 042609-1 Oct–Dec 2017 • Vol. 11(4)

Herein, we treat RS as the technological challenge of analyzing objects or scenes by remote means. This definition includes traditional RS areas, such as satellite-based and aerial imaging. However, RS also includes nontraditional areas, such as unmanned aerial vehicles (UAVs), crowdsourcing (phone imagery, tweets, etc.), and advanced driver-assistance systems (ADAS). These types of RS provide different types of data and have different processing needs, and thus pose new challenges for the algorithms that analyze the data. The contributions of this paper are as follows:

1. Thorough list of challenges and open problems in DL RS. We focus on unsolved challenges and opportunities as they relate to (i) inadequate data sets, (ii) human-understandable solutions for modeling physical phenomena, (iii) big data, (iv) nontraditional heterogeneous data sources, (v) DL architectures and learning algorithms for spectral, spatial, and temporal data, (vi) transfer learning, (vii) an improved theoretical understanding of DL systems, (viii) high barriers to entry, and (ix) training and optimizing DL systems. These observations are based on a survey of the RS DL and feature learning (FL) literature, as well as numerous RS survey papers. This topic makes up the majority of the paper and is discussed in Sec. 4.

2. Thorough literature survey. Herein, we review 205 RS application papers and 57 survey papers in RS and DL. In addition, many relevant DL papers are cited. Our work extends the previous DL survey papers (Refs. 1–3) to be more comprehensive. We also cluster DL approaches into different application areas and provide detailed discussions of many relevant papers in these areas in Sec. 3.

3. Detailed discussions of modifying DL architectures to tackle RS problems. We highlight approaches in DL for RS, including new architectures, tools, and DL components that current RS researchers have implemented. This is discussed in Sec. 4.5.

4. Overview of DL. For RS researchers not familiar with DL, Sec. 2 provides a high-level

overview of DL and lists many good references for interested readers to pursue.

5. DL tool list. Tools are a major enabler of DL, and we review the more popular DL tools. We also list the pros and cons of several of the most popular toolsets and provide a table summarizing the tools, with references and links (refer to Table 1). For more details, see Sec. 2.3.5.

6. Online summaries of RS data sets and the DL RS papers reviewed. First, an extensive online table gives details about each DL RS paper we reviewed: sensor modalities, a compilation of the data sets used, a summary of the main contribution, and references. Second, a data set summary for all the DL RS papers analyzed in this paper is provided online. It contains the data set name, a description, a URL (if one is available), and a list of references. Because the literature review for this paper was so extensive, these tables are too large to include in the main paper but are provided online for the readers' benefit. These tables are located at http://cs-chan.com/source/FADL/Online_Paper_Summary_Table.pdf and http://cs-chan.com/source/FADL/Online_Dataset_Summary_Table.pdf.

This paper is organized as follows. Section 2 discusses related work in CV. This section contrasts deep and “shallow” learning, summarizes DL architectures, and discusses the main reasons for the success of DL. Section 3 provides an overview of DL in RS, highlighting DL approaches in many disparate areas of RS. Section 4 discusses the unique challenges and open issues in applying DL to RS. Conclusions and recommendations are given in Sec. 5.

2 Related Work in CV

CV is a field of study that aims to achieve visual understanding through computer analysis of imagery. Traditional (aka classical) approaches are nowadays sometimes referred to as “shallow” because there are typically only a few processing stages, e.g., image denoising or enhancement followed by feature extraction and then classification, connecting the raw data to the final decisions. Examples of “shallow learners” include support vector machines (SVMs), Gaussian mixture models, hidden Markov models, and conditional random fields. In contrast, DL usually


has many layers (the exact demarcation between “shallow” and “deep” learning is not a set number, akin to the boundary between multi- and hyperspectral signals), which allows a rich variety of complex, nonlinear, and hierarchical features to be learned from the data. The following sections contrast deep and shallow learning, discuss DL approaches and DL enablers, and finally discuss DL success in domains outside RS. Overall, the challenge of human-engineered solutions is the manual or experimental discovery of which feature(s) and classifier suit the task at hand. The challenge of DL is to define the appropriate network topology and subsequently optimize its hyperparameters.

2.1 Traditional Feature Learning Methods

Traditional methods of feature extraction involve hand-coded transforms that extract information

based on spatial, spectral, textural, morphological, and other cues. Examples are discussed in

detail in the following references; we do not give extensive algorithmic details herein.

Cheng et al. (Ref. 1) discuss traditional hand-crafted features such as the histogram of oriented gradients (HOG), the closely related scale-invariant feature transform (SIFT) descriptor, color histograms, and local binary patterns (LBP). They also discuss unsupervised FL methods, such as k-means clustering and sparse coding. Other good survey papers discuss hyperspectral image (HSI) feature analysis (Ref. 4), kernel-based methods (Ref. 5), statistical learning methods in HSI (Ref. 6), spectral distance functions (Ref. 7), pedestrian detection (Ref. 8), multiclassifier systems (Ref. 9), spectral–spatial classification (Ref. 10), change detection (Refs. 11 and 12), machine learning in RS (Ref. 13), manifold learning (Ref. 14), endmember extraction (Ref. 15), and spectral unmixing (Refs. 16–20).

Traditional FL methods can work quite well, but (1) they require a high level of expertise and very specific domain knowledge to create the hand-crafted features; (2) the proposed solutions are sometimes fragile, that is, they work well on the data being analyzed but do not perform well on new data; (3) sophisticated methods may be required to properly handle irregular or complicated decision surfaces; and (4) shallow systems that learn hierarchical features can become very complex.

In contrast, (1) DL approaches learn from the data itself, which means that the expertise for feature engineering is replaced (partially or completely) by the DL; (2) DL has state-of-the-art results in many domains, and these results are usually significantly better than those of shallow approaches; and (3) DL can in some instances outperform humans and human-coded features.

However, there are also considerations when adopting DL approaches: (1) many DL systems have a large number of parameters and require a significant amount of training data; (2) choosing the optimal architecture and training it optimally are still open questions in the DL community; (3) there is still a steep learning curve if one wants to really understand the math and operations of DL systems; (4) it is hard to comprehend what is going on “under the hood” of DL systems; and (5) adapting very successful DL architectures to RS imagery analysis can be challenging.

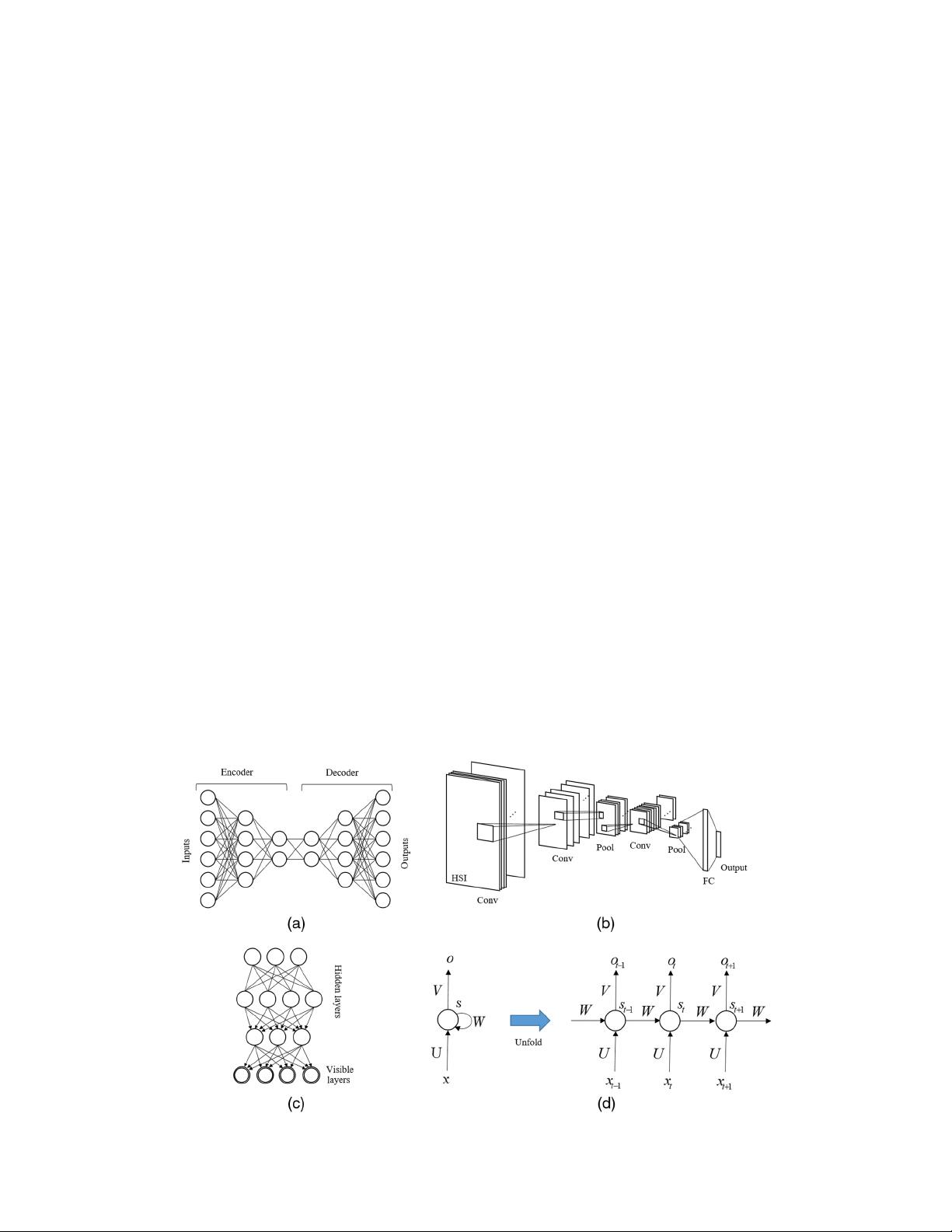

2.2 DL Approaches

To date, the autoencoder (AE), convolutional neural network (CNN), deep belief network (DBN), and recurrent NN (RNN) have been the four mainstream DL architectures. Of these architectures, the CNN is the most popular and the most published to date. The deconvolutional NN (DeconvNet) (Refs. 21 and 22) is a relative newcomer to the DL community. The following sections discuss each of these architectures at a high level, and many references are provided for the interested reader.

2.2.1 Autoencoder

An AE is an NN that is used for unsupervised learning of efficient codings from unlabeled data. The AE's codings often reveal useful features in unlabeled data. One of the first AE applications was dimensionality reduction, which is required in many RS applications. One advantage of using an AE with RS data is that the data do not need to be labeled. In an AE, reducing the size


of the adjacent layers forces the AE to learn a compact representation of the data. The AE maps the input through an encoder function f to generate an internal (latent) representation, or code, h. The AE also has a decoder function g that maps h to the output $\hat{x}$. Let an input vector to an AE be $x \in \mathbb{R}^d$. In a simple one-hidden-layer case, the encoder is

$$h = f(x) = \sigma(Wx + b),$$

where W is the learned weight matrix, b is a bias vector, and $\sigma(\cdot)$ is an elementwise activation function. The decoder then maps the latent representation to a reconstruction, or approximation, of the input via

$$\hat{x} = g(h) = \sigma'(W'h + b'),$$

where W′ and b′ are the decoding weight and bias, respectively, and $\sigma'(\cdot)$ is the decoder activation. Usually, the encoding and decoding weight matrices are tied (shared), so that $W' = W^T$, where T is the matrix transpose operator.
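The one-hidden-layer encoder f and decoder g described above can be sketched in plain Python as follows. This is an illustrative sketch under stated assumptions, not the authors' implementation: it assumes a sigmoid activation in both the encoder and the decoder, tied weights (W′ = Wᵀ), and hypothetical sizes d = 4 and K = 2 with randomly initialized, untrained weights. The helper names (`encode`, `decode`, `matvec`, `transpose`) are hypothetical.

```python
import math
import random

def sigmoid(z):
    # Elementwise activation (assumed here; other activations are possible)
    return 1.0 / (1.0 + math.exp(-z))

def matvec(W, v):
    # W is a list of rows; returns the matrix-vector product W v
    return [sum(w_ij * v_j for w_ij, v_j in zip(row, v)) for row in W]

def transpose(W):
    # Returns W^T as a list of rows
    return [list(col) for col in zip(*W)]

def encode(x, W, b):
    # h = f(x) = sigmoid(W x + b); W has shape K x d
    return [sigmoid(z + b_i) for z, b_i in zip(matvec(W, x), b)]

def decode(h, W, b_prime):
    # x_hat = g(h) = sigmoid(W' h + b') with tied weights W' = W^T (shape d x K)
    return [sigmoid(z + b_j) for z, b_j in zip(matvec(transpose(W), h), b_prime)]

random.seed(0)
d, K = 4, 2  # hypothetical input size and code size (K < d forces compression)
W = [[random.uniform(-0.5, 0.5) for _ in range(d)] for _ in range(K)]
b = [0.0] * K
b_prime = [0.0] * d

x = [0.2, 0.9, 0.4, 0.1]  # hypothetical input vector
h = encode(x, W, b)
x_hat = decode(h, W, b_prime)
print(len(h), len(x_hat))  # 2 4
```

Choosing K < d is what forces the compact representation; training the weights (e.g., by gradient descent on a reconstruction loss) is omitted from this sketch.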

In general, the AE is constrained, through its architecture, a sparsity constraint, or both, to learn a useful mapping rather than the trivial identity mapping. A loss function L measures how well the AE reconstructs its input: L is a function of x and $\hat{x} = g(f(x))$.

For example, a commonly used loss function is the squared reconstruction error, $L(x, \hat{x}) = \|x - \hat{x}\|_2^2$, which is proportional to the mean squared error and penalizes the reconstruction for differing from the input.

A regularization function Ω(h) can also be added to the loss function, for example, to encourage a sparser solution. The regularization function can involve penalty terms for model complexity, prior information about the model, penalties based on derivatives, or penalties based on other criteria such as supervised classification results (see Sec. 14.2 of Ref. 23). Regularization is typically used with a deep encoder, not a shallow one. Regularization encourages the AE to have properties other than just reconstructing the input, such as a sparse representation, robustness to noise, or constrained derivatives of the representation. Arpit et al. (Ref. 24) show that denoising and contractive AEs that also contain activations such as sigmoids or rectified linear units (ReLUs), which are common in AEs, satisfy conditions sufficient to encourage sparsity. A common practice is to add a weighted regularizing term to the optimization objective, such as the $\ell_1$ norm of the code:

$$L(x, \hat{x}) + \lambda \|h\|_1, \qquad \|h\|_1 = \sum_{i=1}^{K} |h_i|,$$

where K is the dimensionality of h and λ controls how strongly the regularization affects the optimization process. This optimization can be solved using alternating optimization over W and h.
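The regularized objective can be evaluated as in the following minimal sketch, assuming the squared reconstruction error and an ℓ1 penalty on the code h weighted by λ (`lam`). It only computes the objective for fixed values; it does not perform the alternating optimization over W and h mentioned above, and the function names and numbers are hypothetical.

```python
def squared_error(x, x_hat):
    # L(x, x_hat) = ||x - x_hat||_2^2; divide by len(x) for the mean squared error
    return sum((xi - xhi) ** 2 for xi, xhi in zip(x, x_hat))

def l1_norm(h):
    # ||h||_1 = sum over the K code units of |h_i|
    return sum(abs(hi) for hi in h)

def regularized_loss(x, x_hat, h, lam):
    # Reconstruction term plus a lambda-weighted sparsity penalty on the code h
    return squared_error(x, x_hat) + lam * l1_norm(h)

x = [1.0, 0.0, 2.0]        # hypothetical input (d = 3)
x_hat = [0.5, 0.0, 1.5]    # hypothetical reconstruction
h = [0.3, -0.2]            # hypothetical code (K = 2)
lam = 0.1                  # regularization weight lambda

loss = regularized_loss(x, x_hat, h, lam)
print(round(loss, 4))  # 0.55  (0.5 reconstruction + 0.1 * 0.5 penalty)
```

Increasing `lam` trades reconstruction accuracy for a sparser code; with `lam = 0` the objective reduces to the plain reconstruction loss.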

A denoising autoencoder (DAE) is an AE designed to remove noise from a signal or an image. Chen et al. (Ref. 25) developed an efficient DAE that marginalizes the noise and has a computationally efficient closed-form solution. To provide robustness, the system is trained on inputs corrupted with additive Gaussian noise or binary masking noise (forcing some percentage of the inputs to zero). Many RS applications use an AE for denoising. Figure 1(a) shows an example of an AE; its diabolo (hourglass) shape results in dimensionality reduction.
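The two corruption processes mentioned above can be sketched as follows. This is a generic stochastic-corruption sketch, not the marginalized closed-form DAE of Chen et al.; the noise level `sigma`, the drop fraction, the input values, and the function names are hypothetical choices for illustration.

```python
import random

def gaussian_corrupt(x, sigma, rng):
    # Additive Gaussian noise: each input gets an independent N(0, sigma^2) sample added
    return [xi + rng.gauss(0.0, sigma) for xi in x]

def masking_corrupt(x, drop_fraction, rng):
    # Binary masking noise: force a randomly chosen fraction of the inputs to zero
    n_drop = int(round(drop_fraction * len(x)))
    dropped = set(rng.sample(range(len(x)), n_drop))
    return [0.0 if i in dropped else xi for i, xi in enumerate(x)]

rng = random.Random(42)  # fixed seed so the corruption is reproducible
x = [0.2, 0.9, 0.4, 0.1, 0.7, 0.5, 0.3, 0.8]  # hypothetical clean input
x_noisy = gaussian_corrupt(x, sigma=0.1, rng=rng)
x_masked = masking_corrupt(x, drop_fraction=0.25, rng=rng)
print(sum(1 for v in x_masked if v == 0.0))  # 2
```

During training, the DAE receives the corrupted vector as its input, but its reconstruction loss is computed against the clean x; this is what pushes it to learn noise-robust features.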

Fig. 1 Block diagrams of DL architectures: (a) AE, (b) CNN, (c) DBN, and (d) RNN.

