RIFE: Real-Time Intermediate Flow Estimation for Video Frame Interpolation

Zhewei Huang¹, Tianyuan Zhang¹, Wen Heng¹, Boxin Shi², Shuchang Zhou¹

¹Megvii Inc, ²Peking University

{huangzhewei, zhangtianyuan, hengwen, zsc}@megvii.com, shiboxin@pku.edu.cn

Abstract

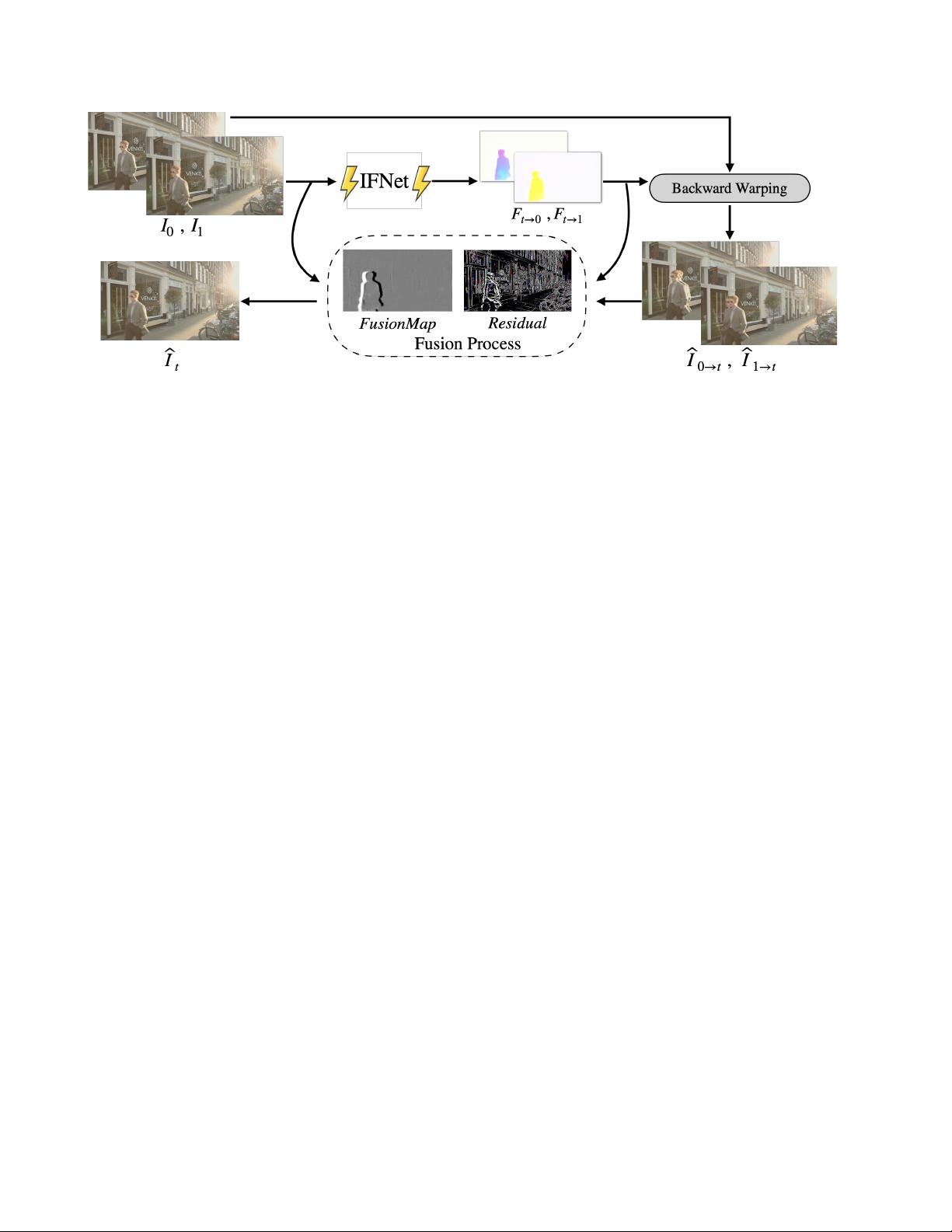

We propose RIFE, a Real-time Intermediate Flow Estimation algorithm for Video Frame Interpolation (VFI). Most existing methods first estimate the bi-directional optical flows and then linearly combine them to approximate intermediate flows, leading to artifacts on motion boundaries. RIFE uses a neural network named IFNet that can directly estimate the intermediate flows from images. With more precise flows and our simplified fusion process, RIFE improves interpolation quality while running much faster. Based on our proposed leakage distillation loss, RIFE can be trained in an end-to-end fashion. Experiments demonstrate that our method is significantly faster than existing VFI methods and achieves state-of-the-art performance on public benchmarks. The code is available at https://github.com/hzwer/arXiv2020-RIFE.

1. Introduction

Video Frame Interpolation (VFI) aims to synthesize intermediate frames between two consecutive frames of a video and is widely used to increase the frame rate and enhance visual quality. VFI also supports various applications such as slow-motion generation, video compression [31], and training data generation for video motion deblurring [4]. Moreover, VFI algorithms that run in real time on high-resolution videos (e.g., 720p and 1080p) have many more potential applications, such as playing higher-frame-rate videos in client-side players and providing video editing services to users with limited computing resources.

VFI is challenging due to the complex, large non-linear

motions and illumination changes in the real world. Flow-

based VFI algorithms have recently offered a framework

to address these challenges and achieved impressive re-

sults [17, 22, 35, 2]. Common approaches for these methods

involve two steps: 1) warping the input frames according to

approximated optical flows and 2) fusing and refining the

warped frames using a bunch of Convolutional Neural Net-

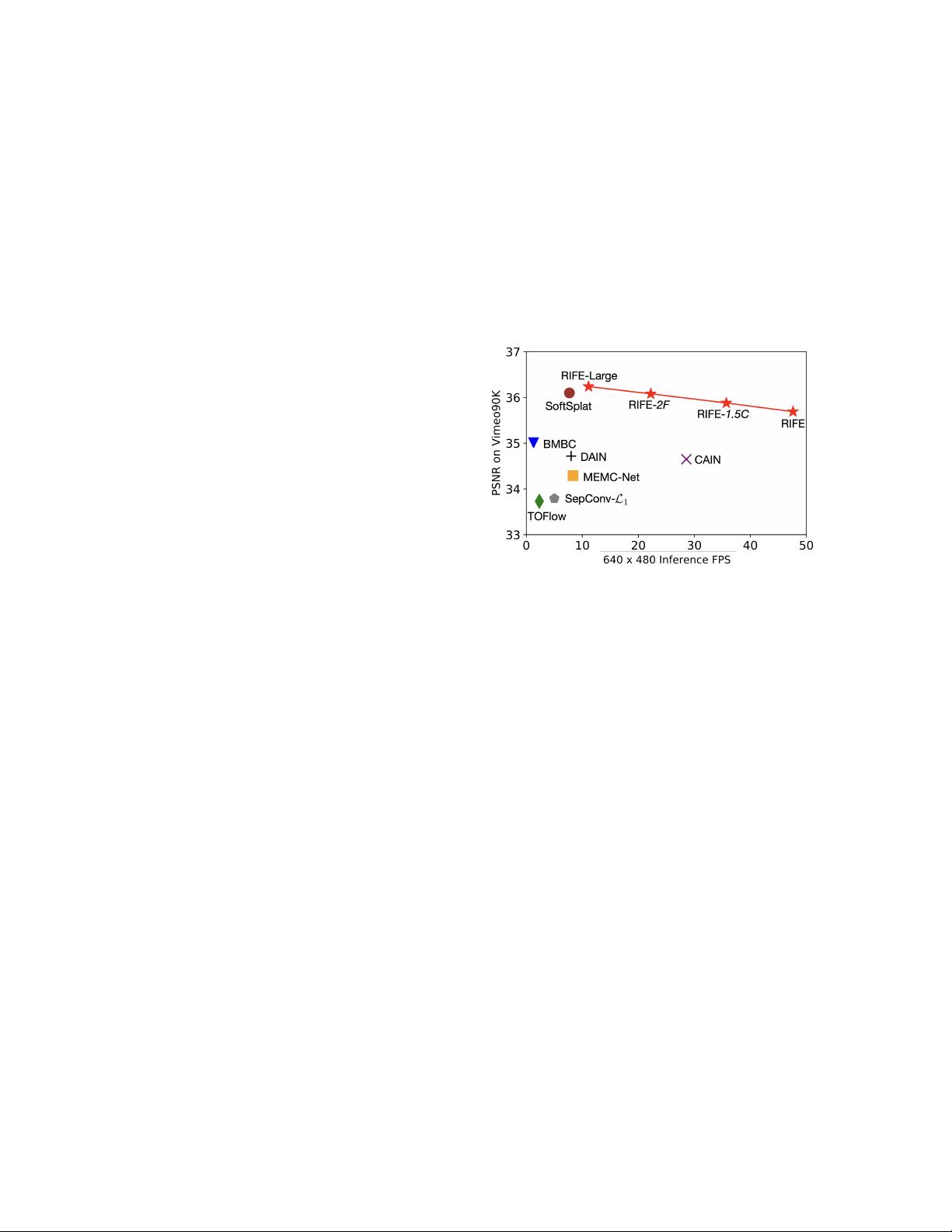

Figure 1: Speed and accuracy trade-off by adjusting

model size parameters C and F . We compare our models

with prior VFI methods including TOFlow [35], SepConv-

L

1

[24], MEMC-Net [3], DAIN [2], CAIN [8], Soft-

Splat [23] and BMBC [26] on the Vimeo90K testing set.

works (CNNs).
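Below is a minimal sketch of the two-step recipe above. It assumes grayscale images stored as nested Python lists and intermediate flows that are already given. The learned CNN fusion and refinement is replaced by a fixed per-pixel blending mask. The helper names (`bilinear_sample`, `backward_warp`, `fuse`, `interpolate`) are hypothetical and are not taken from the RIFE code.

```python
from typing import List, Tuple

Image = List[List[float]]               # grayscale image indexed as img[y][x]
Flow = List[List[Tuple[float, float]]]  # per-pixel (dx, dy) on the target grid


def bilinear_sample(img: Image, x: float, y: float) -> float:
    """Bilinearly sample img at a real-valued (x, y), clamping to the border."""
    h, w = len(img), len(img[0])
    x = min(max(x, 0.0), w - 1.0)
    y = min(max(y, 0.0), h - 1.0)
    x0, y0 = int(x), int(y)  # equals floor, since x and y are now non-negative
    x1, y1 = min(x0 + 1, w - 1), min(y0 + 1, h - 1)
    ax, ay = x - x0, y - y0
    top = (1 - ax) * img[y0][x0] + ax * img[y0][x1]
    bottom = (1 - ax) * img[y1][x0] + ax * img[y1][x1]
    return (1 - ay) * top + ay * bottom


def backward_warp(img: Image, flow: Flow) -> Image:
    """Step 1: every target pixel p pulls its value from img at p + flow[p]."""
    return [
        [bilinear_sample(img, x + flow[y][x][0], y + flow[y][x][1])
         for x in range(len(img[0]))]
        for y in range(len(img))
    ]


def fuse(w0: Image, w1: Image, mask: Image) -> Image:
    """Step 2 (simplified): per-pixel blend mask * w0 + (1 - mask) * w1."""
    # Assumption: a fixed mask stands in for the learned CNN fusion/refinement.
    return [
        [m * a + (1 - m) * b for a, b, m in zip(r0, r1, rm)]
        for r0, r1, rm in zip(w0, w1, mask)
    ]


def interpolate(i0: Image, i1: Image, f_t0: Flow, f_t1: Flow, mask: Image) -> Image:
    """Warp I0 with F_{t->0} and I1 with F_{t->1}, then fuse the two results."""
    return fuse(backward_warp(i0, f_t0), backward_warp(i1, f_t1), mask)
```

Here is a concrete case. A bright pixel sits at x = 1 in $I_0$ and at x = 3 in $I_1$. With constant flows $F_{t\to 0} = -1$ and $F_{t\to 1} = +1$ (pixels along x) and a mask of 0.5, the sketch places the pixel at x = 2, as expected for t = 0.5. In the methods discussed here, this fusion and refinement is learned by CNNs rather than fixed by hand.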

Depending on how they warp frames, flow-based VFI algorithms can be classified into forward-warping-based methods and backward-warping-based methods. Backward warping is more widely used because forward warping lacks a unified and efficient implementation and suffers from conflicts when multiple source pixels are mapped to the same location, which leads to overlapping pixels and holes.
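A one-dimensional toy comparison makes the holes and conflicts concrete. It assumes integer-valued flows so that no interpolation is needed. `forward_warp_1d` and `backward_warp_1d` are hypothetical helpers written only for this illustration. Practical forward-warping methods resolve collisions with weighting schemes (e.g., softmax splatting in SoftSplat [23]) rather than the naive last-write-wins rule used here.

```python
from typing import List, Optional, Tuple


def forward_warp_1d(
    src: List[float], flow: List[int]
) -> Tuple[List[Optional[float]], List[int]]:
    """Push src[x] to x + flow[x]; the flow is indexed by SOURCE positions."""
    n = len(src)
    out: List[Optional[float]] = [None] * n  # None marks a hole
    hits = [0] * n                           # number of writes per target pixel
    for x, (value, d) in enumerate(zip(src, flow)):
        tx = x + d
        if 0 <= tx < n:
            hits[tx] += 1
            out[tx] = value  # naive rule: the last write wins on collisions
    return out, hits


def backward_warp_1d(src: List[float], flow: List[int]) -> List[float]:
    """Pull out[x] from src[x + flow[x]]; the flow is indexed by TARGET positions."""
    n = len(src)
    return [src[min(max(x + d, 0), n - 1)] for x, d in enumerate(flow)]
```

With `src = [10, 20, 30, 40]` and forward flow `[1, 0, 0, 1]`, two source pixels land on position 1 and positions 0 and 3 receive nothing, so forward warping returns `[None, 20, 30, None]`. Backward warping fills every target pixel by construction. It can do so only because its flow is indexed by target positions, which is why backward-warping methods need intermediate flows.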

Given the input frames $I_0$ and $I_1$, backward-warping-based methods need to approximate the intermediate flows $F_{t\to 0}$ and $F_{t\to 1}$ from the perspective of the frame $I_t$ that we expect to synthesize. Common practice [17, 34, 2] first computes bi-directional flows with pre-trained, off-the-shelf optical flow models and then linearly combines them. This combination, however, fails on motion boundaries, where the same location can be occupied by different objects in the two frames. Consequently, previous VFI methods share two major drawbacks:

1) To reduce the artifacts caused by linearly combining optical flows, previous methods usually need to approximate additional representations, e.g., image depth [2] and intermediate flow refinement [17]. Coupled with the high complexity of bi-directional flow estimation,


arXiv:2011.06294v2 [cs.CV] 17 Nov 2020
