
This manuscript combines the analysis of our earlier works [36], [37] and extends them in a number of ways.

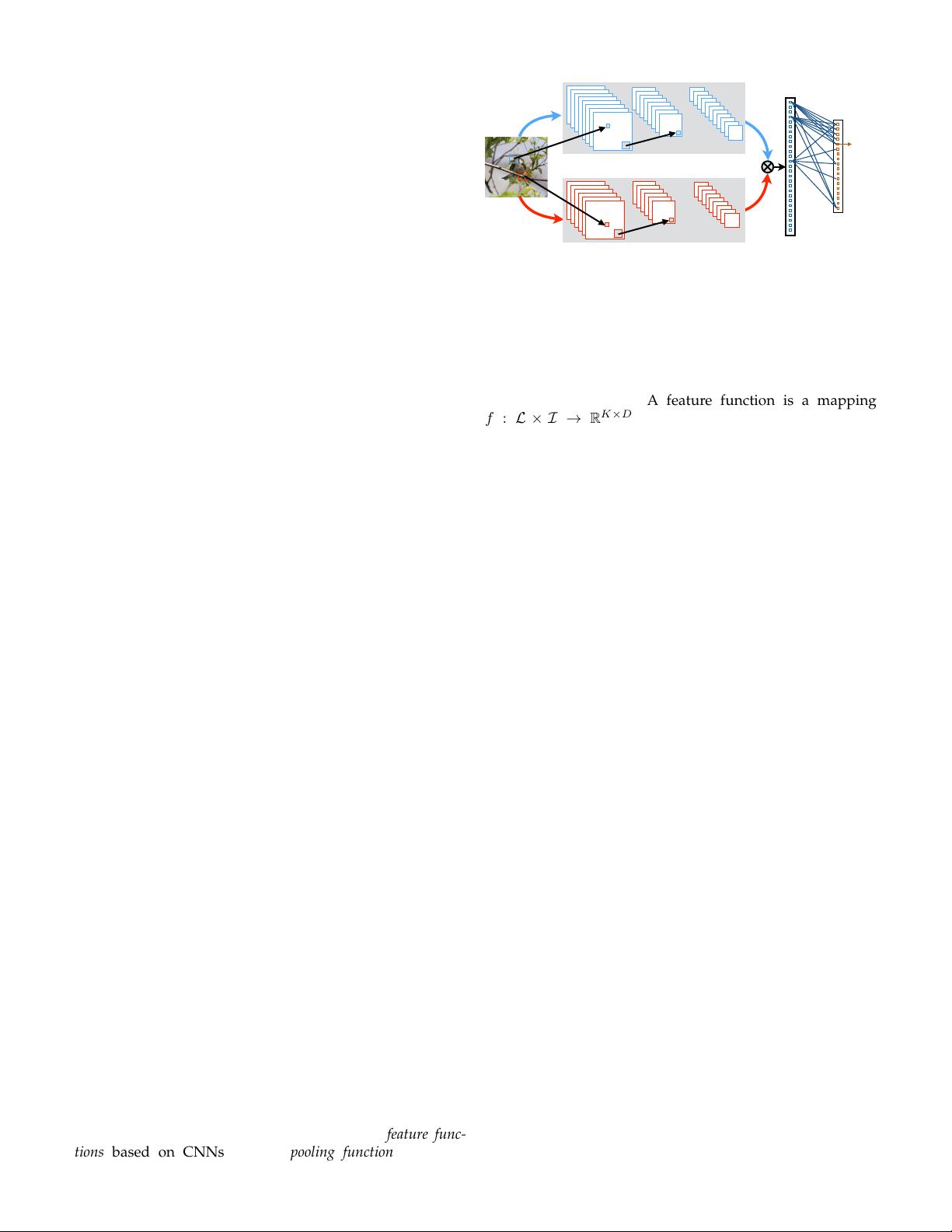

We present an account of related work, including extensions published subsequently (Section 2). We describe the B-CNN architecture (Section 3) and present a unified analysis of exact and approximate end-to-end trainable formulations of Second-Order Pooling (O2P), Fisher Vector (FV), Vector-of-Locally-Aggregated Descriptors (VLAD), and Bag-of-Visual-Words (BoVW) in terms of their accuracy on a variety of fine-grained recognition datasets (Sections 3.2-4). We show that the approach is general-purpose and is effective at other image classification tasks such as material, texture, and scene recognition (Section 4). We present a detailed analysis of dimensionality reduction techniques and provide trade-off curves between accuracy and dimensionality for different models, including a direct comparison with the recently proposed compact bilinear pooling technique [19] (Section 5.1). Moreover, unlike prior texture representations based on networks pre-trained on the ImageNet dataset, B-CNNs can be trained from scratch and offer consistent improvements over the baseline architecture with a modest increase in computational cost (Section 5.2). Finally, we visualize the top activations of several units in the learned models and apply the gradient-based technique of Mahendran and Vedaldi [41] to visualize inverse images on various texture and scene datasets (Section 5.3). We have released the complete source code for the system at http://vis-www.cs.umass.edu/bcnn.

2 RELATED WORK

Fine-grained recognition techniques. After AlexNet’s [34] impressive performance on the ImageNet classification challenge, several authors (e.g., [13], [52]) demonstrated that features extracted from layers of a CNN are effective at fine-grained recognition tasks. Building on prior work on part-based techniques (e.g., [5], [15], [71]), Zhang et al. [70] and Branson et al. [6] demonstrated the benefits of combining CNN-based part detectors [23] and CNN-based features for fine-grained recognition tasks. Other approaches use segmentation to guide part discovery in a weakly-supervised manner and train part-based models [31]. Among the non-part-based techniques, texture descriptors such as FV and VLAD have traditionally been effective for fine-grained recognition. For example, the top-performing method in the FGCOMP’12 challenge used a SIFT-based FV representation [25].

Recent improvements in deep architectures have also resulted in improvements in fine-grained recognition. These include architectures with increased depth, such as the “deep” [9] and “very deep” [59] networks from Oxford’s VGG group, Inception networks [60], and “ultra deep” residual networks [26]. Spatial Transformer Networks [29] augment CNNs with parameterized image transformations and are highly effective at fine-grained recognition tasks. Other techniques augment CNNs with “attention” mechanisms that allow focused reasoning on regions of an image [4], [43]. B-CNNs can be viewed as an implicit spatial attention model, since the outer product modulates one feature based on the other, similar to the multiplicative feature interactions in attention mechanisms. Although not directly comparable, Krause et al. [32] showed that the accuracy of deep networks can be improved significantly by using two orders of magnitude more training data, obtained by querying category labels on search engines. Recently, Moghimi et al. [44] showed that boosting B-CNNs offers consistent improvements on fine-grained tasks.
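The modulation view can be checked at a single image location. The following pure-Python sketch is an illustration only, not the B-CNN implementation; the helper `outer` and the feature values are hypothetical. It shows that row i of the outer product of two feature vectors is the second vector scaled by the i-th entry of the first, so one stream multiplicatively gates the other.

```python
from typing import List

Vector = List[float]
Matrix = List[List[float]]


def outer(a: Vector, b: Vector) -> Matrix:
    """Outer product a b^T: entry (i, j) equals a[i] * b[j]."""
    return [[ai * bj for bj in b] for ai in a]


# Hypothetical features produced by two streams A and B at one image location.
f_a = [0.0, 2.0, 0.5]
f_b = [1.0, 0.5, 3.0, 4.0]

x = outer(f_a, f_b)

# Row i is f_b gated by the scalar f_a[i]: a zero entry in f_a
# suppresses f_b entirely, while a large entry amplifies it.
for i, row in enumerate(x):
    print(f"f_a[{i}] = {f_a[i]}: {row}")
```

Because the gating is symmetric, the columns of `x` can equally be read as `f_a` gated by the entries of `f_b`.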

Texture representations and second-order features. Texture representations have been widely studied for decades. Early work [35] represented texture by computing the statistics of linear filter-bank responses (e.g., wavelets and steerable pyramids). The use of second-order features of filter-bank responses was pioneered by Portilla and Simoncelli [50]. Recent variants such as FV [46] and O2P [8] with SIFT were shown to be highly effective for image classification and semantic segmentation [14] tasks, respectively.

The advantages of combining orderless texture representations and deep features have been studied in a number of recent works. Gong et al. [24] performed multi-scale orderless pooling of CNN features for scene classification. Cimpoi et al. [11] performed a systematic analysis of texture representations by replacing linear filter-banks with non-linear filter-banks derived from a CNN, and showed that this results in significant improvements on various texture, scene, and fine-grained recognition tasks. They found that orderless aggregation of CNN features was more effective than the commonly-used fully-connected layers on these tasks. However, a drawback of these approaches is that the filter banks are not trained in an end-to-end manner. Our work is also related to the cross-layer pooling approach of Liu et al. [38], who showed that second-order aggregation of features from two different layers of a CNN is effective at fine-grained recognition. Our work showed that feature normalization and domain-specific fine-tuning offer additional benefits, improving the accuracy from 77.0% to 84.1% using identical networks on the Caltech-UCSD Birds dataset [67]. Another subsequently published work of interest is the NetVLAD architecture [3], which provides an end-to-end trainable approximation of VLAD. The approach was applied to the problem of image-based geolocation. We include a comparison of NetVLAD to other texture representations in Section 4.
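Orderless second-order aggregation of features from two streams, followed by feature normalization, can be sketched as below. This is a minimal pure-Python illustration under stated assumptions, not the released implementation. The helper names `orderless_bilinear` and `normalize` and the toy features are hypothetical, and the particular normalization (signed square root followed by L2 normalization) is an assumption chosen for illustration, since this section does not specify one.

```python
import math
from typing import List

Vector = List[float]


def orderless_bilinear(fa: List[Vector], fb: List[Vector]) -> Vector:
    """Average f_a(l) f_b(l)^T over locations l and flatten the result.

    fa[l] and fb[l] are the two streams' features at location l. Summing over
    locations discards spatial layout, so the output does not depend on the
    order in which locations are visited (up to floating-point rounding).
    """
    n = len(fa)
    d_a, d_b = len(fa[0]), len(fb[0])
    pooled = [0.0] * (d_a * d_b)
    for a, b in zip(fa, fb):
        for i in range(d_a):
            for j in range(d_b):
                pooled[i * d_b + j] += a[i] * b[j] / n
    return pooled


def normalize(x: Vector) -> Vector:
    """Assumed normalization: signed square root, then L2 normalization."""
    y = [math.copysign(math.sqrt(abs(v)), v) for v in x]
    norm = math.sqrt(sum(v * v for v in y))
    return [v / norm for v in y] if norm > 0 else y


# Hypothetical features at 3 locations: 2 channels in stream A, 3 in stream B
# (standing in for two different CNN layers, as in cross-layer pooling).
fa = [[1.0, 0.0], [0.0, 2.0], [1.0, 1.0]]
fb = [[0.5, 1.0, 0.0], [2.0, 0.0, 1.0], [1.0, 1.0, 1.0]]

z = normalize(orderless_bilinear(fa, fb))

# Shuffle the locations (jointly in both streams): the descriptor is unchanged.
perm = [2, 0, 1]
z_perm = normalize(orderless_bilinear([fa[k] for k in perm], [fb[k] for k in perm]))
print(all(math.isclose(u, v, abs_tol=1e-12) for u, v in zip(z, z_perm)))
```

The tolerance in the final comparison accounts only for the different summation order; the pooled descriptor itself has no notion of where each location was in the image.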

Texture synthesis and style transfer. Concurrently with our work, Gatys et al. showed that the Gram matrix of CNN features is an effective texture representation, and that by matching the Gram matrix of a target image one can create novel images with the same texture [20] and transfer styles [21]. While the Gram matrix is identical to a pooled bilinear representation when the two features are the same, the emphasis of our work is recognition and not generation. This distinction is important since Ustyuzhaninov et al. [63] showed that the Gram matrix of a shallow CNN with random filters is sufficient for texture synthesis, while discriminative pre-training and subsequent fine-tuning are essential to achieve high performance for recognition.
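The equivalence between the Gram matrix and a pooled bilinear representation with identical streams can be checked directly. In this pure-Python sketch, the function names and the toy feature map are hypothetical. A feature map is stored as C channels by L locations, and both sides use plain sums over locations. Implementations that average instead of summing only rescale the result.

```python
from typing import List

Matrix = List[List[float]]


def gram(F: Matrix) -> Matrix:
    """Gram matrix G = F F^T for a C x L feature map (C channels, L locations)."""
    return [[sum(fi[l] * fj[l] for l in range(len(fi))) for fj in F] for fi in F]


def bilinear_pool(FA: Matrix, FB: Matrix) -> Matrix:
    """Sum over locations l of the outer product f_A(l) f_B(l)^T."""
    n_loc = len(FA[0])
    out = [[0.0] * len(FB) for _ in FA]
    for l in range(n_loc):
        for i in range(len(FA)):
            for j in range(len(FB)):
                out[i][j] += FA[i][l] * FB[j][l]
    return out


# Hypothetical 2-channel feature map over 3 spatial locations.
F = [[1.0, 2.0, 0.0],
     [0.5, -1.0, 3.0]]

# With both streams set to the same features, pooled bilinear == Gram matrix.
assert bilinear_pool(F, F) == gram(F)
print(gram(F))
```

With two different feature maps, `bilinear_pool` is no longer symmetric in general, which is the case the recognition models in this work also cover.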

Polynomial kernels and sum-product networks. An alternative strategy for combining features from two networks is to concatenate them and learn their pairwise interactions through a series of layers on top. However, doing this naively requires a large number of parameters, since there are $O(n^2)$ interactions over $O(n)$ features, requiring a layer with $O(n^3)$ parameters. Our explicit representation using an outer product has no parameters and is similar to a
