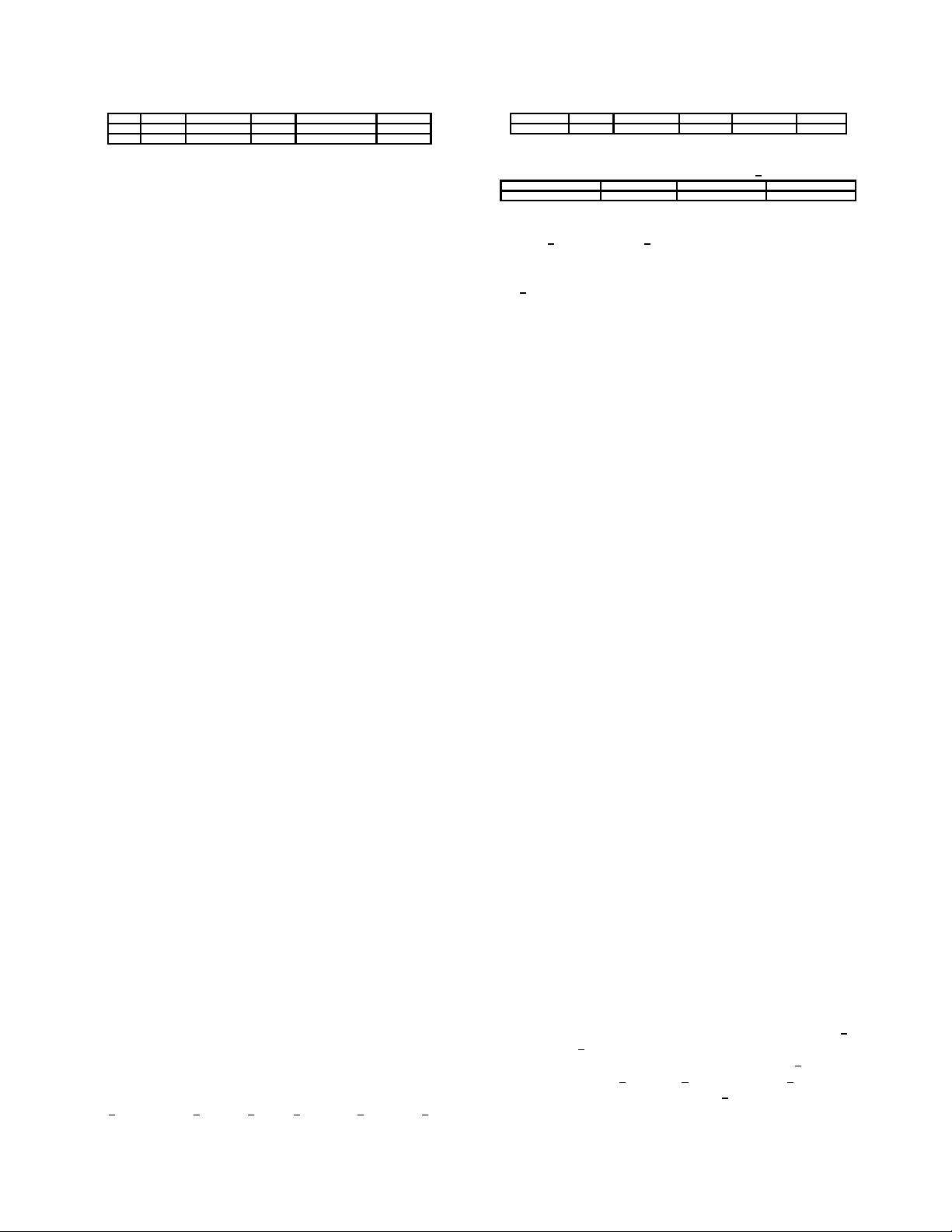

Table 1: Statistics of training and testing data

| data | #users | #merchants | #pairs | #positive pairs | positive% |
|---|---|---|---|---|---|
| train | 212,062 | 1,993 | 260,864 | 15,952 | 6.12% |
| test | 212,108 | 1,993 | 261,477 | 16,037 | 6.13% |

because it is domain-specific, while machine learning algorithms are largely general-purpose. Much trial and error can go into feature design, which is typically where most of the effort in a machine learning project goes [8]. While thousands of classification algorithms have been proposed and studied in the research community, little work has been reported on feature engineering for prediction tasks in e-commerce. Therefore, this paper focuses on feature engineering. We describe how to generate various types of features from user activity log data and study the importance of these features through extensive experiments. The features we generated can be used in a wide range of e-commerce applications, such as customer segmentation, product recommendation, and customer base augmentation for brands. We hope that our work will be valuable to data science practitioners who need to develop solutions for prediction tasks in e-commerce.

The rest of the paper is organized as follows. Section 2 describes the problem. Section 3 describes the features we generated. Section 4 briefly describes the model ensemble. In Section 5, we study the importance of the features and list the top features. Finally, Section 6 concludes the paper.

2. PROBLEM DESCRIPTION

For the repeat buyer prediction competition, the following data are provided, as shown at the top of Figure 1: demographic information of users, six months of user activity log data prior to the “Double 11” promotion, and training and testing ⟨new buyer, merchant⟩ pairs, where the new buyer's first purchase from the merchant was made during the “Double 11” promotion. The user demographic data contain the age and gender of users; the age values are divided into seven ranges. The class label of a training ⟨new buyer, merchant⟩ pair is known, and it indicates whether the new buyer bought items from the merchant again within six months after the “Double 11” promotion. The class labels of the testing ⟨new buyer, merchant⟩ pairs are hidden, and the task is to predict them. The competition was carried out in two stages. In stage 1, all the data were released to the contestants except for the class labels of the testing pairs, which were released after stage 1. Stage 2 ran on Alibaba's cloud platform with larger data, and those data were not released. Therefore, in this paper we focus on the data of stage 1.
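The labeling rule for a ⟨new buyer, merchant⟩ pair can be written as a small function. This is a sketch under stated assumptions: the post-promotion purchase records it takes as input are exactly the data the contestants never received, the tuple layout and the name `label_pair` are hypothetical, and "within six months" is approximated as 183 days because the text gives no exact window.

```python
from datetime import date, timedelta
from typing import Iterable, Tuple

# Assumption: the text says "within six months"; 183 days is an approximation.
SIX_MONTHS = timedelta(days=183)

# Hypothetical record layout for purchases made after the promotion:
# (user_id, merchant_id, purchase_date).
Purchase = Tuple[int, int, date]


def label_pair(user_id: int, merchant_id: int, promo_day: date,
               later_purchases: Iterable[Purchase]) -> int:
    """Return 1 if the new buyer bought from the merchant again after the
    promotion day and within the six-month window, otherwise 0."""
    window_end = promo_day + SIX_MONTHS
    for u, m, day in later_purchases:
        if u == user_id and m == merchant_id and promo_day < day <= window_end:
            return 1
    return 0


if __name__ == "__main__":
    # Illustrative ids and dates only, not taken from the competition data.
    promo = date(2014, 11, 11)
    purchases = [(1, 10, date(2015, 1, 5)), (2, 10, date(2015, 9, 1))]
    print(label_pair(1, 10, promo, purchases))  # 1: repeat purchase inside the window
    print(label_pair(2, 10, promo, purchases))  # 0: purchase after the window closed
```

A purchase on the promotion day itself is treated as the first purchase, not a repeat, which is why the comparison with `promo_day` is strict.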

Table 1 shows the statistics of the training and testing data. The sets of merchants in the training and testing data are identical except for a single merchant. The users in the training and testing data do not overlap. The second-to-last column is the number of positive ⟨new buyer, merchant⟩ pairs, i.e., pairs in which the new buyer bought items from the merchant again within six months. The last column is the percentage of such positive pairs. Only about 6% of the pairs are positive, which indicates that most new buyers are indeed one-time deal hunters.
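The percentages in Table 1 and the two properties of the splits described above can be checked with a few lines of Python. The counts are copied from Table 1; the `(user_id, merchant_id)` pair layout and the function names are assumptions made for illustration.

```python
from typing import Iterable, Set, Tuple

Pair = Tuple[int, int]  # hypothetical layout: (user_id, merchant_id)

# (#pairs, #positive pairs) copied from Table 1.
TABLE1 = {"train": (260_864, 15_952), "test": (261_477, 16_037)}


def positive_rate(n_pairs: int, n_positive: int) -> float:
    """Percentage of <new buyer, merchant> pairs that are repeat buyers."""
    return 100.0 * n_positive / n_pairs


def split_overlap(train: Iterable[Pair], test: Iterable[Pair]) -> Tuple[Set[int], Set[int]]:
    """Return (users present in both splits, merchants present in only one split)."""
    train, test = list(train), list(test)
    train_users = {u for u, _ in train}
    test_users = {u for u, _ in test}
    train_merchants = {m for _, m in train}
    test_merchants = {m for _, m in test}
    return train_users & test_users, train_merchants ^ test_merchants


if __name__ == "__main__":
    for split, (n_pairs, n_positive) in TABLE1.items():
        print(f"{split}: {positive_rate(n_pairs, n_positive):.2f}%")
```

On the Table 1 counts this prints 6.12% and 6.13%, matching the last column. Per the text, on the real splits the first set returned by `split_overlap` would be empty and the second would contain only the merchant(s) that differ between the splits.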

The user activity log data contains the following fields:

user

id, merchant id, item id, cat id, brand id, action type

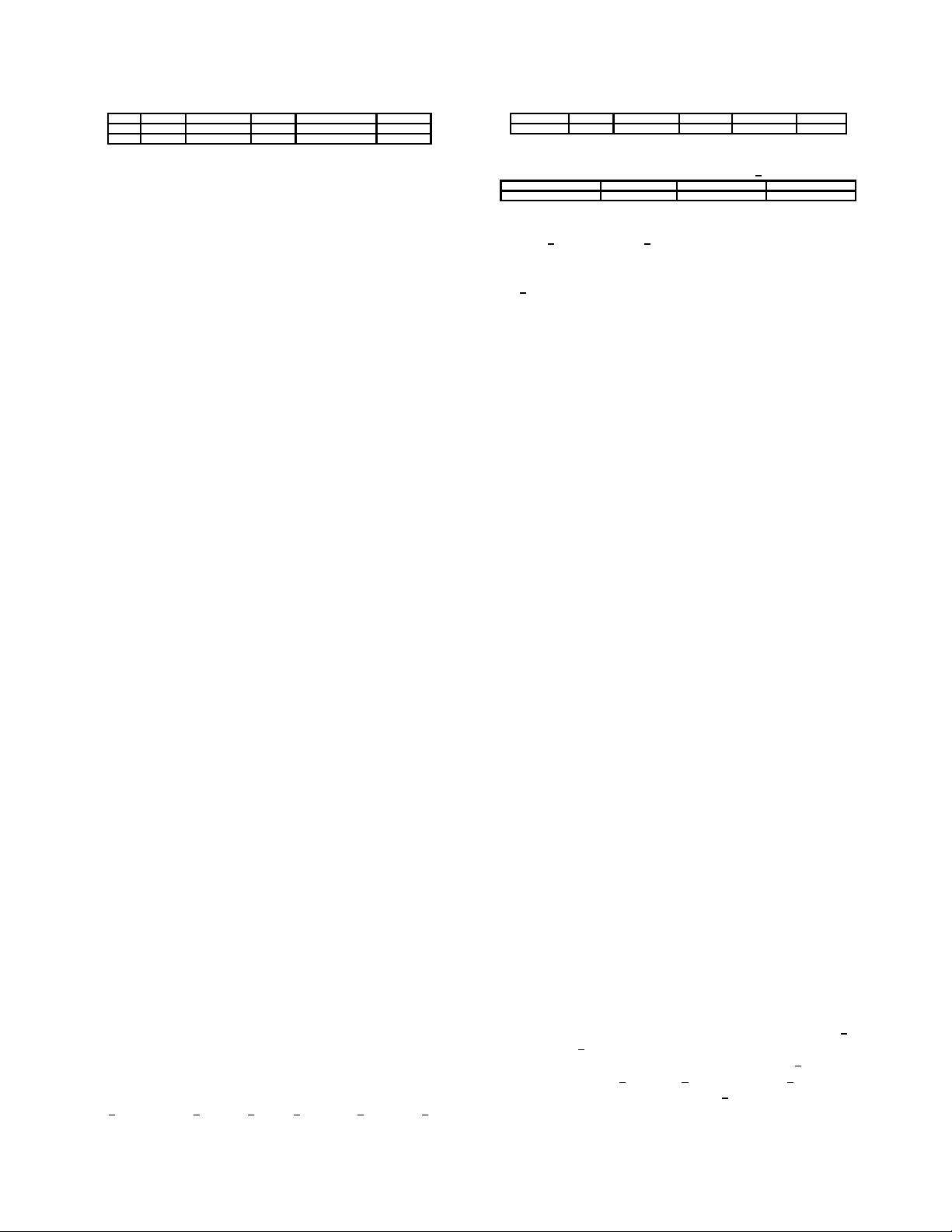

Table 2: Statistics of log activity data

#rows #users #merchants #items #categories #brands

54,925,330 424,170 4,995 1,090,390 1,658 8,444

Table 3: Statistics of action types

click add-to-cart purchase add-to-favourite

48,550,713 (88.39%) 76,750 (0.14%) 3,292,144 (5.99%) 3,005,723 (5.47%)

and time stamp. Action type takes four values: 0 for click,

1 for add-to-cart, 2 for purchase and 3 for add-to-favourite.
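The log fields and action codes described above can be captured in a small typed record. This is a sketch, not the competition's loader: it assumes the columns appear in the order the text lists them and that all ids are integers; `LogRecord` and `parse_row` are hypothetical names, and the time stamp is kept as raw text because its encoding is not described here.

```python
from dataclasses import dataclass
from enum import IntEnum
from typing import Sequence


class ActionType(IntEnum):
    """Action codes as listed in the text."""
    CLICK = 0
    ADD_TO_CART = 1
    PURCHASE = 2
    ADD_TO_FAVOURITE = 3


@dataclass(frozen=True)
class LogRecord:
    user_id: int
    merchant_id: int
    item_id: int
    cat_id: int
    brand_id: int
    action_type: ActionType
    time_stamp: str  # raw text; its encoding is not specified in the text


def parse_row(fields: Sequence[str]) -> LogRecord:
    """Parse one log row, assuming the column order listed in the text
    (user, merchant, item, category, brand, action type, time stamp)."""
    user_id, merchant_id, item_id, cat_id, brand_id, action, time_stamp = fields
    return LogRecord(int(user_id), int(merchant_id), int(item_id), int(cat_id),
                     int(brand_id), ActionType(int(action)), time_stamp)


if __name__ == "__main__":
    # Illustrative values only.
    record = parse_row(["1", "10", "100", "7", "5", "2", "1111"])
    print(record.action_type.name)  # PURCHASE
```

Using `IntEnum` keeps the codes comparable with the raw integers while rejecting any value outside 0 to 3 with a `ValueError`.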

Products sold by different merchants are assigned different item ids, even if the products are exactly the same. Table 2 shows the statistics of the user activity log data. Many merchants in the log data do not have new buyers in the training or testing data. They are included in the log data because some new buyers visited them. The activities of the new buyers at these merchants are valuable for inferring the preferences and habits of the new buyers.
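Statistics of the kind reported in Table 2, and the set of merchants that appear in the log but not among the training/testing pairs, can be computed directly from the log records. A minimal sketch over plain dictionaries; the field names are assumed to mirror the log fields listed earlier, and the function names are hypothetical.

```python
from typing import Dict, Iterable, List, Set, Tuple

# Field names assumed to mirror the log fields listed in the text.
ID_FIELDS = ("user_id", "merchant_id", "item_id", "cat_id", "brand_id")


def log_statistics(log: List[Dict[str, int]]) -> Dict[str, int]:
    """Number of rows plus the number of distinct values of each id field."""
    stats = {"rows": len(log)}
    for field in ID_FIELDS:
        stats[field] = len({row[field] for row in log})
    return stats


def merchants_without_pairs(log: Iterable[Dict[str, int]],
                            pairs: Iterable[Tuple[int, int]]) -> Set[int]:
    """Merchants seen in the log that have no <new buyer, merchant> pair."""
    paired = {m for _, m in pairs}
    return {row["merchant_id"] for row in log} - paired
```

On the full log, `log_statistics` would produce the quantities in Table 2, and `merchants_without_pairs` would list the merchants that are present only because new buyers visited them.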

Table 3 shows the number of actions of each of the four types. The majority of actions are clicks. Add-to-cart actions are very rare (0.14%), so we merge them with click actions.
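Merging add-to-cart into click amounts to remapping one action code before any counting is done. The sketch below applies that mapping to the Table 3 counts; the code-to-count dictionary is copied from the table, and `merge_add_to_cart` is a hypothetical helper name.

```python
from collections import Counter

# Counts copied from Table 3, keyed by action code:
# 0 = click, 1 = add-to-cart, 2 = purchase, 3 = add-to-favourite.
TABLE3 = {0: 48_550_713, 1: 76_750, 2: 3_292_144, 3: 3_005_723}

CLICK, ADD_TO_CART = 0, 1


def merge_add_to_cart(action_type: int) -> int:
    """Map add-to-cart onto click; leave every other action code unchanged."""
    return CLICK if action_type == ADD_TO_CART else action_type


merged: Counter = Counter()
for code, n in TABLE3.items():
    merged[merge_add_to_cart(code)] += n

total = sum(merged.values())
for code in sorted(merged):
    print(code, merged[code], f"{100 * merged[code] / total:.2f}%")
```

After the merge, clicks account for 48,627,463 of the 54,925,330 log rows (88.53%), while the purchase and add-to-favourite shares are unchanged.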

The user activity log data provided in this competition are typical of e-commerce prediction tasks. However, the log data are not in a form that is amenable to learning. We need to construct new features from them and then join these features with the training and testing data. In the next section, we describe how we do this.
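The construct-then-join step can be sketched with the standard library alone: aggregate the log into per-(user, merchant) action counts, then left-join those counts onto the pairs so that pairs without any logged activity receive zeros. The tuple layouts, the choice of action codes (after merging add-to-cart into click) and the function names are assumptions for illustration, not the paper's pipeline.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Sequence, Tuple

# Hypothetical layouts: log rows are (user_id, merchant_id, action_type) and
# pairs are (user_id, merchant_id). Action codes assume add-to-cart was merged
# into click, leaving 0 = click, 2 = purchase, 3 = add-to-favourite.
ACTIONS = (0, 2, 3)


def user_merchant_counts(
        log: Iterable[Tuple[int, int, int]]) -> Dict[Tuple[int, int], Dict[int, int]]:
    """Aggregate the raw log into action counts per (user, merchant)."""
    counts = defaultdict(lambda: defaultdict(int))
    for user_id, merchant_id, action_type in log:
        counts[(user_id, merchant_id)][action_type] += 1
    return counts


def join_features(pairs: Iterable[Tuple[int, int]],
                  counts: Dict[Tuple[int, int], Dict[int, int]],
                  actions: Sequence[int] = ACTIONS) -> List[Tuple[int, ...]]:
    """Left-join the counts onto the pairs; pairs with no activity get zeros."""
    rows = []
    for user_id, merchant_id in pairs:
        # dict.get does not trigger the defaultdict factory, so lookups
        # for unseen pairs leave `counts` unchanged.
        c = counts.get((user_id, merchant_id), {})
        rows.append((user_id, merchant_id, *(c.get(a, 0) for a in actions)))
    return rows
```

A left join (rather than an inner join) matters here: a pair whose user has no logged activity at that merchant must still appear in the training or testing data, just with zero counts.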

3. FEATURE ENGINEERING

The user activity log data contain five entities: users, merchants, brands, categories and items. The characteristics of these entities and their interactions can be predictive of the class labels. For example, users are more likely to buy again within six months from a merchant selling snacks than from a merchant selling electronic products, since snacks are cheaper and are consumed much faster than electronic products. We generated a large number of features to describe the characteristics of the five types of entities and their pairwise interactions. In the rest of this section, we first give an overview of all the generated features, and then describe the features in detail.

3.1 Overview of features and profiles

The features we generated range from basic counts to complex features such as similarity scores, trends, PCA (Principal Component Analysis) features and LDA (Latent Dirichlet Allocation) features. All the features of an entity form the profile of that entity. We have five entity profiles and five interaction profiles, as shown at the bottom of Figure 1. Table 4 summarizes the types of features contained in these profiles. User-merchant interaction is the most important of the five pairwise interaction profiles, because the task is to predict whether a user will return to a merchant to buy again. Therefore, the user-merchant profile contains more features than the other interaction profiles.
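To illustrate the simplest feature type, basic counts, the hypothetical `count_profile` below builds one profile per entity, or per combination of entities when given several key fields (for example, user and merchant for an interaction profile). It covers only per-action counts; the similarity, trend, PCA and LDA features mentioned above are not attempted, and the record fields and feature names are assumptions.

```python
from collections import Counter, defaultdict
from typing import Dict, Hashable, List, Sequence

ENTITY_FIELDS = ("user_id", "merchant_id", "brand_id", "cat_id", "item_id")
# Action codes after merging add-to-cart (1) into click (0).
FEATURE_NAMES = {0: "click_count", 2: "purchase_count", 3: "favourite_count"}


def count_profile(log: List[Dict[str, int]],
                  keys: Sequence[str]) -> Dict[Hashable, Dict[str, int]]:
    """Per-entity (one key) or per-interaction (several keys) action counts."""
    if not keys:
        raise ValueError("at least one entity field is required")
    for key in keys:
        if key not in ENTITY_FIELDS:
            raise ValueError(f"unknown entity field: {key}")
    counts: Dict[Hashable, Counter] = defaultdict(Counter)
    for row in log:
        action = 0 if row["action_type"] == 1 else row["action_type"]
        entity = row[keys[0]] if len(keys) == 1 else tuple(row[k] for k in keys)
        counts[entity][action] += 1
    # Counter returns 0 for action codes never seen for an entity.
    return {entity: {name: c[code] for code, name in FEATURE_NAMES.items()}
            for entity, c in counts.items()}
```

Calling `count_profile(log, ["merchant_id"])` gives a merchant profile keyed by merchant id, while `count_profile(log, ["user_id", "merchant_id"])` gives a user-merchant interaction profile keyed by `(user_id, merchant_id)` tuples.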

The original training/testing data contain only user ids and merchant ids, as shown at the top of Figure 1. We expanded the training/testing data by adding the age range and gender of users, item id, brand id, and category id, as shown in the middle of Figure 1, where item id is the id of the item bought by the user from the merchant on the “Double 11” day,
