Something unknown is doing we don’t know what.

– Sir Arthur Eddington

1 Introduction

Intelligence is a multifaceted and elusive concept that has long challenged psychologists, philosophers, and computer scientists. An attempt to capture its essence was made in 1994 by a group of 52 psychologists who signed onto a broad definition published in an editorial about the science of intelligence [Got97]. The consensus group defined intelligence as a very general mental capability that, among other things, involves the ability to reason, plan, solve problems, think abstractly, comprehend complex ideas, learn quickly and learn from experience. This definition implies that intelligence is not limited to a specific domain or task, but rather encompasses a broad range of cognitive skills and abilities. Building an artificial system that exhibits the kind of general intelligence captured by the 1994 consensus definition is a long-standing and ambitious goal of artificial intelligence (AI) research. In early writings, the founders of the modern discipline of AI research called out sets of aspirational goals for understanding intelligence [MMRS06]. Over the decades, AI researchers have pursued principles of intelligence, including generalizable mechanisms for reasoning (e.g., [NSS59], [LBFL93]) and the construction of knowledge bases containing large corpora of commonsense knowledge [Len95]. However, many of the more recent successes in AI research can be described as narrowly focused on well-defined tasks and challenges, such as playing chess or Go, which were mastered by AI systems in 1996 and 2016, respectively. In the late 1990s and into the 2000s, there were increasing calls for developing more general AI systems (e.g., [SBD+96]), and scholarship in the field has sought to identify principles that might underlie more generally intelligent systems (e.g., [Leg08, GHT15]). The phrase "artificial general intelligence" (AGI) was popularized in the early 2000s (see [Goe14]) to emphasize the aspiration of moving from "narrow AI", as demonstrated in the focused, real-world applications being developed, to broader notions of intelligence, harking back to the long-term aspirations and dreams of earlier AI research. We use AGI to refer to systems that demonstrate broad capabilities of intelligence as captured in the 1994 definition above, with the additional requirement, perhaps implicit in the work of the consensus group, that these capabilities are at or above human level. We note, however, that there is no single definition of AGI that is broadly accepted, and we discuss other definitions in the conclusion section.

The most remarkable breakthrough in AI research of the last few years has been the advancement of

natural language processing achieved by large language models (LLMs). These neural network models are

based on the Transformer architecture [VSP

+

17] and trained on massive corpora of web-text data, using at its

core a self-supervised objective of predicting the next word in a partial sentence. In this paper, we report on

evidence that a new LLM developed by OpenAI, which is an early and non-multimodal version of GPT-4

[Ope23], exhibits many traits of intelligence, according to the 1994 definition. Despite being purely a language

model, this early version of GPT-4 demonstrates remarkable capabilities on a variety of domains and tasks,

including abstraction, comprehension, vision, coding, mathematics, medicine, law, understanding of human

motives and emotions, and more. We interacted with GPT-4 during its early development by OpenAI using

purely natural language queries (prompts)

1

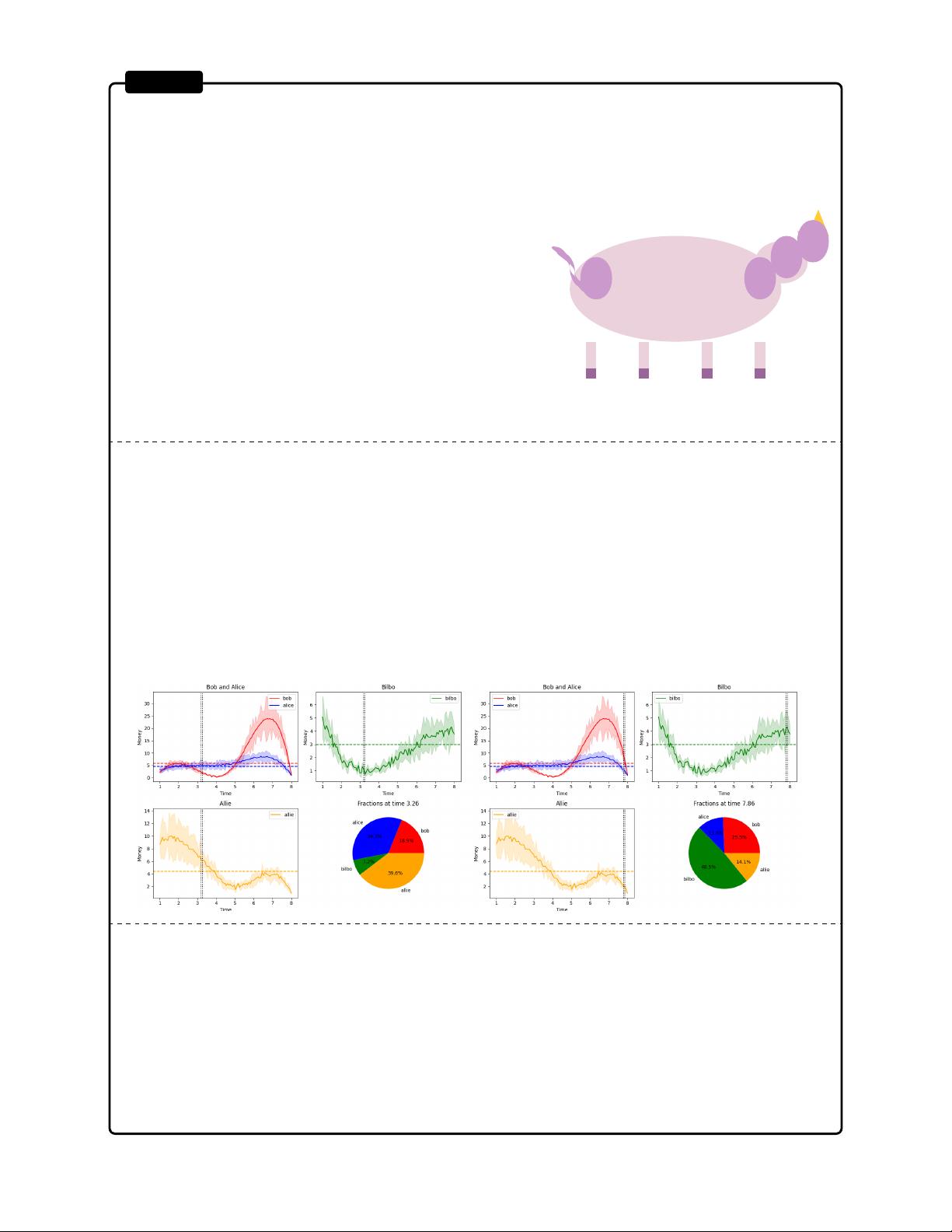

. In Figure 1.1, we display some preliminary examples of outputs

from GPT-4, asking it to write a proof of infinitude of primes in the form of a poem, to draw a unicorn in

TiKZ (a language for creating graphics in L

A

T

E

X), to create a complex animation in Python, and to solve

a high-school level mathematical problem. It easily succeeds at all these tasks, and produces outputs that

are essentially indistinguishable from (or even better than) what humans could produce. We also compare

GPT-4’s performance to those of previous LLMs, most notably ChatGPT, which is a fine-tuned version of (an

improved) GPT-3 [BMR

+

20]. In Figure 1.2, we display the results of asking ChatGPT for both the infini-

tude of primes poem and the TikZ unicorn drawing. While the system performs non-trivially on both tasks,

there is no comparison with the outputs from GPT-4. These preliminary observations will repeat themselves

throughout the paper, on a great variety of tasks. The combination of the generality of GPT-4’s capabilities,

with numerous abilities spanning a broad swath of domains, and its performance on a wide spectrum of tasks

at or beyond human-level, makes us comfortable with saying that GPT-4 is a significant step towards AGI.

¹ As GPT-4's development continued after our experiments, one should expect different responses from the final version of GPT-4. In particular, all quantitative results should be viewed as estimates of the model's potential, rather than definitive numbers. We repeat this caveat throughout the paper to clarify that the experience on the deployed model may differ. Moreover, we emphasize that the version we tested was text-only for inputs, but for simplicity we refer to it as GPT-4 too.

