
The Llama 3 Herd of Models

Llama Team, AI @ Meta¹

¹ A detailed contributor list can be found in the appendix of this paper.

Modern artificial intelligence (AI) systems are powered by foundation models. This paper presents a

new set of foundation models, called Llama 3. It is a herd of language models that natively support

multilinguality, coding, reasoning, and tool usage. Our largest model is a dense Transformer with

405B parameters and a context window of up to 128K tokens. This paper presents an extensive

empirical evaluation of Llama 3. We find that Llama 3 delivers comparable quality to leading language

models such as GPT-4 on a plethora of tasks. We publicly release Llama 3, including pre-trained and

post-trained versions of the 405B parameter language model and our Llama Guard 3 model for input

and output safety. The paper also presents the results of experiments in which we integrate image,

video, and speech capabilities into Llama 3 via a compositional approach. We observe this approach

performs competitively with the state-of-the-art on image, video, and speech recognition tasks. The

resulting models are not yet being broadly released as they are still under development.

Date: July 23, 2024

Website: https://llama.meta.com/

1 Introduction

Foundation models are general models of language, vision, speech, and/or other modalities that are designed

to support a large variety of AI tasks. They form the basis of many modern AI systems.

The development of modern foundation models consists of two main stages: (1) a pre-training stage in which

the model is trained at massive scale using straightforward tasks such as next-word prediction or captioning

and (2) a post-training stage in which the model is tuned to follow instructions, align with human preferences,

and improve specific capabilities (for example, coding and reasoning).

In this paper, we present a new set of foundation models for language, called Llama 3. The Llama 3 Herd

of models natively supports multilinguality, coding, reasoning, and tool usage. Our largest model is a dense

Transformer with 405B parameters, processing information in a context window of up to 128K tokens. Each

member of the herd is listed in Table 1. All the results presented in this paper are for the Llama 3.1 models,

which we will refer to as Llama 3 throughout for brevity.

We believe there are three key levers in the development of high-quality foundation models: data, scale, and

managing complexity. We seek to optimize for these three levers in our development process:

• Data. Compared to prior versions of Llama (Touvron et al., 2023a,b), we improved both the quantity and

quality of the data we use for pre-training and post-training. These improvements include the development

of more careful pre-processing and curation pipelines for pre-training data and the development of more

rigorous quality assurance and filtering approaches for post-training data. We pre-train Llama 3 on a

corpus of about 15T multilingual tokens, compared to 1.8T tokens for Llama 2.

•

Scale. We train a model at far larger scale than previous Llama models: our flagship language model was

pre-trained using 3

.

8

×

10

25

FLOPs, almost 50

×

more than the largest version of Llama 2. Specifically,

we pre-trained a flagship model with 405B trainable parameters on 15.6T text tokens. As expected per

1

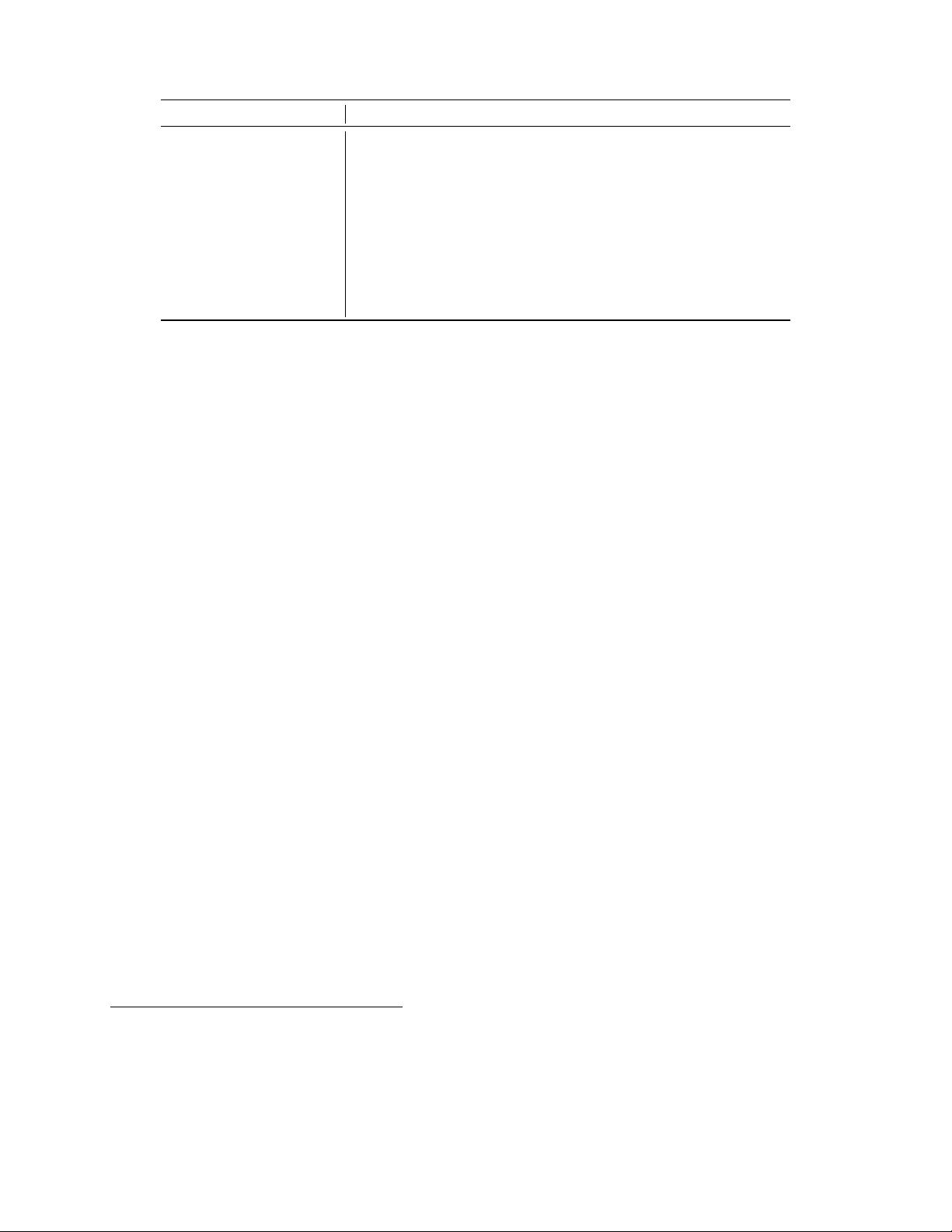

Finetuned Multilingual Long context Tool use Release

Llama 3 8B 7 7

1

7 7 April 2024

Llama 3 8B Instruct 3 7 7 7 April 2024

Llama 3 70B 7 7

1

7 7 April 2024

Llama 3 70B Instruct 3 7 7 7 April 2024

Llama 3.1 8B 7 3 3 7 July 2024

Llama 3.1 8B Instruct 3 3 3 3 July 2024

Llama 3.1 70B 7 3 3 7 July 2024

Llama 3.1 70B Instruct 3 3 3 3 July 2024

Llama 3.1 405B 7 3 3 7 July 2024

Llama 3.1 405B Instruct 3 3 3 3 July 2024

Table 1 Overview of the Llama 3 Herd of models. All results in this paper are for the Llama 3.1 models.

scaling laws for foundation models, our flagship model outperforms smaller models trained using the

same procedure. While our scaling laws suggest our flagship model is an approximately compute-optimal

size for our training budget, we also train our smaller models for much longer than is compute-optimal.

The resulting models perform better than compute-optimal models at the same inference budget. We

use the flagship model to further improve the quality of those smaller models during post-training.

• Managing complexity. We make design choices that seek to maximize our ability to scale the model

development process. For example, we opt for a standard dense Transformer model architecture (Vaswani

et al., 2017) with minor adaptations, rather than for a mixture-of-experts model (Shazeer et al., 2017)

to maximize training stability. Similarly, we adopt a relatively simple post-training procedure based

on supervised finetuning (SFT), rejection sampling (RS), and direct preference optimization (DPO;

Rafailov et al. (2023)) as opposed to more complex reinforcement learning algorithms (Ouyang et al.,

2022; Schulman et al., 2017) that tend to be less stable and harder to scale.
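As a rough sanity check on the compute figure quoted in the Scale item above, the sketch below applies the widely used rule of thumb that training a dense Transformer costs about 6 FLOPs per parameter per token. The rule of thumb and the function name `approx_training_flops` are assumptions made for illustration; the text itself only states the parameter count, the token count, and the total FLOPs.

```python
# Back-of-the-envelope estimate of pre-training compute.
# Assumption (not from the text): C ≈ 6 * N * D for a dense Transformer,
# where N is the parameter count and D is the number of training tokens.

N_PARAMS = 405e9    # trainable parameters of the flagship model (from the text)
N_TOKENS = 15.6e12  # pre-training text tokens (from the text)


def approx_training_flops(n_params: float, n_tokens: float) -> float:
    """Estimate training FLOPs with the 6 * N * D rule of thumb."""
    return 6.0 * n_params * n_tokens


flagship_flops = approx_training_flops(N_PARAMS, N_TOKENS)
print(f"{flagship_flops:.2e}")  # 3.79e+25
```

Under this approximation the estimate lands very close to the reported $3.8 \times 10^{25}$ FLOPs, which suggests the three quoted numbers are mutually consistent.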

The result of our work is Llama 3: a herd of three multilingual¹ language models with 8B, 70B, and 405B

parameters. We evaluate the performance of Llama 3 on a plethora of benchmark datasets that span a wide

range of language understanding tasks. In addition, we perform extensive human evaluations that compare

Llama 3 with competing models. An overview of the performance of the flagship Llama 3 model on key

benchmarks is presented in Table 2. Our experimental evaluation suggests that our flagship model performs

on par with leading language models such as GPT-4 (OpenAI, 2023a) across a variety of tasks, and is close to

matching the state-of-the-art. Our smaller models are best-in-class, outperforming alternative models with

similar numbers of parameters (Bai et al., 2023; Jiang et al., 2023). Llama 3 also delivers a much better

balance between helpfulness and harmlessness than its predecessor (Touvron et al., 2023b). We present a

detailed analysis of the safety of Llama 3 in Section 5.4.

We are publicly releasing all three Llama 3 models under an updated version of the Llama 3 Community License; see https://llama.meta.com. This includes pre-trained and post-trained versions of our 405B parameter

language model and a new version of our Llama Guard model (Inan et al., 2023) for input and output safety.

We hope that the open release of a flagship model will spur a wave of innovation in the research community,

and accelerate a responsible path towards the development of artificial general intelligence (AGI).

As part of the Llama 3 development process we also develop multimodal extensions to the models, enabling

image recognition, video recognition, and speech understanding capabilities. These models are still under

active development and not yet ready for release. In addition to our language modeling results, the paper

presents results of our initial experiments with those multimodal models.

¹ The Llama 3 8B and 70B were pre-trained on multilingual data but were intended for use in English at the time.


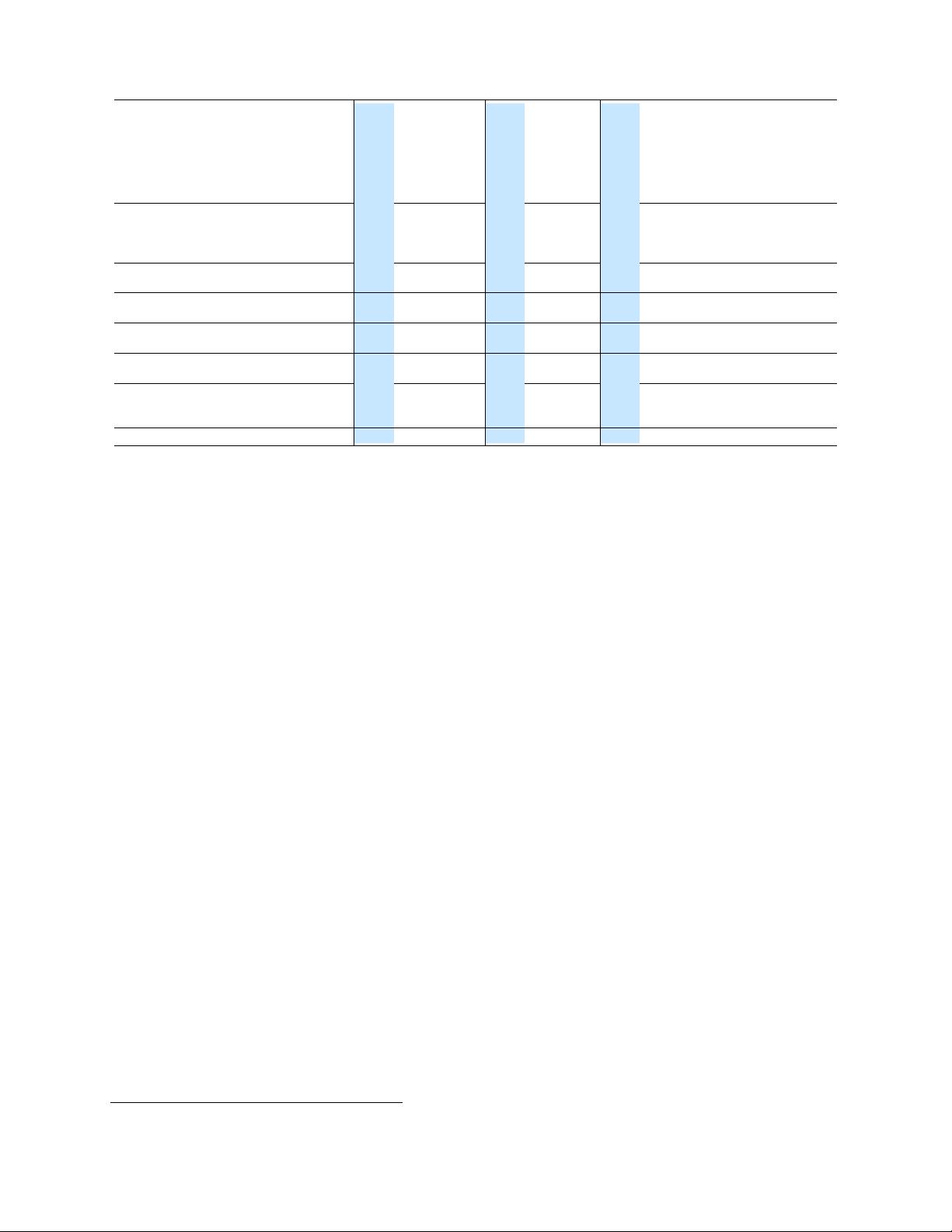

| Category | Benchmark | Llama 3 8B | Gemma 2 9B | Mistral 7B | Llama 3 70B | Mixtral 8x22B | GPT 3.5 Turbo | Llama 3 405B | Nemotron 4 340B | GPT-4 (0125) | GPT-4o | Claude 3.5 Sonnet |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| General | MMLU (5-shot) | 69.4 | 72.3 | 61.1 | 83.6 | 76.9 | 70.7 | 87.3 | 82.6 | 85.1 | 89.1 | 89.9 |
| General | MMLU (0-shot, CoT) | 73.0 | 72.3△ | 60.5 | 86.0 | 79.9 | 69.8 | 88.6 | 78.7◁ | 85.4 | 88.7 | 88.3 |
| General | MMLU-Pro (5-shot, CoT) | 48.3 | – | 36.9 | 66.4 | 56.3 | 49.2 | 73.3 | 62.7 | 64.8 | 74.0 | 77.0 |
| General | IFEval | 80.4 | 73.6 | 57.6 | 87.5 | 72.7 | 69.9 | 88.6 | 85.1 | 84.3 | 85.6 | 88.0 |
| Code | HumanEval (0-shot) | 72.6 | 54.3 | 40.2 | 80.5 | 75.6 | 68.0 | 89.0 | 73.2 | 86.6 | 90.2 | 92.0 |
| Code | MBPP EvalPlus (0-shot) | 72.8 | 71.7 | 49.5 | 86.0 | 78.6 | 82.0 | 88.6 | 72.8 | 83.6 | 87.8 | 90.5 |
| Math | GSM8K (8-shot, CoT) | 84.5 | 76.7 | 53.2 | 95.1 | 88.2 | 81.6 | 96.8 | 92.3♦ | 94.2 | 96.1 | 96.4♦ |
| Math | MATH (0-shot, CoT) | 51.9 | 44.3 | 13.0 | 68.0 | 54.1 | 43.1 | 73.8 | 41.1 | 64.5 | 76.6 | 71.1 |
| Reasoning | ARC Challenge (0-shot) | 83.4 | 87.6 | 74.2 | 94.8 | 88.7 | 83.7 | 96.9 | 94.6 | 96.4 | 96.7 | 96.7 |
| Reasoning | GPQA (0-shot, CoT) | 32.8 | – | 28.8 | 46.7 | 33.3 | 30.8 | 51.1 | – | 41.4 | 53.6 | 59.4 |
| Tool use | BFCL | 76.1 | – | 60.4 | 84.8 | – | 85.9 | 88.5 | 86.5 | 88.3 | 80.5 | 90.2 |
| Tool use | Nexus | 38.5 | 30.0 | 24.7 | 56.7 | 48.5 | 37.2 | 58.7 | – | 50.3 | 56.1 | 45.7 |
| Long context | ZeroSCROLLS/QuALITY | 81.0 | – | – | 90.5 | – | – | 95.2 | – | 95.2 | 90.5 | 90.5 |
| Long context | InfiniteBench/En.MC | 65.1 | – | – | 78.2 | – | – | 83.4 | – | 72.1 | 82.5 | – |
| Long context | NIH/Multi-needle | 98.8 | – | – | 97.5 | – | – | 98.1 | – | 100.0 | 100.0 | 90.8 |
| Multilingual | MGSM (0-shot, CoT) | 68.9 | 53.2 | 29.9 | 86.9 | 71.1 | 51.4 | 91.6 | – | 85.9 | 90.5 | 91.6 |

Table 2 Performance of finetuned Llama 3 models on key benchmark evaluations. The table compares the performance of the 8B, 70B, and 405B versions of Llama 3 with that of competing models. We boldface the best-performing model in each of three model-size equivalence classes. △ Results obtained using 5-shot prompting (no CoT). ◁ Results obtained without CoT. ♦ Results obtained using zero-shot prompting.

2 General Overview

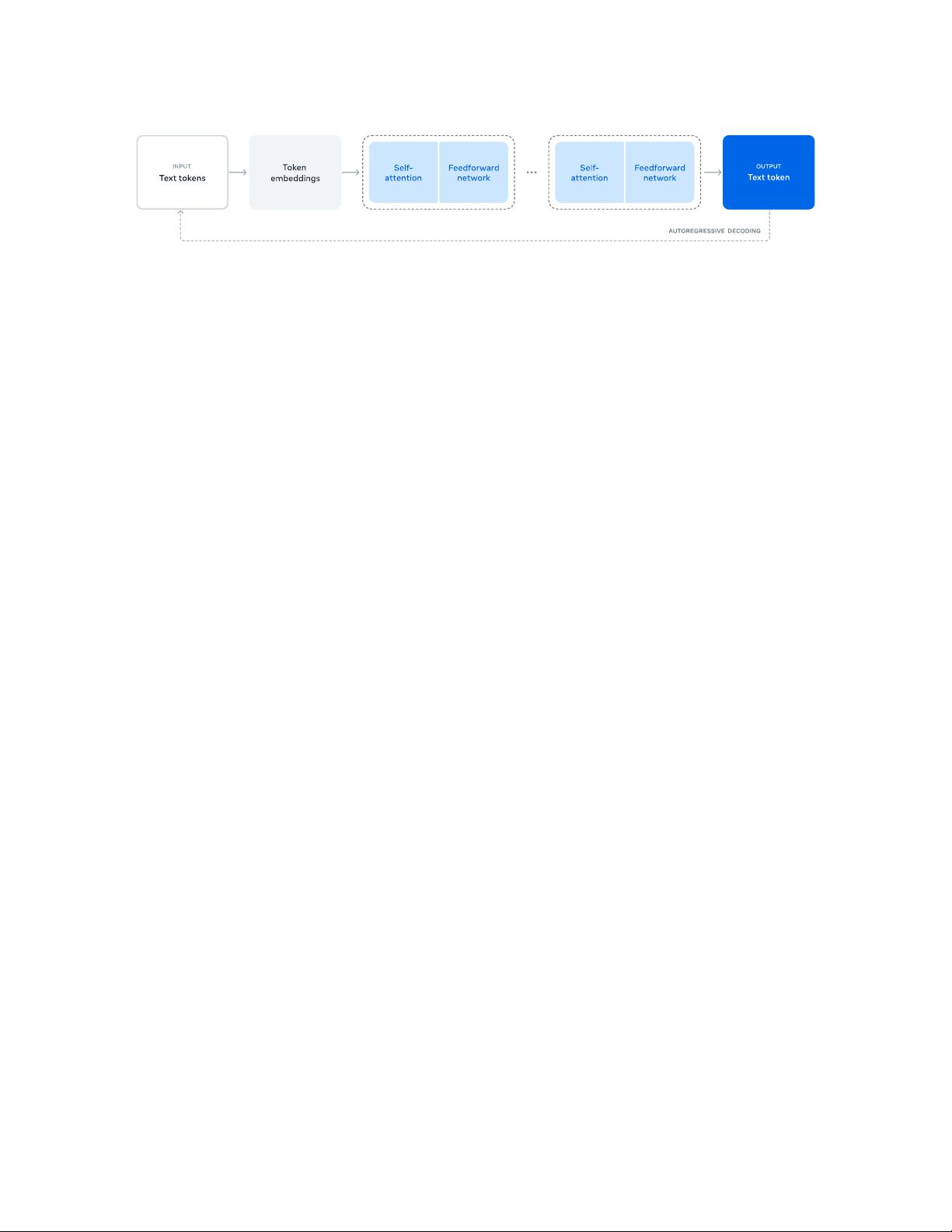

The model architecture of Llama 3 is illustrated in Figure 1. The development of our Llama 3 language

models comprises two main stages:

• Language model pre-training. We start by converting a large, multilingual text corpus to discrete tokens

and pre-training a large language model (LLM) on the resulting data to perform next-token prediction.

In the language model pre-training stage, the model learns the structure of language and obtains large

amounts of knowledge about the world from the text it is “reading”. To do this effectively, pre-training

is performed at massive scale: we pre-train a model with 405B parameters on 15.6T tokens using a

context window of 8K tokens. This standard pre-training stage is followed by a continued pre-training

stage that increases the supported context window to 128K tokens. See Section 3 for details.

• Language model post-training. The pre-trained language model has a rich understanding of language

but it does not yet follow instructions or behave in the way we would expect an assistant to. We

align the model with human feedback in several rounds, each of which involves supervised finetuning

(SFT) on instruction tuning data and Direct Preference Optimization (DPO; Rafailov et al., 2024).

At this post-training² stage, we also integrate new capabilities, such as tool-use, and observe strong

improvements in other areas, such as coding and reasoning. See Section 4 for details. Finally, safety

mitigations are also incorporated into the model at the post-training stage, the details of which are

described in Section 5.4.
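The post-training item above names Direct Preference Optimization as the preference-alignment step. The following is a minimal sketch of the per-example DPO objective as commonly formulated, written over scalar sequence log-probabilities. The function name `dpo_loss`, its argument names, and the default `beta=0.1` are illustrative assumptions and are not taken from this section.

```python
import math


def dpo_loss(policy_chosen_logp: float,
             policy_rejected_logp: float,
             ref_chosen_logp: float,
             ref_rejected_logp: float,
             beta: float = 0.1) -> float:
    """Per-example DPO loss: -log(sigmoid(beta * (chosen_ratio - rejected_ratio))).

    Each argument is the summed log-probability of a full response under
    either the policy being trained or the frozen reference model.
    beta is an illustrative default, not a value stated in this section.
    """
    # How much more (in log space) the policy likes each response than the reference does.
    chosen_logratio = policy_chosen_logp - ref_chosen_logp
    rejected_logratio = policy_rejected_logp - ref_rejected_logp
    margin = beta * (chosen_logratio - rejected_logratio)
    # -log(sigmoid(m)) == log(1 + exp(-m)); branch to avoid overflow for large |m|.
    if margin >= 0:
        return math.log1p(math.exp(-margin))
    return -margin + math.log1p(math.exp(margin))


# The loss falls below log(2) once the policy prefers the chosen response
# more strongly than the reference model does.
print(dpo_loss(-1.0, -3.0, -2.0, -2.0) < math.log(2))  # True
```

The loss only depends on log-probability ratios against a fixed reference model, so no separate reward model or on-policy sampling loop is needed, which is in line with the stated preference for a simpler, more stable procedure.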

The resulting models have a rich set of capabilities. They can answer questions in at least eight languages,

write high-quality code, solve complex reasoning problems, and use tools out-of-the-box or in a zero-shot way.

We also perform experiments in which we add image, video, and speech capabilities to Llama 3 using a

compositional approach. The approach we study comprises the three additional stages illustrated in Figure 28:

• Multi-modal encoder pre-training. We train separate encoders for images and speech. We train our image encoder on large amounts of image-text pairs. This teaches the model the relation between visual content and the description of that content in natural language. Our speech encoder is trained using a self-supervised approach that masks out parts of the speech inputs and tries to reconstruct the masked out parts via a discrete-token representation. As a result, the model learns the structure of speech signals. See Section 7 for details on the image encoder and Section 8 for details on the speech encoder.

² In this paper, we use the term “post-training” to refer to any model training that happens outside of pre-training.

Figure 1 Illustration of the overall architecture and training of Llama 3. Llama 3 is a Transformer language model trained to predict the next token of a textual sequence. See text for details.

• Vision adapter training. We train an adapter that integrates the pre-trained image encoder into the

pre-trained language model. The adapter consists of a series of cross-attention layers that feed image-

encoder representations into the language model. The adapter is trained on text-image pairs. This

aligns the image representations with the language representations. During adapter training, we also

update the parameters of the image encoder but we intentionally do not update the language-model

parameters. We also train a video adapter on top of the image adapter on paired video-text data. This

enables the model to aggregate information across frames. See Section 7 for details.

• Speech adapter training. Finally, we integrate the speech encoder into the model via an adapter that

converts speech encodings into token representations that can be fed directly into the finetuned language

model. The parameters of the adapter and encoder are jointly updated in a supervised finetuning stage

to enable high-quality speech understanding. We do not change the language model during speech

adapter training. We also integrate a text-to-speech system. See Section 8 for details.

Our multimodal experiments lead to models that can recognize the content of images and videos, and support

interaction via a speech interface. These models are still under development and not yet ready for release.

3 Pre-Training

Language model pre-training involves: (1) the curation and filtering of a large-scale training corpus, (2) the

development of a model architecture and corresponding scaling laws for determining model size, (3) the

development of techniques for efficient pre-training at large scale, and (4) the development of a pre-training

recipe. We present each of these components separately below.

3.1 Pre-Training Data

We create our dataset for language model pre-training from a variety of data sources containing knowledge

until the end of 2023. We apply several de-duplication methods and data cleaning mechanisms on each data

source to obtain high-quality tokens. We remove domains that contain large amounts of personally identifiable

information (PII), and domains with known adult content.

3.1.1 Web Data Curation

Much of the data we utilize is obtained from the web and we describe our cleaning process below.

PII and safety filtering. Among other mitigations, we implement filters designed to remove data from websites

that are likely to contain unsafe content or high volumes of PII, domains that have been ranked as harmful

according to a variety of Meta safety standards, and domains that are known to contain adult content.


Text extraction and cleaning. We process the raw HTML content for non-truncated web documents to extract

high-quality diverse text. To do so, we build a custom parser that extracts the HTML content and optimizes

for precision in boilerplate removal and content recall. We evaluate our parser’s quality in human evaluations,

comparing it with popular third-party HTML parsers that optimize for article-like content, and find that it

performs favorably. We carefully process HTML pages with mathematics and code content to preserve

the structure of that content. We maintain the image alt attribute text since mathematical content is often

represented as pre-rendered images where the math is also provided in the alt attribute. We experimentally

evaluate different cleaning configurations. We find markdown is harmful to the performance of a model that

is primarily trained on web data compared to plain text, so we remove all markdown markers.

De-duplication. We apply several rounds of de-duplication at the URL, document, and line level:

• URL-level de-duplication. We perform URL-level de-duplication across the entire dataset. We keep the

most recent version for pages corresponding to each URL.

• Document-level de-duplication. We perform global MinHash (Broder, 1997) de-duplication across the

entire dataset to remove near duplicate documents.

• Line-level de-duplication. We perform aggressive line-level de-duplication similar to ccNet (Wenzek et al., 2019). We remove lines that appeared more than 6 times in each bucket of 30M documents. Although our manual qualitative analysis showed that the line-level de-duplication removes not only leftover boilerplate from various websites, such as navigation menus and cookie warnings, but also frequent high-quality text, our empirical evaluations showed strong improvements.
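The three de-duplication levels listed above can be sketched in a few lines each. This is a toy illustration under stated assumptions, not the pipeline used for Llama 3: the document fields (`url`, `timestamp`, `text`), all function names, the word-shingle size, the number of hash functions, and the use of salted BLAKE2b as the hash family are hypothetical choices. Only the "more than 6 times per bucket" threshold and the "keep the most recent version" rule come from the text.

```python
import hashlib
from collections import Counter


def dedup_by_url(docs):
    """URL-level: keep only the most recent document for each URL."""
    latest = {}
    for doc in docs:
        kept = latest.get(doc["url"])
        if kept is None or doc["timestamp"] > kept["timestamp"]:
            latest[doc["url"]] = doc
    return list(latest.values())


def minhash_signature(text, num_hashes=64, shingle_size=3):
    """Document-level: MinHash signature over word shingles."""
    words = text.split()
    shingles = {
        " ".join(words[i:i + shingle_size])
        for i in range(max(1, len(words) - shingle_size + 1))
    }
    signature = []
    for seed in range(num_hashes):
        salt = str(seed).encode()  # one salted hash function per signature slot
        signature.append(min(
            int.from_bytes(
                hashlib.blake2b(s.encode(), digest_size=8, salt=salt).digest(),
                "big",
            )
            for s in shingles
        ))
    return signature


def estimated_jaccard(sig_a, sig_b):
    """Fraction of matching slots estimates the Jaccard similarity of the shingle sets."""
    return sum(a == b for a, b in zip(sig_a, sig_b)) / len(sig_a)


def dedup_lines(bucket_texts, max_count=6):
    """Line-level: drop lines that occur more than max_count times in the bucket."""
    counts = Counter(
        line.strip()
        for text in bucket_texts
        for line in text.splitlines()
        if line.strip()
    )
    cleaned = []
    for text in bucket_texts:
        kept = [line for line in text.splitlines() if counts[line.strip()] <= max_count]
        cleaned.append("\n".join(kept))
    return cleaned
```

In a document-level pass, pairs whose estimated Jaccard similarity exceeds some chosen threshold would be treated as near duplicates. At the scale described here, signatures would normally be bucketed (for example with locality-sensitive hashing) rather than compared pairwise; that step is omitted from the sketch.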

Heuristic filtering. We develop heuristics to remove additional low-quality documents, outliers, and documents

with excessive repetitions. Some examples of heuristics include:

• We use duplicated n-gram coverage ratio (Rae et al., 2021) to remove lines that consist of repeated content such as logging or error messages. Those lines could be very long and unique, and hence cannot be filtered by line-dedup.

• We use “dirty word” counting (Raffel et al., 2020) to filter out adult websites that are not covered by

domain block lists.

• We use a token-distribution Kullback-Leibler divergence to filter out documents containing excessive

numbers of outlier tokens compared to the training corpus distribution.
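Two of the heuristics above lend themselves to a compact sketch. The code below is illustrative only: the function names, the token-level (rather than character-level) coverage measure, the n-gram size, the direction of the KL divergence, and the probability floor for unseen tokens are all assumptions, since the text does not specify them. Any filtering threshold would have to be tuned empirically.

```python
import math
from collections import Counter


def duplicated_ngram_ratio(tokens, n=3):
    """Fraction of tokens covered by at least one n-gram that occurs more than once."""
    if len(tokens) < n:
        return 0.0
    ngrams = [tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]
    counts = Counter(ngrams)
    covered = [False] * len(tokens)
    for start, gram in enumerate(ngrams):
        if counts[gram] > 1:
            for pos in range(start, start + n):
                covered[pos] = True
    return sum(covered) / len(tokens)


def token_kl_divergence(doc_tokens, corpus_probs, floor=1e-9):
    """KL(document || corpus) over unigram token distributions.

    corpus_probs maps token -> probability in the reference corpus.
    Tokens missing from corpus_probs get a small floor probability (an assumption),
    so documents full of outlier tokens receive a large divergence.
    """
    counts = Counter(doc_tokens)
    total = sum(counts.values())
    kl = 0.0
    for token, count in counts.items():
        p = count / total
        q = corpus_probs.get(token, floor)
        kl += p * math.log(p / q)
    return kl


# A line that repeats the same trigram is fully covered by duplicated n-grams.
print(duplicated_ngram_ratio("a b c a b c".split()))  # 1.0
```

A document would then be dropped when either score exceeds a chosen cutoff; a high n-gram ratio flags repetitive content such as log output, while a high divergence flags token distributions far from the corpus norm.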

Model-based quality filtering. Further, we experiment with applying various model-based quality classifiers

to sub-select high-quality tokens. These include using fast classifiers such as fasttext (Joulin et al., 2017)

trained to recognize if a given text would be referenced by Wikipedia (Touvron et al., 2023a), as well as more

compute-intensive Roberta-based classifiers (Liu et al., 2019a) trained on Llama 2 predictions. To train a

quality classifier based on Llama 2, we create a training set of cleaned web documents, describe the quality

requirements, and instruct Llama 2’s chat model to determine whether the documents meet these requirements. We

use DistilRoberta (Sanh et al., 2019) to generate quality scores for each document for efficiency reasons. We

experimentally evaluate the efficacy of various quality filtering configurations.

Code and reasoning data. Similar to DeepSeek-AI et al. (2024), we build domain-specific pipelines that extract

code and math-relevant web pages. Specifically, both the code and reasoning classifiers are DistilRoberta

models trained on web data annotated by Llama 2. Unlike the general quality classifier mentioned above, we

conduct prompt tuning to target web pages containing math deduction, reasoning in STEM areas and code

interleaved with natural language. Since the token distribution of code and math is substantially different

from that of natural language, these pipelines implement domain-specific HTML extraction, customized text

features and heuristics for filtering.

Multilingual data. Similar to our processing pipelines for English described above, we implement filters to

remove data from websites that are likely to contain PII or unsafe content. Our multilingual text processing

pipeline has several unique features:

• We use a fasttext-based language identification model to categorize documents into 176 languages.

• We perform document-level and line-level de-duplication within data for each language.
