
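Summary: This paper presents a comprehensive survey of collaboration strategies in the era of large language models (LLMs), covering three approaches: merging, ensemble, and cooperation. Merging integrates multiple LLMs in the parameter space into a single, stronger model; ensemble combines the outputs of different LLMs to obtain a coherent result; cooperation draws on the varied capabilities of different LLMs to solve specific tasks. The paper describes the concrete implementations and technical challenges of these three classes of methods and discusses their application prospects. Intended audience: natural language processing researchers, data scientists, and AI system developers. Use cases and goals: research and applications that need to improve the overall performance, efficiency, and versatility of LLMs; specific goals include improving multi-task capability, reducing computational burden, increasing trustworthiness, and reducing hallucination. Other notes: beyond providing theoretical and methodological foundations, the paper outlines future research directions, hoping to promote further development of LLM collaboration.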


Merge, Ensemble, and Cooperate! A Survey on Collaborative Strategies in the Era of Large Language Models

Jinliang Lu$^{1,2*}$, Ziliang Pang$^{1*}$, Min Xiao$^{1,2*}$, Yaochen Zhu$^{3*}$, Rui Xia$^{3}$, Jiajun Zhang$^{1,2,4\dagger}$

$^{1}$ Institute of Automation, Chinese Academy of Sciences, Beijing, China

$^{2}$ School of Artificial Intelligence, University of Chinese Academy of Sciences, Beijing, China

$^{3}$ Nanjing University of Science and Technology, Nanjing, China

$^{4}$ Wuhan AI Research, Wuhan, China

{lujinliang2019, ziliang.pang}@ia.ac.cn, {yczhu, rxia}@njust.edu.cn, {jjzhang, min.xiao}@nlpr.ia.ac.cn

Abstract

The remarkable success of Large Language Models (LLMs) has ushered natural language processing (NLP) research into a new era. Despite their diverse capabilities, LLMs trained on different corpora exhibit varying strengths and weaknesses, leading to challenges in maximizing their overall efficiency and versatility. To address these challenges, recent studies have explored collaborative strategies for LLMs. This paper provides a comprehensive overview of this emerging research area, highlighting the motivation behind such collaborations. Specifically, we categorize collaborative strategies into three primary approaches: Merging, Ensemble, and Cooperation. Merging involves integrating multiple LLMs in the parameter space. Ensemble combines the outputs of various LLMs. Cooperation leverages different LLMs to give full play to their diverse capabilities for specific tasks. We provide in-depth introductions to these methods from different perspectives and discuss their potential applications. Additionally, we outline future research directions, hoping this work will catalyze further studies on LLM collaboration and pave the way for advanced NLP applications.

1 Introduction

"Many hands make light work."

- John Heywood

Human beings have long understood the power of collaboration. When individuals pool their diverse skills and efforts, they can achieve far more than they could alone. This principle of collective effort has found new relevance in the realm of machine learning (Dietterich, 2000; Panait and Luke, 2005; Sagi and Rokach, 2018), significantly boosting the development of artificial intelligence.

In recent years, large language models (LLMs)

(Brown et al., 2020; Chowdhery et al., 2023) have

*

Equal Contribution

†

Corresponding author

PaLM-1/2

Qwen - 1/1.5/2

LLaMA-Chat

LLaMA - 1/2/3

LLaMA-Guard

CodeX

GPT-3

CodeLLaMA

Gemma

Flan-T5

GPT-4

ChatGPT

Qwen-Multilingual

Qwen-Audio

Gemini - 1/1.5

DeepSeek - Coder - 1/2

DeepSeek - Chat- 1/2

Baichuan

ERNIE

Mistral

Codestral

WebGPT

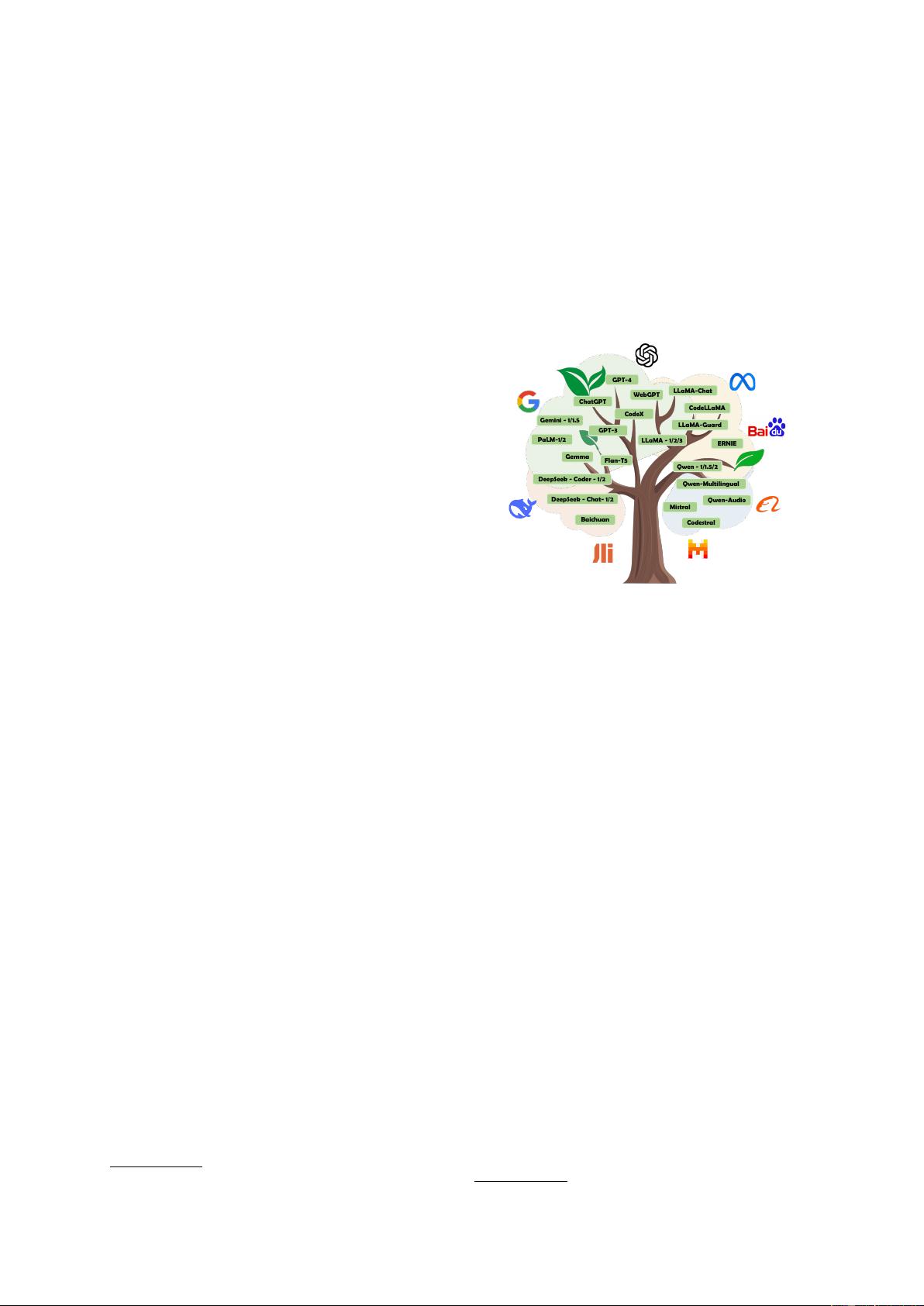

Figure 1: Recently, numerous large language models

have been released, each with its own unique strengths.

This diversity has fueled research into collaboration

between these models.

emerged as one of the most rapidly developing

and promising directions in artificial intelligence.

These models have significantly transformed the

paradigm of natural language processing (NLP)

(Min et al., 2023a; Chang et al., 2024; Zhao et al.,

2023) and influenced other areas (Wu et al., 2023a;

Zhang et al., 2024a). This impressive revolution

has inspired numerous universities, institutes, and

companies to pre-train and release their own LLMs.

Currently, over 74,000 pre-trained models are avail-

able on the HuggingFace model hub

1

. As shown in

Figure 1, these models, trained with diverse data,

architectures, and methodologies, possess unique

capabilities: some are proficient in multilingual

tasks (Le Scao et al., 2023; Lin et al., 2022), others

specialize in domains like medicine (Yang et al.,

2024b) or finance (Wu et al., 2023b), some are

adept at processing long-context windows (Chen

et al., 2023e,f), while others are fine-tuned for

better alignment with human interaction (Ouyang

et al., 2022). However, no single model consis-

tently outperforms all others across tasks (Jiang

1

https://huggingface.co/models

1

arXiv:2407.06089v1 [cs.CL] 8 Jul 2024

et al., 2023a). This variability motivates research

into the collaboration between various LLMs to

unlock their combined potential, akin to creating a

Hexagon Warrior.

Despite progress in LLM collaboration research, the relationships and context among the proposed methods remain unclear. This survey aims to fill that gap by categorizing collaboration techniques into three main approaches: Merging, Ensemble, and Cooperation. Specifically, Merging and Ensemble methods for LLMs are derived from traditional fusion techniques commonly explored in machine learning (Li et al., 2023a). These methods are tailored to be more suitable for LLMs, effectively leveraging the collaborative advantages of diverse LLMs. Merging involves integrating the parameters of multiple LLMs into a single, unified model, requiring that the parameters are compatible within a linear space. In contrast, Ensemble focuses on combining the outputs generated by various LLMs to produce coherent results, with less emphasis on the parameters of the individual models. Cooperation extends beyond merging and ensemble. This survey concentrates on cooperative methods that harness the diverse strengths of LLMs to achieve specific objectives. In general, these techniques expand the methodologies for model collaboration, holding significant research importance for LLMs.

The structure of this work is organized as follows. We begin by providing the background of LLMs and defining collaboration techniques for LLMs in Section 2. Next, we introduce three key categories: Merging in Section 3, Ensemble in Section 4, and Cooperation in Section 5. Each category of methods is thoroughly classified and described in detail, offering a clear understanding of their respective frameworks and applications. Finally, we offer a comprehensive discussion in Section 6, highlighting challenges and future directions for research.

In summary, this study aims to comprehensively explore the strategies and methodologies for collaborative efforts among LLMs. We aspire for this survey to enrich understanding of LLM collaboration strategies and to inspire future research.

2 Background

2.1 Large Language Models

Language modeling has always been a cornerstone of natural language processing (NLP). Recently, many studies have scaled up Transformer-based language models (Vaswani et al., 2017; Radford et al., 2018) to billions of parameters or more, as exemplified by GPT-3 (Brown et al., 2020), PaLM (Chowdhery et al., 2023; Anil et al., 2023), and LLaMA (Touvron et al., 2023a,b). These models are typically regarded as Large Language Models (LLMs) because of their massive number of parameters (Zhao et al., 2023). This subsection discusses the architecture and scaling of LLMs, their training objectives, and the emergent abilities they exhibit.

Architecture and Scaling. Similar to pre-trained language models (PLMs) (Radford et al., 2018; Devlin et al., 2019), LLMs primarily adopt the Transformer architecture (Vaswani et al., 2017) as their backbone, consisting of stacked multi-head attention and feed-forward layers. Unlike PLMs, most currently released LLMs are built upon decoder-only architectures for training efficiency and few-shot capabilities. This approach also shows potential when the number of parameters increases (Zhang et al., 2022). Recent studies have investigated the quantitative relationship between model capacity, the amount of training data, and model size, known as the scaling law (Kaplan et al., 2020; Hoffmann et al., 2022).

Training Objectives. Previous studies on PLMs proposed various language modeling tasks, for example masked language modeling for BERT (Devlin et al., 2019) and de-noising language modeling for BART (Lewis et al., 2020) and T5 (Raffel et al., 2020). However, current LLMs typically use standard causal language modeling as their training objective, which aims to predict the next token based on the preceding tokens in a sequence. This training objective is well-suited for decoder-only architectures.
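As a rough illustration of the causal language modeling objective described above, the following minimal sketch computes the average negative log-likelihood of each token given its preceding tokens. The function `causal_lm_loss` and the toy `uniform_model` are hypothetical stand-ins introduced here for illustration; they are not part of the survey or of any library.

```python
import math


def causal_lm_loss(token_ids, next_token_probs):
    """Average negative log-likelihood of each token given its prefix.

    token_ids: the observed token sequence, e.g. [2, 0, 1].
    next_token_probs: hypothetical stand-in for a language model; it maps a
        prefix (tuple of token ids) to a probability list over the vocabulary.
    """
    total = 0.0
    for i, target in enumerate(token_ids):
        prefix = tuple(token_ids[:i])        # tokens strictly before position i
        probs = next_token_probs(prefix)     # p(. | y_<i)
        total += -math.log(probs[target])    # penalize low probability on the true token
    return total / len(token_ids)


def uniform_model(prefix, vocab_size=4):
    # Toy "model" (an assumption for this sketch): ignores the prefix entirely.
    return [1.0 / vocab_size] * vocab_size


loss = causal_lm_loss([2, 0, 1], uniform_model)
```

With a uniform toy model over four tokens, every step contributes the same penalty, so the loss equals the logarithm of the vocabulary size; a trained model would lower this value by assigning more probability to the observed next tokens.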

Beyond the pre-training objective, recent studies have aimed to model human preferences to better align LLMs with human expectations. For example, the well-known InstructGPT (Ouyang et al., 2022) introduces reinforcement learning from human feedback (RLHF), which uses preference rewards as an additional training objective. Although RLHF is effective at making LLMs more helpful to users, it inevitably incurs an alignment tax, which refers to performance degradation after RLHF. Recent research has explored various techniques to mitigate alignment tax issues (Lin et al., 2023; Lu et al., 2024b; Fu et al., 2024b).

2

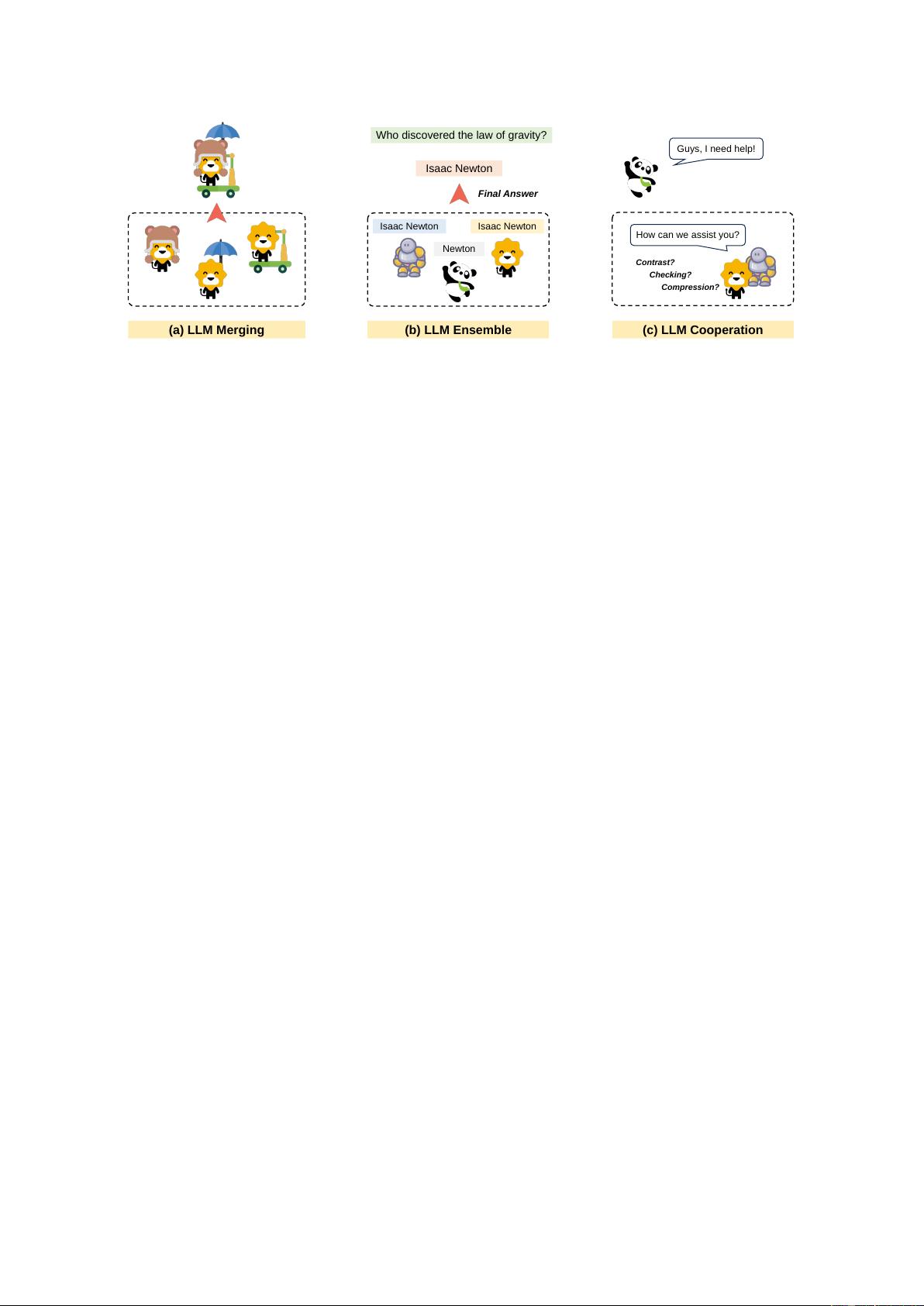

(a) LLM Merging (b) LLM Ensemble (c) LLM Cooperation

Isaac Newton

Newton

Isaac Newton

Isaac Newton

Final Answer

Guys, I need help!

How can we assist you?

Who discovered the law of gravity?

Contrast?

Compression?

Checking?

Figure 2: The illustration of different collaboration strategies, with each animal in the figures representing a different

LLM.

Emergent Abilities. The fundamental capability of language models is text generation, where tokens are auto-regressively generated based on preceding tokens using greedy search or nucleus sampling (Holtzman et al., 2020a):

$$y_i \sim p(y_i \mid y_{<i}) \tag{1}$$
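The auto-regressive generation loop of Equation (1) can be sketched as follows, with greedy search and nucleus (top-p) sampling as two interchangeable token-picking rules. This is a minimal sketch under stated assumptions: `toy_model` is a hypothetical three-token "language model" defined only for illustration, and the helper names are not taken from the survey.

```python
import random


def greedy_pick(probs):
    """Greedy search: choose the single most probable next token."""
    return max(range(len(probs)), key=lambda t: probs[t])


def nucleus_pick(probs, top_p=0.9, rng=random):
    """Nucleus sampling: sample from the smallest set of most probable
    tokens whose cumulative probability reaches top_p."""
    ranked = sorted(range(len(probs)), key=lambda t: probs[t], reverse=True)
    nucleus, mass = [], 0.0
    for t in ranked:
        nucleus.append(t)
        mass += probs[t]
        if mass >= top_p:
            break
    weights = [probs[t] for t in nucleus]  # choices() renormalizes the weights
    return rng.choices(nucleus, weights=weights, k=1)[0]


def generate(next_token_probs, pick, max_new_tokens, prefix=()):
    """Repeatedly draw y_i from p(y_i | y_<i) and append it to the sequence."""
    tokens = list(prefix)
    for _ in range(max_new_tokens):
        tokens.append(pick(next_token_probs(tuple(tokens))))
    return tokens


def toy_model(prefix):
    # Hypothetical model: the distribution depends only on the last token.
    table = {
        None: [0.7, 0.2, 0.1],
        0: [0.1, 0.8, 0.1],
        1: [0.05, 0.05, 0.9],
        2: [0.6, 0.3, 0.1],
    }
    return table[prefix[-1] if prefix else None]


greedy_tokens = generate(toy_model, greedy_pick, max_new_tokens=4)
sampled_tokens = generate(toy_model, nucleus_pick, max_new_tokens=4)
```

Greedy search is deterministic for a fixed model, whereas nucleus sampling trades determinism for diversity by sampling only within the high-probability head of the distribution.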

Interestingly, LLMs can not only generate realistic text but also perform specific tasks when provided with task-specific prompts, without requiring fine-tuning on particular downstream tasks (Brown et al., 2020). This phenomenon is one of the most important differences between LLMs and previous PLMs. Wei et al. (2022b) define the emergent ability as “an ability that is not present in smaller models but is present in larger models.” Among these emergent abilities, in-context learning (ICL) (Brown et al., 2020; Dong et al., 2022) and instruction following are commonly used and significantly enhance the ability of LLMs to process various tasks.

ICL helps LLMs understand tasks by using several task examples as demonstrations. When provided with these demonstrations as prompts, LLMs can automatically generate reasonable output for the given test example, which can be formalized as:

$$p(y \mid x) = p\big(y \mid x, \text{demonstration}(\{(x_i, y_i)\}_{i=1}^{k})\big) \tag{2}$$
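In practice, the demonstration term in Equation (2) is usually realized by concatenating the k input-output pairs in front of the test input. The sketch below shows one possible prompt layout; the `Input:`/`Output:` template and the function name `build_icl_prompt` are assumptions chosen for illustration, not a format prescribed by the survey.

```python
def build_icl_prompt(demonstrations, test_input):
    """Format k (x_i, y_i) demonstrations followed by the test input x.

    The model is expected to continue the text after the final "Output:",
    which corresponds to generating y conditioned on x and the demonstrations.
    """
    blocks = [f"Input: {x}\nOutput: {y}" for x, y in demonstrations]
    blocks.append(f"Input: {test_input}\nOutput:")  # left open for the model
    return "\n\n".join(blocks)


# Hypothetical sentiment-classification demonstrations (k = 2).
demos = [("great movie", "positive"), ("terrible plot", "negative")]
prompt = build_icl_prompt(demos, "I loved it")
```

No parameters are updated here: the task is conveyed entirely through the prompt, which is what distinguishes in-context learning from fine-tuning.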

Instruction-following ability typically emerges in LLMs that have been fine-tuned on examples formatted with instructions on multiple tasks. The generation process can be formalized as:

$$p(y \mid x) = p(y \mid x, I) \tag{3}$$

where $I$ refers to the given instruction for the current example $x$. The instruction tuning technique (Sanh et al., 2021; Ouyang et al., 2022; Wei et al., 2022a) can enhance the generalization capabilities of LLMs, enabling them to perform well with instructions on a variety of tasks, including unseen ones (Thoppilan et al., 2022).

2.2 Collaboration for LLMs

For previous task-dependent NLP models, collaboration strategies typically aimed to improve performance on specific tasks (Jia et al., 2023). Recently, LLMs have revolutionized NLP by demonstrating remarkable versatility across a wide range of tasks. This change has also moved the focus of collaboration strategies for LLMs toward enhancing versatility and achieving more general objectives. Consequently, some recently proposed collaboration strategies have become more flexible and tailored specifically for LLMs.

The Necessity of LLM Collaboration Although

almost all LLMs demonstrate strong versatility

across various tasks through in-context learning

and instruction following, different LLMs still have

distinct strengths and weaknesses (Jiang et al.,

2023a).

Differences in training corpora and model ar-

chitectures among various LLM families—such

as LLaMA, GLM (Zeng et al., 2023), and QWen

(Bai et al., 2023)—result in significant variations

in their capabilities. Even within the same fam-

ily, fine-tuning on specific corpora (e.g., mathemat-

ics (Azerbayev et al., 2023), code (Roziere et al.,

2023), or medical domains (Wu et al., 2024)) can

lead to noticeable performance differences. Effec-

tive collaboration among these LLMs can unlock

3

LLM Collaboration

Cooperation (§5)

Federated Co-

operation (§5.4)

Federated

Prompt Engi-

neering (§5.4.2)

e.g Zhang et al. (2024b), Li

et al. (2024a), Guo et al. (2022)

Federated Train-

ing (§5.4.1)

e.g. Fan et al. (2024), Ye et al.

(2024), Wang et al. (2024d)

Compensatory

Cooperation (§5.3)

Retriever (§5.3.2)

e.g. Ma et al. (2023b), Mao et al.

(2024), Li et al. (2024d), Su et al. (2024)

Detector (§5.3.1)

e.g. Pan et al. (2023), Huo et al. (2023),

Chen et al. (2023b), Wang et al. (2024c)

Knowledge

Transfer (§5.2)

Supplying New

Knowledge (§5.2.3)

e.g. Ormazabal et al. (2023), (Liu et al., 2024a),

Zhao et al. (2024b), Zhou et al. (2024b)

Strengthening

Correct Knowl-

edge (§5.2.2)

e.g. Tu et al. (2023), Lu et al.

(2024a), Deng and Raffel (2023)

Mitigating

Incorrect Knowl-

edge (§5.2.1)

e.g. Li et al. (2023b), Liu et al. (2021),

O’Brien and Lewis (2023), Shi et al. (2024)

Efficient Com-

putation (§5.1)

Speculative

Decoding (§5.1.2)

e.g. Stern et al. (2018), Leviathan et al.

(2023), Ou et al. (2024), Huang et al. (2024a)

Input Compres-

sion (§5.1.1)

e.g. LLMLINGUA (Jiang et al., 2023b), Li

et al. (2024b), Liu et al. (2023), (Gao, 2024b)

Ensemble (§4)

LLM Ensemble

Application (§4.2)

e.g. Gundabathula and Kolar (2024), Barabucci et al.

(2024), Coste et al. (2024), Ahmed et al. (2024)

LLM Ensemble

Methodology (§4.1)

After Infer-

ence (§4.1.3)

e.g. Chen et al. (2023d), Madaan et al.

(2023), Yue et al. (2024), Jiang et al. (2023a)

During Infer-

ence (§4.1.2)

e.g. Hoang et al. (2023), Li et al. (2024c),

Xu et al. (2024b), Huang et al. (2024c)

Before Infer-

ence (§4.1.1)

e.g. Shnitzer et al. (2023), Lu et al. (2023),

Srivatsa et al. (2024), Hu et al. (2024)

Merging (§3)

Merging for

Enhancing Multi-

Task Capability

(M-MTC) (§3.2)

Methods based

on Incremental

Training (§3.2.3)

e.g. Tang et al. (2023), Yang et al. (2024a)

Methods based

on Task Prop-

erty (§3.2.2)

e.g. Ilharco et al. (2023), Yadav et al. (2023), Yang

et al. (2023), Zhou et al. (2024a), Yu et al. (2023)

Methods based

on Weighted

Average (§3.2.1)

e.g. Jin et al. (2022), Daheim

et al. (2023), Nathan et al. (2024)

Merging for

Relatively Optimal

Solution (M-

ROS) (§3.1)

Adaptation to

LLMs (§3.1.2)

e.g. Wan et al. (2024b), Liu et al. (2024b), Kim

et al. (2024), Fu et al. (2024a), Lin et al. (2023)

Basic M-ROS

Methodolo-

gies (§3.1.1)

e.g. Soup (Wortsman et al., 2022), Rame et al.

(2022), Wan et al. (2024b), Liu et al. (2024b)

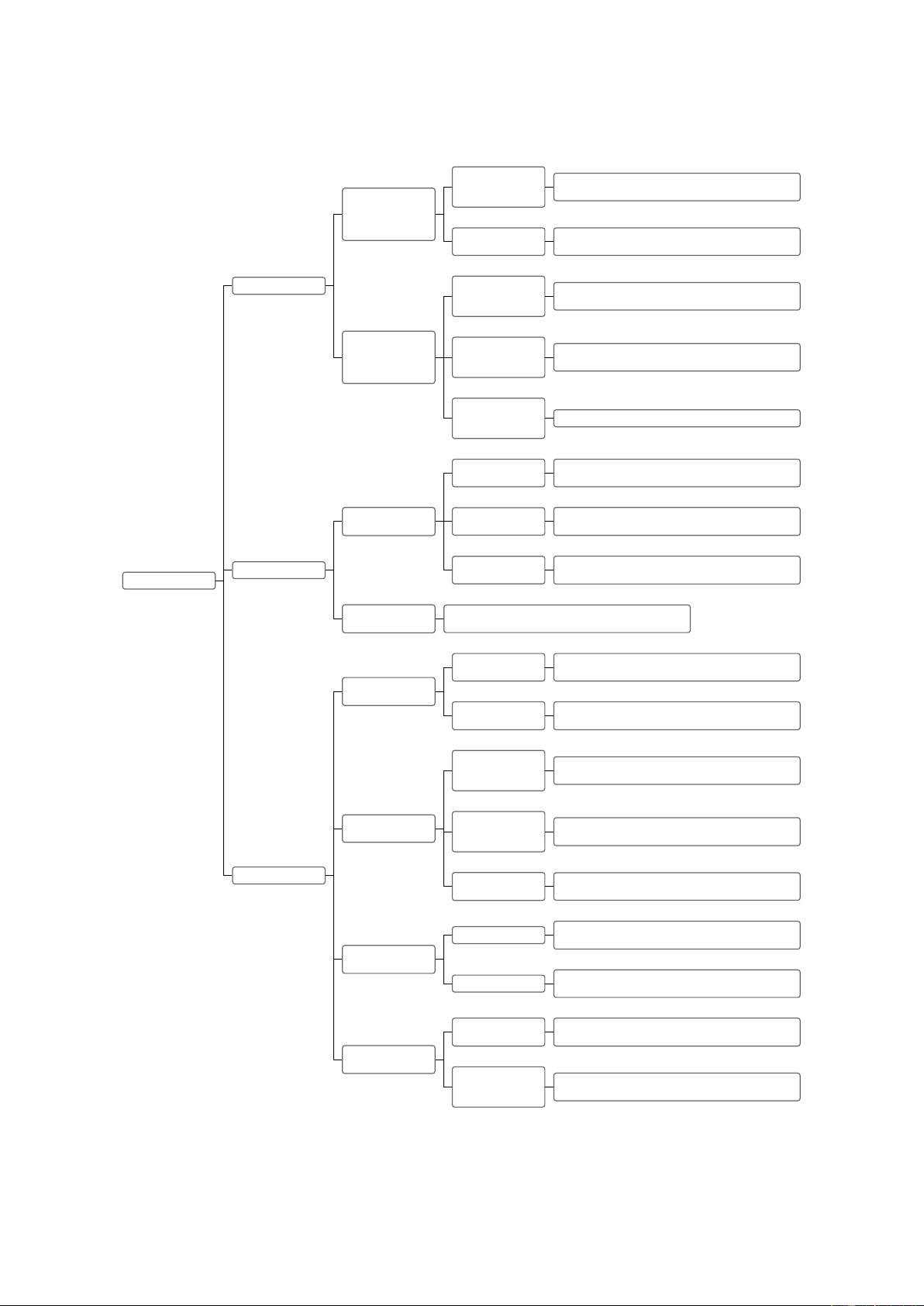

Figure 3: The primary categorization of LLM collaboration in this survey.

4

their full potential, significantly enhancing their

overall performance and versatility.

Furthermore, LLMs inevitably suffer from computational inefficiencies (Zhou et al., 2024c), hallucinations (Rawte et al., 2023; Ji et al., 2023; Huang et al., 2023), and privacy leaks (Fan et al., 2024). Recent studies explore collaboration strategies between LLMs, which provide potential solutions to mitigate these issues and compensate for their shortcomings.

The Category of LLM Collaboration Methods. Collaboration between LLMs refers to the process where multiple LLMs work together, leveraging their individual strengths and capabilities to achieve a shared objective. In this survey, we categorize LLM collaboration methods into three aspects: merging, ensemble, and cooperation. As shown in Figure 2,

• Merging involves integrating multiple LLMs into a unified, stronger one, primarily through arithmetic operations in the model parameter space.

• Ensemble combines the outputs of different models to obtain coherent results. Recent studies have proposed various ensemble methods tailored for LLMs.

• Cooperation is a relatively broad concept. This survey focuses on cooperation methods that leverage the diverse capabilities of different LLMs to accomplish specific objectives, such as efficient computation or knowledge transfer.
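To make the ensemble idea concrete, the sketch below combines the answers of several models by simple majority voting, using the three answers shown in Figure 2. Majority voting is only one elementary way to combine outputs and is used here as an illustrative assumption; it is not presented as the specific ensemble method of any work cited in this survey, and the function name `majority_vote` is hypothetical.

```python
from collections import Counter


def majority_vote(answers):
    """Return the answer produced by the largest number of models.

    answers: one output string per model. Ties are resolved in favor of the
    answer that appears first, following Counter's insertion order.
    """
    counts = Counter(answers)
    return counts.most_common(1)[0][0]


# Outputs of three hypothetical LLMs for "Who discovered the law of gravity?"
final_answer = majority_vote(["Isaac Newton", "Newton", "Isaac Newton"])
```

Note that this sketch compares raw strings, so "Newton" and "Isaac Newton" count as different answers; a practical system would need some form of answer normalization or a learned combiner.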

It should be noted that as we move from merging to ensemble to cooperation, the requirements for LLMs gradually relax, making the proposed methods increasingly flexible. Specifically, merging methods are effective only when the LLMs share a compatible parameter space, allowing seamless integration. Ensemble methods require LLMs to have diverse yet comparable abilities; without this balance, the ensemble may be less effective. In contrast, cooperation methods are more flexible, focusing on leveraging LLMs with various capabilities that are specially designed to achieve particular objectives.

For each category, we further classify specific methods based on their focus or stages of implementation. The comprehensive categorization is shown in Figure 3.

3 Merging

Single models have inherent limitations, such as potentially missing important information (Sagi and Rokach, 2018), being prone to getting stuck in local optima, or lacking multi-task capabilities. To address these limitations, researchers have explored model merging methods, which combine multiple models in the parameter space to create a unified, stronger model. Model merging has made significant progress in recent years, with various techniques cataloged in existing surveys (Li et al., 2023a). In the era of LLMs, model merging has become an important solution for model collaboration, typically relying on basic merging methods that have proven effective. This section focuses on the merging techniques that are proven to be effective for LLMs$^{2}$.

Current studies on model merging typically focus on two key issues: merging to approach a relatively optimal solution (M-ROS) and merging to enhance multi-task capability (M-MTC). Research on M-ROS is based on the finding that gradient-optimized solutions often converge near the boundary of a wide flat region rather than at the central point (Izmailov et al., 2018). Model merging offers a way to approach this relatively optimal point, thereby yielding a stronger model. M-MTC, on the other hand, aims to use model merging techniques to enrich a single model with capabilities across multiple tasks (Ilharco et al., 2023; Yadav et al., 2023). In the following subsections, we introduce the techniques for each objective and their application to LLMs.

It is important to note that for both M-ROS and M-MTC, current model merging methods are applicable only to models with the same architecture and parameters within the same space. Therefore, most candidate models for merging should be trained with identical initialization. For instance, the candidate models $M = \{M_1, M_2, \cdots, M_k\}$ should be fine-tuned from the same pre-trained model $M_0$. This requirement ensures compatibility and coherence among the model parameters, promoting successful merging. Unfortunately, for models with incompatible parameters, such as LLaMA and QWen, current merging techniques are ineffective.
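The compatibility requirement can be illustrated with the simplest arithmetic operation in parameter space, a uniform average of the candidate models' parameters. The sketch below is a minimal illustration under stated assumptions: each model is represented as a plain dictionary from parameter names to flat lists of floats, and `average_parameters` is a hypothetical helper, not an API of any library or the exact procedure of any cited method.

```python
def average_parameters(models):
    """Uniformly average k models that share one parameter space.

    models: list of dicts mapping parameter name -> flat list of floats.
    Raises ValueError when names or sizes differ, mirroring the requirement
    that merged models have the same architecture and parameter layout.
    """
    if not models:
        raise ValueError("need at least one model")
    reference = models[0]
    for m in models[1:]:
        if m.keys() != reference.keys():
            raise ValueError("parameter names differ; models are not mergeable")
        for name in reference:
            if len(m[name]) != len(reference[name]):
                raise ValueError(f"size mismatch for parameter {name!r}")
    k = len(models)
    return {
        name: [sum(m[name][j] for m in models) / k
               for j in range(len(reference[name]))]
        for name in reference
    }


# Two toy "fine-tuned" models, assumed to derive from the same M_0.
m1 = {"w": [1.0, 2.0], "b": [0.0]}
m2 = {"w": [3.0, 4.0], "b": [1.0]}
merged = average_parameters([m1, m2])
```

Matching names and sizes is only a necessary condition: models with identical shapes but different initializations can still occupy incompatible regions of parameter space, which is why the candidates are assumed to share the same pre-trained starting point.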

$^{2}$ Some advanced methods that merge after neuron alignment, such as OT Fusion (Singh and Jaggi, 2020), Re-Basin techniques (Peña et al., 2023; Ainsworth et al., 2023), and REPAIR (Jordan et al., 2023), have not been widely explored for LLMs. We leave the implementation of these techniques on LLMs for future work.
