
Research on the Impact of Different Data and Methods on Performance in Large Language Model Finetuning


Uploaded 2024-12-02 14:54:09 · PDF, 1.45 MB

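Summary: This paper systematically studies how model size, pretraining data size, finetuning data size, and parameter-efficient tuning (PET) methods affect performance when finetuning large language models (LLMs). It finds that increasing LLM model size improves finetuning performance more than increasing pretraining data; the effectiveness of PET methods depends strongly on the task and the amount of finetuning data, and in some settings adding PET parameters even performs worse. The paper proposes a multiplicative joint scaling law to describe the relationship among these factors, providing a basis for understanding and choosing LLM finetuning methods. Intended audience: NLP researchers, machine learning engineers, data scientists. Use cases and goals: (1) understanding how different factors affect LLM finetuning performance; (2) optimizing the choice of finetuning method, especially when data is limited; (3) exploring how best to apply LLMs to different tasks. Other notes: by comparing many experimental conditions, the paper reveals several important regularities in LLM finetuning that can guide model selection and optimization in practice, and it offers a valuable reference for future research.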


Published as a conference paper at ICLR 2024

WHEN SCALING MEETS LLM FINETUNING: THE EFFECT OF DATA, MODEL AND FINETUNING METHOD

Biao Zhang†, Zhongtao Liu⋄, Colin Cherry⋄, Orhan Firat†

†Google DeepMind  ⋄Google Research

{biaojiaxing,zhongtao,colincherry,orhanf}@google.com

ABSTRACT

While large language models (LLMs) often adopt finetuning to unlock their capabilities for downstream applications, our understanding of the inductive biases (especially the scaling properties) of different finetuning methods is still limited. To fill this gap, we conduct systematic experiments studying whether and how different scaling factors, including LLM model size, pretraining data size, new finetuning parameter size and finetuning data size, affect finetuning performance. We consider two types of finetuning, full-model tuning (FMT) and parameter-efficient tuning (PET, including prompt tuning and LoRA), and explore their scaling behaviors in the data-limited regime where the LLM model size substantially outweighs the finetuning data size. Based on two sets of pretrained bilingual LLMs from 1B to 16B parameters and experiments on bilingual machine translation and multilingual summarization benchmarks, we find that 1) LLM finetuning follows a power-based multiplicative joint scaling law between finetuning data size and each other scaling factor; 2) LLM finetuning benefits more from LLM model scaling than from pretraining data scaling, and PET parameter scaling is generally ineffective; and 3) the optimal finetuning method is highly task- and finetuning-data-dependent. We hope our findings shed light on understanding, selecting and developing LLM finetuning methods.

1 INTRODUCTION

Leveraging and transferring the knowledge encoded in large-scale pretrained models for downstream applications has become the standard paradigm underlying recent successes in various domains (Devlin et al., 2019; Lewis et al., 2020; Raffel et al., 2020; Dosovitskiy et al., 2021; Baevski et al., 2020), with a remarkable milestone set by large language models (LLMs) that have yielded ground-breaking performance across language tasks (Brown et al., 2020; Zhang et al., 2022b; Scao et al., 2022; Touvron et al., 2023). Advanced LLMs, such as GPT-4 (OpenAI, 2023) and PaLM 2 (Anil et al., 2023), often show emergent capabilities and allow for in-context learning that can use just a few demonstration examples to perform complex reasoning and generation tasks (Wei et al., 2022; Zhang et al., 2023; Fu et al., 2023; Shen et al., 2023). Still, LLM finetuning is required and widely adopted to unlock new and robust capabilities for creative tasks, to get the most out of focused downstream tasks, and to align LLM values with human preferences (Ouyang et al., 2022; Yang et al., 2023; Gong et al., 2023; Schick et al., 2023). This matters even more in traditional industrial applications, where large-scale annotated task-specific data have accumulated over the years.

There are many potential factors affecting the performance of LLM finetuning, including but not limited to 1) pretraining conditions, such as LLM model size and pretraining data size; and 2) finetuning conditions, such as the downstream task, finetuning data size and finetuning method. Intuitively, pretraining controls the quality of the representations and knowledge learned by pretrained LLMs, and finetuning affects the degree of transfer to the downstream task. While previous studies have thoroughly explored scaling for LLM pretraining or training from scratch (Kaplan et al., 2020; Hoffmann et al., 2022) and developed advanced efficient finetuning methods (Hu et al., 2021; He et al., 2022), the question of whether and how LLM finetuning scales with the above factors has unfortunately received very little attention (Hernandez et al., 2021). This question is the focus of our study.

arXiv:2402.17193v1 [cs.CL] 27 Feb 2024

Note that, apart from improving finetuning performance, studying the scaling of LLM finetuning could help us understand the impact of different pretraining factors from the perspective of finetuning, which may offer insights for LLM pretraining.

In this paper, we address the above question by systematically studying the scaling of two popular ways of LLM finetuning: full-model tuning (FMT), which updates all LLM parameters, and parameter-efficient tuning (PET), which only optimizes a small number of (newly added) parameters, such as prompt tuning (Lester et al., 2021, Prompt) and low-rank adaptation (Hu et al., 2021, LoRA). We first examine finetuning data scaling (Hernandez et al., 2021), on top of which we further explore its scaling relationship with other scaling factors, including LLM model size, pretraining data size, and PET parameter size. We focus on the data-limited regime, where the finetuning data is much smaller than the LLM model, which better reflects the situation in the era of LLMs. For experiments, we pretrained two sets of bilingual LLMs (English&German, English&Chinese) with model sizes ranging from 1B to 16B, and performed a large-scale study on WMT machine translation (English-German, English-Chinese) and multilingual summarization (English, German, French and Spanish) tasks with up to 20M finetuning examples. Our main findings are summarized below:

• We propose the following multiplicative joint scaling law for LLM finetuning:

$$\hat{L}(X, D_f) = A \cdot \frac{1}{X^{\alpha}} \cdot \frac{1}{D_f^{\beta}} + E, \qquad (1)$$

where $\{A, E, \alpha, \beta\}$ are data-specific parameters to be fitted, $D_f$ denotes finetuning data size, and $X$ refers to each of the other scaling factors. We show empirical evidence that this joint law generalizes to different settings.

• Scaling LLM model size benefits LLM finetuning more than scaling pretraining data.

• Increasing PET parameters does not scale well for LoRA and Prompt, although LoRA shows better training stability.

• The scaling property of LLM finetuning is highly task- and data-dependent, making the selection of the optimal finetuning method for a downstream task non-trivial.

• LLM-based finetuning could encourage zero-shot generalization to relevant tasks, and in this respect PET performs much better than FMT.
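As a worked reading of Eq. (1) (our own rearrangement, not a result stated in the paper), taking logarithms of the reducible loss shows why the law is "power-based multiplicative": it is linear in $\log X$ and $\log D_f$, and the relative effect of more finetuning data does not depend on $X$.

$$\log\big(\hat{L}(X, D_f) - E\big) = \log A - \alpha \log X - \beta \log D_f,$$

$$\frac{\hat{L}(X, 2D_f) - E}{\hat{L}(X, D_f) - E} = 2^{-\beta}.$$

For example, with a hypothetical $\beta = 0.5$, doubling the finetuning data shrinks the reducible loss by a factor of about 0.71 at every value of $X$, while the absolute reduction, $(1 - 2^{-\beta})\,A\,X^{-\alpha} D_f^{-\beta}$, still scales with $X^{-\alpha}$.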

2 SETUP

Downstream Tasks We consider machine translation and multilingual summarization as the downstream tasks for finetuning, because 1) these tasks require cross-lingual understanding and generation, which makes them complex and challenging; and 2) they are well established in NLP, with abundant finetuning corpora available. Specifically, we adopt WMT14 English-German (En-De) and WMT19 English-Chinese (En-Zh) (Kocmi et al., 2022) for translation. For summarization, we combine the De, Spanish (Es) and French (Fr) portions of the multilingual summarization dataset (Scialom et al., 2020) with CNN/Daily-Mail (Hermann et al., 2015, En), and denote the result as MLSum. Details about each task are listed in Table 1a. Note that for MLSum, we directly concatenate the datasets of different languages for training and evaluation, where each article is prepended with a prompt indicating its language: “Summarize the following document in {lang}:”.
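The MLSum input construction described above can be sketched as follows. This is a minimal sketch, not the authors' pipeline: the helper names `make_mlsum_example` and `build_mlsum` are hypothetical, and using full language names for `{lang}` and a single space between prompt and article are assumptions.

```python
# Hypothetical helpers; prompt/article separator and language naming are assumptions.
PROMPT_TEMPLATE = "Summarize the following document in {lang}:"


def make_mlsum_example(article: str, summary: str, lang: str) -> tuple[str, str]:
    """Prepend the language prompt to the article; the summary stays the target."""
    source = PROMPT_TEMPLATE.format(lang=lang) + " " + article
    return source, summary


def build_mlsum(datasets: dict[str, list[tuple[str, str]]]) -> list[tuple[str, str]]:
    """Concatenate per-language (article, summary) datasets into one training set."""
    merged = []
    for lang, pairs in datasets.items():
        merged.extend(make_mlsum_example(article, summary, lang) for article, summary in pairs)
    return merged
```

Because every source carries its own language prompt, a single model can be trained on the concatenated set and still be told which language to summarize in.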

LLMs and Pretraining We adopt exactly the setup of Garcia et al. (2023) for LLM pretraining. The model is a decoder-only Transformer with multi-query attention (Chowdhery et al., 2022), trained with the modified UL2 objective (Tay et al., 2022). Given the targeted downstream tasks, and to ensure that our study generalizes, we pretrained two sets of bilingual LLMs, i.e. an En-De LLM and an En-Zh LLM. The pretraining data is a mix of monolingual data from the two languages: we use En/De (En/Zh) data with about 280B (206B) tokens to pretrain the En-De (En-Zh) LLM. We train LLMs with parameter sizes from 1B to 16B by varying model configurations as in Table 3 and keep all other settings intact. All LLMs are optimized using Adafactor (Shazeer & Stern, 2018) for one training epoch under a cosine learning rate decay schedule (from 0.01 to 0.001). We refer readers to Garcia et al. (2023) for more details about the pretraining.
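The cosine decay from 0.01 to 0.001 can be written out as below. This sketch assumes the textbook half-cosine form evaluated per step with no warmup; the exact schedule of Garcia et al. (2023), including any warmup, is not given here, and `cosine_lr` is a hypothetical helper.

```python
import math


def cosine_lr(step: int, total_steps: int, lr_max: float = 0.01, lr_min: float = 0.001) -> float:
    """Half-cosine decay from lr_max at step 0 to lr_min at total_steps (no warmup assumed)."""
    progress = min(step, total_steps) / total_steps  # clamp so the rate never drops below lr_min
    return lr_min + 0.5 * (lr_max - lr_min) * (1.0 + math.cos(math.pi * progress))
```

Halfway through the epoch the rate is the midpoint of the two endpoints, 0.0055, and it flattens out near both ends of training.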


Table 1: Setups for finetuning. “K/B/M”: thousand/billion/million; “#Train”: the number of training examples; “Length”: maximum source/target sequence length used for truncation during training. Note that pretraining data sizes are token counts. Bold numbers denote the held-out settings we leave for scaling law verification.

(a) Details for finetuning tasks.

| Task | #Train | Length | Dev | Test | Zero-Shot | Base LLM |
|---|---|---|---|---|---|---|
| WMT14 En-De | 4.5M | 256/256 | newstest2013 | newstest2020, 2021, 2022 | Flores200 | En-De LLM |
| WMT19 En-Zh | 25M | 256/256 | newsdev2017 | newstest2020, 2021, 2022 | Flores200 | En-Zh LLM |
| MLSum | 1.1M | 512/256 | official dev sets | official test sets | - | En-De LLM |

(b) Scaling settings for different factors.

| Factor | Setting | Values |
|---|---|---|
| LLM Model Sizes | - | 1B, 2B, 4B, 8B, 16B |
| Pretraining Data Sizes | En-De LLM | 84B, 126B, 167B, 209B, 283B |
| | En-Zh LLM | 84B, 105B, 126B, 147B, 167B, 206B |
| PET Parameter Sizes | Prompt Length | 50, 100, 150, 200, 300, 400, 600 |
| | LoRA Rank | 4, 8, 16, 32, 48, 64, 128 |
| Finetuning Data Sizes | Prompt & LoRA | 8K, 10K, 20K, 30K, 40K, 50K, 60K, 70K, 80K, 90K, 100K |
| | FMT (WMT En-De) | 100K, 500K, 1M, 1.5M, 2M, 2.5M, 3M, 3.5M, 4M, 4.5M |
| | FMT (WMT En-Zh) | 1M, 2M, 3M, 4M, 5M, 10M, 15M, 20M, 25M |
| | FMT (MLSum) | 100K, 200K, 300K, 400K, 500K, 600K, 700K, 800K, 900K |

Finetuning Settings We mainly study the scaling of the following three finetuning methods:

• Full-Model Tuning (FMT): This is the vanilla way of finetuning, which simply optimizes all LLM parameters;

• Prompt Tuning (Prompt): Prompt prepends the input embedding $X \in \mathbb{R}^{|X| \times d}$ with a tunable “soft prompt” $P \in \mathbb{R}^{|P| \times d}$, and feeds their concatenation $[P; X] \in \mathbb{R}^{(|P|+|X|) \times d}$ to the LLM. $|\cdot|$ and $d$ denote sequence length and model dimension, respectively. During finetuning, only the prompt parameter $P$ is optimized. We initialize $P$ from sampled vocabulary, and set the prompt length $|P|$ to 100 by default (Lester et al., 2021).

• Low-Rank Adaptation (LoRA): Rather than modifying LLM inputs, LoRA updates pretrained model weights $W \in \mathbb{R}^{m \times n}$ with trainable pairs of rank decomposition matrices $B \in \mathbb{R}^{m \times r}$ and $A \in \mathbb{R}^{r \times n}$, and uses $W + BA$ instead during finetuning. Here $m$ and $n$ are the weight dimensions and $r$ is the LoRA rank. Only the $B$s and $A$s are optimized. We apply LoRA to both the attention and feed-forward layers in LLMs, and set the rank $r$ to 4 by default (Hu et al., 2021).
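The tensor shapes of the two PET methods can be sketched with NumPy as below. This is an illustrative sketch, not the paper's implementation: `prompt_tuning_input` and `LoRALinear` are hypothetical names, and the initialization (random A, zero B, so that W + BA equals W when finetuning starts) follows the common LoRA recipe of Hu et al. (2021) rather than a detail stated in this paper.

```python
import numpy as np


def prompt_tuning_input(x_emb: np.ndarray, prompt: np.ndarray) -> np.ndarray:
    """Prepend a soft prompt P (|P| x d) to input embeddings X (|X| x d)."""
    if x_emb.shape[1] != prompt.shape[1]:
        raise ValueError("prompt and input embeddings must share model dimension d")
    return np.concatenate([prompt, x_emb], axis=0)  # shape (|P| + |X|, d)


class LoRALinear:
    """Frozen weight W (m x n) plus a trainable rank-r update B @ A."""

    def __init__(self, w: np.ndarray, r: int, rng=None):
        if rng is None:
            rng = np.random.default_rng(0)
        m, n = w.shape
        self.w = w                                   # frozen pretrained weight
        self.a = rng.normal(0.0, 0.01, size=(r, n))  # trainable A (r x n), random init (assumed)
        self.b = np.zeros((m, r))                    # trainable B (m x r), zero init (assumed)

    def effective_weight(self) -> np.ndarray:
        """W + BA, the weight used in place of W during finetuning."""
        return self.w + self.b @ self.a

    def forward(self, x: np.ndarray) -> np.ndarray:
        """Apply the adapted layer to a batch x of shape (batch, n)."""
        return x @ self.effective_weight().T         # shape (batch, m)

    def num_trainable(self) -> int:
        """Only A and B are optimized: r * (m + n) parameters."""
        return self.a.size + self.b.size
```

With m = 16, n = 8 and r = 4, the LoRA layer trains r(m + n) = 96 parameters instead of mn = 128; the relative savings grow quickly with the much larger m and n of real LLM layers.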

We explore four scaling factors, summarized in Table 1b. Except for LLM model scaling, all experiments are based on the corresponding 1B LLM. For pretraining data scaling, we adopt intermediate pretrained checkpoints as a proxy due to computational budget constraints, while acknowledging its sub-optimality. Details of the optimization are given in the Appendix.

Evaluation We use the best checkpoint based on token-level perplexity (PPL) on the dev set for evaluation. For scaling laws, we report PPL on test sets; for general generation, we use greedy decoding and report BLEURT (Sellam et al., 2020) and RougeL (Lin, 2004) for translation and summarization, respectively. For zero-shot evaluation, we adopt Flores200 (NLLB Team, 2022) and evaluate on {Fr, De, Hindi (Hi), Turkish (Tr), Polish (Po)→Zh} and {Fr, Zh, Hi, Tr, Po→De} for En-Zh and En-De translation, respectively. For scaling law evaluation, we split the empirical data points into two sets, a fitting set and a held-out set, where the former is used to fit the scaling parameters and the latter for evaluation. We report the mean absolute deviation on the held-out set. To reduce noise, we perform three runs, each with a different random subset of the finetuning data, and report average performance. When sampling for MLSum, we keep the mixing ratio over different languages fixed.
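The subsampling and held-out evaluation steps can be sketched as follows. The sketch assumes that keeping the mixing ratio fixed means each language keeps its share of the full pool, with per-language quotas rounded to integers (so the subset size may differ from n by a few examples); `subsample_fixed_mix` and `mean_absolute_deviation` are hypothetical helpers, not the authors' code.

```python
import random
from collections import defaultdict


def subsample_fixed_mix(examples, n, seed):
    """Randomly draw about n examples while keeping each language's share fixed.

    examples: list of (lang, example) pairs from the full finetuning pool.
    """
    if not 0 < n <= len(examples):
        raise ValueError("n must be between 1 and the pool size")
    by_lang = defaultdict(list)
    for lang, ex in examples:
        by_lang[lang].append(ex)
    rng = random.Random(seed)  # a different seed per run gives a different random subset
    subset = []
    for lang, pool in by_lang.items():
        quota = round(n * len(pool) / len(examples))  # per-language quota (rounding assumed)
        subset.extend((lang, ex) for ex in rng.sample(pool, quota))
    return subset


def mean_absolute_deviation(predicted, observed):
    """Mean |prediction - observation| over the held-out data points."""
    if len(predicted) != len(observed) or not observed:
        raise ValueError("need equally sized, non-empty sequences")
    return sum(abs(p - o) for p, o in zip(predicted, observed)) / len(observed)
```

Calling `subsample_fixed_mix` with three different seeds yields the three random subsets whose results are averaged; `mean_absolute_deviation` then compares a fitted law's predictions with the held-out measurements.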

3 WHY MULTIPLICATIVE JOINT SCALING LAW?

We consider 4 scaling factors in this study but jointly modeling all of them is time and resource

consuming. Instead, we treat finetuning data as the pivoting factor and perform joint scaling analysis

3

Published as a conference paper at ICLR 2024

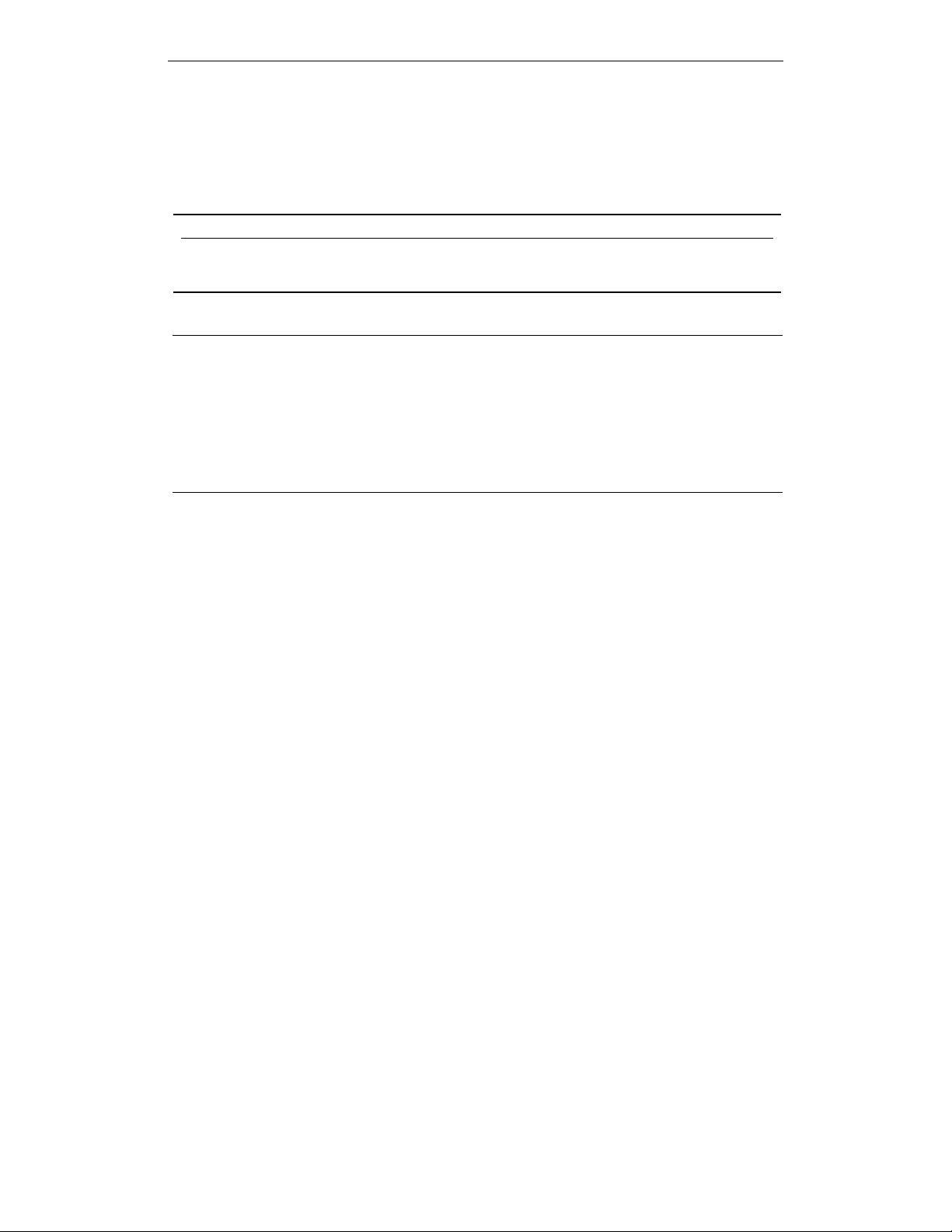

Figure 1: Fitted single-variable scaling laws for finetuning data scaling over different LLM model sizes on

WMT14 En-De. Solid lines denote fitted scaling curves. Filled circles and triangles denote fitting and held-out

data points. ∆

h

: mean absolute deviation on the held-out data.

0 1 2 3 4

#Sentences (finetuning)

1e6

0.8

0.9

1.0

1.1

1.2

1.3

Test PPL

FMT,

h

= 0.002

1b = 0.22

2b = 0.09

4b = 0.04

8b = 0.05

0.0 0.2 0.4 0.6 0.8 1.0

#Sentences (finetuning)

1e5

Prompt,

h

= 0.002

1b = 0.71

2b = 0.22

4b = 0.26

8b = 0.91

0.0 0.2 0.4 0.6 0.8 1.0

#Sentences (finetuning)

1e5

LoRA,

h

= 0.003

0.5

1.0

1.5

Model Size

1e10

1b = 0.06

2b = 0.32

4b = 0.17

8b = 0.83

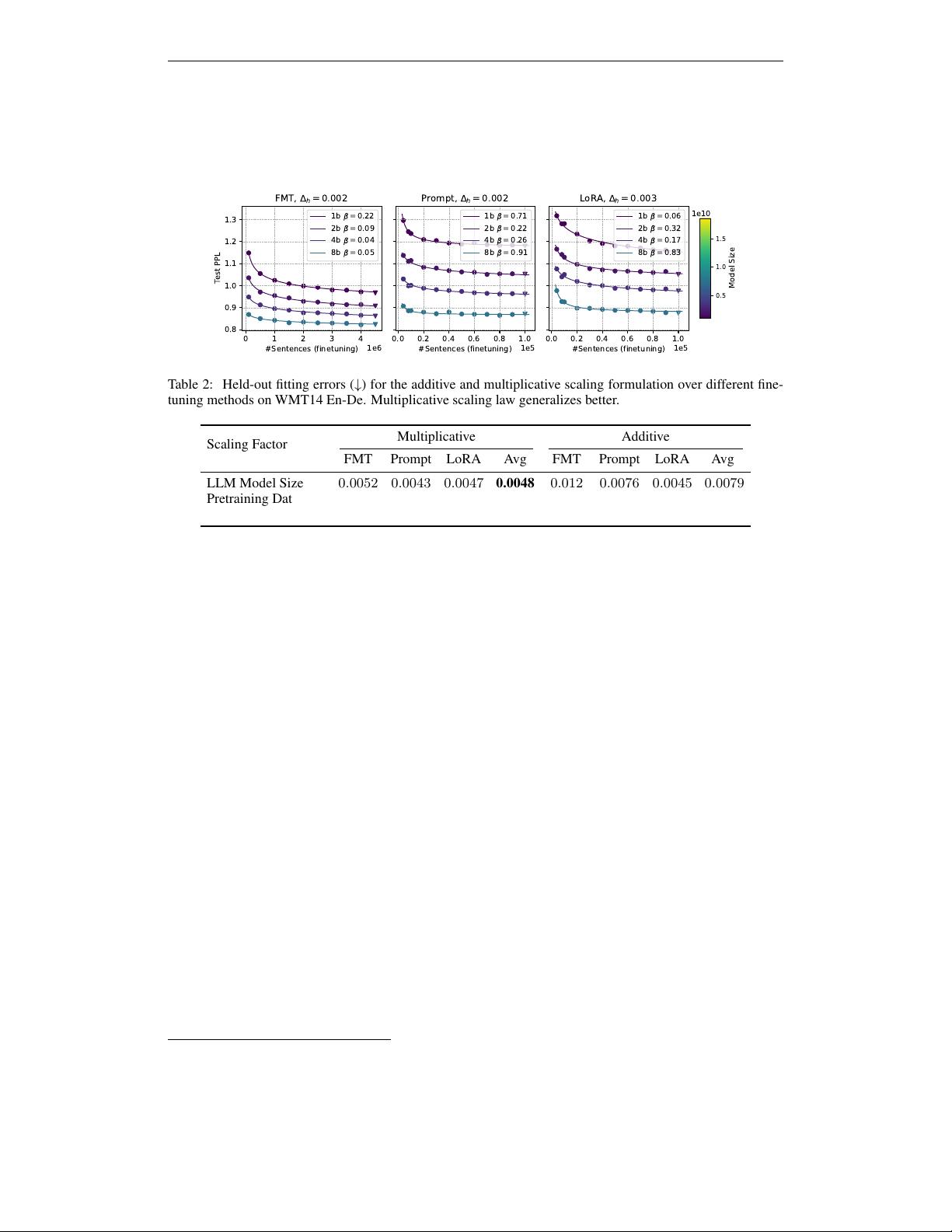

Table 2: Held-out fitting errors (↓) for the additive and multiplicative scaling formulation over different fine-

tuning methods on WMT14 En-De. Multiplicative scaling law generalizes better.

Scaling Factor

Multiplicative Additive

FMT Prompt LoRA Avg FMT Prompt LoRA Avg

LLM Model Size 0.0052 0.0043 0.0047 0.0048 0.012 0.0076 0.0045 0.0079

Pretraining Data Size 0.0057 0.0061 0.0084 0.0068 0.0048 0.0075 0.0082 0.0069

PET parameter size - 0.005 0.0031 0.004 - 0.0069 0.0032 0.005

between it and every other factor separately. Below, we start with finetuning experiments for FMT,

Prompt and LoRA on WMT14 En-De, and then explore the formulation for the joint scaling.

Finetuning data scaling follows a power law. We first examine the scaling over finetuning data size for each LLM model size independently, with a single-variable formulation:

$$\hat{L}(D_f) = \frac{A}{D_f^{\beta}} + E.$$

Following Hoffmann et al. (2022), we estimate {A, β, E} using the Huber loss (δ = 0.001) and the L-BFGS algorithm, and select the best fit from a grid of initializations. Figure 1 shows that the above formulation describes LLM finetuning data scaling well, with small predictive errors across model sizes and methods, echoing the findings of Hernandez et al. (2021). This scaling trend also implies that, while finetuning with a small number of examples can achieve decent results (Zhou et al., 2023; Gao et al., 2023), larger-scale finetuning data still improves downstream performance, especially when the downstream application is well defined.
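The single-variable fit can be sketched with SciPy's L-BFGS-B optimizer (the bounded variant of L-BFGS). This is a minimal sketch under several assumptions: A and E are parameterized as exp(log_a) and exp(log_e) to keep them positive, the Huber loss is applied to raw loss residuals, the grid of initial values and the bounds are hypothetical, and D_f is rescaled (for example to units of 10^5 examples) so that the grid is sensible. The paper's exact objective and grid may differ.

```python
import itertools

import numpy as np
from scipy.optimize import minimize


def huber(r, delta):
    """Elementwise Huber loss: quadratic for |r| <= delta, linear beyond."""
    abs_r = np.abs(r)
    return np.where(abs_r <= delta, 0.5 * r**2, delta * (abs_r - 0.5 * delta))


def power_law(params, d_f):
    """L(D_f) = A / D_f**beta + E, with A = exp(log_a) and E = exp(log_e)."""
    log_a, beta, log_e = params
    return np.exp(log_a) / d_f**beta + np.exp(log_e)


def fit_power_law(d_f, loss, delta=1e-3):
    """Return (A, beta, E) from the best of several L-BFGS-B runs."""
    d_f = np.asarray(d_f, dtype=float)
    loss = np.asarray(loss, dtype=float)

    def objective(params):
        return float(huber(power_law(params, d_f) - loss, delta).sum())

    # Bounds keep exp() and D_f**beta finite; the grid values are hypothetical.
    bounds = [(-10.0, 10.0), (0.0, 5.0), (-10.0, 10.0)]
    grid = itertools.product([-1.0, 0.0, 1.0], [0.25, 0.5, 1.0], [-1.0, -0.5, 0.0])
    best = None
    for x0 in grid:
        res = minimize(objective, x0=np.array(x0), method="L-BFGS-B", bounds=bounds,
                       options={"ftol": 1e-15, "gtol": 1e-12})
        if best is None or res.fun < best.fun:
            best = res  # keep the lowest-objective solution across initializations
    log_a, beta, log_e = best.x
    return float(np.exp(log_a)), float(beta), float(np.exp(log_e))
```

For example, with D_f = [1, 2, 4, 8, 16, 32] (hypothetical units of 10^5 examples) and noise-free losses generated from A = 0.5, β = 0.5, E = 0.8, the fit should recover values close to those parameters. Restarting from a grid guards against L-BFGS settling in a poor local minimum, which is the reason the text selects the best of several initializations.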

Additive or multiplicative joint scaling law for LLM finetuning? Figure 1 also shows a scaling pattern over LLM model sizes, suggesting the existence of a joint scaling law. We explore two formulations, the multiplicative one in Eq. (1) and the additive one (Hoffmann et al., 2022):

$$\hat{L}(X, D_f) = \frac{A}{X^{\alpha}} + \frac{B}{D_f^{\beta}} + E,$$

and compare them via empirical experiments.¹
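The practical difference between the two forms shows up when computing how much loss a fixed increase in finetuning data removes at two values of X. The sketch below uses hypothetical parameter values chosen only to make the arithmetic easy; they are not fitted values from the paper.

```python
def multiplicative(x, d_f, A, alpha, beta, E):
    """Eq. (1): L = A * X**(-alpha) * D_f**(-beta) + E."""
    return A / (x**alpha * d_f**beta) + E


def additive(x, d_f, A, B, alpha, beta, E):
    """Additive alternative: L = A / X**alpha + B / D_f**beta + E."""
    return A / x**alpha + B / d_f**beta + E


def data_gain(law, x, d_small, d_large, **params):
    """Loss removed by growing finetuning data from d_small to d_large at fixed X."""
    return law(x, d_small, **params) - law(x, d_large, **params)


mult = dict(A=1.0, alpha=0.5, beta=0.5, E=0.5)        # hypothetical values
add = dict(A=1.0, B=1.0, alpha=0.5, beta=0.5, E=0.5)  # hypothetical values
for x in (1.0, 4.0):
    print(x, data_gain(multiplicative, x, 1.0, 4.0, **mult),
          data_gain(additive, x, 1.0, 4.0, **add))
```

With these values, quadrupling D_f from 1 to 4 removes 0.5 loss at X = 1 under both forms, but at X = 4 the multiplicative form removes only 0.25 while the additive form still removes 0.5. The additive form thus assumes the benefit of extra finetuning data is independent of X, whereas the multiplicative form lets X modulate it.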

In both formulations, α and β reflect the impact of factor X and of finetuning data size on performance, respectively, and are factor-specific. E is a model- and task-dependent term describing the irreducible loss (Ghorbani et al., 2021). We notice that the meaning of β and E generalizes across different factors X, and thus propose to first estimate them jointly from the results of both LLM model scaling and pretraining data scaling.² Such joint fitting could also reduce overfitting and improve extrapolation ability. We apply the following joint fitting loss:

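$$\min_{a_X,\, b_X,\, \alpha_X,\, \beta,\, e} \; \sum_{X} \sum_{\text{run } i \text{ in factor } X} \mathrm{Huber}_{\delta}\Big(\hat{L}\big(X^i, D_f^i \mid a_X, b_X, \alpha_X, \beta, e\big) - L^i\Big), \qquad (2)$$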
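The joint objective in Eq. (2) can be sketched for the multiplicative form as below. Assumptions: the lowercase parameters are logarithms (a_X = log A_X, e = log E); β and e are shared across factors while a_X and α_X are factor-specific; and b_X, which only appears in the additive form, is omitted. `joint_loss` and its flat parameter layout are hypothetical. The resulting scalar can be minimized with L-BFGS from a grid of initializations, as described for the single-variable case.

```python
import numpy as np


def huber(r, delta):
    """Elementwise Huber loss: quadratic for |r| <= delta, linear beyond."""
    abs_r = np.abs(r)
    return np.where(abs_r <= delta, 0.5 * r**2, delta * (abs_r - 0.5 * delta))


def multiplicative_law(x, d_f, a_x, alpha_x, beta, e):
    """Eq. (1) with A_X = exp(a_X) and E = exp(e) (assumed log-parameterization)."""
    return np.exp(a_x) / (x**alpha_x * d_f**beta) + np.exp(e)


def joint_loss(params, runs, delta=1e-3):
    """Eq. (2)-style objective summed over every run of every factor X.

    params: [beta, e, a_X1, alpha_X1, a_X2, alpha_X2, ...]; beta and e are shared.
    runs:   one (x, d_f, observed_loss) tuple of arrays per factor X.
    """
    beta, e = params[0], params[1]
    total = 0.0
    for k, (x, d_f, observed) in enumerate(runs):
        a_x, alpha_x = params[2 + 2 * k], params[3 + 2 * k]  # factor-specific parameters
        predicted = multiplicative_law(x, d_f, a_x, alpha_x, beta, e)
        total += float(huber(predicted - observed, delta).sum())
    return total
```

Sharing β and e across the model-size and pretraining-data runs means every run constrains them, which is the overfitting-reduction argument made in the text.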

¹ For LLM model scaling, we omitted the newly added PET parameters because 1) they account for only a tiny proportion of the total, and 2) this proportion is similar across LLM model sizes. Take the 1B LLM as an example: |P| = 100 in Prompt adds 0.017% parameters, and r = 4 in LoRA adds 0.19% parameters. We also explored different formulations that account for the new PET parameters, which did not make a substantial difference.

² We did not consider PET parameter scaling when estimating β and E because this scaling is weak and ineffective, as shown in Section 4.
