
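Summary: This paper introduces MAGPIE, a new method for automatically generating high-quality instruction data directly from aligned large language models such as Llama-3-Instruct. MAGPIE exploits the model's auto-regressive nature to synthesize instructions and their responses without any external input, producing 4 million instructions with corresponding responses, from which 300K high-quality instances are selected. In comparisons against several public datasets, models trained on MAGPIE data perform close to, and in some cases better than, the official Llama-3-8B-Instruct, which shows MAGPIE's ability to improve model performance. Intended audience: researchers and engineers working on natural language processing. Use cases and goals: rapid generation of large-scale, high-quality instruction datasets to support further progress in open AI research. Specifically, MAGPIE addresses the low efficiency, poor scalability, and limited quality of existing open-source data creation methods, and supplies richer and more diverse data for subsequent model training. Additional notes: the paper also includes detailed experimental settings and data analysis supporting the effectiveness of MAGPIE, and provides several preset filter configurations so that users can tune dataset quality for different applications.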


MAGPIE: Alignment Data Synthesis from Scratch by Prompting Aligned LLMs with Nothing

Zhangchen Xu♠, Fengqing Jiang♠, Luyao Niu♠, Yuntian Deng♢, Radha Poovendran♠, Yejin Choi♠♢, Bill Yuchen Lin♢

♠University of Washington ♢Allen Institute for AI

https://magpie-align.github.io/

https://hf.co/magpie-align

Abstract

High-quality instruction data is critical for aligning large language models (LLMs). Although some models, such as Llama-3-Instruct, have open weights, their alignment data remain private, which hinders the democratization of AI. High human labor costs and a limited, predefined scope for prompting prevent existing open-source data creation methods from scaling effectively, potentially limiting the diversity and quality of public alignment datasets. Is it possible to synthesize high-quality instruction data at scale by extracting it directly from an aligned LLM? We present a self-synthesis method for generating large-scale alignment data named MAGPIE. Our key observation is that aligned LLMs like Llama-3-Instruct can generate a user query when we input only the left-side templates up to the position reserved for user messages, thanks to their auto-regressive nature. We use this method to prompt Llama-3-Instruct and generate 4 million instructions along with their corresponding responses. We perform a comprehensive analysis of the extracted data and select 300K high-quality instances. To compare MAGPIE data with other public instruction datasets (e.g., ShareGPT, WildChat, Evol-Instruct, UltraChat, OpenHermes, Tulu-V2-Mix), we fine-tune Llama-3-8B-Base with each dataset and evaluate the performance of the fine-tuned models. Our results indicate that in some tasks, models fine-tuned with MAGPIE perform comparably to the official Llama-3-8B-Instruct, despite the latter being enhanced with 10 million data points through supervised fine-tuning (SFT) and subsequent feedback learning. We also show that using MAGPIE solely for SFT can surpass the performance of previous public datasets utilized for both SFT and preference optimization, such as direct preference optimization with UltraFeedback. This advantage is evident on alignment benchmarks such as AlpacaEval, ArenaHard, and WildBench, and importantly, it is achieved without compromising performance on reasoning tasks like MMLU-Redux, despite the alignment tax.

1 Introduction

Large language models (LLMs) such as GPT-4 [1] and Llama-3 [40] have become integral to AI applications due to their exceptional performance on a wide array of tasks by following instructions. The success of LLMs is heavily reliant on the data used for instruction fine-tuning, which equips them to handle a diverse range of tasks, including those not encountered during training. The effectiveness of this instruction tuning depends crucially on access to high-quality instruction datasets. However, the alignment datasets used for fine-tuning models like Llama-3-Instruct are typically private, even when the model weights are open, which impedes the democratization of AI and limits scientific research for understanding and enhancing LLM alignment.

To address the challenges in constructing such datasets, researchers have developed two main approaches. The first type of method involves human effort to generate and curate instruction data

arXiv:2406.08464v1 [cs.CL] 12 Jun 2024

WildChat

OpenHermes

Tulu V2 Mix

UltraFeedback

ShareGPT

Magpie

-

Air

Magpie

-

Pro

Llama

-

3

-

Instruct

5%

10%

25.08

22.66

9.94

9.91 9.73

10.90

18.36

22.92

15%

20%

25%

30%

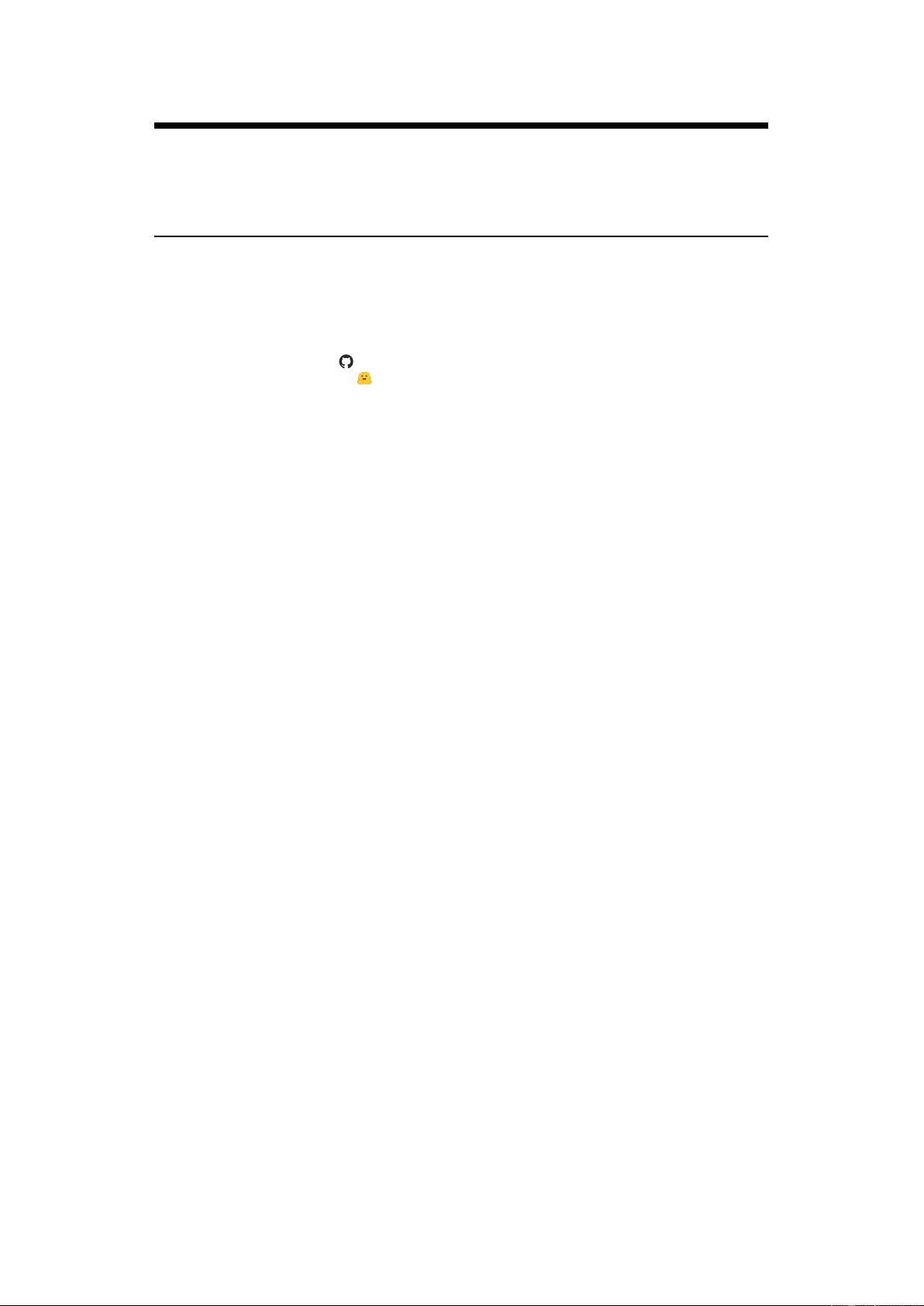

Step 1

<|start_header_id|>user

<|end_header_id|>

LLM

<|start_header_id|>user

<|end_header_id|>

What materials should I

use to build a nest?

<|start_header_id|>

assistant<|end_header_id|>

Building a nest! That’s a

wonderful project! ……

Instruction

Response

Instruction: What materials

should I use to build a nest?

Response: Building a nest!

That’s a wonderful project!

……

What materials should I

use to build a nest?

Step 2

SFT Only

SFT + DPO

SFT + RLHF

Filters

SFT

AlpacaEval 2

(Length Control)

MAGPIE

Evol Instruct

14.62

Length Control Win Rate

“Other birds collect twigs for their nests. Magpies acquire jewels for theirs.”

Figure 1: This figure illustrates the process of self-synthesizing instruction data from aligned LLMs (e.g., Llama-3-8B-Instruct) to create a high-quality instruction dataset. In Step 1, we input only the pre-query template into the aligned LLM and generate an instruction along with its response using auto-regressive generation. In Step 2, we use a combination of a post-query template and another pre-query template to wrap the instruction from Step 1, prompting the LLM to generate the query for the second turn. This completes the construction of the instruction dataset. MAGPIE efficiently generates diverse and high-quality instruction data. Our experimental results show that MAGPIE outperforms other public datasets for aligning Llama-3-8B-base.

[14, 26, 64, 65, 66], which is both time-consuming and labor-intensive [37]. In contrast, the second type of method uses LLMs to produce synthetic instructions [16, 31, 46, 47, 53, 55, 58, 59]. Although these methods reduce human effort, their success heavily depends on prompt engineering and the careful selection of initial seed questions. Moreover, the diversity of synthetic data tends to decrease as the dataset size grows. Despite ongoing efforts, the scalable creation of high-quality and diverse instruction datasets continues to be a challenging problem.

Is it possible to synthesize high-quality instructions at scale by directly extracting data from advanced aligned LLMs themselves? A typical input to an aligned LLM contains three key components: the pre-query template, the query, and the post-query template. For instance, an input to Llama-2-chat could be “[INST] Hi! [/INST]”, where [INST] is the pre-query template and [/INST] is the post-query template. These templates are predefined by the creators of the aligned LLMs to ensure the correct prompting of the models. We observe that when we only input the pre-query template to aligned LLMs such as Llama-3-Instruct, they self-synthesize a user query due to their auto-regressive nature. Our preliminary experiments indicate that these random user queries are of high quality and great diversity, suggesting that the abilities learned during the alignment process are effectively utilized.
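To make the three components concrete, the following minimal Python sketch splits the Llama-2-chat example above into its pre-query template, query, and post-query template. The helper name `split_prompt` and the `PromptParts` type are hypothetical and introduced only for illustration; the whitespace handling is an assumption, not part of any model's official template.

```python
from typing import NamedTuple


class PromptParts(NamedTuple):
    """The three components of an input to an aligned LLM."""
    pre_query: str
    query: str
    post_query: str


def split_prompt(prompt: str, pre_query: str, post_query: str) -> PromptParts:
    """Split a full prompt into pre-query template, query, and post-query template."""
    if not prompt.startswith(pre_query) or not prompt.endswith(post_query):
        raise ValueError("prompt does not match the given templates")
    # Everything between the two templates is the user's query.
    query = prompt[len(pre_query): len(prompt) - len(post_query)]
    return PromptParts(pre_query, query.strip(), post_query)


# The Llama-2-chat example from the text.
parts = split_prompt("[INST] Hi! [/INST]", "[INST]", "[/INST]")

# The observation in the text: only the pre-query template is given to the
# model, which then continues the sequence with a user query of its own.
magpie_input = parts.pre_query
```

Here `parts.query` holds the user message, while `magpie_input` contains no query at all, which is the input the paragraph above describes.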

Based on these findings, we developed a self-synthesis method to construct high-quality instruction datasets at scale, named MAGPIE (as illustrated in Figure 1). Unlike existing methods, our approach does not rely on prompt engineering or seed questions. Instead, it directly constructs instruction data by prompting aligned LLMs with a pre-query template for sampling instructions. We applied this method to the Llama-3-8B-Instruct and Llama-3-70B-Instruct models, creating two instruction datasets: MAGPIE-Air and MAGPIE-Pro, respectively.

Our MAGPIE-Air and MAGPIE-Pro datasets were created using 206 and 614 GPU hours, respectively, without requiring any human intervention or API access to production LLMs like GPT-4. Additionally, we generated two multi-turn instruction datasets, MAGPIE-Air-MT and MAGPIE-Pro-MT, which contain sequences of multi-turn instructions and responses. The statistics and advantages of our instruction datasets compared to existing ones are summarized in Table 1. We perform a comprehensive analysis of the generated data, allowing practitioners to filter and select data instances from these datasets for fine-tuning according to their particular needs.

To compare MAGPIE data with other public instruction datasets (e.g., ShareGPT [10], WildChat [64], Evol Instruct [58], UltraChat [16], OpenHermes [49], Tulu V2 Mix [24]) and various preference tuning strategies with UltraFeedback [13], we fine-tune the Llama-3-8B-Base model with each dataset and assess the performance of the resultant models on LLM alignment benchmarks such as AlpacaEval 2 [33], Arena-Hard [32], and WildBench [34]. Our results show that models fine-tuned with MAGPIE achieve superior performance, even surpassing on AlpacaEval the official Llama-3-8B-Instruct model, which was fine-tuned with over 10 million data points for supervised fine-tuning (SFT) and follow-up feedback learning. Not only does MAGPIE excel in SFT alone compared to prior public datasets that incorporate both SFT and preference optimization (e.g., direct preference


Table 1: Statistics of instruction datasets generated by MAGPIE compared to other instruction datasets. Tokens are counted using the tiktoken library [42].

| Instruction Source | Dataset Name | #Convs | #Turns | Human Effort | Response Generator | #Tokens / Turn | #Total Tokens |
|---|---|---|---|---|---|---|---|
| Synthetic | Alpaca [47] | 52K | 1 | Low | text-davinci-003 | 67.38 ± 54.88 | 3.5M |
| Synthetic | Evol Instruct [58] | 143K | 1 | Low | ChatGPT | 473.33 ± 330.13 | 68M |
| Synthetic | UltraChat [16] | 208K | 3.16 | Low | ChatGPT | 376.58 ± 177.81 | 238M |
| Human | Dolly [14] | 15K | 1 | High | ChatGPT | 94.61 ± 135.84 | 1.42M |
| Human | ShareGPT [66] | 112K | 4.79 | High | ChatGPT | 465.38 ± 368.37 | 201M |
| Human | WildChat [64] | 652K | 2.52 | High | GPT-3.5 & GPT-4 | 727.09 ± 818.84 | 852M |
| Human | LMSYS-Chat-1M [65] | 1M | 2.01 | High | Mix | 260.37 ± 346.97 | 496M |
| Mixture | Deita [38] | 9.5K | 22.02 | - | Mix | 372.78 ± 182.97 | 74M |
| Mixture | OpenHermes [49] | 243K | 1 | - | Mix | 297.86 ± 258.45 | 72M |
| Mixture | Tulu V2 Mixture [24] | 326K | 2.31 | - | Mix | 411.94 ± 447.48 | 285M |
| MAGPIE | Llama-3-MAGPIE-Air | 3M | 1 | No | Llama-3-8B | 426.39 ± 217.39 | 1.28B |
| MAGPIE | Llama-3-MAGPIE-Air-MT | 300K | 2 | No | Llama-3-8B | 610.80 ± 90.61 | 366M |
| MAGPIE | Llama-3-MAGPIE-Pro | 1M | 1 | No | Llama-3-70B | 478.00 ± 211.09 | 477M |
| MAGPIE | Llama-3-MAGPIE-Pro-MT | 300K | 2 | No | Llama-3-70B | 554.53 ± 133.64 | 333M |

optimization with UltraFeedback [13]), but it also delivers the best results when evaluated against six baseline instruction datasets and four preference tuning methods (DPO [44], IPO [2], KTO [19], and ORPO [23] with the UltraFeedback dataset). These findings show the exceptional quality of instruction data generated by MAGPIE, enabling it to outperform even the official, extensively optimized LLMs.

2 MAGPIE: A Scalable Method to Synthesize Instruction Data

Overview of MAGPIE. In what follows, we describe our method, MAGPIE, to synthesize instruction data for fine-tuning LLMs. An instance of instruction data consists of one or more instruction-response pairs. Each pair specifies the roles of instruction provider and follower, along with their instruction and response. As shown in Figure 1, MAGPIE consists of two steps: (1) instruction generation, and (2) response generation. The pipeline of MAGPIE can be fully automated without any human intervention. Given the data generated by MAGPIE, practitioners may customize and build their own personalized instruction dataset accordingly (see Section 3 and Appendix B for more details). We detail each step in the following.

Step 1: Instruction Generation. The goal of this step is to generate an instruction for each instance of instruction data. Given an open-weight aligned LLM (e.g., Llama-3-70B-Instruct), MAGPIE crafts an input query in the format of the predefined instruction template of the LLM. This query defines only the role of instruction provider (e.g., user), and does not provide any instruction. Note that the auto-regressive LLM has been fine-tuned using instruction data in the format of the predefined instruction template. Thus, the LLM autonomously generates an instruction when the query crafted by MAGPIE is given as an input. MAGPIE stops generating the instruction once the LLM produces an end-of-sequence token. Sending the crafted query to the LLM multiple times leads to a set of instructions. Compared with existing synthetic approaches [16, 31, 47, 53, 55, 58, 59], MAGPIE does not require specific prompt engineering techniques since the crafted query follows the format of the predefined instruction template. In addition, MAGPIE autonomously generates instructions without using any seed question, ensuring the diversity of generated instructions.
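A minimal Python sketch of Step 1 follows, assuming the pre-query template shown in Figure 1. The exact template string, the stop marker `<|eot_id|>`, and the `sample` callable (a stand-in for one sampling call to an aligned LLM) are assumptions for illustration, not the authors' implementation. The second canned query in the demo is invented.

```python
import itertools
from typing import Callable, List

# Assumed pre-query template: it names the user role but carries no instruction.
PRE_QUERY_TEMPLATE = "<|start_header_id|>user<|end_header_id|>\n\n"
# Assumed end-of-turn marker at which instruction generation stops.
END_OF_TURN = "<|eot_id|>"


def generate_instructions(sample: Callable[[str], str], n: int) -> List[str]:
    """Send the same pre-query template n times and collect the sampled instructions."""
    instructions: List[str] = []
    for _ in range(n):
        completion = sample(PRE_QUERY_TEMPLATE)
        # Keep only the text produced before the stop marker.
        instruction = completion.split(END_OF_TURN, 1)[0].strip()
        if instruction:  # drop empty generations
            instructions.append(instruction)
    return instructions


# Hypothetical stand-in for an LLM: cycles through canned user queries.
_canned = itertools.cycle([
    "What materials should I use to build a nest?",
    "How do magpies find food in winter?",
])


def fake_llm(prompt: str) -> str:
    return next(_canned) + END_OF_TURN


demo_instructions = generate_instructions(fake_llm, 3)
```

With a real model, `sample` would wrap a stochastic decoding call, so repeated calls with the identical prompt yield different instructions; no seed question appears anywhere in the input.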

Step 2: Response Generation. The goal of this step is to generate responses to the instructions obtained from Step 1. MAGPIE sends these instructions to the LLM to generate the corresponding responses. Combining the roles of instruction provider and follower, the instructions from Step 1, and the responses generated in Step 2 yields the instruction dataset. Detailed discussion on the generation configuration can be found in Appendix D.
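Step 2 can be sketched in the same spirit. The template strings below follow the tokens visible in Figure 1 plus an assumed `<|eot_id|>` separator, and `complete` is a hypothetical stand-in for a call to the aligned LLM; none of these names come from the paper, and the actual generation configuration is the one the authors describe in Appendix D.

```python
from typing import Callable, Dict, List

# Assumed templates in the Llama-3-Instruct chat format.
PRE_QUERY = "<|start_header_id|>user<|end_header_id|>\n\n"
POST_QUERY = "<|eot_id|><|start_header_id|>assistant<|end_header_id|>\n\n"

Conversation = List[Dict[str, str]]


def build_response_prompt(instruction: str) -> str:
    """Wrap an instruction with the pre-query and post-query templates."""
    return f"{PRE_QUERY}{instruction}{POST_QUERY}"


def generate_conversations(
    instructions: List[str], complete: Callable[[str], str]
) -> List[Conversation]:
    """Pair each instruction with a generated response, keeping both roles."""
    dataset: List[Conversation] = []
    for instruction in instructions:
        response = complete(build_response_prompt(instruction)).strip()
        dataset.append([
            {"role": "user", "content": instruction},       # instruction provider
            {"role": "assistant", "content": response},     # instruction follower
        ])
    return dataset


# Hypothetical stand-in for the LLM, returning the response shown in Figure 1.
def fake_llm(prompt: str) -> str:
    return " Building a nest! That's a wonderful project! "


conversations = generate_conversations(
    ["What materials should I use to build a nest?"], fake_llm
)
```

Each element of `conversations` is one instance of instruction data: a list of role-tagged turns that can be written out directly as a fine-tuning record.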

Extensions of MAGPIE. MAGPIE can be readily extended to generate multi-turn instruction datasets and preference datasets. In addition, practitioners can specify the task requested by the instructions. We defer the detailed discussion on these extensions to Appendix A.


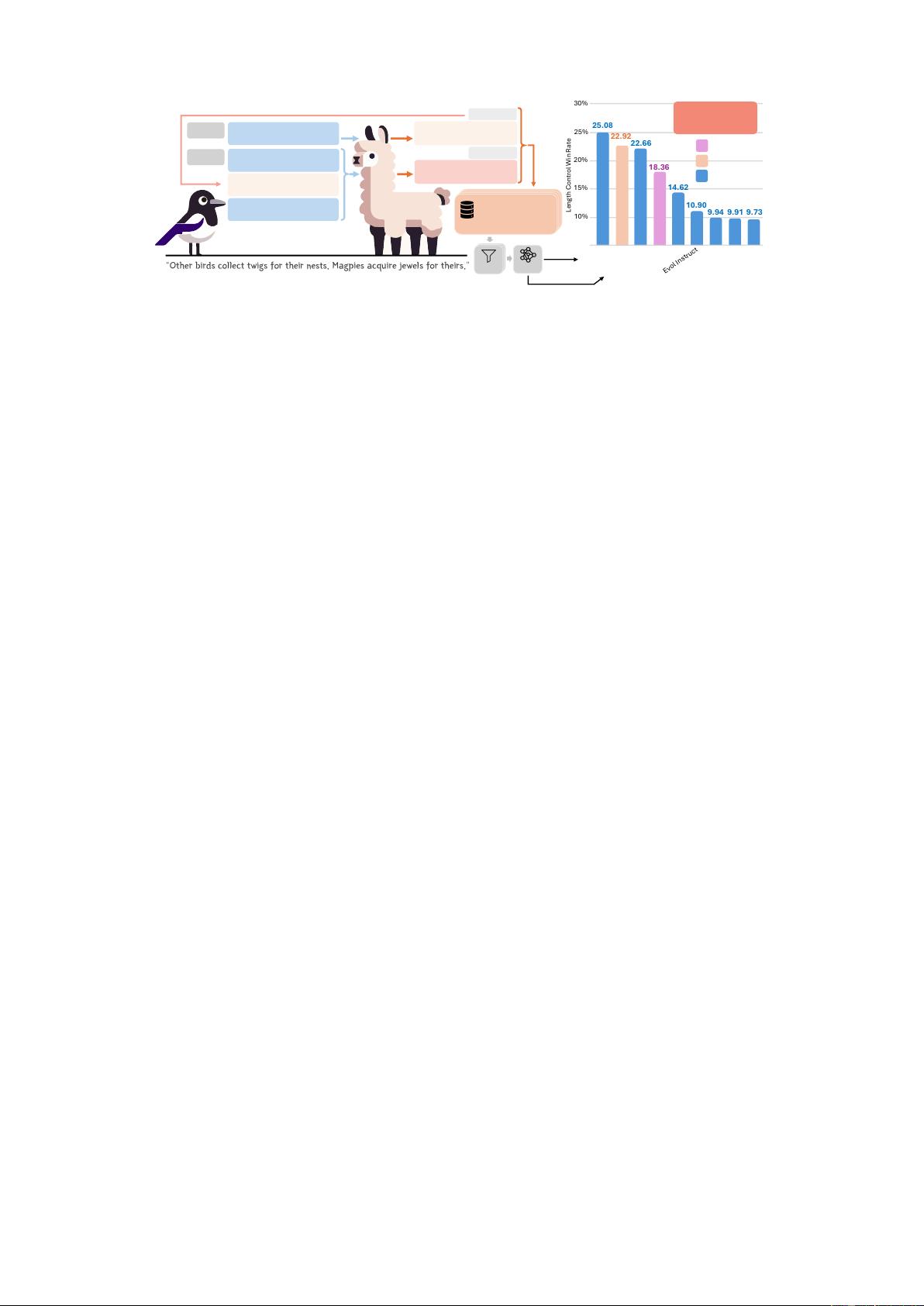

(a) Input Length of MAGPIE-Air (in tokens)

(b) Output Length of MAGPIE-Air (in tokens)

(c) Input Length of MAGPIE-Pro (in tokens)

(d) Output Length of MAGPIE-Pro (in tokens)

Figure 2: Lengths of instructions and responses in MAGPIE-Air/Pro.

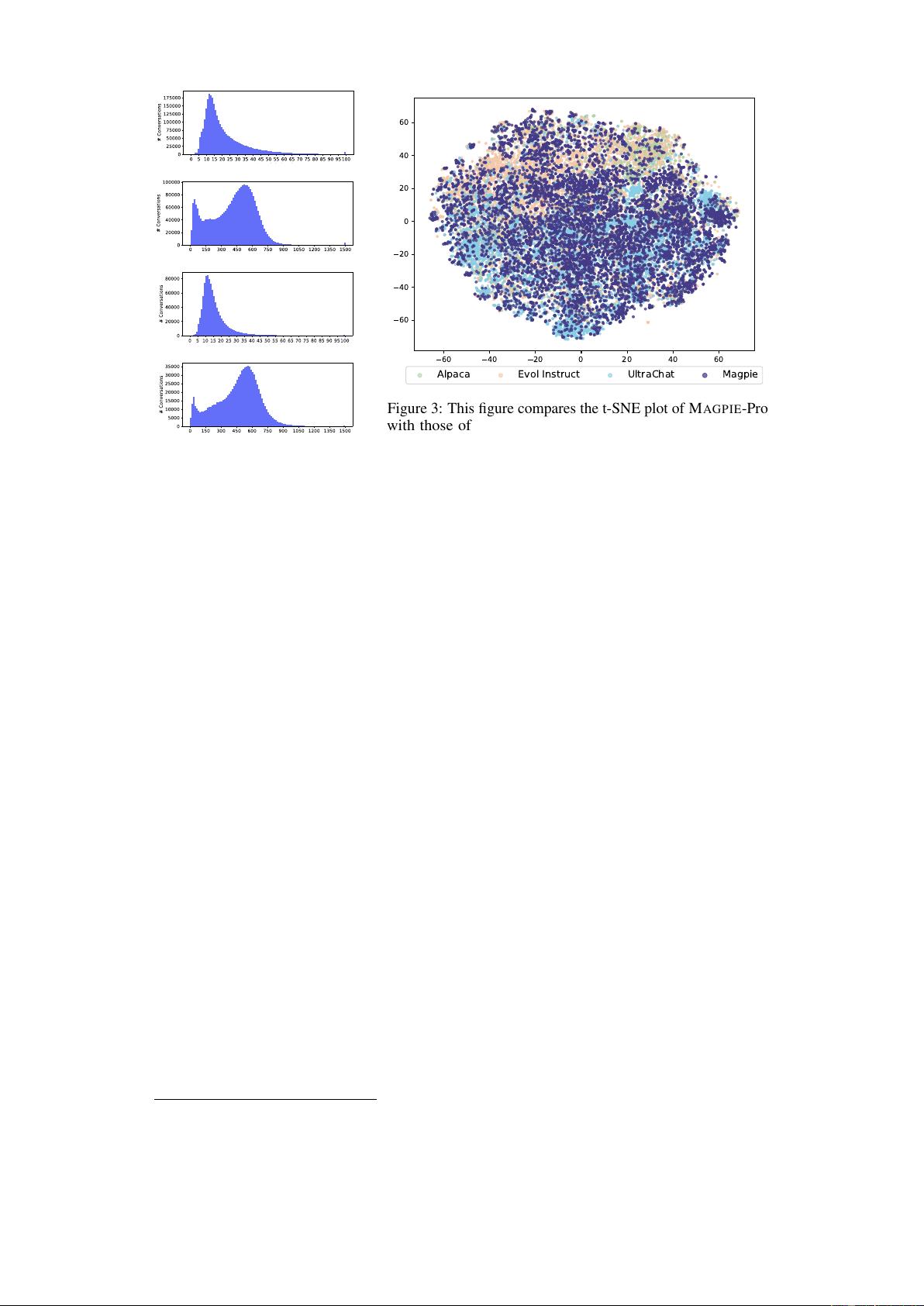

[t-SNE scatter plot. Legend: Alpaca, Evol Instruct, UltraChat, Magpie.]

Figure 3: This figure compares the t-SNE plot of MAGPIE-Pro with those of Alpaca, Evol Instruct, and UltraChat, each of which is sampled with 10,000 instructions. The t-SNE plot of MAGPIE-Pro encompasses the area covered by the other plots, demonstrating the comprehensive coverage of MAGPIE-Pro.

3 Dataset Analysis

We apply MAGPIE to the Llama-3-8B-Instruct and Llama-3-70B-Instruct models to construct two instruction datasets: MAGPIE-Air and MAGPIE-Pro, respectively. Examples of instances in both datasets can be found in Appendix G. In this section, we present a comprehensive statistical analysis of the MAGPIE-Air and MAGPIE-Pro datasets. An overview of the lengths of instructions and responses of the data in MAGPIE-Air and MAGPIE-Pro is presented in Figure 2. In what follows, we first assess the breadth of MAGPIE-Pro by analyzing its coverage. We then discuss the attributes of MAGPIE-Pro, including topic coverage, difficulty, quality, and similarity of instructions, as well as quality of response. Finally, we provide the safety analysis and cost analysis. Using our dataset analysis, practitioners can customize and configure their own datasets for fine-tuning LLMs. In Appendix B, we showcase the process of customizing and filtering an instruction dataset based on our analysis. Specifically, we select 300K instances from MAGPIE-Pro and MAGPIE-Air-Filtered, yielding datasets MAGPIE-Pro-300K and MAGPIE-Air-300K-Filtered, respectively.

3.1 Dataset Coverage

We follow the approach in [64] and analyze the coverage of MAGPIE-Pro in the embedding space. Specifically, we use the all-mpnet-base-v2 embedding model¹ to calculate the input embeddings, and employ t-SNE [51] to project these embeddings into a two-dimensional space. We adopt three synthetic datasets as baselines, including Alpaca [47], Evol Instruct [58], and UltraChat [16], to demonstrate the coverage of MAGPIE-Pro.

Figure 3 presents the t-SNE plots of MAGPIE-Pro, Alpaca, Evol Instruct, and UltraChat. Each t-SNE plot is generated by randomly sampling 10,000 instructions from the associated dataset. We observe that the t-SNE plot of MAGPIE-Pro encompasses the area covered by the plots of Alpaca, Evol Instruct, and UltraChat. This suggests that MAGPIE-Pro provides a broader or more diverse range of topics, highlighting its extensive coverage across varied themes and subjects. We also follow the practice in [53] and present the most common verbs and their top direct noun objects in instructions in Appendix C, indicating the diverse topic coverage of the MAGPIE dataset. Coverage analysis of MAGPIE-Air can also be found in Appendix C.

¹ https://huggingface.co/sentence-transformers/all-mpnet-base-v2


3.2 Dataset Attributes

Attribute: Task Categories of Instructions. We use Llama-3-8B-Instruct to categorize the instances in MAGPIE-Pro (see Figure 7 in Appendix C.1 for detail). The prompts used to query Llama-3-8B-Instruct can be found in Appendix F. Our observations indicate that over half of the tasks in MAGPIE-Pro pertain to information seeking, making it the predominant category. This is followed by tasks involving creative writing, advice seeking, planning, and math. This distribution over the task categories aligns with the practical requests from human users [33].

Figure 4: The statistics of input difficulty and quality. (a) Statistics on Input Quality. (b) Statistics on Input Difficulty.

Attribute: Quality of Instructions. We use the Llama-3-8B-Instruct model to assess the quality of each instruction in MAGPIE-Air and MAGPIE-Pro, categorizing them as ‘very poor’, ‘poor’, ‘average’, ‘good’, and ‘excellent’. We present the histograms of qualities for both datasets in Figure 4-(a). We have the following two observations. First, both datasets are of high quality, with the majority of instances rated ‘average’ or higher. In addition, the overall quality of MAGPIE-Pro surpasses that of MAGPIE-Air. We hypothesize that this is due to the enhanced capabilities of Llama-3-70B compared with Llama-3-8B.
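Once each instruction carries one of the five quality labels, the histogram and the "rated ‘average’ or higher" share reduce to simple counting. The sketch below assumes the labels are already available as plain strings; how the rater is prompted is described in the paper's Appendix F and is not reproduced here. The function names and the toy labels are hypothetical.

```python
from collections import Counter
from typing import Dict, Iterable

# The five quality levels from the text, ordered from worst to best.
QUALITY_LEVELS = ["very poor", "poor", "average", "good", "excellent"]


def quality_histogram(labels: Iterable[str]) -> Dict[str, int]:
    """Count how many instructions fall into each quality level."""
    counts = Counter(labels)
    unknown = set(counts) - set(QUALITY_LEVELS)
    if unknown:
        raise ValueError(f"unexpected quality labels: {sorted(unknown)}")
    return {level: counts.get(level, 0) for level in QUALITY_LEVELS}


def share_at_least(hist: Dict[str, int], threshold: str) -> float:
    """Fraction of instructions rated at the threshold level or higher."""
    total = sum(hist.values())
    start = QUALITY_LEVELS.index(threshold)
    kept = sum(hist[level] for level in QUALITY_LEVELS[start:])
    return kept / total if total else 0.0


# Toy labels, made up for the demo (not taken from the datasets).
toy_labels = ["good", "average", "poor", "excellent", "good"]
toy_hist = quality_histogram(toy_labels)
toy_share = share_at_least(toy_hist, "average")
```

The same two functions apply unchanged to the difficulty labels if `QUALITY_LEVELS` is swapped for the five difficulty levels.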

Attribute: Difficulty of Instructions. We use the Llama-3-8B-Instruct model to rate the difficulty of each instruction in MAGPIE-Air and MAGPIE-Pro. Each instruction can be labeled as ‘very easy’, ‘easy’, ‘medium’, ‘hard’, or ‘very hard’. Figure 4-(b) presents the histograms of the levels of difficulty for MAGPIE-Air and MAGPIE-Pro. We observe that the distributions across difficulty levels are similar for MAGPIE-Air and MAGPIE-Pro. Some instructions in MAGPIE-Pro are more challenging than those in MAGPIE-Air because MAGPIE-Pro is generated by a more capable model (Llama-3-70B-Instruct).

Figure 5: This figure summarizes the minimum neighbor distances and reward differences. (a) Min Neighbor Distance of MAGPIE-Air. (b) Reward Difference of Base Model and Instruct Model.

Attribute: Instruction Similarity. We quantify the similarity among instructions generated by MAGPIE to remove repetitive instructions. We measure the similarity using minimum neighbor distance in the embedding space. Specifically, we first represent all instructions in the embedding space using the all-mpnet-base-v2 embedding model. For any given instruction, we then calculate the minimum distance from the instruction to its nearest neighbors in the embedding space using Facebook AI Similarity Search (FAISS) [17]. The minimum neighbor distances of instructions in MAGPIE-Air after removing repetitions are summarized in Figure 5-(a).
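The minimum neighbor distance itself is easy to state in code. The sketch below deliberately swaps FAISS for an exhaustive pairwise search in pure Python and uses Euclidean distance, which is an assumption (the text does not name the metric). It is meant for illustration on small inputs only, and the toy vectors stand in for real sentence embeddings.

```python
import math
from typing import List, Sequence


def min_neighbor_distances(embeddings: Sequence[Sequence[float]]) -> List[float]:
    """For each embedding, return the distance to its closest other embedding."""
    n = len(embeddings)
    distances: List[float] = []
    for i in range(n):
        best = math.inf  # stays infinite if there is no other point
        for j in range(n):
            if i != j:
                best = min(best, math.dist(embeddings[i], embeddings[j]))
        distances.append(best)
    return distances


# Toy 2-D "embeddings": the first two points are close, the third is far away.
toy_embeddings = [(0.0, 0.0), (0.0, 1.0), (3.0, 4.0)]
toy_distances = min_neighbor_distances(toy_embeddings)
```

A small minimum neighbor distance marks an instruction that has a near-duplicate elsewhere in the dataset. The brute-force loop is quadratic in the number of instructions, which is why an index such as FAISS is the practical choice at the scale of millions of instructions.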

Attribute: Quality of Responses. We assess the quality of responses using a metric named reward difference. For each instance in our dataset, the reward difference is calculated as $r^{*} - r_{\text{base}}$, where $r^{*}$ is the reward assigned by a reward model to the response in our dataset, and $r_{\text{base}}$ is the reward assigned by the same model to the response generated by the Llama-3 base model for the same instruction. We use URIAL [35] to elicit responses from the base model. A positive reward difference indicates that the response from our dataset is of higher quality, and could potentially benefit instruction tuning. In our experiments, we follow [29] and use FsfairX-LLaMA3-RM-v0.1 [57] as our reward model. Our results on the reward difference are presented in Figure 5-(b).
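As a small illustration of the metric, the sketch below computes the reward difference for one instance. The `reward` callable is a hypothetical stand-in for a reward model, and the word-count scorer used in the demo is a toy placeholder with no relation to FsfairX-LLaMA3-RM-v0.1.

```python
from typing import Callable

# A reward function maps (instruction, response) to a scalar score.
RewardFn = Callable[[str, str], float]


def reward_difference(
    reward: RewardFn, instruction: str, dataset_response: str, base_response: str
) -> float:
    """Return r* - r_base for one instance, scoring both responses with the same model."""
    r_star = reward(instruction, dataset_response)  # response stored in the dataset
    r_base = reward(instruction, base_response)     # response from the base model
    return r_star - r_base


# Toy placeholder scorer: counts the words in the response.
def toy_reward(instruction: str, response: str) -> float:
    return float(len(response.split()))


toy_diff = reward_difference(
    toy_reward,
    "What materials should I use to build a nest?",
    "Twigs, grass, and mud work well.",
    "Twigs.",
)
```

A positive value, as in this toy case, means the dataset response scored higher than the base-model response under the chosen reward function.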

3.3 Safety Analysis

We use Llama-Guard-2 [48] to analyze the safety of MAGPIE-Air and MAGPIE-Pro. Our results indicate that both datasets are predominantly safe, with less than 1% of the data potentially containing harmful instructions or responses. Please refer to Appendix C.2 for detailed safety analysis.
