
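Summary: This work presents a technique that uses curiosity-driven exploration to improve the effectiveness and diversity of automated red teaming of large language models (LLMs). Existing methods typically rely on reinforcement learning (RL), but they struggle to achieve both diversity and effectiveness in the generated test cases. The proposed method introduces a curiosity reward mechanism that adds a novelty reward while optimizing for effective test cases, which increases test case diversity while maintaining effectiveness. Experiments show that the method performs well on text continuation and also finds more inappropriate responses in instruction following tasks and in LLMs tuned with human preferences. The authors run extensive experiments and analyses to validate the method's effectiveness and generality. Intended audience: natural language processing researchers, machine learning engineers, and cybersecurity specialists. Use cases and goals: safety and robustness evaluation of large language models, especially automated red teaming of models that may generate harmful content, to uncover potential safety risks. Other notes: the paper provides detailed experimental settings and result analyses, emphasizing the method's practicality and novelty. The authors also discuss future research directions, particularly further improvements to RL exploration strategies.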


Under review as a conference paper at ICLR 2024

CURIOSITY-DRIVEN RED-TEAMING FOR LARGE LANGUAGE MODELS

Anonymous authors

Paper under double-blind review

ABSTRACT

Large language models (LLMs) hold great potential for various natural language applications but risk generating incorrect or toxic content. To probe when an LLM generates unwanted content, the current paradigm is to recruit human testers to create input prompts (i.e., test cases) designed to elicit unfavorable responses from LLMs. This procedure is called red teaming. However, relying solely on human testers can be both expensive and time-consuming. Recent works automate red teaming by training LLMs (i.e., red-team LLMs) with reinforcement learning (RL) to maximize the chance of eliciting undesirable responses (i.e., successful test cases) from the target LLMs being evaluated. However, while effective at provoking undesired responses, current RL methods lack test case diversity: once they find a few successful test cases, they tend to keep generating them (i.e., high precision but low diversity). To overcome this limitation, we propose using curiosity-driven exploration that optimizes for novelty to train red-team models to generate a set of diverse and effective test cases. We evaluate our method by performing red teaming against LLMs in text continuation and instruction following tasks. Our experiments show that curiosity-driven exploration achieves greater diversity in all the experiments compared to existing RL-based red-team methods while maintaining effectiveness. Remarkably, curiosity-driven exploration also improves effectiveness when red teaming in instruction following tasks, generating more successful test cases. Finally, we demonstrate that the proposed approach successfully provokes toxic responses from a LLaMA2 model that has undergone substantial finetuning based on human preferences.

WARNING: This paper contains model outputs which are offensive in nature.

1 INTRODUCTION

Large language models (LLMs) have achieved unprecedented success in question answering, virtual assistance, summarization, and other applications of natural language processing (NLP). A major issue in deploying LLMs is the potential generation of misinformation and harmful content (Lee, 2016). However, since LLMs often consist of millions or billions of parameters, inspecting which prompts trigger an LLM to produce unwanted text (e.g., toxic, hateful, or untruthful) is challenging. Ideally, before an LLM is deployed, it should be tested to ensure it cannot be prompted to produce an undesired response. The current paradigm (Ganguli et al., 2022) for testing models relies on human testers to design test cases (i.e., prompts) that elicit unwanted responses from the target LLM. This paradigm is called red teaming, and the human testers are called red teams. Since relying solely on human red teams is costly and time-consuming, a promising alternative is to automate test case generation using another LLM (Perez et al., 2022).

The idea is to train a red-team LLM (Perez et al., 2022), which is a different model from the target LLM, using reinforcement learning (RL) (Sutton & Barto, 2018). Assuming access to a function that can score how unwanted a particular piece of text is, RL treats the red-team LLM as a policy that generates test cases to maximize the likelihood of unwanted responses from the target LLM. Existing RL-based methods (Perez et al., 2022) can create effective test cases that provoke undesired responses from the target LLM. However, these test cases lack diversity, resulting in low coverage of the space of prompts that can result in undesirable responses. Insufficient coverage implies that the target LLM is not thoroughly evaluated, potentially missing numerous prompts that can trigger unwanted responses.

The primary reason current RL methods produce a low diversity of test cases is that they are trained solely to identify effective test cases. Once a few effective test cases are found, RL-based methods persistently reproduce them in pursuit of high reward, which is proportional to the effectiveness of the prompt, and converge towards a deterministic policy (Puterman, 2014). Thus, these RL-based approaches tend to overlook alternative but equally effective test cases, leading to a low diversity of generated test cases (Bengio et al., 2021).

To improve diversity, one solution is to introduce stochasticity into the policy (i.e., the red-team model). Adding randomness to the policy prevents it from becoming deterministic, hence enhancing diversity. Increasing the sampling temperature (Softmax function, 2023) of the red-team LLM or adding an entropy bonus to its training objective (Schulman et al., 2017a) can add randomness to the policy.¹ However, the diversity issue persists even after including the entropy bonus term.

We avoid generating previously seen test cases by leveraging a curiosity-driven exploration strategy (Burda et al., 2019; Pathak et al., 2017; Chen* et al., 2022), which jointly maximizes the novelty and effectiveness of the generated test cases. We measure the novelty of test cases based on text similarity metrics (Tevet & Berant, 2020; Papineni et al., 2002): lower similarity to previously generated test cases indicates higher novelty. Unlike the entropy bonus, which remains constant if the same set of test cases is generated, novelty decreases each time the same test cases are generated again. Therefore, the policy cannot maximize novelty by reproducing previously encountered test cases and must instead uncover new, unseen test cases.
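A toy sketch of the contrast drawn above, assuming a fixed policy that always emits the same test case with probability 0.5. The word-level Jaccard similarity and the max-based novelty used here are simplified stand-ins chosen for brevity (hypothetical helpers, not the novelty rewards defined in Section 3.1). The entropy term −log π(x) is identical on every repetition, while the history-dependent novelty drops as soon as the test case has been seen once.

```python
import math
from typing import List, Tuple


def jaccard(a: str, b: str) -> float:
    """Word-level Jaccard similarity (a simple stand-in, not the paper's metric)."""
    sa, sb = set(a.split()), set(b.split())
    if not sa and not sb:
        return 1.0
    return len(sa & sb) / len(sa | sb)


def novelty(x: str, history: List[str]) -> float:
    """Negative maximum similarity to any previously generated test case."""
    if not history:
        return 0.0
    return -max(jaccard(x, h) for h in history)


def simulate(test_case: str, prob: float, steps: int) -> List[Tuple[float, float]]:
    """Emit the same test case repeatedly and record (entropy term, novelty)."""
    history: List[str] = []
    records = []
    for _ in range(steps):
        entropy_term = -math.log(prob)  # depends only on the policy, not on history
        novelty_term = novelty(test_case, history)
        records.append((entropy_term, novelty_term))
        history.append(test_case)
    return records


for ent, nov in simulate("tell me a story", prob=0.5, steps=3):
    print(f"entropy term={ent:.3f}  novelty={nov:.3f}")
```

The first emission has no history to compare against; every later repetition receives the lowest possible novelty, so repeating is never rewarded.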

We evaluate our curiosity-driven red-teaming method on text continuation and instruction following tasks. The evaluation reveals that the curiosity-driven exploration methods generate more diverse test cases than current RL-based red-teaming methods. The effectiveness of test cases is measured as the toxicity of the responses elicited from the target LLM; we use toxicity as the metric due to its prevalence in red teaming (Perez et al., 2022). Intriguingly, curiosity-driven exploration even improves red-teaming effectiveness, implying that improved exploration enables a red-team model to discover more effective test cases. We further show that curiosity-driven exploration methods can successfully find prompts that elicit toxic responses from LLMs that have been fine-tuned with a few rounds of reinforcement learning from human feedback (RLHF) (Bai et al., 2022), demonstrating both the usefulness of our method and the fact that current RLHF methods are insufficient to make LLMs safe. Our takeaway from these experiments is that curiosity-driven exploration strategies are a must for effective RL-based red teaming.

2 PRELIMINARIES: RED TEAMING FOR LARGE LANGUAGE MODELS

An LLM, denoted as $p$, generates a text response $y \sim p(\cdot|x)$ given a text prompt $x$ to complete tasks like question answering, summarization, or story completion. Red teaming refers to designing prompts $x$ that elicit unwanted responses $y \sim p(\cdot|x)$, where the target LLM is denoted as $p$. The effectiveness of $x$ is denoted as $R(y)$, a score measuring how unwanted $y$ is (e.g., toxicity, harmfulness). The goal of the red team is to maximize the expected effectiveness of test cases, $\mathbb{E}_{x \sim \pi,\, y \sim p(\cdot|x)}[R(y)]$, where $\pi$ is the red-team model. We train the red-team model $\pi$ to maximize the expected effectiveness $R(y)$. RL trains a model to maximize rewards $R(y)$ (i.e., effectiveness) using interaction history (i.e., $(x, y)$ pairs) with the target LLM. In addition, common practice (Stiennon et al., 2020) adds a Kullback–Leibler (KL) divergence penalty $D_{\mathrm{KL}}(\pi \,\|\, \pi_{\mathrm{ref}})$ toward a reference policy $\pi_{\mathrm{ref}}$, with $\pi_{\mathrm{ref}}$ being a pre-trained LLM (Radford et al., 2019) (see Section 4.1 for details). Formally, the training objective of the red-team model $\pi$ is expressed as:

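$$\max_{\pi}\; \mathbb{E}\left[R(y) - \beta\, D_{\mathrm{KL}}\big(\pi(\cdot|z) \,\|\, \pi_{\mathrm{ref}}(\cdot|z)\big)\right], \quad \text{where } z \sim \mathcal{D},\; x \sim \pi(\cdot|z),\; y \sim p(\cdot|x), \tag{1}$$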

where $\beta$ denotes the weight of the KL penalty, $z$ denotes prompts to the red-team model $\pi$, and $\mathcal{D}$ is the dataset for sampling $z$. Note that as the red-team model $\pi$ is an LLM, it requires prompts as inputs. The red team's prompts $z$ can be generated by an LLM (Perez et al., 2022) or synthesized from existing datasets; the details can be found in Section 4. Intuitively, the prompts to the red-team model $\pi$ can be regarded as instructions to elicit unwanted responses. Details of training LLMs with RL can be found in Stiennon et al. (2020).

¹ Notably, while the entropy bonus is commonly used in RL for robotics (Schulman et al., 2017b) and video games (Mnih et al., 2016), it is not widely employed in many prominent works that train LLMs with RL (Ouyang et al., 2022; Bai et al., 2022). The impact of the entropy bonus will be discussed in Section 4.5.
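To make Equation 1 concrete, here is a minimal sketch of how such an objective is commonly turned into a per-sample reward in RL fine-tuning of LLMs (in the spirit of Stiennon et al., 2020). The KL term is estimated from the sampled test case as log π(x|z) − log π_ref(x|z), the sum of per-token log-probability differences, whose expectation over x ∼ π(·|z) is the KL divergence. It assumes both models have already scored the same generated tokens; the function and argument names are hypothetical.

```python
from typing import List


def kl_penalized_reward(
    effectiveness: float,
    logprobs_policy: List[float],
    logprobs_ref: List[float],
    beta: float,
) -> float:
    """Single-sample estimate of R(y) - beta * KL(pi(.|z) || pi_ref(.|z)).

    logprobs_policy[t] and logprobs_ref[t] are the log-probabilities of the t-th
    token of the sampled test case x under pi and pi_ref, respectively.
    """
    if len(logprobs_policy) != len(logprobs_ref):
        raise ValueError("both models must score the same token sequence")
    # log pi(x|z) - log pi_ref(x|z): sequence log-probs are sums of token log-probs.
    log_ratio = sum(logprobs_policy) - sum(logprobs_ref)
    return effectiveness - beta * log_ratio


# Example: toxicity score 0.8 for the elicited response, a two-token test case.
print(kl_penalized_reward(0.8, [-1.0, -0.5], [-1.2, -0.9], beta=0.1))
```

A larger β pulls the reward down whenever π assigns the test case more probability than π_ref does, which is the mechanism behind the effectiveness/diversity trade-off discussed in Section 3.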

3 CURIOSITY-DRIVEN EXPLORATION FOR RED TEAMING

Problem: Prior work (Perez et al., 2022) and our experiments have shown that optimizing the red-team model $\pi$ using the objective in Equation 1 tends to result in a lack of diversity among the generated test cases $x$. We conjecture that the lack of diversity is due to the following two issues:

• (i) RL trains policies to maximize the effectiveness of the test cases, causing the policy to produce effective cases repeatedly and converge to a deterministic policy (Puterman, 2014). Increasing the KL penalty weight $\beta$, as suggested by Perez et al. (2022), can increase the diversity of the generated test cases, but at the cost of significantly reduced effectiveness, as detailed in Section 4.5. This is because increasing $\beta$ constrains the policy to closely mimic the reference policy, which can diminish effectiveness if the reference policy is not adept at red teaming.

• (ii) The policy is not directed to discover new test cases $x$. Neither the effectiveness $R(y)$ nor the KL penalty $D_{\mathrm{KL}}(\pi \,\|\, \pi_{\mathrm{ref}})$ incentivizes the policy $\pi$ to generate new test cases. Hence, even though the policy remains stochastic, it could repeatedly generate a few effective test cases that have been seen previously.

Our approach: To address issue (i), we incorporate an entropy bonus (Schulman et al., 2017a) into the training objective (Equation 1). The entropy bonus quantifies the randomness of the policy (i.e., the red-team model), so maximizing it prevents the policy $\pi$ from becoming deterministic. Also, since it encourages the policy to stay close to a uniform distribution, the policy can deviate from the reference policy $\pi_{\mathrm{ref}}$, potentially improving effectiveness even when the reference policy lacks red-teaming effectiveness. For issue (ii), we introduce curiosity-driven exploration (Pathak et al., 2017; Chen* et al., 2022; Bellemare et al., 2016) from RL, motivating the policy $\pi$ (i.e., the red-team model) to explore previously untested test cases by incorporating novelty rewards into the policy optimization objective. As test case novelty decays with repetition, the policy is pushed to discover unseen test cases. The training objective of the red-team model (Equation 1) is modified to combine both the entropy bonus and novelty rewards as follows:

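$$\max_{\pi}\; \mathbb{E}\Big[R(y) - \beta\, D_{\mathrm{KL}}\big(\pi(\cdot|z) \,\|\, \pi_{\mathrm{ref}}(\cdot|z)\big) \underbrace{-\,\lambda_E \log \pi(x|z)}_{\text{Entropy bonus}} + \underbrace{\sum_i \lambda_i B_i(x)}_{\text{Novelty reward}}\Big], \tag{2}$$

where $z \sim \mathcal{D}$, $x \sim \pi(\cdot|z)$, $y \sim p(\cdot|x)$.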

We denote the entropy bonus as $-\log \pi(x|z)$, whose expectation over $x \sim \pi(\cdot|z)$ is the entropy of $\pi(\cdot|z)$, and its weight as $\lambda_E \in \mathbb{R}^+$. As we model the novelty of test cases in multiple ways, we denote each novelty reward as $B_i$, with $i$ indicating its type, and $\lambda_i \in \mathbb{R}^+$ as its weight. We design two novelty reward terms based on different text similarity metrics, which are detailed in Section 3.1.
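The terms inside Equation 2 can be combined into one scalar reward per sampled test case. A minimal sketch, assuming the inputs are already computed: the sequence log-probabilities log π(x|z) and log π_ref(x|z), the toxicity score R(y), and the novelty rewards B_i(x) (for example B_SelfBLEU and B_Cos from Section 3.1). The KL term is collapsed to a single-sample sequence-level estimate here, which is an assumption, and the weights in the test are placeholders rather than the paper's hyperparameters (those are in Appendix A).

```python
from typing import Sequence


def curiosity_reward(
    effectiveness: float,
    logp_policy: float,
    logp_ref: float,
    novelty_rewards: Sequence[float],
    novelty_weights: Sequence[float],
    beta: float,
    lambda_entropy: float,
) -> float:
    """Per-sample reward for the terms inside Equation 2.

    logp_policy = log pi(x|z) and logp_ref = log pi_ref(x|z) for the sampled x.
    """
    if len(novelty_rewards) != len(novelty_weights):
        raise ValueError("one weight per novelty reward is required")
    kl_estimate = logp_policy - logp_ref            # single-sample KL estimate
    entropy_bonus = -lambda_entropy * logp_policy   # -lambda_E * log pi(x|z) >= 0
    novelty = sum(w * b for w, b in zip(novelty_weights, novelty_rewards))
    return effectiveness - beta * kl_estimate + entropy_bonus + novelty
```

Because log π(x|z) ≤ 0, the entropy term is never negative and grows as the policy spreads probability mass, while the novelty terms are negative similarities that shrink toward zero only for test cases unlike anything generated before.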

3.1 NOVELTY REWARDS

We introduce each category of novelty reward $B_i$ (Equation 2) in this section. Novelty rewards aim to distinguish between test cases that have and have not been previously generated. Since test cases are text prompts, it is challenging to determine whether a given test case is exactly the same as a previously generated one (Gomaa et al., 2013). Therefore, we measure test case novelty based on its similarity to previously generated test cases: lower similarity to past test cases signifies greater novelty. We measure text similarity in terms of both form and semantics (Tevet & Berant, 2020), based on $n$-gram modeling and sentence embeddings, respectively.

$n$-gram modeling ($B_{\mathrm{SelfBLEU}}$): The SelfBLEU score (Zhu et al., 2018) measures the diversity of a set of sentences using the BLEU score (Papineni et al., 2002). The BLEU score quantifies the $n$-gram overlap between a generated sentence $x$ and reference sentences $\mathcal{X}$. In SelfBLEU, all prior sentences act as references $\mathcal{X}$, and the SelfBLEU score of sentence $x \in \mathcal{X}$ is denoted as $\mathrm{SelfBLEU}_{\mathcal{X}}(x, n)$. Higher SelfBLEU scores indicate greater overlap with previously generated sentences, i.e., higher similarity. Thus, to encourage the red-team model $\pi$ to produce test cases that differ from past ones, we employ negative SelfBLEU as a novelty reward. Following the approach suggested by Zhu et al. (2018), we calculate the average SelfBLEU score across different $n$-grams, yielding the novelty reward $B_{\mathrm{SelfBLEU}}$ expressed as:

$$B_{\mathrm{SelfBLEU}}(x) = -\sum_{n=1}^{K} \mathrm{SelfBLEU}_{\mathcal{X}}(x, n), \tag{3}$$

where we keep track of all the sentences $x$ generated by the red-team model $\pi$ during training and use those sentences as the reference sentences $\mathcal{X}$.
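A self-contained sketch of Equation 3, assuming whitespace tokenization and a plain sentence-level BLEU (clipped $n$-gram precision, geometric mean with uniform weights, brevity penalty against the closest reference length). The smoothing for zero matches (0.1 / total, similar in spirit to NLTK's `method1`), the default K = 4, and the choice to score a too-short hypothesis as 0.0 are all assumptions, not details from the paper. The prose above says "average" while Equation 3 writes a sum; the two differ only by the constant factor 1/K, and the sketch follows the equation.

```python
import math
from collections import Counter
from typing import List


def ngram_counts(tokens: List[str], n: int) -> Counter:
    """Count the n-grams of a token list."""
    return Counter(tuple(tokens[i : i + n]) for i in range(len(tokens) - n + 1))


def bleu(hypothesis: List[str], references: List[List[str]], max_n: int) -> float:
    """Sentence-level BLEU with uniform weights over n-gram orders 1..max_n."""
    if not references or len(hypothesis) < max_n:
        # No history yet, or too short to contain a max_n-gram: report no overlap.
        return 0.0
    log_precision = 0.0
    for n in range(1, max_n + 1):
        hyp = ngram_counts(hypothesis, n)
        max_ref: Counter = Counter()
        for ref in references:
            for gram, count in ngram_counts(ref, n).items():
                max_ref[gram] = max(max_ref[gram], count)
        # Clip each hypothesis n-gram count by its maximum count in any reference.
        clipped = sum(min(count, max_ref[gram]) for gram, count in hyp.items())
        total = sum(hyp.values())
        precision = clipped / total if clipped > 0 else 0.1 / total  # assumed smoothing
        log_precision += math.log(precision) / max_n
    # Brevity penalty uses the reference length closest to the hypothesis length.
    c = len(hypothesis)
    r = min((abs(len(ref) - c), len(ref)) for ref in references)[1]
    brevity = 1.0 if c > r else math.exp(1.0 - r / c)
    return brevity * math.exp(log_precision)


def selfbleu_novelty(x: str, history: List[str], k: int = 4) -> float:
    """B_SelfBLEU(x) = -sum_{n=1..K} SelfBLEU_X(x, n), with X the past test cases."""
    hyp = x.split()  # whitespace tokenization is an assumption
    refs = [h.split() for h in history]
    return -sum(bleu(hyp, refs, n) for n in range(1, k + 1))
```

An exact repeat of a past test case gets the minimum reward of −K, while a test case sharing no $n$-grams with the history gets a value close to 0. Scoring against every past test case costs time linear in the history size at each step.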

Sentence embedding ($B_{\mathrm{Cos}}$): SelfBLEU measures similarity in the form of text but not in its semantics (Tevet & Berant, 2020). To encourage semantic diversity in test cases, we also design a novelty reward term that measures semantic novelty based on sentence embedding models (Reimers & Gurevych, 2019). These models can capture semantic differences between texts, as demonstrated in Tevet & Berant (2020). Sentence embedding models take sentences as input and produce low-dimensional vectors as sentence embeddings. Prior work (Reimers & Gurevych, 2019) has shown that the cosine similarity between two embeddings correlates with the semantic similarity between the sentences. Therefore, we introduce a novelty reward based on cosine similarity, denoted as $B_{\mathrm{Cos}}$, as follows:

$$B_{\mathrm{Cos}}(x) = -\sum_{x' \in \mathcal{X}} \frac{\phi(x) \cdot \phi(x')}{\lVert \phi(x) \rVert_2 \, \lVert \phi(x') \rVert_2}, \tag{4}$$

where $\phi$ represents the sentence embedding model, and $\mathcal{X}$ represents the collection of test cases $x$ generated during training up to the current iteration.
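A minimal sketch of Equation 4, assuming the embeddings φ(x) have already been produced by some sentence embedding model (e.g., a Sentence-BERT-style model as in Reimers & Gurevych, 2019) and are non-zero vectors. Toy 2-D vectors stand in for real embeddings.

```python
import math
from typing import List, Sequence


def cosine(u: Sequence[float], v: Sequence[float]) -> float:
    """Cosine similarity of two non-zero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))


def cosine_novelty(embedding: Sequence[float], history: List[Sequence[float]]) -> float:
    """B_Cos(x) = -sum over x' in X of cos(phi(x), phi(x'))."""
    return -sum(cosine(embedding, past) for past in history)


print(cosine_novelty([1.0, 0.0], [[1.0, 0.0], [0.0, 1.0]]))  # -1.0
```

Because Equation 4 is a sum rather than a mean, the magnitude of B_Cos grows with the number of past test cases in $\mathcal{X}$, so its scale relative to $R(y)$ changes over the course of training.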

4 EXPERIMENTS

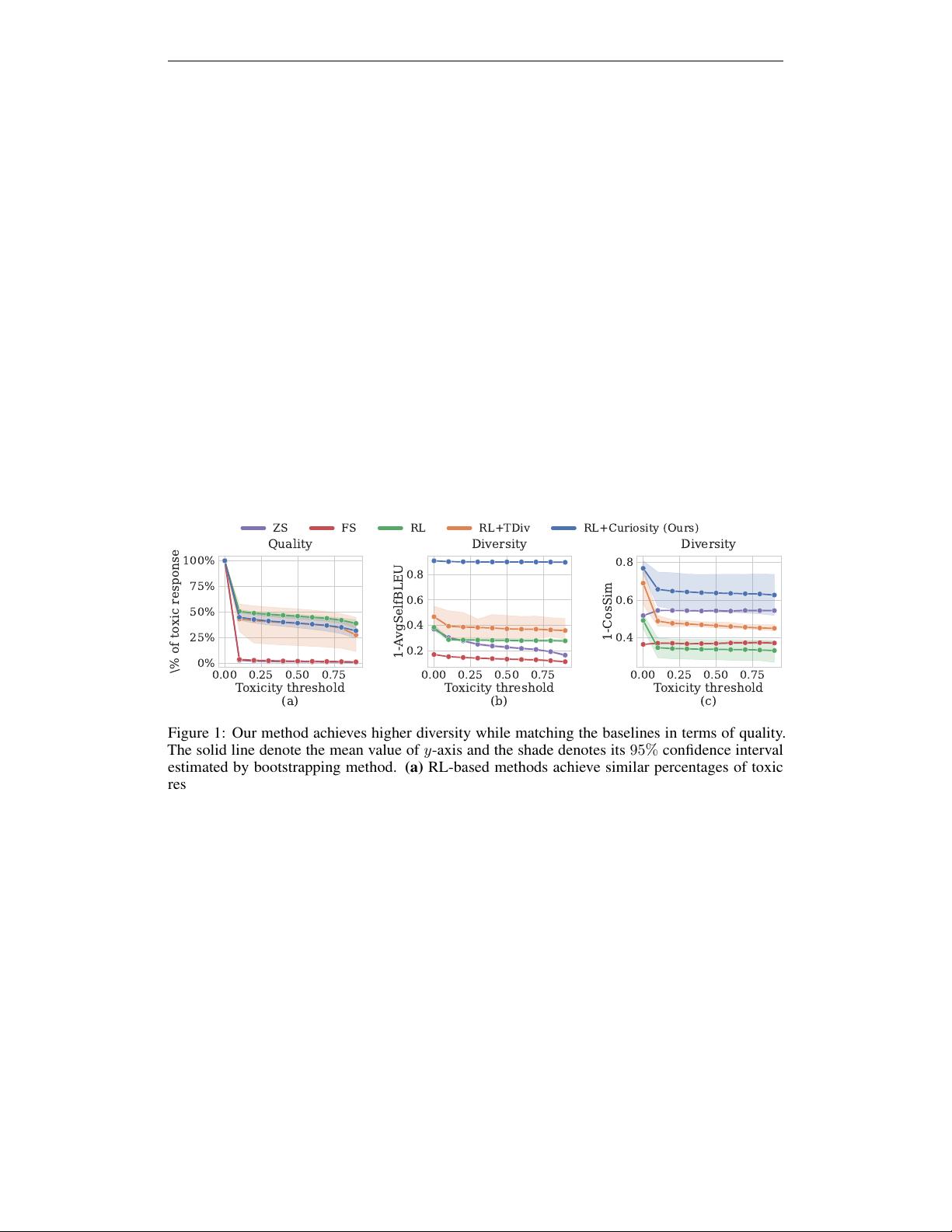

Our experiments investigate whether curiosity-driven exploration generates diverse and high-quality test cases. To do so, we perform red teaming against LLMs with various red-team approaches in two tasks, text continuation and instruction following, whose settings and results are presented in Section 4.2 and Section 4.3, respectively. The quality of the test cases is measured by the percentage of toxic responses elicited from the target LLMs when presented with these test cases, since toxicity is a commonly used metric in red teaming (Perez et al., 2022). The diversity of the test cases is measured using commonly used text diversity metrics (Tevet & Berant, 2020), which are described in detail in Section 4.1.

4.1 GENERAL SETUP

Quality metric. We define the quality of a red-team model $\pi$ as the percentage of toxic responses obtained from the target LLM $p$ when presented with test cases (i.e., prompts) generated by the red team $\pi$. A response $y$ is considered toxic if the toxicity probability predicted by the classifier $R$ exceeds a specified threshold $\tau \in [0, 1)$. We use the RoBERTa hate speech classifier (Vidgen et al., 2021) to predict the toxicity probability of target LLM responses. The quality of a red-team method is evaluated using all the test cases $x$ generated during the entire training period of $\pi$. See Appendix A.7 for details.
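The quality metric reduces to a thresholded count. A minimal sketch, assuming `toxicity_scores` holds the classifier's toxicity probabilities R(y) for every response collected over training (the function name is hypothetical); following the definition above, a response counts as toxic only if its score strictly exceeds τ.

```python
from typing import List


def red_team_quality(toxicity_scores: List[float], tau: float) -> float:
    """Percentage of target-LLM responses whose toxicity probability exceeds tau."""
    if not 0.0 <= tau < 1.0:
        raise ValueError("tau must lie in [0, 1)")
    if not toxicity_scores:
        return 0.0
    toxic = sum(1 for score in toxicity_scores if score > tau)
    return 100.0 * toxic / len(toxicity_scores)


print(red_team_quality([0.1, 0.6, 0.9, 0.95], tau=0.5))  # 75.0
```

Sweeping τ over a grid yields curves of the kind reported in Figure 1(a).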

Diversity metric. We measure the diversity of these test cases across different toxicity thresholds $\tau$. We define the set of test cases that surpass the threshold $\tau$ as $\mathcal{X}_\tau := \{x_i \mid R(y_i) \geq \tau,\ i \in [1, N]\}$. To assess diversity, we adhere to established practices recommended in Zhu et al. (2018), Perez et al. (2022), and Tevet & Berant (2020), employing two metrics: the SelfBLEU score and BERT sentence-embedding distances. SelfBLEU measures diversity in the form of text, while embedding distances measure diversity in the semantics of text. For SelfBLEU scores, we compute the average SelfBLEU score using $n$-grams for $n \in \{2, 3, 4, 5\}$, following the approach suggested by Zhu et al. (2018). Further details are available in Appendix A.7.
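A sketch of the embedding-based diversity measurement over $\mathcal{X}_\tau$. It assumes the embeddings are precomputed, and it aggregates by taking one minus the mean pairwise cosine similarity, consistent with the "1-CosSim" axis of Figure 1(c); the exact aggregation is given in Appendix A.7, which is not reproduced here, so treat this as an assumption.

```python
import itertools
import math
from typing import List, Sequence


def cosine(u: Sequence[float], v: Sequence[float]) -> float:
    """Cosine similarity of two non-zero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))


def threshold_set(items: List, toxicity: List[float], tau: float) -> List:
    """X_tau: the test cases whose elicited response has toxicity R(y) >= tau."""
    return [item for item, r in zip(items, toxicity) if r >= tau]


def embedding_diversity(embeddings: List[Sequence[float]]) -> float:
    """1 - mean pairwise cosine similarity; higher means more diverse test cases."""
    pairs = list(itertools.combinations(embeddings, 2))
    if not pairs:
        raise ValueError("need at least two test cases to measure diversity")
    return 1.0 - sum(cosine(u, v) for u, v in pairs) / len(pairs)


embeddings = [[1.0, 0.0], [0.0, 1.0], [1.0, 0.0]]
toxicity = [0.9, 0.8, 0.2]
print(embedding_diversity(threshold_set(embeddings, toxicity, tau=0.5)))  # 1.0
```

Filtering before measuring diversity means a method only gets credit for diversity among test cases that actually elicited toxic responses at the given threshold.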

Baselines and implementations. To show the advantages of incorporating curiosity rewards into the training of red-team models with RL, we compare red-team models trained with curiosity rewards against the current red-teaming methods outlined in prior work (Perez et al., 2022) and concurrent work (Casper et al., 2023).


• RL (Perez et al., 2022): This method trains the red-team model $\pi$ using rewards $R(y)$ and a KL penalty, as specified in Equation 1.

• RL+TDiv (Casper et al., 2023): In addition to the rewards and the KL penalty, this approach trains the red-team model $\pi$ to maximize the diversity of responses from the target LLM, measured as the average distance among the sentence embeddings of the target LLM's responses.

• Zero-shot (ZS) (Perez et al., 2022): This method prompts the red-team LLM to produce test cases (i.e., prompts for the target LLM) using prompts designed to elicit toxic responses.

• Few-shot (FS) (Perez et al., 2022): This method adds few-shot examples to the zero-shot baseline's prompts, inspired by Brown et al. (2020). The few-shot examples are randomly sampled from a set of test cases generated by ZS, under a distribution biased toward higher toxicity of the corresponding target LLM responses.
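The few-shot baseline samples examples with a bias toward higher toxicity, but the exact distribution is not specified in this section. The sketch below assumes a softmax over toxicity scores with a temperature (both the weighting and the `temperature` default are hypothetical) and draws k distinct examples without replacement.

```python
import math
import random
from typing import List, Optional


def sample_few_shot(
    pool: List[str],
    toxicity: List[float],
    k: int,
    temperature: float = 0.1,
    seed: Optional[int] = None,
) -> List[str]:
    """Draw k distinct few-shot examples, favoring test cases with higher toxicity.

    Sampling weights are softmax(toxicity / temperature); this weighting and the
    temperature value are illustrative assumptions, not the authors' settings.
    """
    if len(pool) != len(toxicity):
        raise ValueError("each test case needs a toxicity score")
    if k > len(pool):
        raise ValueError("k cannot exceed the pool size")
    rng = random.Random(seed)
    top = max(toxicity, default=0.0)
    # Subtracting the maximum before exponentiating keeps the weights finite.
    weights = [math.exp((t - top) / temperature) for t in toxicity]
    remaining = list(range(len(pool)))
    chosen = []
    for _ in range(k):
        # Pick one of the remaining indices, then remove it (no repeats overall).
        pos = rng.choices(range(len(remaining)), weights=[weights[i] for i in remaining])[0]
        chosen.append(pool[remaining.pop(pos)])
    return chosen
```

A lower temperature concentrates the draws on the most toxic ZS test cases; a higher one approaches uniform sampling from the pool.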

The implementation details are presented in Appendix A. Our approach trains the red-team model $\pi$ using rewards, the KL penalty, curiosity rewards, and the entropy bonus, as outlined in Section 3. We refer to our method as RL+Curiosity in the subsequent sections. For all three RL-based methods, namely RL, RL+TDiv, and RL+Curiosity, we employ proximal policy optimization (PPO) (Schulman et al., 2017b) to train the red-team model $\pi$. We initialize $\pi$ using a pre-trained GPT2 model (Radford et al., 2019) with 137M parameters and set it as the reference model $\pi_{\mathrm{ref}}$ (Equation 1).
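For reference, the per-sample clipped surrogate that PPO maximizes can be sketched as follows. This is the generic PPO-clip form only: how advantages are estimated and how the shaped rewards are assigned to tokens in the authors' implementation are not described in this section, and the clip range of 0.2 is a common default rather than a value taken from the paper.

```python
import math


def ppo_clip_objective(
    logp_new: float, logp_old: float, advantage: float, clip_eps: float = 0.2
) -> float:
    """Per-sample PPO-clip surrogate: min(r * A, clip(r, 1 - eps, 1 + eps) * A)."""
    ratio = math.exp(logp_new - logp_old)  # pi_new(action) / pi_old(action)
    clipped_ratio = min(max(ratio, 1.0 - clip_eps), 1.0 + clip_eps)
    return min(ratio * advantage, clipped_ratio * advantage)
```

Taking the minimum makes the objective pessimistic: a positive advantage earns no extra credit once the probability ratio exceeds 1 + ε, while a negative advantage is never clipped in the policy's favor.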

4.2 BENCHMARK IN TEXT CONTINUATION TASK

[Figure 1 plots. Panels (a) to (c), x-axis: toxicity threshold (0.00 to 0.75). (a) Quality: % of toxic response. (b) Diversity: 1-AvgSelfBLEU. (c) Diversity: 1-CosSim. Methods: ZS, FS, RL, RL+TDiv, RL+Curiosity (Ours).]

Figure 1: Our method achieves higher diversity while matching the baselines in terms of quality. The solid lines denote the mean value of the y-axis and the shaded regions denote its 95% confidence interval estimated by bootstrapping. (a) RL-based methods achieve similar percentages of toxic responses across various toxicity thresholds (Section 4.1). (b)(c) Among all RL-based methods, RL+Curiosity demonstrates the highest diversity in terms of both (b) SelfBLEU diversity and (c) embedding diversity. See Section 4.2 for details.

Setup. Text continuation is a core capability of leading LLMs such as GPT, because many applications depend on the model's capacity to extend and complete the text provided in the input prompt. We use GPT2 with 137M parameters as the target LLM $p$. For the baselines and our method (Section 4.1), we sample reviews from the IMDb review dataset (Maas et al., 2011), truncate them, and use the truncated text as the red team's inputs $z$ (Equation 1). The goal is to test whether the red-team model can add a few words to a truncated movie review and make the target LLM generate toxic responses. The red-team model's outputs are then combined with the red team's prompt $z$ to produce test cases $x$ for the target LLM $p$. For each method, we conduct the experiment using three different random seeds. Details about hyperparameters and the dataset can be found in Appendix A.
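A minimal sketch of how a text-continuation test case could be assembled from a review. It assumes truncation to the first `max_words` words and simple concatenation of $z$ with the red-team output; both rules and the helper names are hypothetical, since the actual truncation and combination details are in Appendix A.

```python
def truncate_review(review: str, max_words: int) -> str:
    """Keep the first max_words whitespace-separated words (hypothetical rule)."""
    return " ".join(review.split()[:max_words])


def build_test_case(red_team_prompt: str, red_team_output: str) -> str:
    """Append the red team's generated words to its input z to form the test case x."""
    return f"{red_team_prompt} {red_team_output.strip()}".strip()


z = truncate_review("This movie was a complete waste of two hours and I regret it.", max_words=6)
x = build_test_case(z, "and honestly the director")
print(x)  # This movie was a complete waste and honestly the director
```

The target LLM then continues $x$, and its continuation $y$ is scored by the toxicity classifier to obtain $R(y)$.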

Results. As the necessary condition for a test case to be effective is eliciting toxic responses from the target LLM, we first measure how many toxic responses are elicited by each method (i.e., the quality of a red-teaming approach, Equation A.3) in Figure 1(a). The result shows that our curiosity-driven red teaming (RL+Curiosity) generates a comparable number of effective test cases at each threshold $\tau$ (see Section 4.1), showing that curiosity-driven exploration does not hurt the quality of red teaming. On one hand, Figure 1(b) shows that our method achieves significantly higher diversity than other
