A Brief Introduction to Boosting

Robert E. Schapire

AT&T Labs, Shannon Laboratory

180 Park Avenue, Room A279, Florham Park, NJ 07932, USA

www. research. att .com/~schapire

schapire@research. att. com

Abstract

Boosting is a general method for improving the

accuracy of any given learning algorithm. This

short paper introduces the boosting algorithm

AdaBoost, and explains the underlying theory

of boosting, including an explanation of why

boosting often does not suffer from overfitting.

Some examples of recent applications of boost-

ing are also described.

Background

Boosting is a general method which attempts to "boost"

the accuracy of any given learning algorithm. Boosting

has its roots in a theoretical framework for studying ma-

chine learning called the "PAC" learning model, due to

Valiant [37]; see Kearns and Vazirani [24] for a good in-

troduction to this model. Kearns and Valiant [22, 23]

were the first to pose the question of whether a "weak"

learning algorithm which performs just slightly bet-

ter than random guessing in the PAC model can be

"boosted" into an arbitrarily accurate "strong" learning

algorithm. Schapire [30] came up with the first prov-

able polynomial-time boosting algorithm in 1989. A

year later, Freund [14] developed a much more efficient

boosting algorithm which, although optimal in a certain

sense, nevertheless suffered from certain practical draw-

backs. The first experiments with these early boosting

algorithms were carried out by Drucker, Schapire and

Simard [13] on an OCR task.

AdaBoost

The AdaBoost algorithm, introduced in 1995 by Freund

and Schapire [18], solved many of the practical difficul-

ties of the earlier boosting algorithms, and is the fo-

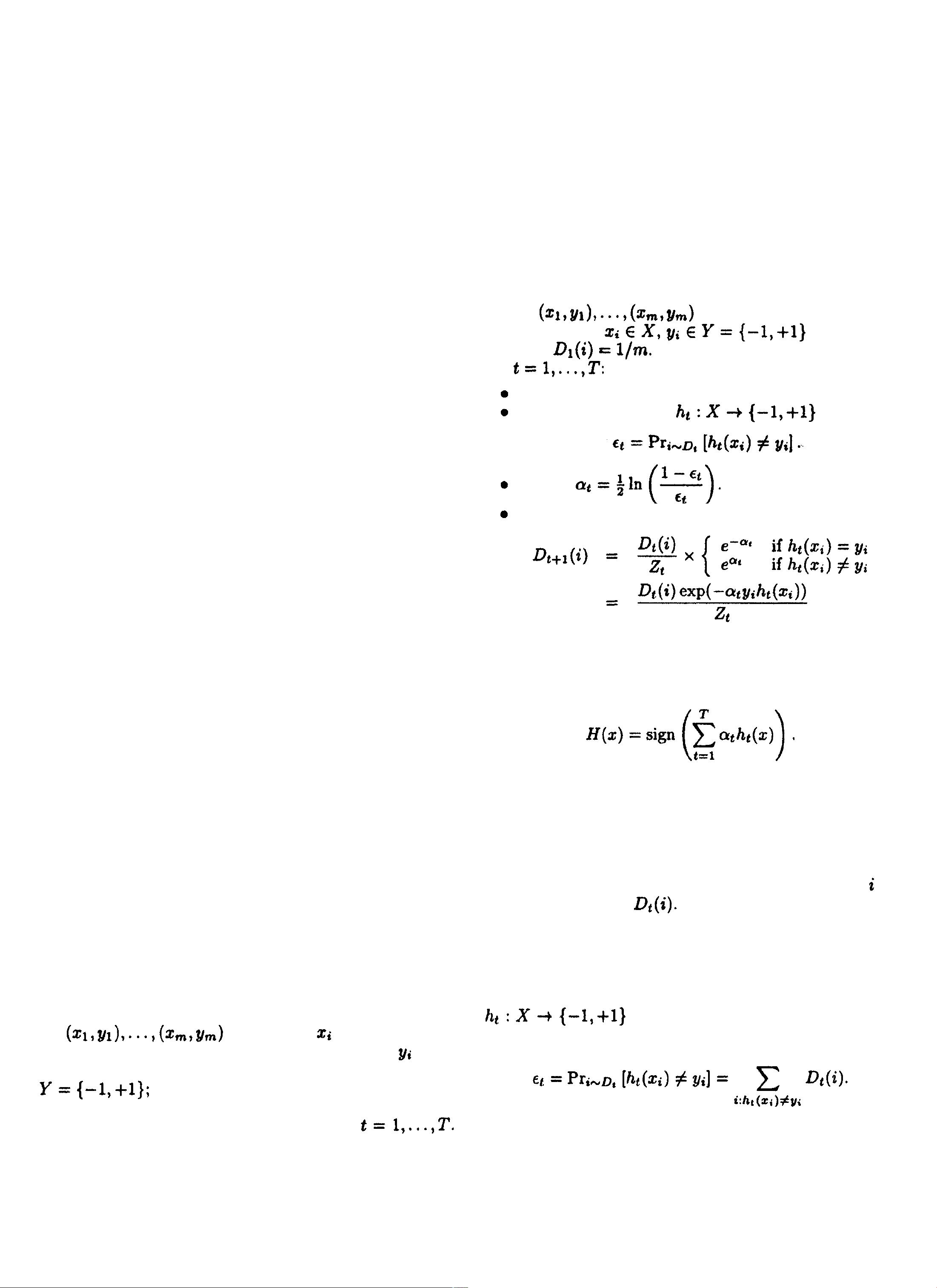

cus of this paper. Pseudocode for AdaBoost is given

in Fig. 1. The algorithm takes as input a training

set where each belongs to some

domain or instance space X, and each label is in

some label set Y. For most of this paper, we assume

later, we discuss extensions to the multi-

class case. AdaBoost calls a given weak or base learning

algorithm repeatedly in a series of rounds

Given:

where

Initialize

For

Train weak learner using distribution D

t

.

Get weak hypothesis with error

Choose

Update:

where Z

t

is a normalization factor (chosen so that

D

t

+1 will be a distribution).

Output the final hypothesis:

Figure 1: The boosting algorithm AdaBoost.

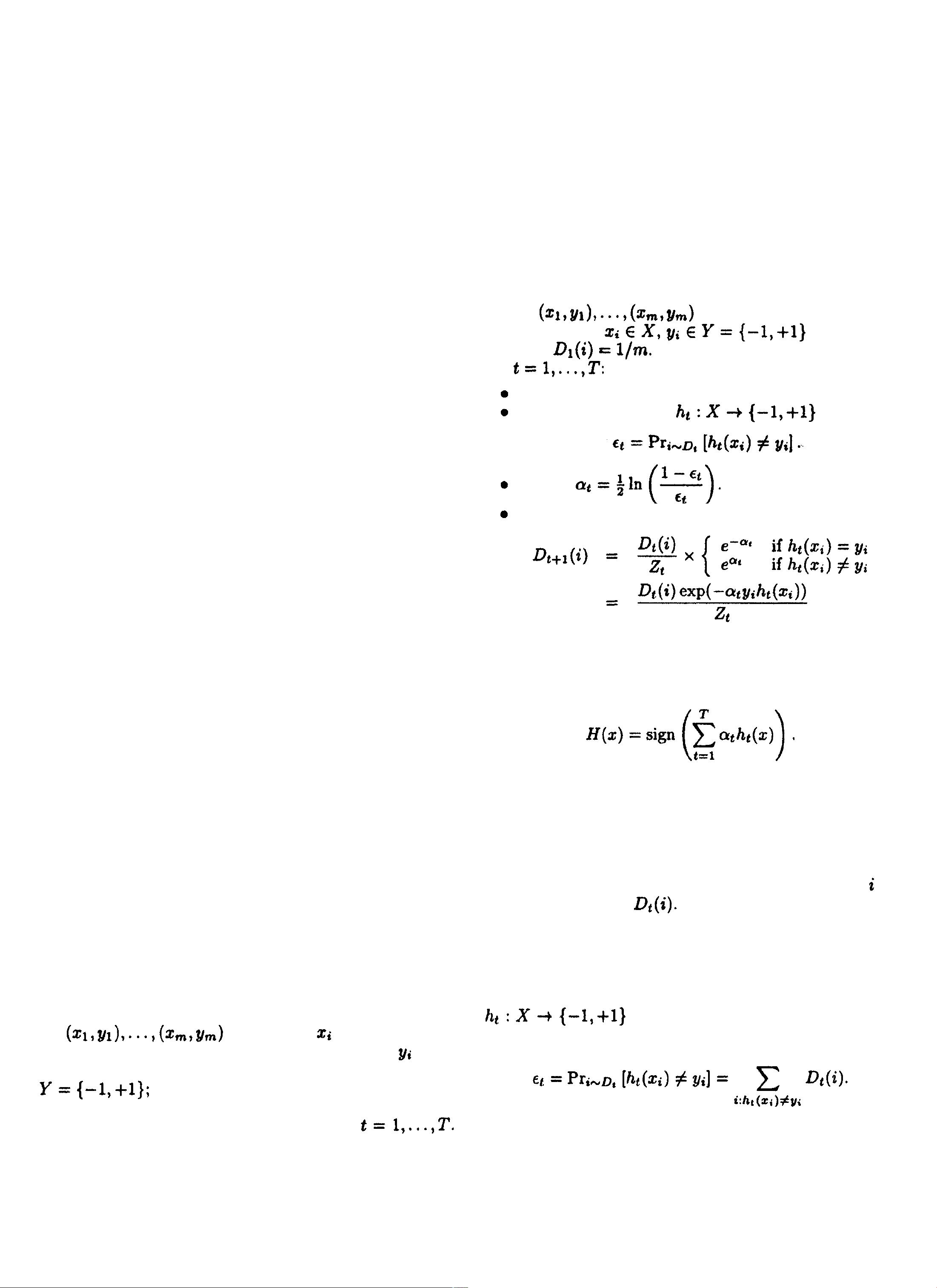

One of the main ideas of the algorithm is to maintain

a distribution or set of weights over the training set.

The weight of this distribution on training example on

round t is denoted Initially, all weights are set

equally, but on each round, the weights of incorrectly

classified examples are increased so that the weak learner

is forced to focus on the hard examples in the training

set.

The weak learner's job is to find a weak hypothesis

appropriate for the distribution D

t

.

The goodness of a weak hypothesis is measured by its

error

Notice that the error is measured with respect to the

SCHAPIRE 1401