
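Summary: This paper proposes a new method to improve the data-selection efficiency of Direct Preference Optimization (DPO), reducing labeling errors and saving annotation cost when aligning large language models with human preferences. It studies sentence embeddings extracted from the model and builds contrastive training sets from response pairs of high and low similarity. The approach enables effective alignment training and reduces the impact of noisy labels, improving the model's safety and helpfulness. Experiments show that selecting the most dissimilar responses is more efficient than selecting random or the most similar responses and can substantially reduce annotation effort. Selecting cluster centroids also shows good results. Intended audience: researchers in natural language processing, machine learning, and large language model alignment. Use cases and goals: optimizing existing large language models so that their responses better match human expectations and contain less inappropriate content. Suggested usage: apply the proposed technique of picking the least similar response pairs to improve the efficiency of DPO-based LLM alignment, saving substantial labeling time while maintaining or even improving quality.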


REAL: Response Embedding-based Alignment for LLMs

Honggen Zhang¹, Igor Molybog¹, June Zhang¹*, Xufeng Zhao²

¹University of Hawaii at Manoa, ²University of Hamburg

Email: {honggen,molybog,zjz}@hawaii.edu, {xufeng.zhao}@uni-hamburg.de

Abstract

Aligning large language models (LLMs) to human preferences is a crucial step in building helpful and safe AI tools, which usually involves training on supervised datasets. Popular algorithms such as Direct Preference Optimization rely on pairs of AI-generated responses ranked according to human feedback. The labeling process is the most labor-intensive and costly part of the alignment pipeline, and improving its efficiency would have a meaningful impact on AI development. We propose a strategy for sampling a high-quality training dataset that focuses on acquiring the most informative response pairs for labeling out of a set of AI-generated responses. Experimental results on synthetic HH-RLHF benchmarks indicate that choosing dissimilar response pairs enhances the direct alignment of LLMs while reducing inherited labeling errors. We also applied our method to the real-world dataset SHP2, selecting optimal pairs from multiple responses. The model aligned on dissimilar response pairs obtained the best win rate on the dialogue task. Our findings suggest that focusing on less similar pairs can improve the efficiency of LLM alignment, saving up to 65% of annotators' work. The code of the work can be found at https://github.com/honggen-zhang/REAL-Alignment

1 Introduction

Large language models (LLMs), empowered by enormous pre-training datasets from the Internet, can generate answers to various questions and solutions to challenging tasks. However, they might generate undesirable content that is useless or even harmful to humans (Wang et al., 2024). Additional training steps are required to optimize LLMs and thus align their responses with human preferences. For that purpose, Christiano et al. (2017) proposed reinforcement learning from human feedback (RLHF). It consists of estimating the human preference reward model (RM) from response preference data (Ouyang et al., 2022) and steering LLM parameters using a popular reinforcement learning algorithm, proximal policy optimization (PPO). RLHF requires extensive computational resources and is prone to training instabilities.

* Corresponding Author

Recently, the direct alignment from preference (DAP) approach, which does not explicitly learn the reward model, has emerged as an alternative to RLHF (Zhao et al., 2022; Song et al., 2023; Zhao et al., 2023; Xu et al., 2023; Rafailov et al., 2024). Direct Preference Optimization (DPO) (Rafailov et al., 2024; Azar et al., 2023) is a milestone DAP method. It formulates the problem of learning human preferences as fine-tuning an LLM with an implicit reward model using a training set $D = \{x_i, y_i^+, y_i^-\}_{i=1}^N$, where $x_i$ is the $i$-th prompt and $y_i^+, y_i^-$ are the corresponding preferred and non-preferred responses.

DPO requires an explicit preference signal from an annotator within the dataset. Some DPO variations, such as Contrastive Post-training (Xu et al., 2023) and PRO (Song et al., 2023), were proposed to augment $D$ using AI but might generate low-quality pairs. A rough estimate of the labeling cost is \$0.1 to \$1 per prompt; with 100,000 to 1,000,000 prompts, it would cost approximately \$100,000 to augment the dataset. Other DPO variations (Guo et al., 2024; Yu et al., 2024) actively choose better samples using additional annotators at the cost of increased computation.
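To make the order of magnitude concrete, the sketch below multiplies the per-prompt price by the dataset size, using only the ranges quoted in the paragraph above. The function name `labeling_cost_usd` and the choice to keep prices in integer cents are illustrative assumptions, not part of the paper.

```python
def labeling_cost_usd(num_prompts: int, cents_per_prompt: int) -> float:
    """Total labeling cost in dollars; prices are integer cents to avoid float drift."""
    return num_prompts * cents_per_prompt / 100


# Ranges quoted in the text: 0.1 to 1 dollar per prompt, 100,000 to 1,000,000 prompts.
low = labeling_cost_usd(100_000, 10)       # fewest prompts, cheapest labels
mid_a = labeling_cost_usd(100_000, 100)    # 100,000 prompts at 1 dollar each
mid_b = labeling_cost_usd(1_000_000, 10)   # 1,000,000 prompts at 0.1 dollar each
high = labeling_cost_usd(1_000_000, 100)   # most prompts, priciest labels

print(low, mid_a, mid_b, high)
```

Under these assumptions, the quoted figure of roughly 100,000 dollars matches the two middle cases, while the extremes of the quoted ranges span 10,000 to 1,000,000 dollars.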

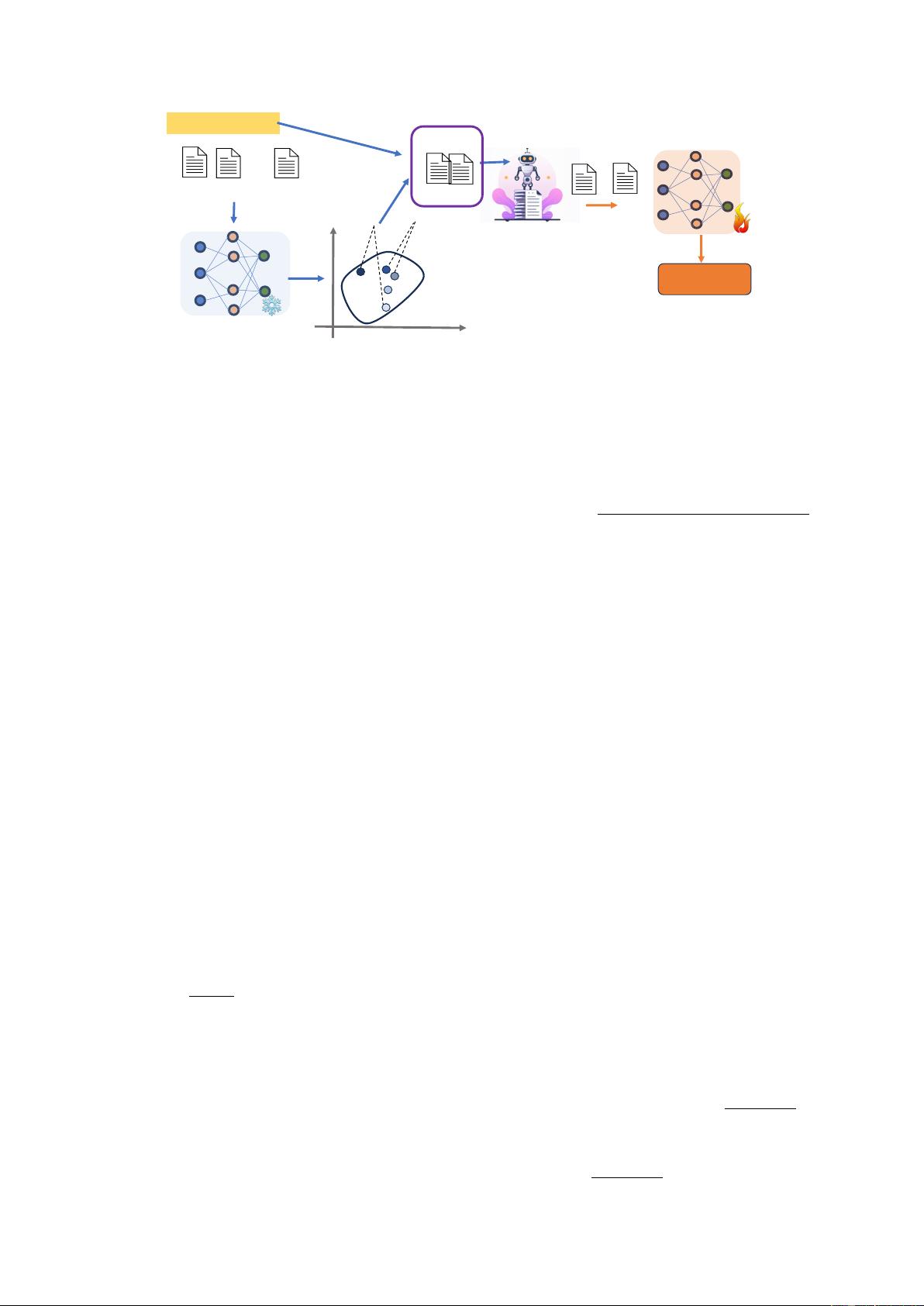

arXiv:2409.17169v1 [cs.CL] 17 Sep 2024

In this paper, we propose a novel method for enhancing DPO learning with efficient data selection (see Fig. 1). We should only train DPO on the most informative subset of samples in $D$. Inspired by work in contrastive learning (Chen et al., 2020), we connect the usefulness of a response pair $(y_i, y_j)$ to the cosine similarity between their representations in the embedding space. Sampling similar pairs (large cosine similarity) poses a harder learning problem, which is usually encouraged in contrastive learning. However, such pairs are more prone to erroneous labeling (i.e., non-preferred responses being labeled as preferred and vice versa), which might dampen this effect (Zhang et al., 2024; Chuang et al., 2020). On the other hand, dissimilar pairs can be preferred for DPO owing to smaller noise in the labels. We select similar and dissimilar response pairs from the HH-RLHF dataset and show that dissimilar pairs empirically form the best dataset for alignment when compared on several metrics to randomly selected or similar pairs.
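The selection idea described above can be sketched as follows: score each response pair by the cosine similarity of its two embeddings, then keep the least similar (or, for comparison, the most similar) fraction for labeling. This is a minimal illustration with toy 2-D vectors standing in for embeddings from the base model. The names `cosine_similarity` and `select_pairs` and the `fraction` parameter are hypothetical and are not taken from the authors' code.

```python
import math


def cosine_similarity(u, v):
    """Cosine of the angle between two non-zero vectors of equal length."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))


def select_pairs(pair_embeddings, fraction, mode="dissimilar"):
    """Return indices of the pairs to label, ranked by embedding similarity.

    pair_embeddings: list of (embedding_a, embedding_b), one pair per prompt.
    mode: "dissimilar" keeps the lowest-similarity pairs, "similar" the highest.
    """
    sims = [cosine_similarity(a, b) for a, b in pair_embeddings]
    order = sorted(range(len(sims)), key=lambda i: sims[i], reverse=(mode == "similar"))
    keep = max(1, int(fraction * len(sims)))
    return order[:keep]


# Toy embeddings (assumed values, for illustration only).
pairs = [
    ([1.0, 0.0], [0.9, 0.1]),    # nearly parallel: high similarity
    ([1.0, 0.0], [0.0, 1.0]),    # orthogonal: similarity 0
    ([1.0, 0.0], [-1.0, 0.0]),   # opposite: similarity -1
    ([1.0, 1.0], [1.0, 0.5]),    # moderately similar
]

easy = select_pairs(pairs, fraction=0.5)                   # most dissimilar half
hard = select_pairs(pairs, fraction=0.5, mode="similar")   # most similar half
print(easy, hard)
```

Ranking the whole dataset once keeps the selection offline: no model updates or extra annotators are needed to decide which pairs to label.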

We extended this methodology to a real-world dataset, SHP2, which contains multiple responses per prompt. We want to extract a high-quality pair $(y_i, y_j)$ to label from $\{y_1, y_2, \cdots, y_k\}$. In addition to considering the most similar and most dissimilar pairs of responses, we implemented an approach that splits the responses into two clusters and selects the centroids of the clusters as the training pair. Although more difficult to implement, this method demonstrates the best performance according to our experimental results, with dissimilar pairs a close second, ahead of similar and random pairs.

1. We highlight the overlooked importance of sentence embeddings in LLM training: model learning can be enhanced by investigating the sentence embeddings and integrating this information into the fine-tuning process.

2. We introduce efficient response pair selection strategies to acquire high-quality data, maintaining an offline dataset throughout training to conserve sampling resources.

3. Our experiments demonstrate that pairs dissimilar in the embedding space align better with human preferences than random or similar pairs, owing to reduced errors in the labels.
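For prompts with several candidate responses, the pair-selection strategies mentioned in this section (most similar, most dissimilar, and two-cluster centroids) can be sketched as below. This is an illustration under stated assumptions rather than the authors' implementation. The text does not specify the clustering algorithm, so a small k-means-style loop with cosine assignment is assumed here. The clusters are seeded with the most dissimilar pair, and each centroid is represented by the actual response closest to it. All function names are hypothetical.

```python
import math
from itertools import combinations


def cos(u, v):
    """Cosine similarity; returns 0.0 if either vector has zero norm."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm > 0 else 0.0


def extreme_pair(embs, most_similar=False):
    """Indices (i, j) of the most dissimilar (default) or most similar pair."""
    pick = max if most_similar else min
    return pick(combinations(range(len(embs)), 2),
                key=lambda p: cos(embs[p[0]], embs[p[1]]))


def centroid_pair(embs, iters=10):
    """Split responses into two clusters; return the response nearest each centroid."""
    i, j = extreme_pair(embs)                    # assumed seeding strategy
    centers = [list(embs[i]), list(embs[j])]
    groups = [[], []]
    for _ in range(iters):
        groups = [[], []]
        for idx, e in enumerate(embs):
            groups[0 if cos(e, centers[0]) >= cos(e, centers[1]) else 1].append(idx)
        for c in (0, 1):
            if groups[c]:                        # keep the old center if a cluster is empty
                centers[c] = [sum(embs[k][d] for k in groups[c]) / len(groups[c])
                              for d in range(len(embs[0]))]
    if not groups[0] or not groups[1]:
        return (i, j)                            # degenerate split: fall back to the seeds
    return tuple(max(groups[c], key=lambda k: cos(embs[k], centers[c])) for c in (0, 1))


# Toy 2-D embeddings for k = 6 responses to one prompt (assumed values).
responses = [[1.0, 0.0], [0.9, 0.2], [0.8, 0.1], [0.0, 1.0], [0.1, 0.9], [0.2, 1.0]]
print(extreme_pair(responses), centroid_pair(responses))
```

In this toy example the most dissimilar pair consists of the two outermost responses, whereas the centroid-based choice returns one typical response from each cluster.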

2 Related work

Direct Alignment of Language Models: Despite RLHF's effectiveness in aligning language models (LMs) with human values, its complexity and resource demands have spurred the exploration of alternatives. Sequence Likelihood Calibration (SLiC) (Zhao et al., 2022) is a DAP method that directly encourages LLMs to output the positive response and penalizes the negative response. Chain of Hindsight (CoH) (Liu et al., 2023) is equivalent to learning a conditional policy. DPO (Rafailov et al., 2024) directly optimizes LMs using a preference-based loss function to enhance training stability in comparison to traditional RLHF. DPO with Dynamic $\beta$ (Wu et al., 2024) introduced a framework that dynamically calibrates $\beta$ at the batch level, informed by the underlying preference data. Azar et al. (2023) identified that DPO is susceptible to overfitting and introduced Identity Preference Optimization (IPO) as a solution to this issue. Zeng et al. (2024) observed that the generative diversity of LLMs deteriorated and that the KL divergence grew faster for less preferred responses than for preferred responses, and proposed token-level DPO (TDPO) to enhance the regulation of KL divergence.

Data Quality in Direct Alignment: Due to the large amount of data needed for direct alignment, PRO (Song et al., 2023) proposed preference ranking with listwise preference datasets, which could directly implement alignment in the fine-tuning process. However, fine-tuning was constrained by the limitations of available data and the imperfections inherent in human-generated data. Contrastive Post-training (Xu et al., 2023) builds more datasets using other LLMs to advance DPO training, without accounting for labeling mistakes. Recently, similar to active learning, which selects samples based on the current model (Settles, 2009), Guo et al. (2024) and Morimura et al. (2024) use AI as an annotator to monitor the quantity of data pairs at each training step, but this is expensive. Yu et al. (2024) use LLMs to design a refinement function that estimates the quality of positive and negative responses. LESS (Xia et al., 2024) is an optimizer-aware and practically efficient algorithm that estimates data influences and performs low-rank gradient similarity search for instruction data selection. However, this data selection is online and therefore needs more computation.

3 Background

Figure 1: Diagram of our data selection for DPO alignment. We extract the embeddings of the responses from the base model and select a subset of dissimilar (easy) pairs to label. The easy pairs are then used to directly align the LLMs.

LLM alignment refers to the process of training a language model to assign a higher probability to a response $y$ with a higher human preference reward $R_{xy}$. An estimate $r$ of the reward is used in practice. It is imperative to ensure that, for a given prompt $x$, the estimated reward $r(x, y)$ is close to the true reward $R_{xy}$ for each response. The problem of learning the best estimator for the reward function can be formulated as

$$r = \arg\min_{r'} \mathbb{E}_{(x, y, R_{xy}) \sim \mathcal{R}} \left[ \left( r'(x, y) - R_{xy} \right)^2 \right] \tag{1}$$

where $\mathcal{R}$ is the dataset consisting of the prompt $x$, responses $y$, and true reward values $R_{xy}$.
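As a small numerical illustration of Eq. 1, the sketch below evaluates the empirical squared error of a few candidate reward functions on a toy dataset and picks the minimizer. Restricting the search to a finite set of hand-written candidates is an assumption made for illustration only. The dataset, the candidate functions, and the names `mean_squared_error` and `best_estimator` are all hypothetical.

```python
def mean_squared_error(reward_fn, dataset):
    """Empirical version of the expectation in Eq. 1 for one candidate r'."""
    return sum((reward_fn(x, y) - R) ** 2 for x, y, R in dataset) / len(dataset)


def best_estimator(candidates, dataset):
    """Name of the candidate reward function with the smallest squared error."""
    return min(candidates, key=lambda name: mean_squared_error(candidates[name], dataset))


# Toy dataset of (prompt, response, true reward) triples (assumed values).
dataset = [
    ("q1", "helpful answer", 1.0),
    ("q1", "rude answer", 0.0),
    ("q2", "helpful reply", 1.0),
    ("q2", "bad", 0.0),
]

# Hypothetical candidate estimators r'(x, y).
candidates = {
    "constant": lambda x, y: 0.5,
    "keyword": lambda x, y: 1.0 if "helpful" in y else 0.0,
    "length": lambda x, y: len(y) / 20,
}

print(best_estimator(candidates, dataset))
```

In practice the minimization runs over a parametrized model class rather than a handful of fixed functions, but the criterion being minimized is the same.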

3.1 LLM Alignment to Human Feedback

Human feedback rarely comes in the form of true reward samples $(y, R_{xy})$. Ranking or pairwise comparison of responses is more common. In the pairwise comparison case, there are two sample responses $(y^+, y^-)$ that correspond to a single prompt $x$. Human subjects provide a preference to label them as $R_{xy^+} > R_{xy^-} \mid x$, where $y^+$ and $y^-$ are the preferred and non-preferred responses, respectively. This method of labeling does not explicitly provide the true reward signal. However, alignment can still be performed by applying the Reinforcement Learning from Human Feedback (RLHF) algorithm using binary human preference data. The Bradley-Terry model (Bradley and Terry, 1952) defines the log-odds

$$\log \frac{p^*}{1 - p^*} = r(x, y^+) - r(x, y^-) \tag{2}$$

where $p^*$ is the preference probability. Modeling the margin of the response pair can also be viewed from the perspective of estimating the true reward in Eq. 1. Assume the ground-truth values of $r(x, y^+)$ and $r(x, y^-)$ are 1 and 0, respectively. The difference between the estimated reward and the truth is $\mathbb{E}_{q(y^+)}[r(x, y^+) - 1] - \mathbb{E}_{q(y^-)}[r(x, y^-) - 0] = \mathbb{E}[r(x, y^+) - r(x, y^-) - 1]$. We therefore have the preference model

$$p(y^+ > y^- \mid x) = \frac{\exp(r(x, y^+))}{\exp(r(x, y^+)) + \exp(r(x, y^-))}, \tag{3}$$

where $r(\cdot)$ is the reward model. In practice, we learn the parametrized reward model from the human-labeled preference data. The reward model learned from human preference responses can be used to score LLM-generated content. It provides feedback to the language model $\pi_\theta$, which is trained by maximizing the objective function

$$\max_{\pi_\theta} \mathbb{E}_{x \sim D,\, y \sim \pi_\theta(y \mid x)} [r(x, y)] - \beta D_{\mathrm{KL}}\left[\pi_\theta(y \mid x) \,\|\, \pi_{\mathrm{ref}}(y \mid x)\right] \tag{4}$$

where $\pi_{\mathrm{ref}}$ is the reference model after supervised fine-tuning.
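The two formulas of this subsection, the preference probability (Eq. 3) and the KL-regularized objective (Eq. 4), can be checked numerically with a short sketch. It assumes a single prompt with a small finite set of responses, so that policies are plain probability lists. The reward values, the example policies, and the function names are illustrative assumptions.

```python
import math


def preference_probability(r_pos, r_neg):
    """Eq. 3: probability that y+ is preferred over y-, given the two rewards."""
    return math.exp(r_pos) / (math.exp(r_pos) + math.exp(r_neg))


def kl_divergence(p, q):
    """KL(p || q) for two discrete distributions over the same responses."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


def regularized_objective(policy, reference, rewards, beta):
    """Eq. 4 for one prompt: expected reward minus beta times KL to the reference."""
    expected_reward = sum(p * r for p, r in zip(policy, rewards))
    return expected_reward - beta * kl_divergence(policy, reference)


# Eq. 2 check: the log-odds of Eq. 3 recover the reward margin 2.0 - 0.5 = 1.5.
p = preference_probability(2.0, 0.5)
log_odds = math.log(p / (1 - p))

# Three candidate responses with assumed rewards and a uniform reference policy.
rewards = [1.0, 0.0, 0.2]
reference = [1 / 3, 1 / 3, 1 / 3]
greedy = [0.98, 0.01, 0.01]      # almost all mass on the best response
moderate = [0.6, 0.15, 0.25]     # stays closer to the reference

for beta in (0.1, 1.0):
    print(beta,
          regularized_objective(greedy, reference, rewards, beta),
          regularized_objective(moderate, reference, rewards, beta))
```

With a small $\beta$ the near-greedy policy scores higher, while a large $\beta$ favors the policy that stays closer to the reference, which is the trade-off the KL term is meant to control.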

3.2 Direct Language Model Alignment

RLHF is expensive, and we cannot guarantee that the reward model will be optimal. Recently, direct alignment from preferences methods have emerged to replace RLHF when aligning LLMs. Direct Preference Optimization (DPO) (Rafailov et al., 2024) optimizes the policy $\pi$ directly as an alternative to the reward model $r$. Given the static dataset $D = \{x_i, y_i^+, y_i^-\}_{i=1}^N$ sampled from the human preference distribution $p$, the objective becomes:

$$\mathcal{L}_{\mathrm{DPO}} = -\mathbb{E}_{x, y^+, y^-} \log \sigma \left( \beta \log \frac{\pi_\theta(y^+ \mid x)}{\pi_{\mathrm{ref}}(y^+ \mid x)} - \beta \log \frac{\pi_\theta(y^- \mid x)}{\pi_{\mathrm{ref}}(y^- \mid x)} \right) \tag{5}$$
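A minimal sketch of the DPO loss in Eq. 5 is given below, assuming that the sequence log-probabilities $\log \pi_\theta(y \mid x)$ and $\log \pi_{\mathrm{ref}}(y \mid x)$ have already been computed for each response. The tuple layout, the default `beta=0.1`, and the function names are assumptions made for illustration. A real training loop would compute these quantities with an automatic-differentiation framework.

```python
import math


def log_sigmoid(z):
    """Numerically stable log(sigmoid(z))."""
    if z >= 0:
        return -math.log1p(math.exp(-z))
    return z - math.log1p(math.exp(z))


def dpo_loss(batch, beta=0.1):
    """Average DPO loss (Eq. 5) over a batch.

    Each item holds four sequence log-probabilities:
    (policy_pos, policy_neg, ref_pos, ref_neg).
    """
    total = 0.0
    for policy_pos, policy_neg, ref_pos, ref_neg in batch:
        # beta * log(pi_theta / pi_ref) for y+ minus the same term for y-.
        margin = beta * (policy_pos - ref_pos) - beta * (policy_neg - ref_neg)
        total += -log_sigmoid(margin)
    return total / len(batch)


# Policy identical to the reference: the margin is 0 and the loss equals log 2.
baseline = dpo_loss([(-5.0, -7.0, -5.0, -7.0)])

# Policy that raises log p(y+) and lowers log p(y-) relative to the reference.
improved = dpo_loss([(-4.0, -9.0, -5.0, -7.0)])

print(baseline, improved)
```

The loss falls below $\log 2$ as soon as the policy raises the preferred response relative to the reference more than it raises the non-preferred one.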
