Published as a conference paper at ICLR 2023

trained model using modest resources, typically a few thousand data samples and a few hours of

computation. Post-training approaches are particularly interesting for massive models, for which

full model training or even finetuning can be expensive. We focus on this scenario here.

Post-training Quantization. Most post-training methods have focused on vision models. Usually,

accurate methods operate by quantizing either individual layers, or small blocks of consecutive

layers. (See Section 3 for more details.) The AdaRound method (Nagel et al., 2020) computes a

data-dependent rounding by annealing a penalty term, which encourages weights to move towards

grid points corresponding to quantization levels. BitSplit (Wang et al., 2020) constructs quantized

values bit-by-bit using a squared error objective on the residual error, while AdaQuant (Hubara et al.,

2021) performs direct optimization based on straight-through estimates. BRECQ (Li et al., 2021)

introduces Fisher information into the objective, and optimizes layers within a single residual block

jointly. Finally, Optimal Brain Quantization (OBQ) (Frantar et al., 2022) generalizes the classic

Optimal Brain Surgeon (OBS) second-order weight pruning framework (Hassibi et al., 1993; Singh

& Alistarh, 2020; Frantar et al., 2021) to apply to quantization. OBQ quantizes weights one-by-one,

in order of quantization error, always adjusting the remaining weights. While these approaches can

produce good results for models up to ≈ 100 million parameters in a few GPU hours, scaling them

to networks orders of magnitude larger is challenging.

Large-model Quantization. With the recent open-source releases of language models like

BLOOM (Laurençon et al., 2022) or OPT-175B (Zhang et al., 2022), researchers have started to

develop affordable methods for compressing such giant networks for inference. While all existing

works, namely ZeroQuant (Yao et al., 2022), LLM.int8() (Dettmers et al., 2022), and nuQmm (Park

et al., 2022), carefully select quantization granularity, e.g., vector-wise, they ultimately just round

weights to the nearest (RTN) quantization level, in order to maintain acceptable runtimes for very

large models. ZeroQuant further proposes layer-wise knowledge distillation, similar to AdaQuant,

but the largest model it can apply this approach to has only 1.3 billion parameters. At this scale,

ZeroQuant already takes ≈ 3 hours of compute; GPTQ quantizes models 100× larger in ≈ 4 hours.

LLM.int8() observes that activation outliers in a few feature dimensions break the quantization

of larger models, and proposes to fix this problem by keeping those dimensions in higher precision.

Lastly, nuQmm develops efficient GPU kernels for a specific binary-coding based quantization

scheme.
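As a rough illustration of the round-to-nearest (RTN) baseline with vector-wise granularity mentioned above, the following sketch quantizes each row of a weight matrix onto its own uniform grid. The asymmetric min-max grid, the 4-bit default, and the name `rtn_quantize` are assumptions made for illustration only; they are not taken from ZeroQuant, LLM.int8(), or nuQmm.

```python
import numpy as np


def rtn_quantize(W, bits=4):
    """Round every weight to the nearest level of a per-row uniform grid.

    Assumption: the grid of each row spans [row min, row max] with
    2**bits evenly spaced levels (asymmetric min-max quantization).
    """
    levels = 2 ** bits - 1
    w_min = W.min(axis=1, keepdims=True)
    w_max = W.max(axis=1, keepdims=True)
    # Guard against constant rows, which would give a zero step size.
    scale = np.maximum(w_max - w_min, 1e-12) / levels
    # Integer codes in {0, ..., levels}; rounding is the entire "algorithm".
    codes = np.clip(np.round((W - w_min) / scale), 0, levels)
    # Dequantized weights, i.e. the grid points actually used at inference.
    W_hat = codes * scale + w_min
    return W_hat, codes.astype(np.int64)


rng = np.random.default_rng(0)
W = rng.normal(size=(8, 16))
W_hat, codes = rtn_quantize(W, bits=4)
max_err = np.abs(W - W_hat).max()
```

RTN treats every weight independently and never looks at calibration data, which is why it stays cheap even for very large models.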

Relative to this line of work, we show that a significantly more complex and accurate quantizer can

be implemented efficiently at large model scale. Specifically, GPTQ more than doubles the amount

of compression relative to these prior techniques, at similar accuracy.

3 BACKGROUND

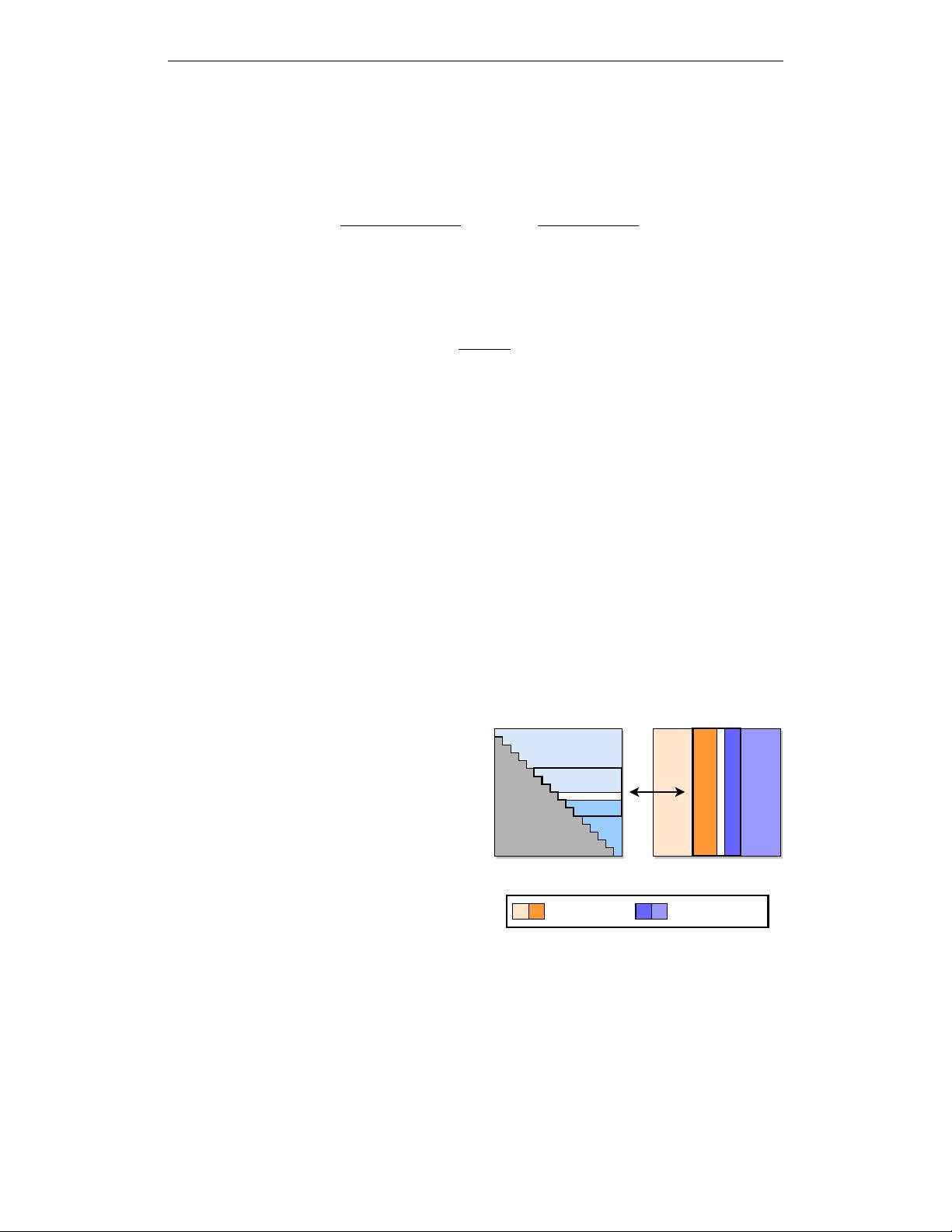

Layer-Wise Quantization. At a high level, our method follows the structure of state-of-the-art

post-training quantization methods (Nagel et al., 2020; Wang et al., 2020; Hubara et al., 2021;

Frantar et al., 2022), by performing quantization layer-by-layer, solving a corresponding reconstruction

problem for each layer. Concretely, let $W_\ell$ be the weights corresponding to a linear layer $\ell$ and let

$X_\ell$ denote the layer input corresponding to a small set of $m$ data points running through the network.

Then, the objective is to find a matrix of quantized weights $\widehat{W}$ which minimizes the squared error,

relative to the full precision layer output. Formally, this can be restated as

$$\operatorname{argmin}_{\widehat{W}} \; \| W X - \widehat{W} X \|_2^2. \tag{1}$$

Further, similar to (Nagel et al., 2020; Li et al., 2021; Frantar et al., 2022), we assume that the

quantization grid for $\widehat{W}$ is fixed before the process, and that individual weights can move freely as

in (Hubara et al., 2021; Frantar et al., 2022).
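To make the objective in Equation (1) concrete, the sketch below evaluates it for a small random layer whose weights are simply rounded to a grid fixed in advance. The shapes, the 16-level grid, and the helper names `layer_error` and `round_to_grid` are hypothetical choices for illustration; plain rounding stands in for a real quantizer and is not the method developed in this paper.

```python
import numpy as np


def layer_error(W, W_hat, X):
    """Sum of squared differences between the full-precision and the
    quantized layer outputs, i.e. the quantity minimized in Eq. (1)."""
    return float(np.sum((W @ X - W_hat @ X) ** 2))


def round_to_grid(W, grid):
    """Map each weight to its nearest point on a fixed 1-D grid."""
    idx = np.abs(W[..., None] - grid).argmin(axis=-1)
    return grid[idx]


rng = np.random.default_rng(0)
d_row, d_col, m = 4, 8, 32            # assumed toy dimensions
W = rng.normal(size=(d_row, d_col))   # layer weights
X = rng.normal(size=(d_col, m))       # layer inputs for m calibration points

# The grid is chosen once, before any weight is quantized.
grid = np.linspace(W.min(), W.max(), 16)
W_hat = round_to_grid(W, grid)

total = layer_error(W, W_hat, X)
# The same error, accumulated one row of W at a time.
per_row = [layer_error(W[i:i + 1], W_hat[i:i + 1], X) for i in range(d_row)]
```

Here `total` and `sum(per_row)` agree up to floating-point error, because each output row depends on only one row of the weight matrix.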

Optimal Brain Quantization. Our approach builds on the recently proposed Optimal Brain

Quantization (OBQ) method (Frantar et al., 2022) for solving the layer-wise quantization problem

defined above. We make a series of major modifications to it, which allow it to scale to large

language models and provide a computational speedup of more than three orders of magnitude. To aid

understanding, we first briefly summarize the original OBQ method.

The OBQ method starts from the observation that Equation (1) can be written as the sum of the

squared errors, over each row of W. Then, OBQ handles each row w independently, quantizing one

weight at a time while always updating all not-yet-quantized weights, in order to compensate for

the error incurred by quantizing a single weight. Since the corresponding objective is a quadratic,
