A Multi-Sensor Fusion System for Moving Object Detection and Tracking in Urban Driving Environments

Hyunggi Cho, Young-Woo Seo, B.V.K. Vijaya Kumar, and Ragunathan (Raj) Rajkumar

Abstract— A self-driving car, to be deployed in real-world driving environments, must be capable of reliably detecting and effectively tracking nearby moving objects. This paper presents our new moving object detection and tracking system, which extends and improves our earlier system used for the 2007 DARPA Urban Challenge. We revised our earlier motion and observation models for active sensors (i.e., radars and LIDARs) and introduced a vision sensor. In the new system, the vision module detects pedestrians, bicyclists, and vehicles to generate corresponding vision targets. Our system uses this visual recognition information to improve the tracking model selection, data association, and movement classification of our earlier system. Through tests using data logs from actual driving, we demonstrate the improvement and performance gain of our new tracking system.

I. INTRODUCTION

The 2005 DARPA Grand Challenge and the 2007 Urban Challenge offered researchers unique opportunities to demonstrate the state of the art in autonomous driving technologies. These events were milestones in that they provided opportunities to reevaluate the status of the relevant technologies and to regain public attention for self-driving car development. Since then, the related technologies have advanced drastically. Industry and academia have reported notable achievements, including autonomous driving of more than 300,000 miles in everyday driving contexts [19], intercontinental autonomous driving [3], a self-driving car with a stock-car appearance [20], and many more. Such developments and demonstrations have increased the likelihood of self-driving cars in the near future.

After the Urban Challenge, Carnegie Mellon University started a new effort to advance the findings of the Urban Challenge and developed a new autonomous vehicle [20] to fill the gap between experimental robotic vehicles and consumer cars. Among these efforts, this paper details our perception system, particularly a new moving object detection and tracking system. The Urban Challenge was held in a simplified urban driving setup where vehicle interactions were restricted and no pedestrians, bicyclists, motorcyclists, traffic lights, or GPS dropouts appeared. However, as shown in Figure 1, to be deployed in real-world driving environments, autonomous vehicles must be capable of safely interacting with nearby pedestrians and vehicles. The prerequisite for safe interaction with nearby objects is reliable detection and tracking of moving objects.

H. Cho, B.V.K. Vijaya Kumar, and Ragunathan (Raj) Rajkumar are with the ECE Department and Young-Woo Seo is with the Robotics Institute, Carnegie Mellon University, 5000 Forbes Ave., Pittsburgh, PA 15213, USA. {hyunggic, kumar, raj}@ece.cmu.edu, young-woo.seo@ri.cmu.edu

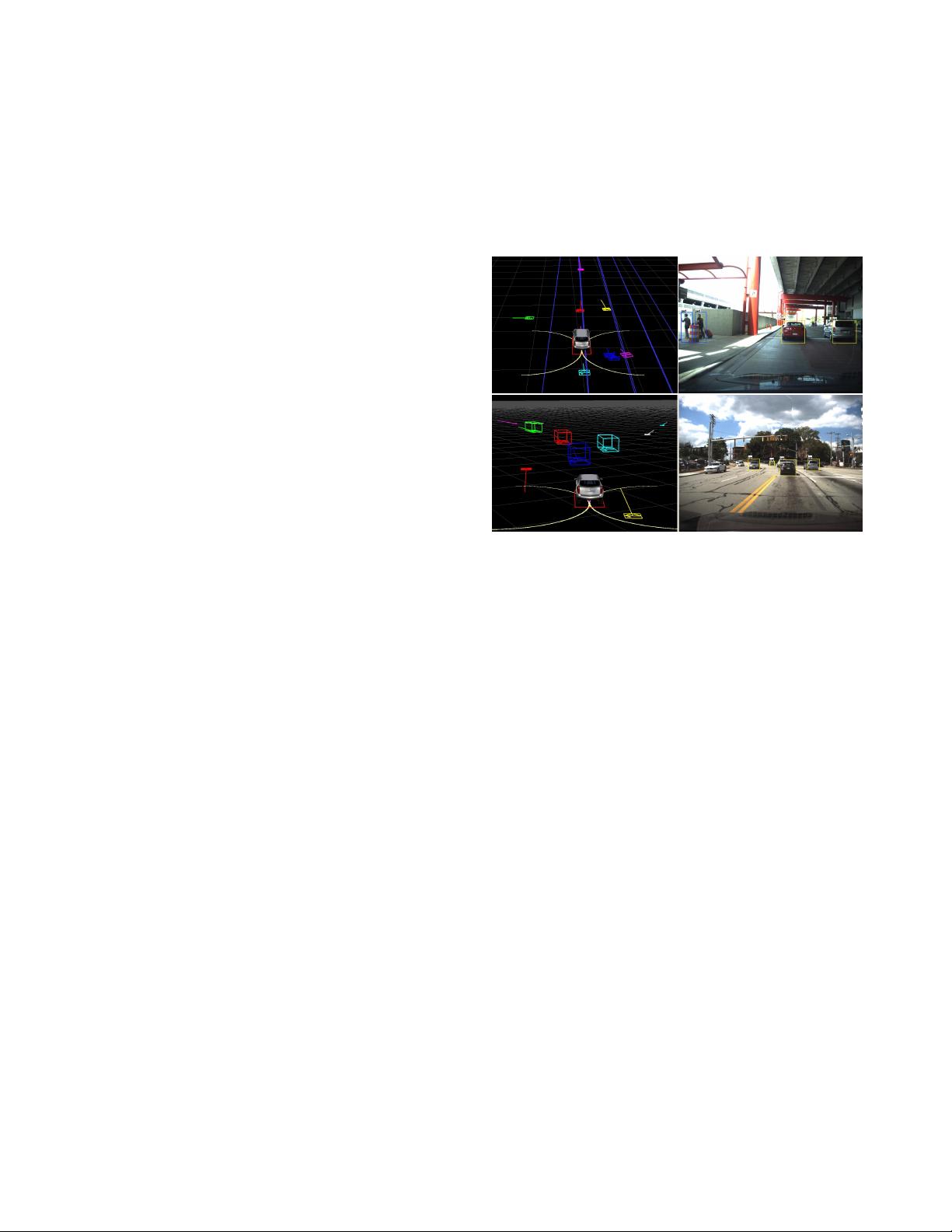

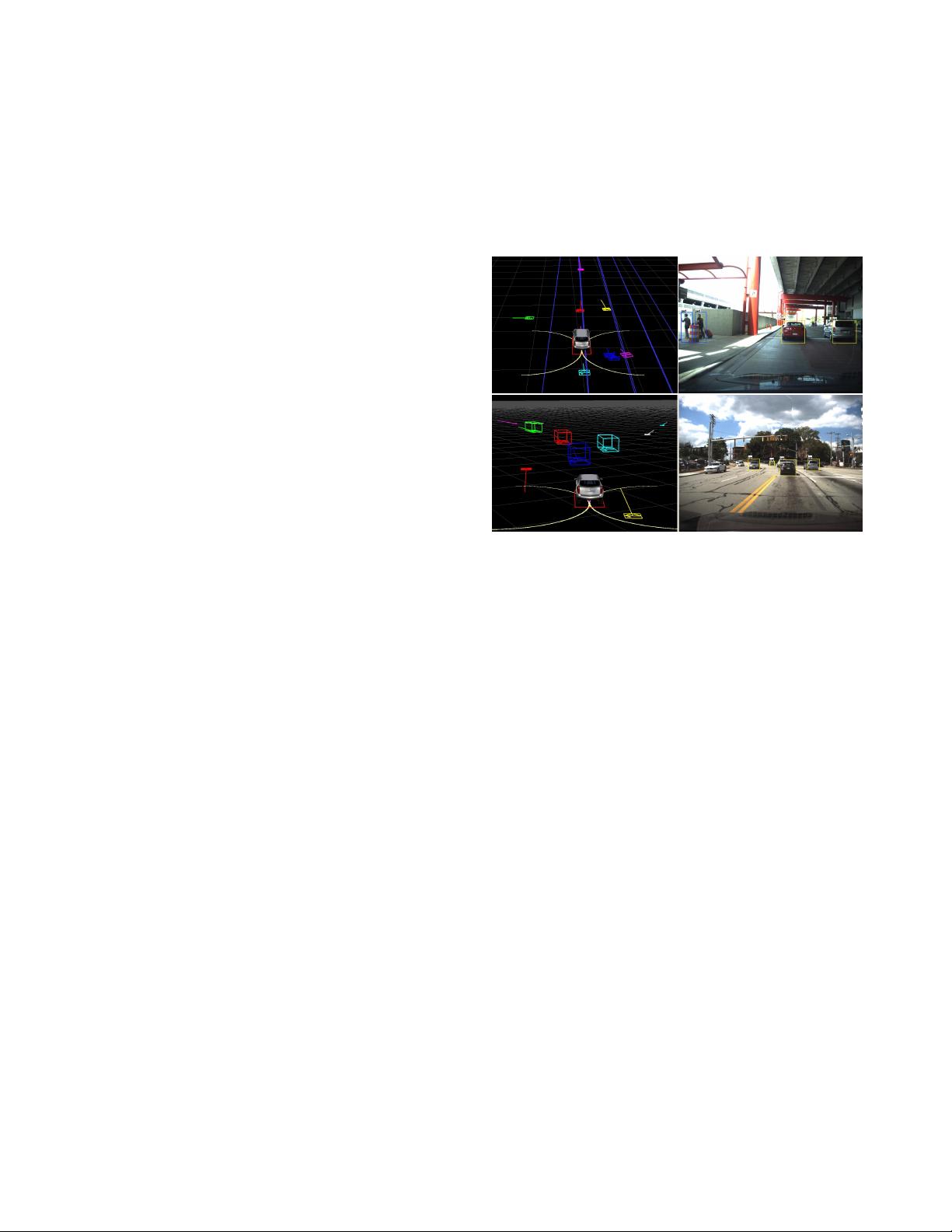

Fig. 1. Sample images showing urban driving environments and screen captures of our tracking system's results. The images in the first row show detection and tracking results from the arrivals area of Pittsburgh International Airport. The two images in the second row show results from an urban street.

To develop such a reliable perception capability for autonomous urban driving, we redesigned our sensing system, extended our earlier moving obstacle tracking system, and introduced new sensors of different modalities. Sections III and IV detail the configuration of these multiple sensors of different modalities. Knowledge of moving objects' classes (e.g., car, pedestrian, bicyclist) is greatly helpful for tracking them reliably and for drawing better inferences about driving contexts. To acquire such knowledge, we exploit vision sensors to identify the classes of moving objects and to enhance measurements from automotive-grade active sensors, such as LIDARs and radars. Section V describes the interactions between our vision-sensor-based object detection system and our active-sensor-based object tracking system. Section VI discusses the experimental results and findings. Section VII summarizes our work and discusses future work.

II. RELATED WORK

Detection and tracking of moving objects is a core task in mobile robotics as well as in the field of intelligent vehicles. Because of this critical role, the subject has been studied extensively over the past decades. Since a comprehensive literature survey of this topic is beyond the scope of this paper (we refer to [12], [16] for such surveys), here we review only the earlier work on multi-sensor fusion for

2014 IEEE International Conference on Robotics & Automation (ICRA)

Hong Kong Convention and Exhibition Center

May 31 - June 7, 2014. Hong Kong, China

978-1-4799-3684-7/14/$31.00 ©2014 IEEE 1836
