REVIEW

Advances in natural

language processing

Julia Hirschberg

1

* and Christopher D. Manning

2,3

Natural language processing emplo y s computational techniques for the purpose of learning,

understanding, and producing human language content. Early computational approaches to

language resear ch focused on automating the analysis of the linguistic structur e of language

and developing basic technologies such as machine translation, speech recognition, and speech

synthesis. Today’s researcher s refine and make use of such tools in real-world applications,

creating spok en dialogue systems and speech-to-speech translation engines, mining social

media for information about health or finance, and identifying sentiment and emotion towar d

products and services. We describe successes and challenges in this rapidly advancing ar ea.

O

ver the past 20 years, computational lin-

guistics has grown into both an exciting

area of scientific research and a practical

technology that is increasingly being in-

corporated into consumer products (for

example, in applications such as Apple’sSiriand

Skype Translator). Four key factors enabled these

developments: (i) a vast increase in computing

power , (ii) the availability of very large amounts

of linguistic data, (iii) the development of highly

successful machine learning (ML) methods, and

(iv) a much richer understanding of the structure

of human language and its deployment in social

contexts. In this Review, we describe some cur-

rent application areas of interest in language

research. These efforts illustrate computational

approaches to big data, based on current cut ting-

edge methodologies that combine statistical anal-

ysis and ML with knowledge of language.

Computational linguistics, also known as nat-

ural language processing (NLP), is the subfield

of computer science concerned with using com-

putational techniques to learn, understand, and

produce human language content. C omputation-

al linguistic systems can have multiple purposes:

The goal can be aiding human-human commu-

nication, such as in machine translation (MT);

aiding human-machine communication, such as

with conversational agents; or benefiting both

humans and machines by analyzing and learn-

ing from the enormous quantity of human lan-

guage content that is now available online.

During the first several decades of work in

computational linguistics, scientists attempted

to write down for computers the vocabularies

and rules of human languages. This proved a

difficult task, owing to the variability, ambiguity,

and context-dependent interpretation of human

languages. For instance, a star can be either an

astronomical object or a person, and “star” can

be a noun or a verb. In another example, two in-

terpretations are possible for the headline “Teacher

strikes idle kids,” depending on the noun, verb, and

adjective assignments of the words in the sentence,

as well as grammatical structure. Beginning in the

1980s, but more widely in the 1990s, NLP was

transformed by researchers starting to build mod-

els over large quantities of empirical language

data. Statistical or corpus (“body of words”)–based

NLP was one of the first notable successes of

the use of big data, long before the power of

ML was more generally recognized or the term

“big data” even introduced.

A central finding of this statistical approach to

NLP has been that simple methods using words,

part-of-speech (POS) sequences (such as whether

a word is a noun, verb, or preposition), or simple

templates can often achieve notable results when

trained on large quantities of data. Many text

and sentiment classifiers are still based solely on

the different sets of words (“bag of words”)that

documents contain, without regard to sentence

and discourse structure or meaning. Achieving

improvements over these simple baseli ne s can be

quite difficult. Nevertheless, the best-performing

systems now use sophisticated ML approaches

and a rich understanding of linguistic structure.

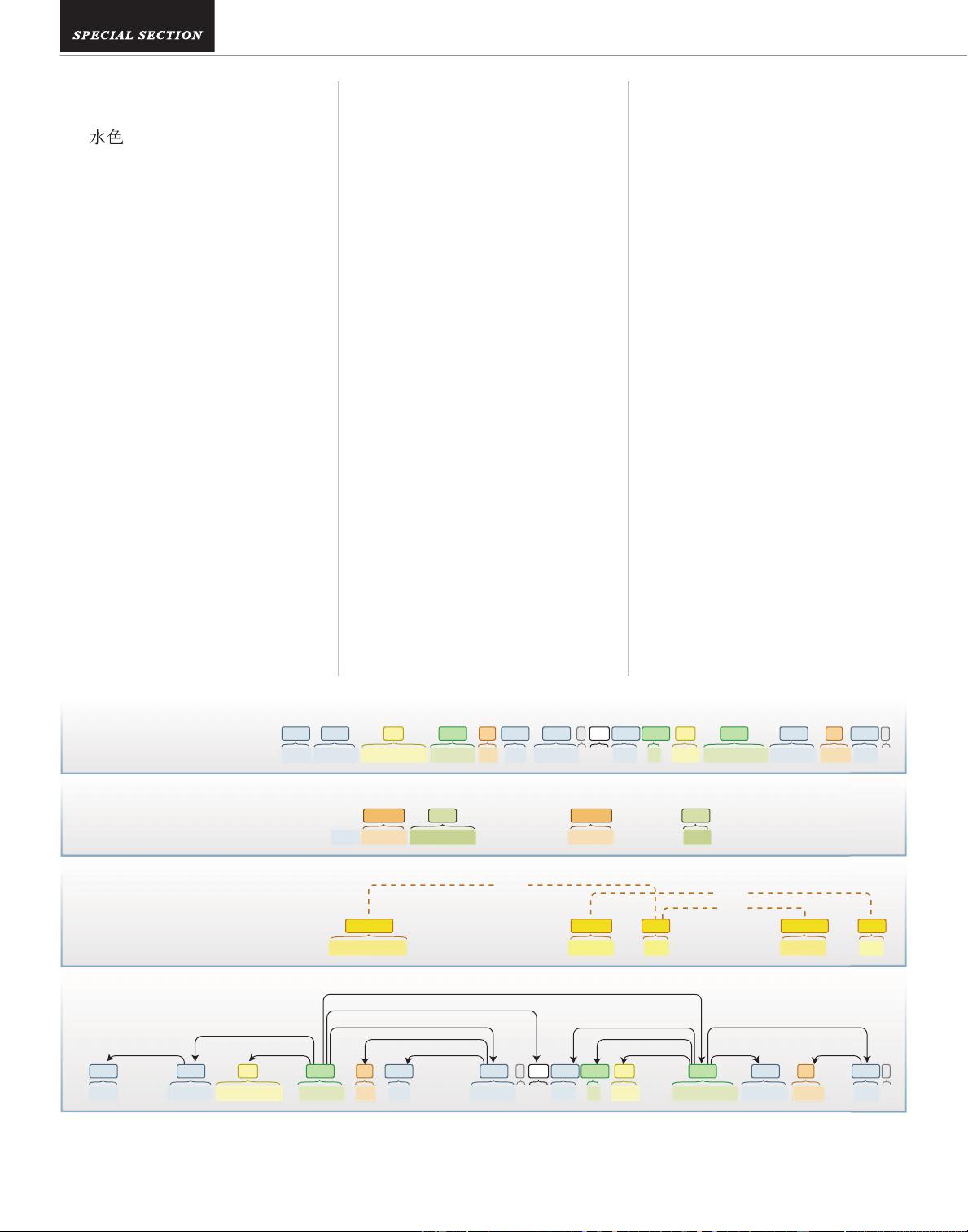

High-performance tools that identify syntactic

and semantic information as well as information

about discourse context are now available. One

example is Stanford CoreNLP (1), which provides

a standard NLP preprocessing pipeline that in-

cludes POS tagging (with tags such as noun, verb,

and preposition); identification of named entities,

such as people , places, and organizations; parsing

of sentences into their grammatical structures ;

and identifying co-references between noun

phrase mentions (Fig. 1).

Historically, two developments enabled the

initial transformation of NLP into a big data field.

The first was the early availability to researchers

of linguistic data in digital form, particularly

through the Linguistic Data Consortium (LDC)

(2), established in 1992. Today, large amounts

of digital text can easily be downloaded from

the Web. Available as linguistically annotated

data are large speech and text corpora anno-

tated with POS tags, syntactic parses, semantic

labels, annotations of named entities (persons,

places, organizations), dialogue acts (statement,

question, request), emotions and positive or neg-

ative sentiment, and discourse structure (topic

or rhetorical structure). Second, performance im-

provements in NLP were spurred on by shared

task competitions. Originally, these competitions

were largely funded and organized by the U.S.

Department of Defense, but they were later or-

ganized by the research community itself, such

as the CoNLL Shared Tasks (3). These tasks were

a precursor of modern ML predictive modeling

and analytics competitions, such as on Kaggle (4),

in which companies and researchers post their

data and statisticians and data miners from all over

theworldcompetetoproducethebestmodels.

A major limitation of NLP today is the fact that

most NLP resources and systems are available

only for high-resource languages (HRLs), such as

English, French, Spanish, German, and Chinese.

In contrast, many low-resource languages (LRLs)—

such as Bengali, Indonesian, Punjabi, Cebuano,

and Swahili—s pok en and wri t ten by millions of

people have no such resources or systems avail-

able.Afuturechallengeforthelanguagecommu-

nity is how to develop resources and tools for

hundreds or thousands of languages, not just a few.

Machine translation

Proficiency in languages was traditionally a hall-

mark of a learned person. Although the social

standing of this human skill has declined in the

modern age of science and machines, translation

between human languages remains crucially im-

portant, and MT is perhaps the most substantial

way in which computers could aid human-human

communication. Moreover, the ability of com-

puters to translate between human languages

remains a consummate test of machine intel-

ligence: Correct translation requires not only

the ability to analyze and generate sentences in

human languages but also a humanlike under-

standing of world knowledge and context, de-

spite the ambiguities of languages. For example,

the French word “bordel” st raightforwardly means

“brothel”; but if someone says “My room is un

bordel,” then a translating machine has to know

enough to suspect that this person is probably not

running a brothel in his or her room but rather is

saying “My room is a complete mess.”

Machine translation was one of the first non-

numeric applications of computers and was studied

intensively starting in the late 1950s. However , the

hand-built grammar-basedsystemsofearlydec-

ades achieved very limited success. The field was

transformed in the early 1990s when researchers

at IBM acquired a large quantity of English and

French sentences that weretranslationsofeach

other (known as parallel text), produced as the

proceedings of the bilingual Canadian Parliament.

These data allowed them to collect statistics of

word translations and word sequences and to

build a probabilistic model of MT (5).

Following a quiet period in the late 1990s,

the new millennium brought the potent combina-

tion of ample online text, including considerable

quantities of parallel text, much more abundant

and inexpensive computing, and a new idea

for building statistical phrase-based MT systems

SCIENCE sciencemag.org 17 J ULY 2015 • VOL 349 ISSUE 6245 261

1

Department of Computer Science, Columbia University, New York,

NY 10027, USA.

2

Department of Linguistics, Stanford University,

Stanford, CA 94305-2150, USA.

3

Department of Computer

Science, Stanford University, Stanford, CA 94305-9020, USA.

*Corresponding author. E-mail: julia@cs.columbia.edu

on July 16, 2015www.sciencemag.orgDownloaded from on July 16, 2015www.sciencemag.orgDownloaded from on July 16, 2015www.sciencemag.orgDownloaded from on July 16, 2015www.sciencemag.orgDownloaded from on July 16, 2015www.sciencemag.orgDownloaded from on July 16, 2015www.sciencemag.orgDownloaded from

我的内容管理

展开

我的内容管理

展开

我的资源

快来上传第一个资源

我的资源

快来上传第一个资源

我的收益 登录查看自己的收益

我的收益 登录查看自己的收益 我的积分

登录查看自己的积分

我的积分

登录查看自己的积分

我的C币

登录后查看C币余额

我的C币

登录后查看C币余额

我的收藏

我的收藏  我的下载

我的下载  下载帮助

下载帮助

前往需求广场,查看用户热搜

前往需求广场,查看用户热搜

信息提交成功

信息提交成功