
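Content summary: This article introduces STIV (Scalable Text and Image Conditioned Video Generation), a video generation model that handles both text-to-video (T2V) and text-image-to-video (TI2V) tasks. The model is based on the Diffusion Transformer (DiT) and achieves high performance and multi-task capability through techniques such as frame replacement and joint image-text classifier-free guidance (JIT-CFG). By systematically studying model architecture, training strategies, and data processing methods, STIV significantly improves the quality and semantic alignment of generated videos. The article also demonstrates STIV's flexibility in a range of downstream applications, such as video prediction, frame interpolation, multi-view generation, and long video generation.

Intended audience: researchers and engineers working in computer vision, deep learning, and video generation, especially readers interested in video generation techniques and multimodal generative models.

Use cases and goals: The framework is mainly intended for researching and developing high-performance video generation models, particularly where text and image conditions must be combined. Applications include high-quality video generation, video editing, animation production, and augmented reality.

Other notes: The article details STIV's architecture design, training pipeline, performance evaluation, and application scenarios, providing a transparent and scalable method for future research. Experimental results show that STIV outperforms existing models on several benchmarks, particularly for high-resolution and long video generation tasks.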


STIV: Scalable Text and Image Conditioned Video Generation

Zongyu Lin¹⋆*, Wei Liu¹⋆, Chen Chen²⋆, Jiasen Lu²⋆, Wenze Hu²⋆, Tsu-Jui Fu²⋆, Jesse Allardice²⋆, Zhengfeng Lai²⋆, Liangchen Song²⋆, Bowen Zhang²⋆, Cha Chen²⋆, Yiran Fei⋆, Yifan Jiang⋆, Lezhi Li⋆, Yizhou Sun⋄†, Kai-Wei Chang⋄†, Yinfei Yang⋄⋆

⋆Apple   †University of California, Los Angeles

Abstract

The field of video generation has made remarkable advancements, yet there remains a pressing need for a clear,

systematic recipe that can guide the development of robust and scalable models. In this work, we present a

comprehensive study that systematically explores the interplay of model architectures, training recipes, and

data curation strategies, culminating in a simple and scalable text-image-conditioned video generation method,

named STIV. Our framework integrates image condition into a Diffusion Transformer (DiT) through frame

replacement, while incorporating text conditioning via a joint image-text conditional classifier-free guidance. This

design enables STIV to perform both text-to-video (T2V) and text-image-to-video (TI2V) tasks simultaneously.

Additionally, STIV can be easily extended to various applications, such as video prediction, frame interpolation,

multi-view generation, and long video generation, etc. With comprehensive ablation studies on T2I, T2V, and TI2V,

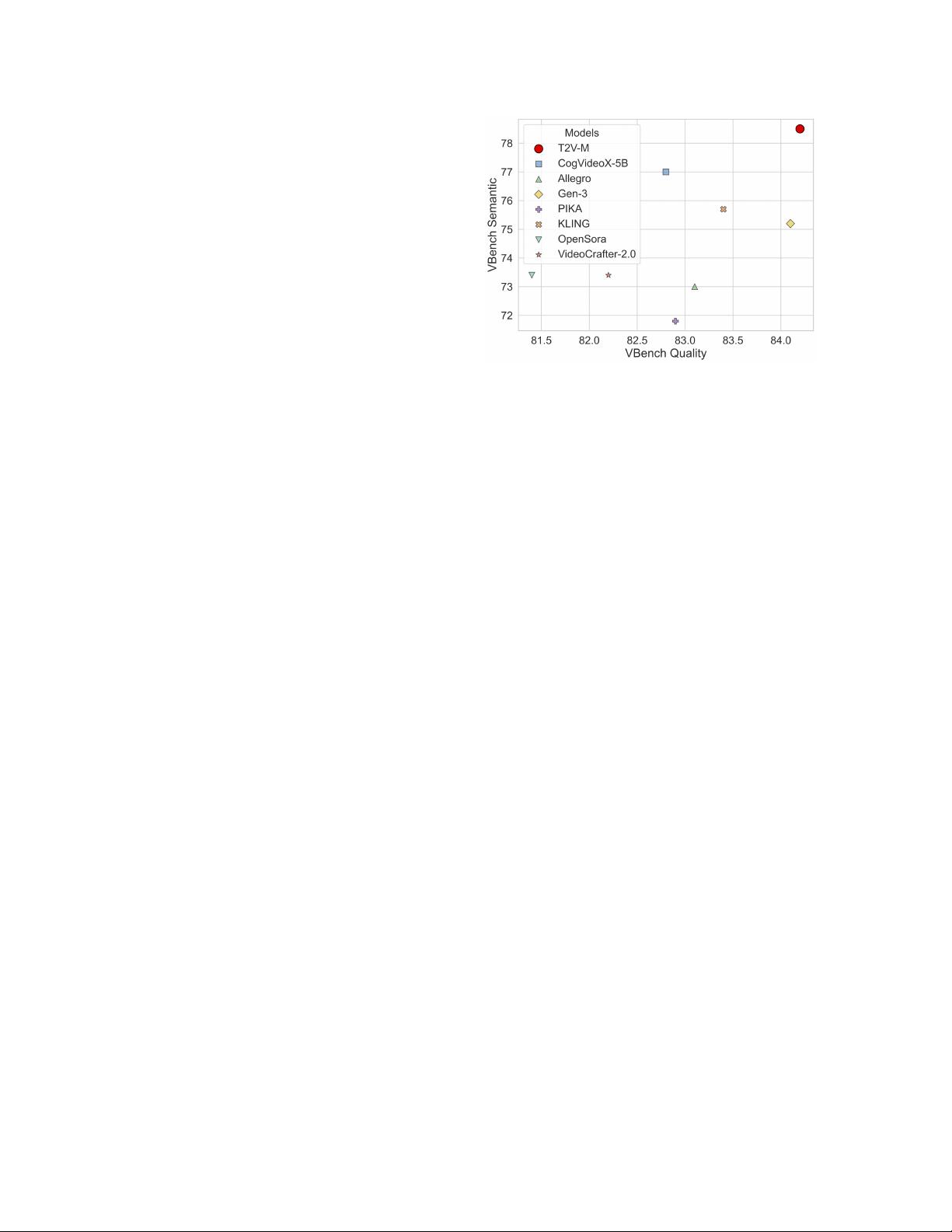

STIV demonstrate strong performance, despite its simple design. An 8.7B model with

512

2

resolution achieves

83.1 on VBench T2V, surpassing both leading open and closed-source models like CogVideoX-5B, Pika, Kling, and

Gen-3. The same-sized model also achieves a state-of-the-art result of 90.1 on VBench I2V task at

512

2

resolution.

By providing a transparent and extensible recipe for building cutting-edge video generation models, we aim to

empower future research and accelerate progress toward more versatile and reliable video generation solutions.

1. Introduction

The field of video generation has witnessed significant progress with the introduction of Sora [42], a video generation model based on the Diffusion Transformer (DiT) [43] architecture. Researchers have been actively exploring optimal methods to incorporate text and other conditions into the DiT architecture. For example, PixArt-α [8] leverages cross-attention, while SD3 [19] concatenates text with the noised patches and applies self-attention using the MMDiT block. Several video generation models [21, 46, 65] adopt similar approaches and have made substantial progress on the text-to-video (T2V) task. Pure T2V approaches often struggle to produce coherent and realistic videos, as their outputs are not grounded in external references or contextual constraints [13]. To address this limitation, text-image-to-video (TI2V) approaches introduce an initial image frame along with the textual prompt, providing more concrete grounding for the generated video.

* This work was done during an internship at Apple. ¹First authors. ²Core authors. ⋄Senior authors.

arXiv:2412.07730v1 [cs.CV] 10 Dec 2024

Figure 1. Performance comparison of our Text-to-Video model against both open-source and closed-source state-of-the-art models on VBench [31].

Despite substantial progress in video generation, achieving Sora-level performance for T2V and TI2V remains challenging. A central challenge is how to seamlessly integrate image-based conditions into the DiT architecture, which calls for innovative techniques that blend visual inputs smoothly with textual cues. Meanwhile, there is a pressing need for stable, efficient large-scale training strategies and for improving the overall quality of training datasets. To address these issues, a comprehensive, step-by-step “recipe” would greatly assist in developing unified models that handle both T2V and TI2V tasks under one framework. Overcoming these challenges is essential for advancing the field and fully realizing the potential of video generation models.

Although various studies [2, 6, 11, 14, 49, 62, 70] have examined methods of integrating image conditions into U-Net architectures, how to effectively incorporate such conditions into the DiT architecture remains unsolved. Moreover, existing studies in video generation often focus on individual aspects independently, overlooking their collective impact on overall performance. For instance, while stability tricks like QK-norm [19, 28] have been introduced, they prove insufficient as models scale to larger sizes [57], and no existing approach has successfully unified T2V and TI2V capabilities within a single model. This lack of systematic, holistic research limits progress toward more efficient and versatile video generation solutions.

In this work, we first present a comprehensive study of model architectures and training strategies to establish a robust foundation for T2V. Our analysis reveals three key insights: (1) stability techniques such as QK-norm and sandwich-norm [17, 25] are critical for effectively scaling larger video generation models; (2) employing factorized spatial-temporal attention [1] and MaskDiT [73], and switching to AdaFactor [54], significantly improve training efficiency and reduce memory usage with minimal performance loss; (3) progressive training, where spatial and temporal layers are initialized from separate models, outperforms using a single model under the same compute constraints. Starting from a PixArt-α baseline architecture, we address scaling challenges with these stability and efficiency measures, and further enhance performance with Flow Matching [41], RoPE [56], and micro conditions [45]. As a result, our largest T2V model (8.7B parameters) achieves state-of-the-art semantic alignment and a VBench score of 83.1.

We then take the optimal model architecture and hyperparameters established in the T2V setting and apply them to the TI2V task. Our results show that simply replacing the first noised latent frame with the un-noised image-condition latent yields strong performance. Although ConsistI2V [49] introduced a similar idea in a U-Net setting, it required spatial self-attention for each frame and window-based temporal self-attention to match our quality. In contrast, the DiT architecture natively propagates the image-conditioned first frame through stacked spatial-temporal attention layers, eliminating the need for these additional operations. However, as we scale up spatial resolution, we observe the model producing slow or nearly static motion. To solve this, we introduce random dropout of the image condition during training and apply joint image-text conditional classifier-free guidance (JIT-CFG) for both text and image conditions during inference. This strategy resolves the motion issue and also enables a single model to excel at both T2V and TI2V tasks.
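The frame-replacement, image-condition-dropout, and JIT-CFG ideas in the paragraph above can be sketched in NumPy as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: latents are assumed to be laid out as (T, H, W, C); when the image condition is dropped, the first frame is assumed to simply stay noised; and JIT-CFG is written in an assumed form, a single guidance scale applied to the difference between the jointly conditioned (text + image) and fully unconditioned predictions. `toy_model`, `null_text_emb`, and all function names are hypothetical.

```python
import numpy as np


def replace_first_frame(noised_latents, image_latent, drop_prob, rng):
    """Frame replacement with random image-condition dropout (training sketch).

    noised_latents: (T, H, W, C) noised video latents x_t (layout assumed).
    image_latent:   (H, W, C) clean VAE latent of the conditioning image.
    Returns the model input and whether the image condition was kept.
    """
    x = noised_latents.copy()
    keep = rng.random() >= drop_prob
    if keep:
        # Overwrite the first noised frame with the un-noised image latent.
        x[0] = image_latent
    # Assumption: when the condition is dropped, the first frame stays noised,
    # so the sample becomes an ordinary T2V training example.
    return x, keep


def jit_cfg(model, x_t, t, image_latent, text_emb, null_text_emb, scale):
    """Joint image-text CFG (assumed form): one guidance scale applied to the
    difference between the fully conditioned and fully unconditioned outputs."""
    x_cond = x_t.copy()
    x_cond[0] = image_latent                 # image condition via frame replacement
    v_cond = model(x_cond, t, text_emb)      # text + image
    v_uncond = model(x_t, t, null_text_emb)  # both conditions dropped
    return v_uncond + scale * (v_cond - v_uncond)


def toy_model(x, t, text_emb):
    """Hypothetical stand-in for the DiT: adds a scalar 'text embedding'."""
    return x + text_emb


rng = np.random.default_rng(0)
x_t = np.ones((4, 2, 2, 3))
image = np.zeros((2, 2, 3))
x_in, kept = replace_first_frame(x_t, image, drop_prob=0.1, rng=rng)
guided = jit_cfg(toy_model, x_t, 0.5, image, text_emb=1.0, null_text_emb=0.0, scale=2.0)
print(guided[0, 0, 0, 0], guided[1, 0, 0, 0])  # 1.0 3.0
```

Because both conditions are dropped together in the unconditioned branch, a single model trained with random image dropout (plus the usual text dropout) can serve both T2V (no replacement) and TI2V (replacement) at inference.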

With all these changes, we finalize our model and scale it up from 600M to 8.7B parameters. Our best STIV

model achieves a state-of-the-art result of 90.1 in the VBench I2V task at

512

2

resolution. Beyond enhancing video

generation quality, we demonstrate the potential of extending our framework to various downstream applications,

including video prediction, frame interpolation, multi-view generation and long video generation. These results

2

Text-to-Video

Prompt: An adorable kangaroo wearing blue jeans and a white t shirt taking a pleasant stroll in Johannesburg

South Africa during a beautiful sunset.

Prompt: A swan with wings tipped in gold gliding across a misty lake, leaving a trail of soft, shimmering light

that fades as the sun rises.

Text-Image-to-Video

Prompt: The video presents a sequence of frames that depict a space scene with a large, green and yellow planet

at the center, surrounded by smaller celestial bodies. The background is a deep blue, speckled with stars.

Prompt: Robots move efficiently through a futuristic laboratory, adjusting holographic displays and conducting

experiments, while scientists observe and interact with the high-tech equipment.

Figure 2. Text-to-Video and Text-Image-to-Video generation samples by T2V and STIV models. The text prompts and first

frame image conditions are borrowed from Sora’s demos [42] and MovieGenBench [46].

3

++

Spatial

Attention

MHA with

QK-norm

Shared

AdaLN

FFN

Scale & Shift

STIV Block

x N

Cross

Attention

MHA With

QK-norm

Q

Linear

Scaled Dot-Product

Attention

MHA with QK-Norm

Frame Replacement +

Image Condition Dropout

Gate

+

A pirate ship sailing

through a

thunderstorm with

enormous waves.

+

Pooled Text

Embedding

VAE Enc

Temporal

Attention

MHA With

QK-norm

Micro Conditions

Timestep

Projection norm

Projection norm

Sequence Text

Embedding

norm

+

Scale & Shift

Gate

RMSNorm

Projection

norm

norm

norm

norm

norm

norm

CLIP Text

Enc

K

Linear

RMSNorm

V

Linear

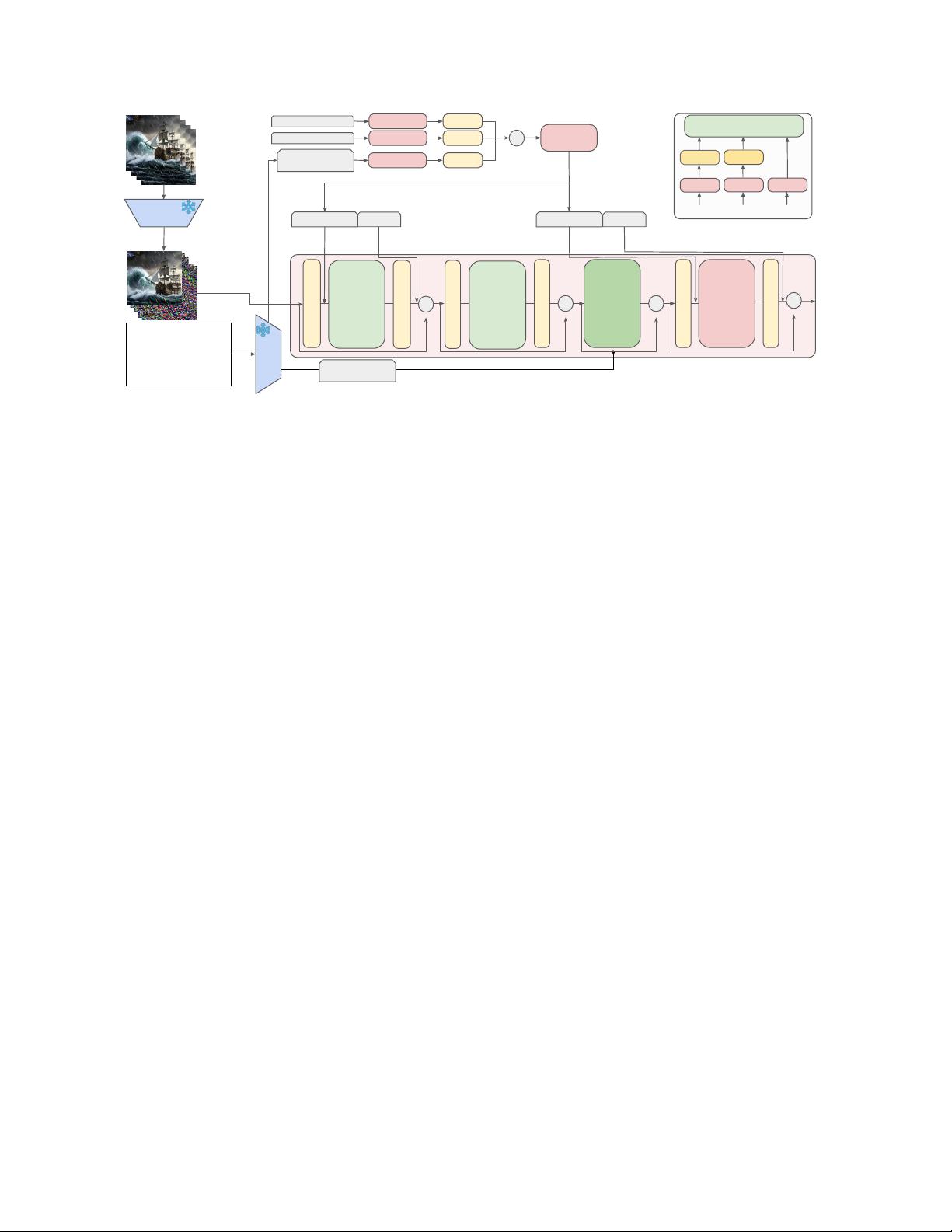

Figure 3. We replace the first frame of the noised video latents with the ground truth latent and randomly drop out the

image condition. We use cross attention to incorporate the text embedding, and use QK-norm in multi-head attention, the

sandwich-norm in both attention and feedforward, and stateless layernorm after singleton conditions to stabilize the training.

validate the scalability and versatility of our approach, showcasing its ability to address diverse video generation

challenges. We summarize our contributions as follows:

• We present STIV, a single model capable of performing both T2V and TI2V tasks. At its core, we replace the first noised latent frame with the un-noised image-condition latent and introduce joint image-text conditioned CFG.
• We conduct a systematic study of T2I, T2V, and TI2V, covering model architectures, efficient and stable training techniques, and progressive training recipes to scale up model size, spatial resolution, and duration.
• These design features make STIV easy to train and adaptable to various tasks, including video prediction, frame interpolation, and long video generation.
• Our experiments include detailed ablation studies on different design choices and hyperparameters, evaluated on VBench, VBench-I2V, and MSRVTT. These studies demonstrate the effectiveness of the proposed model compared with a range of recent state-of-the-art open-source and closed-source video generation models. Some of the generated videos are shown in Fig. 2; more examples can be found in Sec. K of the Appendix.

2. Basics for STIV

This section describes the key components of STIV, our proposed method for text-image-to-video (TI2V) generation, which is illustrated in Fig. 3. Sections 3 and 4 then present detailed experimental results.

2.1. Base Model Architecture

The STIV model is based on PixArt-α [8], which converts the input frames into spatial and temporal latent embeddings using a frozen Variational Autoencoder (VAE). These embeddings are then processed by a stack of learnable DiT-like blocks. We employ the T5 [48] tokenizer and an internally trained CLIP [47] text encoder to process text prompts. The overall framework is illustrated in Fig. 3; for more details, please refer to the appendix. Other significant architectural changes are outlined below.

Spatial-Temporal Attention We employ factorized spatial and temporal attention [1] to handle video frames. We first fold the temporal dimension into the batch dimension and perform spatial self-attention on spatial tokens. Then, we permute the outputs and fold the spatial dimension into the batch dimension to perform temporal self-attention on temporal tokens. Factorizing the attention this way also lets us easily preload weights from a text-to-image (T2I) model: images are a special case of videos with only one temporal token, so they need only spatial attention.
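The fold-and-permute steps above can be sketched with NumPy reshapes. This is a simplified illustration, not the STIV layer: it assumes a (B, T, S, D) token layout and uses single-head attention with identity Q/K/V projections, omitting QK-norm, RoPE, and the learned projections so that only the data movement is shown.

```python
import numpy as np


def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)  # numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def self_attention(x):
    """Single-head self-attention over the sequence axis with identity
    projections (simplification). x: (batch, seq, dim)."""
    d = x.shape[-1]
    scores = x @ np.swapaxes(x, -1, -2) / np.sqrt(d)
    return softmax(scores) @ x


def factorized_st_attention(x):
    """x: (B, T, S, D) video tokens: T frames, S spatial tokens per frame."""
    B, T, S, D = x.shape
    # Spatial attention: fold time into batch -> (B*T, S, D).
    h = self_attention(x.reshape(B * T, S, D)).reshape(B, T, S, D)
    # Temporal attention: permute so space folds into batch -> (B*S, T, D).
    h = h.transpose(0, 2, 1, 3).reshape(B * S, T, D)
    h = self_attention(h).reshape(B, S, T, D).transpose(0, 2, 1, 3)
    return h


rng = np.random.default_rng(0)
video = rng.standard_normal((2, 8, 16, 32))  # B=2, T=8, S=16, D=32
print(factorized_st_attention(video).shape)  # (2, 8, 16, 32)
```

With T = 1 (an image), the temporal step attends over a single token and reduces to the identity, which is why T2I weights for the spatial layers can be reused directly.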

Singleton Condition We use the original image resolution, crop coordinates, sampling stride, and number of frames as micro conditions to encode the meta information of the training data. We first use a sinusoidal embedding layer to encode these properties, followed by an MLP to project them into a d-dimensional embedding space. These micro-condition embeddings, the diffusion timestep embedding, and the last text token embedding from the last layer of the CLIP model are each normalized with stateless layer normalization and then added together to form a singleton condition. This singleton condition is used to produce shared scale-shift-gate parameters that are used in the spatial attention and feed-forward layers of each Transformer layer.
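A minimal NumPy sketch of how such a singleton condition could be assembled. Assumptions not stated in the text: the standard sinusoidal formula, a two-layer SiLU MLP per property, the CLIP token already projected to dimension d, and a single linear projection producing six vectors (scale, shift, gate for attention and for the FFN). All names and sizes are hypothetical.

```python
import numpy as np


def sinusoidal_embedding(value, dim, max_period=10000.0):
    """Sinusoidal embedding of a scalar, as commonly used for diffusion timesteps."""
    half = dim // 2
    freqs = np.exp(-np.log(max_period) * np.arange(half) / half)
    args = value * freqs
    return np.concatenate([np.cos(args), np.sin(args)])


def stateless_layer_norm(x, eps=1e-6):
    """LayerNorm without learnable scale or bias."""
    return (x - x.mean()) / np.sqrt(x.var() + eps)


def mlp(x, w1, w2):
    """Two-layer MLP with a SiLU activation (hypothetical sizes)."""
    h = x @ w1
    h = h / (1.0 + np.exp(-h))  # SiLU(h) = h * sigmoid(h)
    return h @ w2


def singleton_condition(micro, timestep, clip_last_token, params, dim):
    """Sum of stateless-normalized condition embeddings -> one (dim,) vector."""
    embeddings = [mlp(sinusoidal_embedding(v, dim), *params[name])
                  for name, v in micro.items()]
    embeddings.append(mlp(sinusoidal_embedding(timestep, dim), *params["timestep"]))
    embeddings.append(clip_last_token)  # assumed already projected to dim
    return sum(stateless_layer_norm(e) for e in embeddings)


def shared_modulation(cond, w_mod):
    """Project once into scale/shift/gate for attention and for the FFN."""
    return np.split(cond @ w_mod, 6)


rng = np.random.default_rng(0)
dim = 16
names = ["orig_height", "orig_width", "crop_top", "crop_left", "stride", "num_frames"]
params = {n: (rng.standard_normal((dim, dim)), rng.standard_normal((dim, dim)))
          for n in names + ["timestep"]}
micro = dict(zip(names, [720, 1280, 0, 0, 2, 16]))
cond = singleton_condition(micro, 500, rng.standard_normal(dim), params, dim)
attn_scale, attn_shift, attn_gate, ffn_scale, ffn_shift, ffn_gate = shared_modulation(
    cond, rng.standard_normal((dim, 6 * dim)))
```

Normalizing each embedding before summing keeps any single condition (e.g. a large-magnitude resolution embedding) from dominating the shared modulation signal.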

Rotary Positional Embedding Rotary Positional Embeddings (RoPE) [56] are used so that the model has a strong inductive bias for processing relative temporal and spatial relationships. Additionally, RoPE can be made compatible with the masking methods used in high-compute applications and is highly adaptable to variations in resolution [76]. We apply 2D RoPE [39] for the spatial attention and 1D RoPE for the temporal attention inside the factorized spatial-temporal attention.
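A NumPy sketch of 1D RoPE (for temporal attention over frame indices) and a 2D variant (for spatial attention over row and column indices). The 2D construction here, rotating the first half of the channels by the row index and the second half by the column index, is a common choice and an assumption, not necessarily the exact scheme of [39]; it requires the channel count to be divisible by 4.

```python
import numpy as np


def rope_1d(x, positions, base=10000.0):
    """Rotate channel pairs of x (..., n, d) by position-dependent angles."""
    d = x.shape[-1]
    assert d % 2 == 0, "channel count must be even"
    inv_freq = base ** (-np.arange(0, d, 2) / d)       # (d/2,)
    angles = positions[:, None] * inv_freq[None, :]    # (n, d/2)
    cos, sin = np.cos(angles), np.sin(angles)
    x1, x2 = x[..., 0::2], x[..., 1::2]
    out = np.empty_like(x)
    out[..., 0::2] = x1 * cos - x2 * sin
    out[..., 1::2] = x1 * sin + x2 * cos
    return out


def rope_2d(x, rows, cols):
    """Assumed 2D RoPE: first half of channels encodes the row, second half the column."""
    d = x.shape[-1]
    return np.concatenate([rope_1d(x[..., : d // 2], rows),
                           rope_1d(x[..., d // 2:], cols)], axis=-1)


rng = np.random.default_rng(0)
q, k = rng.standard_normal((1, 8)), rng.standard_normal((1, 8))


def score(m, n):
    """Attention logit between a query at position m and a key at position n."""
    return (rope_1d(q, np.array([m])) @ rope_1d(k, np.array([n])).T).item()


print(np.isclose(score(3.0, 1.0), score(10.0, 8.0)))  # True: depends only on m - n

h, w, d = 4, 4, 16
tokens = rng.standard_normal((h * w, d))
rows, cols = np.divmod(np.arange(h * w), w)  # row = idx // w, col = idx % w
spatial = rope_2d(tokens, rows, cols)
```

The relative-position property shown by `score` is what gives RoPE its inductive bias for relative temporal and spatial relationships, independent of the absolute frame index or grid size.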

Flow Matching Instead of employing the conventional diffusion loss, we opt for a Flow Matching training objective. This objective defines a conditional optimal transport between two examples drawn from a source and a target distribution. In our case, we assume the source distribution to be Gaussian and use linear interpolation [41] to achieve this:

$$x_t = t \cdot x_1 + (1 - t) \cdot \epsilon \qquad (1)$$

The training objective is then formulated as

$$\min_\theta \; \mathbb{E}_{x,\, \epsilon \sim \mathcal{N}(0, I),\, c,\, t} \Big[ \big\lVert F_\theta(x_t, c, t) - v_t \big\rVert_2^2 \Big] \qquad (2)$$

where the velocity vector field is $v_t = x_1 - \epsilon$.

At inference time, we solve the corresponding reverse-time SDE from timestep 0 to 1 to generate samples from randomly sampled Gaussian noise $\epsilon$.
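Eqs. (1) and (2) translate almost directly into code. The NumPy sketch below computes the interpolant, the velocity target, and a Monte-Carlo estimate of the loss, plus an explicit-Euler sampler. Assumptions: t is drawn uniformly from [0, 1) (other timestep samplers are common), `model` is any callable `model(x_t, c, t)`, and the sampler is a deterministic ODE integrator, a common choice for flow matching that is swapped in here for illustration; it does not reproduce the SDE solver mentioned above.

```python
import numpy as np


def interpolate(x1, eps, t):
    """Eq. (1): point on the straight path from noise eps (t=0) to data x1 (t=1),
    together with the regression target v_t = x1 - eps from Eq. (2)."""
    x_t = t * x1 + (1.0 - t) * eps
    v_t = x1 - eps
    return x_t, v_t


def flow_matching_loss(model, x1, cond, rng):
    """Monte-Carlo estimate of Eq. (2) for a batch x1 of shape (B, ...)."""
    eps = rng.standard_normal(x1.shape)
    t = rng.uniform(size=(x1.shape[0],) + (1,) * (x1.ndim - 1))  # assumed sampler
    x_t, v_t = interpolate(x1, eps, t)
    err = model(x_t, cond, t) - v_t
    # Squared L2 norm per example, averaged over the batch.
    return float(np.mean(np.sum(err.reshape(x1.shape[0], -1) ** 2, axis=1)))


def euler_sample(velocity, eps, num_steps):
    """Integrate dx/dt = velocity(x, t) from t=0 (noise) to t=1 (data) with
    explicit Euler steps (deterministic ODE sampler, not an SDE solver)."""
    x, dt = eps.copy(), 1.0 / num_steps
    for i in range(num_steps):
        x = x + dt * velocity(x, i * dt)
    return x


rng = np.random.default_rng(0)
x1 = rng.standard_normal((1, 4, 8, 8))  # one toy "video latent"
# For a single data point, the exact velocity along the path is (x1 - x) / (1 - t).
oracle = lambda x, t: (x1 - x) / (1.0 - t)
sample = euler_sample(oracle, rng.standard_normal(x1.shape), num_steps=10)
print(np.allclose(sample, x1))  # True
```

Because the interpolation path is a straight line, the oracle velocity is constant along it and even a handful of Euler steps lands on the data point; a learned $F_\theta$ only approximates this field.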

2.2. Model Scaling

As we scale up the model, we encounter training instability and infrastructure challenges in fitting larger models into memory. In this section, we outline methods to stabilize training and enhance training efficiency.

Stable Training Recipes We discovered that QK-Norm, which applies RMSNorm [68] to the query and key vectors prior to computing attention logits, significantly stabilizes training. This finding aligns with the results reported in SD3 [19]. We also change from pre-norm to sandwich-norm [17] for both MHA and FFN, which involves adding pre-norm and post-norm with stateless layer normalization [37] to both layers within the STIV block:

MHA(x) = x + gate · norm (Attn (scale · norm(x) + shift))

FFN(x) = x + gate · norm (MLP (scale · norm(x) + shift))
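The two equations above, together with QK-norm, can be sketched in NumPy. This is a simplified, single-head illustration under assumptions: `norm` is stateless LayerNorm as stated in the text, the RMSNorm gains `gq`/`gk` are learnable vectors, and `scale`, `shift`, `gate` are passed in directly (in the model they would come from the shared singleton-condition modulation). Function names are hypothetical.

```python
import numpy as np


def rms_norm(x, weight, eps=1e-6):
    """RMSNorm over the last axis with a learnable gain."""
    return x / np.sqrt(np.mean(x ** 2, axis=-1, keepdims=True) + eps) * weight


def layer_norm(x, eps=1e-6):
    """Stateless LayerNorm: no learnable affine parameters."""
    mu = x.mean(axis=-1, keepdims=True)
    var = x.var(axis=-1, keepdims=True)
    return (x - mu) / np.sqrt(var + eps)


def softmax(x):
    x = x - x.max(axis=-1, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=-1, keepdims=True)


def attn_qk_norm(h, wq, wk, wv, wo, gq, gk):
    """Single-head attention with RMSNorm on queries and keys (QK-norm)."""
    q = rms_norm(h @ wq, gq)
    k = rms_norm(h @ wk, gk)
    v = h @ wv
    scores = q @ k.T / np.sqrt(q.shape[-1])  # logits bounded by normalized q, k
    return softmax(scores) @ v @ wo


def sandwich_block(x, sublayer, scale, shift, gate):
    """x + gate * norm(sublayer(scale * norm(x) + shift)), as in MHA(x) and FFN(x)."""
    return x + gate * layer_norm(sublayer(scale * layer_norm(x) + shift))


rng = np.random.default_rng(0)
n, d = 6, 16
x = rng.standard_normal((n, d))
wq, wk, wv, wo = (rng.standard_normal((d, d)) / np.sqrt(d) for _ in range(4))
ones, zeros = np.ones(d), np.zeros(d)
attn = lambda h: attn_qk_norm(h, wq, wk, wv, wo, ones, ones)
y = sandwich_block(x, attn, scale=ones, shift=zeros, gate=ones)
```

The post-norm bounds the magnitude of each sublayer's contribution to the residual stream, and QK-norm bounds the attention logits; both target the growth in activations that makes large models unstable.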

Efficient DiT Training We follow MaskDiT [73] by randomly masking 50% of spatial tokens before passing them into the main DiT blocks. After unmasking, we add two additional DiT blocks. We also switch from AdamW to the AdaFactor optimizer and employ gradient checkpointing to store only the self-attention outputs. These modifications significantly enhance efficiency and reduce memory consumption, enabling the training of larger models at higher resolution and longer duration.
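A NumPy sketch of MaskDiT-style masking and unmasking for video tokens. Assumptions not stated in the text: tokens are laid out as (T, S, D), the same random spatial positions are dropped in every frame (so each position's temporal sequence stays aligned for the factorized temporal attention), and dropped positions are refilled with a single mask-token vector before the two extra DiT blocks. Function names are hypothetical.

```python
import numpy as np


def mask_spatial_tokens(x, mask_ratio, rng):
    """x: (T, S, D). Keep a random subset of spatial positions, shared by all frames."""
    T, S, D = x.shape
    n_keep = int(round(S * (1.0 - mask_ratio)))
    keep_idx = np.sort(rng.permutation(S)[:n_keep])
    return x[:, keep_idx], keep_idx


def unmask_spatial_tokens(kept, keep_idx, num_spatial, mask_token):
    """Scatter kept tokens back to their positions; fill the rest with mask_token."""
    T, _, D = kept.shape
    full = np.broadcast_to(mask_token, (T, num_spatial, D)).copy()
    full[:, keep_idx] = kept
    return full


rng = np.random.default_rng(0)
video_tokens = rng.standard_normal((8, 256, 64))  # T=8 frames, S=256, D=64
kept, idx = mask_spatial_tokens(video_tokens, mask_ratio=0.5, rng=rng)
print(kept.shape)  # (8, 128, 64): the main DiT blocks see half the spatial tokens
restored = unmask_spatial_tokens(kept, idx, 256, mask_token=np.zeros(64))
# `restored` would then pass through the two additional DiT blocks.
```

Halving the spatial sequence roughly halves the cost of the feed-forward layers and reduces spatial attention cost by about 4×, which is where most of the savings come from.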
