

Grad-CAM: Visual Explanations from Deep Networks via Gradient-based Localization

Ramprasaath R. Selvaraju · Michael Cogswell · Abhishek Das · Ramakrishna Vedantam · Devi Parikh · Dhruv Batra

Abstract

We propose a technique for producing ‘visual explanations’ for decisions from a large class of Convolutional Neural Network (CNN)-based models, making them more transparent and explainable.

Our approach, Gradient-weighted Class Activation Mapping (Grad-CAM), uses the gradients of any target concept (say ‘dog’ in a classification network or a sequence of words in a captioning network) flowing into the final convolutional layer to produce a coarse localization map highlighting the important regions in the image for predicting the concept.

Unlike previous approaches, Grad-CAM is applicable to a wide variety of CNN model-families: (1) CNNs with fully-connected layers (e.g. VGG), (2) CNNs used for structured outputs (e.g. captioning), (3) CNNs used in tasks with multi-modal inputs (e.g. visual question answering) or reinforcement learning, all without architectural changes or re-training.

We combine Grad-CAM with existing fine-grained visualizations to create a high-resolution class-discriminative visualization, Guided Grad-CAM, and apply it to image classification, image captioning, and visual question answering (VQA) models, including ResNet-based architectures.

In the context of image classification models, our visualizations (a) lend insights into failure modes of these models (showing that seemingly unreasonable predictions have reasonable explanations), (b) outperform previous methods on the ILSVRC-15 weakly-supervised localization task, (c) are robust to adversarial perturbations, (d) are more faithful to the underlying model, and (e) help achieve model generalization by identifying dataset bias.

For image captioning and VQA, our visualizations show that even non-attention based models learn to localize discriminative regions of the input image.

We devise a way to identify important neurons through Grad-CAM and combine it with neuron names [4] to provide textual explanations for model decisions. Finally, we design and conduct human studies to measure whether Grad-CAM explanations help users establish appropriate trust in predictions from deep networks and show that Grad-CAM helps untrained users successfully discern a ‘stronger’ deep network from a ‘weaker’ one even when both make identical predictions. Our code is available at https://github.com/ramprs/grad-cam/, along with a demo on CloudCV [2]¹, and a video at youtu.be/COjUB9Izk6E.

Ramprasaath R. Selvaraju, Georgia Institute of Technology, Atlanta, GA, USA. E-mail: ramprs@gatech.edu

Michael Cogswell, Georgia Institute of Technology, Atlanta, GA, USA. E-mail: cogswell@gatech.edu

Abhishek Das, Georgia Institute of Technology, Atlanta, GA, USA. E-mail: abhshkdz@gatech.edu

Ramakrishna Vedantam, Georgia Institute of Technology, Atlanta, GA, USA. E-mail: vrama@gatech.edu

Devi Parikh, Georgia Institute of Technology, Atlanta, GA, USA; Facebook AI Research, Menlo Park, CA, USA. E-mail: parikh@gatech.edu

Dhruv Batra, Georgia Institute of Technology, Atlanta, GA, USA; Facebook AI Research, Menlo Park, CA, USA. E-mail: dbatra@gatech.edu

1 Introduction

Deep neural models based on Convolutional Neural Networks (CNNs) have enabled unprecedented breakthroughs in a variety of computer vision tasks, from image classification [33, 24], object detection [21], and semantic segmentation [37] to image captioning [55, 7, 18, 29], visual question answering [3, 20, 42, 46] and, more recently, visual dialog [11, 13, 12] and embodied question answering [10, 23]. While these models enable superior performance, their lack of decomposability into individually intuitive components makes them hard to interpret [36]. Consequently, when today’s intelligent systems fail, they often fail spectacularly and disgracefully, without warning or explanation, leaving a user staring at an incoherent output, wondering why the system did what it did.

¹ http://gradcam.cloudcv.org

arXiv:1610.02391v4 [cs.CV] 3 Dec 2019

Interpretability matters. In order to build trust in intelligent systems and move towards their meaningful integration into our everyday lives, it is clear that we must build ‘transparent’ models that have the ability to explain why they predict what they predict. Broadly speaking, this transparency and ability to explain is useful at three different stages of Artificial Intelligence (AI) evolution. First, when AI is significantly weaker than humans and not yet reliably deployable (e.g. visual question answering [3]), the goal of transparency and explanations is to identify the failure modes [1, 25], thereby helping researchers focus their efforts on the most fruitful research directions. Second, when AI is on par with humans and reliably deployable (e.g., image classification [30] trained on sufficient data), the goal is to establish appropriate trust and confidence in users. Third, when AI is significantly stronger than humans (e.g. chess or Go [50]), the goal of explanations is machine teaching [28], i.e., a machine teaching a human how to make better decisions.

There typically exists a trade-off between accuracy and simplicity or interpretability. Classical rule-based or expert systems [26] are highly interpretable but not very accurate (or robust). Decomposable pipelines where each stage is hand-designed are thought to be more interpretable as each individual component assumes a natural intuitive explanation. By using deep models, we sacrifice interpretable modules for uninterpretable ones that achieve greater performance through greater abstraction (more layers) and tighter integration (end-to-end training). Recently introduced deep residual networks (ResNets) [24] are over 200 layers deep and have shown state-of-the-art performance in several challenging tasks. Such complexity makes these models hard to interpret. As such, deep models are beginning to explore the spectrum between interpretability and accuracy.

Zhou et al. [59] recently proposed a technique called Class Activation Mapping (CAM) for identifying discriminative regions used by a restricted class of image classification CNNs which do not contain any fully-connected layers. In essence, this work trades off model complexity and performance for more transparency into the working of the model. In contrast, we make existing state-of-the-art deep models interpretable without altering their architecture, thus avoiding the interpretability vs. accuracy trade-off. Our approach is a generalization of CAM [59] and is applicable to a significantly broader range of CNN model families: (1) CNNs with fully-connected layers (e.g. VGG), (2) CNNs used for structured outputs (e.g. captioning), (3) CNNs used in tasks with multi-modal inputs (e.g. VQA) or reinforcement learning, without requiring architectural changes or re-training.

What makes a good visual explanation? Consider image classification [14]: a ‘good’ visual explanation from the model for justifying any target category should be (a) class-discriminative (i.e. localize the category in the image) and (b) high-resolution (i.e. capture fine-grained detail).

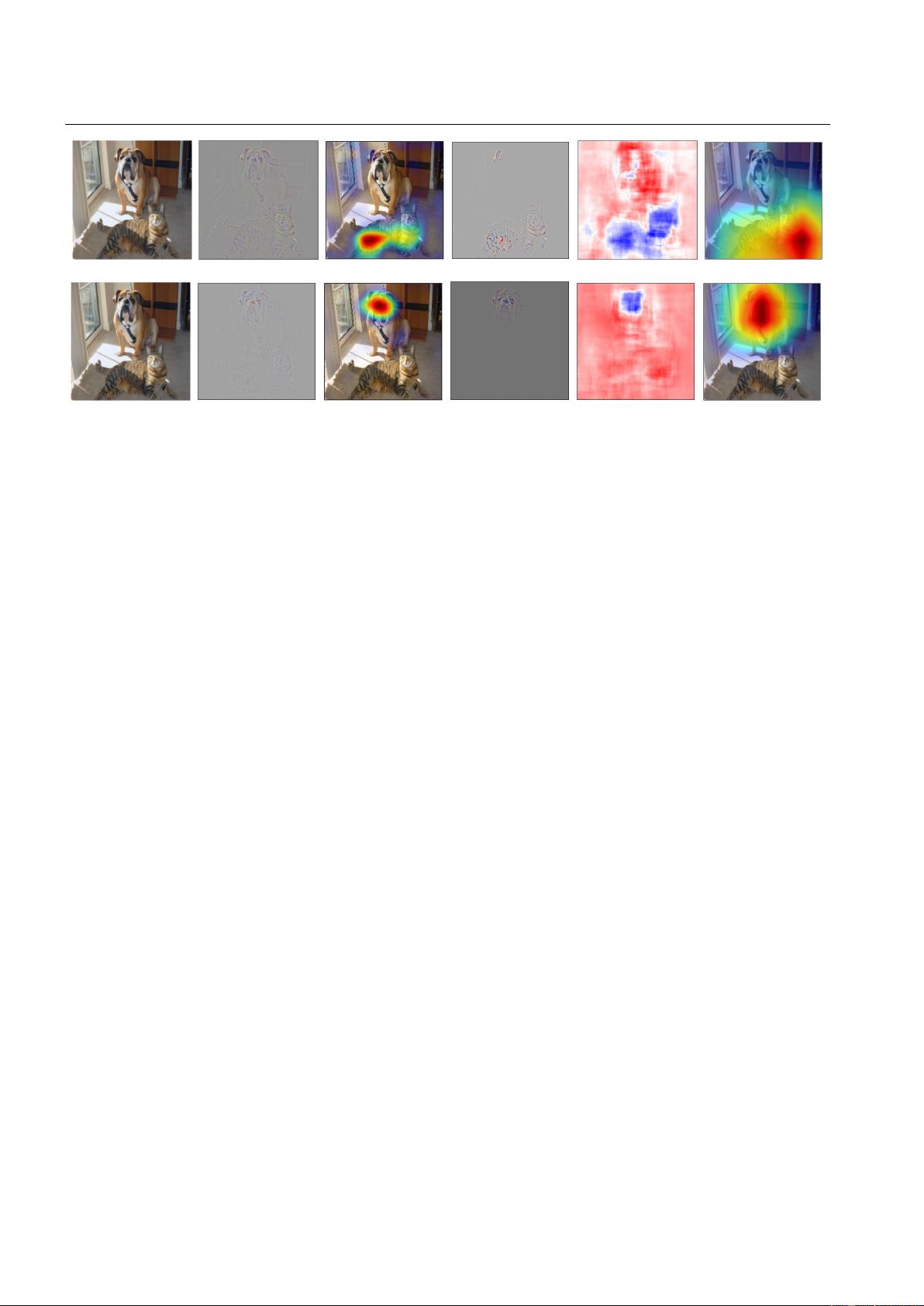

Fig. 1 shows outputs from a number of visualizations for the ‘tiger cat’ class (top) and ‘boxer’ (dog) class (bottom). Pixel-space gradient visualizations such as Guided Backpropagation [53] and Deconvolution [57] are high-resolution and highlight fine-grained details in the image, but are not class-discriminative (Fig. 1b and Fig. 1h are very similar).

In contrast, localization approaches like CAM or our proposed method Gradient-weighted Class Activation Mapping (Grad-CAM), are highly class-discriminative (the ‘cat’ explanation exclusively highlights the ‘cat’ regions but not ‘dog’ regions in Fig. 1c, and vice versa in Fig. 1i).

In order to combine the best of both worlds, we show that it is possible to fuse existing pixel-space gradient visualizations with Grad-CAM to create Guided Grad-CAM visualizations that are both high-resolution and class-discriminative. As a result, important regions of the image which correspond to any decision of interest are visualized in high-resolution detail even if the image contains evidence for multiple possible concepts, as shown in Figures 1d and 1j. When visualized for ‘tiger cat’, Guided Grad-CAM not only highlights the cat regions, but also highlights the stripes on the cat, which are important for predicting that particular variety of cat.

To summarize, our contributions are as follows:

(1) We introduce Grad-CAM, a class-discriminative localization technique that generates visual explanations for any CNN-based network without requiring architectural changes or re-training. We evaluate Grad-CAM for localization (Sec. 4.1), and faithfulness to model (Sec. 5.3), where it outperforms baselines.

(2) We apply Grad-CAM to existing top-performing classification, captioning (Sec. 8.1), and VQA (Sec. 8.2) models. For image classification, our visualizations lend insight into failures of current CNNs (Sec. 6.1), showing that seemingly unreasonable predictions have reasonable explanations. For captioning and VQA, our visualizations expose that common CNN + LSTM models are often surprisingly good at localizing discriminative image regions despite not being trained on grounded image-text pairs.

(3) We show a proof-of-concept of how interpretable Grad-CAM visualizations help in diagnosing failure modes by uncovering biases in datasets. This is important not just for generalization, but also for fair and bias-free outcomes as more and more decisions are made by algorithms in society.

(4) We present Grad-CAM visualizations for ResNets [24] applied to image classification and VQA (Sec. 8.2).


Panels, top row (‘Cat’): (a) Original Image, (b) Guided Backprop, (c) Grad-CAM, (d) Guided Grad-CAM, (e) Occlusion map, (f) ResNet Grad-CAM. Bottom row (‘Dog’): (g) Original Image, (h) Guided Backprop, (i) Grad-CAM, (j) Guided Grad-CAM, (k) Occlusion map, (l) ResNet Grad-CAM.

Fig. 1: (a) Original image with a cat and a dog. (b-f) Support for the cat category according to various visualizations for VGG-16 and ResNet. (b) Guided Backpropagation [53]: highlights all contributing features. (c, f) Grad-CAM (Ours): localizes class-discriminative regions. (d) Combining (b) and (c) gives Guided Grad-CAM, which gives high-resolution class-discriminative visualizations. Interestingly, the localizations achieved by our Grad-CAM technique (c) are very similar to results from occlusion sensitivity (e), while being orders of magnitude cheaper to compute. (f, l) are Grad-CAM visualizations for ResNet-18 layer. Note that in (c, f, i, l), red regions correspond to a high score for the class, while in (e, k), blue corresponds to evidence for the class. Figure best viewed in color.

(5) We use neuron importance from Grad-CAM and neuron names from [4] and obtain textual explanations for model decisions (Sec. 7).

(6) We conduct human studies (Sec. 5) that show Guided Grad-CAM explanations are class-discriminative and not only help humans establish trust, but also help untrained users successfully discern a ‘stronger’ network from a ‘weaker’ one, even when both make identical predictions.

Paper Organization: The rest of the paper is organized as follows. In section 3 we propose our approach Grad-CAM and Guided Grad-CAM. In sections 4 and 5 we evaluate the localization ability, class-discriminativeness, trustworthiness and faithfulness of Grad-CAM. In section 6 we show certain use cases of Grad-CAM such as diagnosing image classification CNNs and identifying biases in datasets. In section 7 we provide a way to obtain textual explanations with Grad-CAM. In section 8 we show how Grad-CAM can be applied to vision and language models: image captioning and Visual Question Answering (VQA).

2 Related Work

Our work draws on recent work in CNN visualizations, model trust assessment, and weakly-supervised localization.

Visualizing CNNs. A number of previous works [51, 53, 57, 19] have visualized CNN predictions by highlighting ‘important’ pixels (i.e. pixels for which a change in intensity has the most impact on the prediction score). Specifically, Simonyan et al. [51] visualize partial derivatives of predicted class scores w.r.t. pixel intensities, while Guided Backpropagation [53] and Deconvolution [57] make modifications to ‘raw’ gradients that result in qualitative improvements. These approaches are compared in [40]. Despite producing fine-grained visualizations, these methods are not class-discriminative. Visualizations with respect to different classes are nearly identical (see Figures 1b and 1h).
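To make the idea of a pixel-gradient visualization concrete, the following minimal sketch computes the partial derivatives of a class score with respect to input intensities for a tiny one-hidden-layer ReLU scorer. The model, its weights (`W1`, `w2`) and the input `x` are hypothetical toy values chosen for illustration, not a network from the paper.

```python
import numpy as np

def class_score(x, W1, w2):
    """Toy class score: a linear layer, a ReLU, then a linear read-out."""
    hidden = np.maximum(W1 @ x, 0.0)
    return float(w2 @ hidden)

def pixel_gradient(x, W1, w2):
    """Partial derivatives of the score w.r.t. each input intensity.

    By the chain rule, only hidden units with a positive pre-activation
    pass gradient back to the input.
    """
    active = (W1 @ x > 0).astype(float)
    return W1.T @ (w2 * active)

# Hypothetical 4-"pixel" input and weights.
x = np.array([1.0, 2.0, 0.5, 0.0])
W1 = np.array([[1.0, -1.0, 0.0, 2.0],
               [0.5, 0.5, 1.0, 0.0]])
w2 = np.array([3.0, 2.0])

grad = pixel_gradient(x, W1, w2)
saliency = np.abs(grad)  # magnitude per pixel, as typically displayed
```

The resulting map has the same resolution as the input, which is why such visualizations are fine-grained.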

Other visualization methods synthesize images to maximally activate a network unit [51, 16] or invert a latent representation [41, 15]. Although these can be high-resolution and class-discriminative, they are not specific to a single input image and visualize a model overall.

Assessing Model Trust. Motivated by notions of interpretability [36] and assessing trust in models [47], we evaluate Grad-CAM visualizations in a manner similar to [47] via human studies to show that they can be important tools for users to evaluate and place trust in automated systems.

Aligning Gradient-based Importances. Selvaraju et al. [48] proposed an approach that uses the gradient-based neuron importances introduced in our work, and maps them to class-specific domain knowledge from humans in order to learn classifiers for novel classes. In follow-up work, Selvaraju et al. [49] proposed an approach to align gradient-based importances to human attention maps in order to ground vision and language models.

Weakly-supervised localization. Another relevant line of work is weakly-supervised localization in the context of CNNs, where the task is to localize objects in images using holistic image class labels only [8, 43, 44, 59].

Most relevant to our approach is the Class Activation Mapping (CAM) approach to localization [59]. This approach modifies image classification CNN architectures replacing fully-connected layers with convolutional layers and global average pooling [34], thus achieving class-specific feature maps. Others have investigated similar methods using global max pooling [44] and log-sum-exp pooling [45].


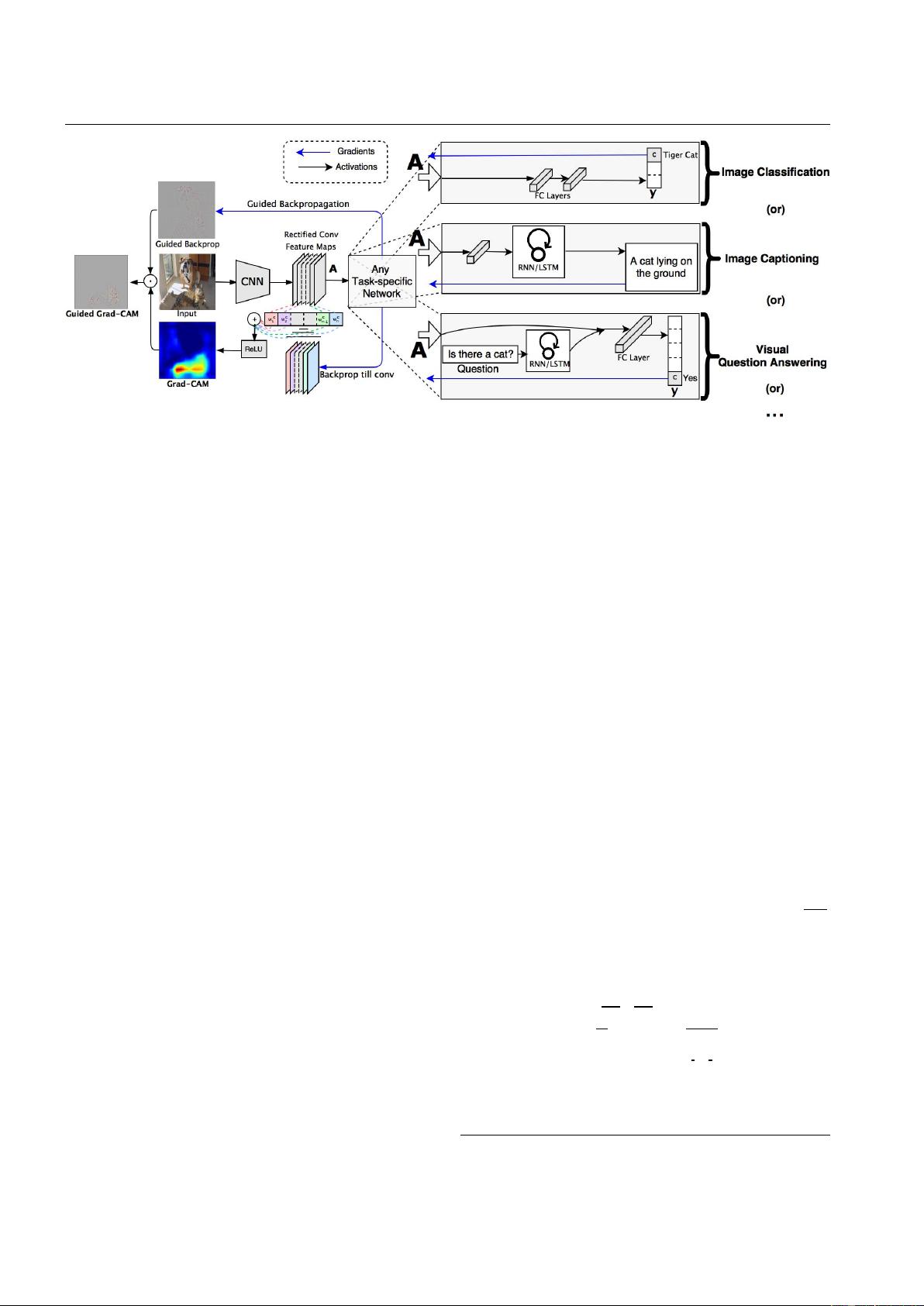

Fig. 2: Grad-CAM overview: Given an image and a class of interest (e.g., ‘tiger cat’ or any other type of differentiable output) as input, we forward propagate the image through the CNN part of the model and then through task-specific computations to obtain a raw score for the category. The gradients are set to zero for all classes except the desired class (tiger cat), which is set to 1. This signal is then backpropagated to the rectified convolutional feature maps of interest, which we combine to compute the coarse Grad-CAM localization (blue heatmap) which represents where the model has to look to make the particular decision. Finally, we pointwise multiply the heatmap with guided backpropagation to get Guided Grad-CAM visualizations which are both high-resolution and concept-specific.
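The final fusion step in the caption, pointwise multiplication of the heatmap with guided backpropagation, can be sketched as follows. This is an illustrative sketch under stated assumptions: the function name `guided_grad_cam` is hypothetical, both inputs are assumed to be precomputed 2-D arrays, and nearest-neighbour upsampling via `np.kron` is used here only for simplicity (it requires the input resolution to be an integer multiple of the heatmap size; a smoother interpolation could be substituted).

```python
import numpy as np

def guided_grad_cam(coarse_map, guided_backprop):
    """Upsample the coarse heatmap to input resolution, then multiply element-wise.

    coarse_map:      2-D array, the low-resolution Grad-CAM heatmap.
    guided_backprop: 2-D array at input resolution (one channel).
    """
    rows, cols = guided_backprop.shape
    r, c = coarse_map.shape
    # Nearest-neighbour upsampling: every heatmap cell becomes a constant block.
    upsampled = np.kron(coarse_map, np.ones((rows // r, cols // c)))
    return upsampled * guided_backprop

# Toy values (hypothetical): a 2 x 2 heatmap and a 4 x 4 guided-backprop map.
coarse = np.array([[0.0, 1.0],
                   [0.5, 0.0]])
guided = np.full((4, 4), 2.0)

fused = guided_grad_cam(coarse, guided)
```

Regions where the coarse heatmap is zero are suppressed entirely, so only fine-grained detail inside the class-relevant regions survives.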

A drawback of CAM is that it requires feature maps to directly precede softmax layers, so it is only applicable to a particular kind of CNN architecture, one that performs global average pooling over convolutional maps immediately prior to prediction (i.e. conv feature maps → global average pooling → softmax layer). Such architectures may achieve inferior accuracies compared to general networks on some tasks (e.g. image classification) or may simply be inapplicable to any other tasks (e.g. image captioning or VQA). We introduce a new way of combining feature maps using the gradient signal that does not require any modification in the network architecture. This allows our approach to be applied to off-the-shelf CNN-based architectures, including those for image captioning and visual question answering. For a fully-convolutional architecture, CAM is a special case of Grad-CAM.
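The special-case claim can be checked numerically on a toy CAM-style head (conv feature maps → global average pooling → linear layer). In this sketch, which uses hypothetical random activations `A` and class weights `w_c`, the score is $y^c = \sum_k w^c_k \cdot \frac{1}{Z}\sum_{i,j} A^k_{ij}$, so each gradient $\partial y^c / \partial A^k_{ij}$ equals $w^c_k / Z$ and the spatially averaged gradient is proportional to the CAM weight. Gradients are taken by finite differences to avoid assuming any autodiff library, and no ReLU is applied to either map.

```python
import numpy as np

def gap_linear_score(A, w_c):
    """Score y^c of a CAM-style head: global average pooling, then a linear layer."""
    return float(w_c @ A.mean(axis=(1, 2)))

def numerical_gradient(A, w_c, eps=1e-6):
    """Central finite differences of y^c with respect to every activation A^k_ij."""
    grad = np.zeros_like(A)
    for idx in np.ndindex(*A.shape):
        step = np.zeros_like(A)
        step[idx] = eps
        grad[idx] = (gap_linear_score(A + step, w_c)
                     - gap_linear_score(A - step, w_c)) / (2 * eps)
    return grad

rng = np.random.default_rng(0)
K, u, v = 3, 4, 4                     # hypothetical sizes
A = rng.random((K, u, v))             # feature maps A^k
w_c = np.array([0.7, -1.2, 2.0])      # linear weights for class c

Z = u * v
alpha = numerical_gradient(A, w_c).mean(axis=(1, 2))  # averaged gradients per map

cam_map = np.tensordot(w_c, A, axes=1)         # CAM: weights come from the linear layer
grad_cam_map = np.tensordot(alpha, A, axes=1)  # same combination with gradient weights
```

Here `alpha` matches `w_c / Z`, so the two maps differ only by the constant factor `Z`, which disappears once a heatmap is normalized for display.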

Other methods approach localization by classifying perturbations of the input image. Zeiler and Fergus [57] perturb inputs by occluding patches and classifying the occluded image, typically resulting in lower classification scores for relevant objects when those objects are occluded. This principle is applied for localization in [5]. Oquab et al. [43] classify many patches containing a pixel then average these patch-wise scores to provide the pixel’s class-wise score. Unlike these, our approach achieves localization in one shot; it only requires a single forward and a partial backward pass per image and thus is typically an order of magnitude more efficient. In recent work, Zhang et al. [58] introduce contrastive Marginal Winning Probability (c-MWP), a probabilistic Winner-Take-All formulation for modelling the top-down attention for neural classification models which can highlight discriminative regions. This is computationally more expensive than Grad-CAM and only works for image classification CNNs. Moreover, Grad-CAM outperforms c-MWP in quantitative and qualitative evaluations (see Sec. 4.1 and Sec. D).
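The cost difference is easy to see in a sketch of occlusion-based localization: every patch position needs its own forward pass. The code below is a minimal illustration of the general occlusion principle, not the exact procedure of [57]; `occlusion_sensitivity`, the patch size, the fill value and the stand-in scorer `toy_score` are all hypothetical.

```python
import numpy as np

def occlusion_sensitivity(image, score_fn, patch=2, fill=0.0):
    """Occlude one patch at a time and record how much the class score drops.

    Requires one call to score_fn per patch position, plus one for the baseline.
    """
    h, w = image.shape
    base = score_fn(image)
    drop = np.zeros((h // patch, w // patch))
    for r in range(0, h - patch + 1, patch):
        for c in range(0, w - patch + 1, patch):
            occluded = image.copy()
            occluded[r:r + patch, c:c + patch] = fill
            drop[r // patch, c // patch] = base - score_fn(occluded)
    return drop

# Hypothetical stand-in for a classifier: the score depends only on the
# top-left 2 x 2 block of the image.
def toy_score(img):
    return float(img[:2, :2].sum())

image = np.ones((4, 4))
drop = occlusion_sensitivity(image, toy_score)
```

Only the top-left cell of `drop` is non-zero, which localizes the evidence, but the number of forward passes grows with the number of patch positions, whereas a gradient-based map needs a single forward and a partial backward pass.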

3 Grad-CAM

A number of previous works have asserted that deeper representations in a CNN capture higher-level visual constructs [6, 41]. Furthermore, convolutional layers naturally retain spatial information which is lost in fully-connected layers, so we can expect the last convolutional layers to have the best compromise between high-level semantics and detailed spatial information. The neurons in these layers look for semantic class-specific information in the image (say object parts). Grad-CAM uses the gradient information flowing into the last convolutional layer of the CNN to assign importance values to each neuron for a particular decision of interest. Although our technique is fairly general in that it can be used to explain activations in any layer of a deep network, in this work, we focus on explaining output layer decisions only.

As shown in Fig. 2, in order to obtain the class-discriminative localization map Grad-CAM $L^c_{\text{Grad-CAM}} \in \mathbb{R}^{u \times v}$ of width $u$ and height $v$ for any class $c$, we first compute the gradient of the score for class $c$, $y^c$ (before the softmax), with respect to feature map activations $A^k$ of a convolutional layer, i.e. $\frac{\partial y^c}{\partial A^k}$. These gradients flowing back are global-average-pooled² over the width and height dimensions (indexed by $i$ and $j$ respectively) to obtain the neuron importance weights $\alpha^c_k$:

$$\alpha^c_k = \overbrace{\frac{1}{Z} \sum_i \sum_j}^{\text{global average pooling}} \; \underbrace{\frac{\partial y^c}{\partial A^k_{ij}}}_{\text{gradients via backprop}} \qquad (1)$$
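As a minimal sketch of Eq. (1), the weights $\alpha^c_k$ are simply the spatial means of the gradient maps. The arrays below are hypothetical toy values standing in for activations and backpropagated gradients that a real framework would supply. The second function, a ReLU applied to the $\alpha$-weighted sum of feature maps, is an assumption based on the overview in Fig. 2 (rectified feature maps that are combined into a coarse map); the exact combination step lies outside this excerpt.

```python
import numpy as np

def neuron_importance_weights(grads):
    """Eq. (1): average dy^c/dA^k_ij over the spatial axes i, j.

    grads has shape (K, u, v), one gradient map per feature map k.
    Returns alpha with shape (K,).
    """
    return grads.mean(axis=(1, 2))

def coarse_map(activations, grads):
    """Assumed combination step: ReLU of the alpha-weighted sum of feature maps."""
    alpha = neuron_importance_weights(grads)
    weighted = np.tensordot(alpha, activations, axes=1)  # shape (u, v)
    return np.maximum(weighted, 0.0)

# Toy values (hypothetical): K = 2 feature maps of size 2 x 2.
A = np.array([[[1.0, 2.0], [3.0, 4.0]],
              [[1.0, 0.0], [0.0, 1.0]]])
dY_dA = np.array([[[0.5, 0.5], [0.5, 0.5]],
                  [[-1.0, -1.0], [-1.0, -1.0]]])

alpha = neuron_importance_weights(dY_dA)
heatmap = coarse_map(A, dY_dA)
```

In this toy case the second feature map receives a negative weight, so it lowers the combined map wherever it is active, and the rectification clips the resulting negative cell to zero.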

During computation of $\alpha^c_k$ while backpropagating gradients with respect to activations, the exact computation amounts

² Empirically we found global-average-pooling to work better than global-max-pooling as can be found in the Appendix.
