没有合适的资源?快使用搜索试试~ 我知道了~

温馨提示

内容概要:本文提出了一种基于尺度空间流(Scale-space flow)的新型运动补偿方法,用于端到端优化的视频压缩。与传统的双线性插值法相比,尺度空间流能够更好地处理遮挡和快速运动等困难情况。实验结果显示,该方法在PSNR和MS-SSIM指标上均优于现有的一些深度学习方法和传统视频编码标准如H.264和HEVC。同时,该模型采用简化的架构和训练流程,不需要预训练的光流网络。 适合人群:视频压缩领域的研究人员和技术开发人员。 使用场景及目标:适用于低延迟视频流传输场景,旨在提供更好的压缩性能,减少带宽占用并保持较高的图像质量。 其他说明:文中介绍了具体的模型架构、训练数据和方法,并提供了详细的实验结果对比。未来的研究方向可能包括探索更多复杂的架构以及改进对动画视频的处理能力。

资源推荐

资源详情

资源评论

Scale-space flow for end-to-end optimized video compression

Eirikur Agustsson, David Minnen, Nick Johnston, Johannes Ballé, Sung Jin Hwang, George Toderici

Google Research, Perception Team

{eirikur, dminnen, nickj, jballe, sjhwang, gtoderici}@google.com

Abstract

Despite considerable progress on end-to-end optimized

deep networks for image compression, video coding re-

mains a challenging task. Recently proposed methods for

learned video compression use optical flow and bilinear

warping for motion compensation and show competitive

rate–distortion performance relative to hand-engineered

codecs like H.264 and HEVC. However, these learning-

based methods rely on complex architectures and training

schemes including the use of pre-trained optical flow net-

works, sequential training of sub-networks, adaptive rate

control, and buffering intermediate reconstructions to disk

during training. In this paper, we show that a generalized

warping operator that better handles common failure cases,

e.g. disocclusions and fast motion, can provide competi-

tive compression results with a greatly simplified model and

training procedure. Specifically, we propose scale-space

flow, an intuitive generalization of optical flow that adds

a scale parameter to allow the network to better model un-

certainty. Our experiments show that a low-latency video

compression model (no B-frames) using scale-space flow

for motion compensation can outperform analogous state-

of-the art learned video compression models while being

trained using a much simpler procedure and without any

pre-trained optical flow networks.

1. Introduction

Recently, there has been significant progress in the

area of end-to-end optimized image compression, which

went from barely matching JPEG [

33] to methods such

as [

8, 26, 5] that can outperform the best hand-engineered

codecs when evaluated in terms of multi-scale structural

similarity (MS-SSIM) [

36], PSNR, and subjective quality

assessments from user studies. While this is very encourag-

ing, over 60% of downstream internet traffic currently con-

sists of streaming video data [

1], which means that in order

to maximize impact on bandwidth reduction, researchers

should focus on video compression.

Since the area of neural video compression is in early

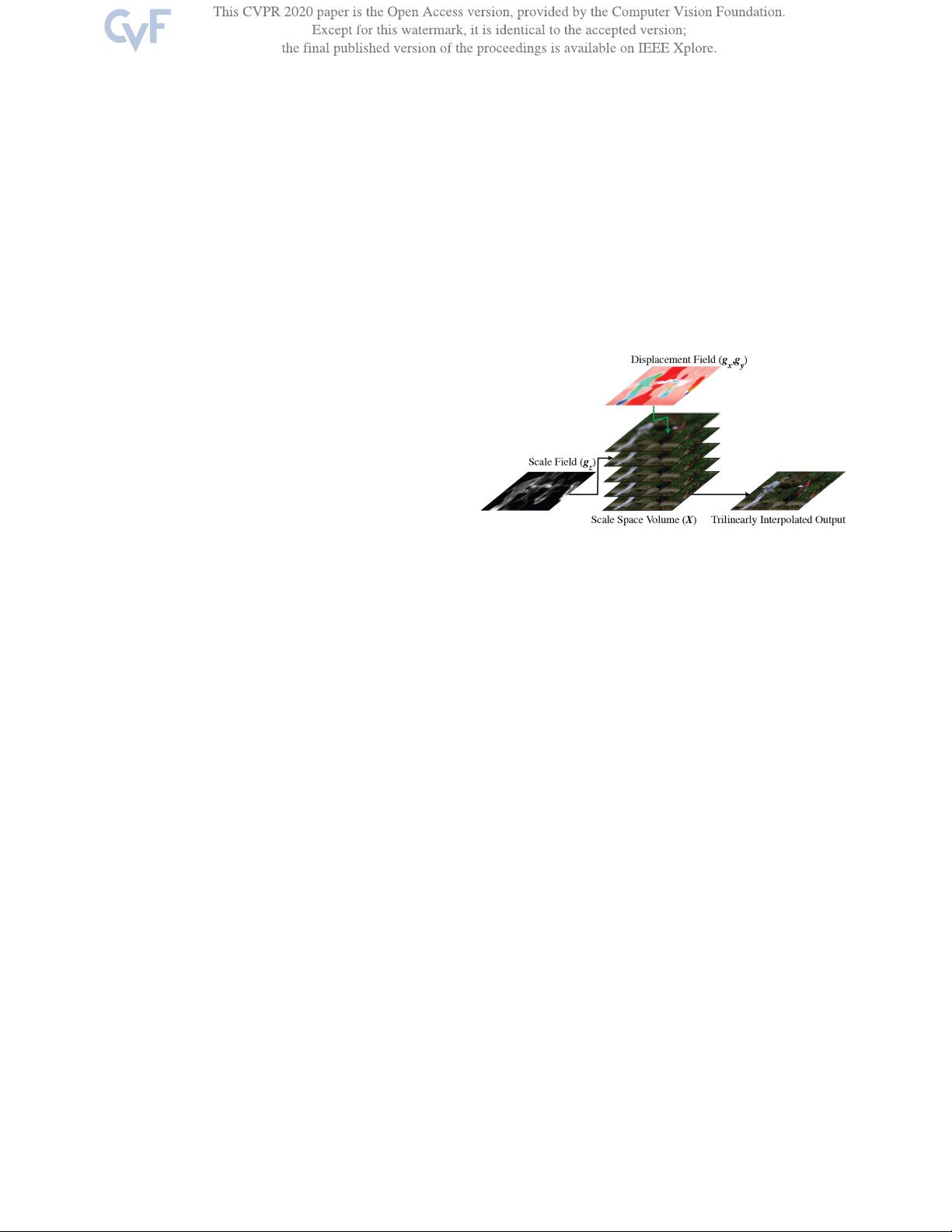

Figure 1. Our proposed scale-space warping module. From the

source image x, we construct a fixed-resolution scale-space vol-

ume X. In contrast to bilinear warping, where the warped output

is sampled directly from the 2-D source image using a 2-channel

displacement field (f

x

, f

y

), we trilinearly sample from the 3-D

scale-space volume using a 3-channel displacements+scale field

(g

x

, g

y

, g

z

). The scale value gives a continuous, differentiable

knob that can adaptively blur the source image when warping if

the warp is not a good prediction of the target image.

stages, it is not yet clear which network architectures are

most effective for different application scenarios. We can

roughly categorize the existing research methods into the

following three categories:

1) 3D autoencoders are a natural extension of the work

done for learned image compression, but [

27] demonstrated

that representing video using spatiotemporal transforma-

tions alone does not lead to better performance compared

to standard methods. However, when combined with tem-

porally conditioned entropy models [

19], such methods can

perform on par with standard methods in terms of MS-

SSIM.

2) Frame interpolation methods use neural networks to

temporally interpolate between frames in a video and then

encode the residuals [

38, 17]. This approach is commonly

used in standard video coding (called “bidirectional predic-

tion” or “B-frame coding”) [

37], but has the disadvantage

that it is generally not suitable for low-latency streaming

since such methods need information “from the future” to

decode each B-frame. However, in standard codecs, the use

of B-frames typically provides the best rate–distortion (RD)

1

8503

资源评论

码流怪侠

- 粉丝: 2w+

- 资源: 89

上传资源 快速赚钱

我的内容管理

展开

我的内容管理

展开

我的资源

快来上传第一个资源

我的资源

快来上传第一个资源

我的收益 登录查看自己的收益

我的收益 登录查看自己的收益 我的积分

登录查看自己的积分

我的积分

登录查看自己的积分

我的C币

登录后查看C币余额

我的C币

登录后查看C币余额

我的收藏

我的收藏  我的下载

我的下载  下载帮助

下载帮助

前往需求广场,查看用户热搜

前往需求广场,查看用户热搜最新资源

资源上传下载、课程学习等过程中有任何疑问或建议,欢迎提出宝贵意见哦~我们会及时处理!

点击此处反馈

安全验证

文档复制为VIP权益,开通VIP直接复制

信息提交成功

信息提交成功