ONCE-FOR-ALL TRAIN ONE NETWORK AND SPECIALIZE IT FOR EFFICIENT D...

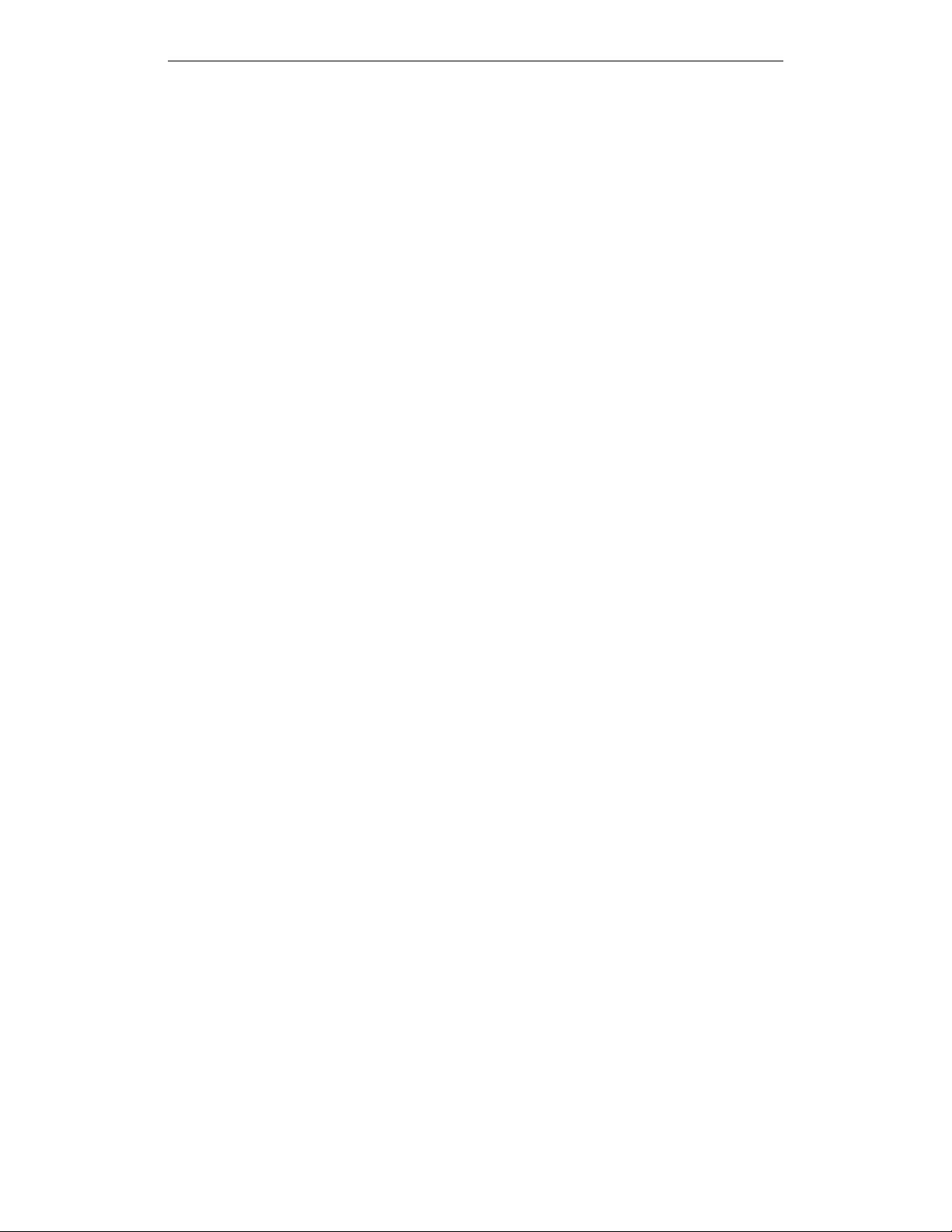

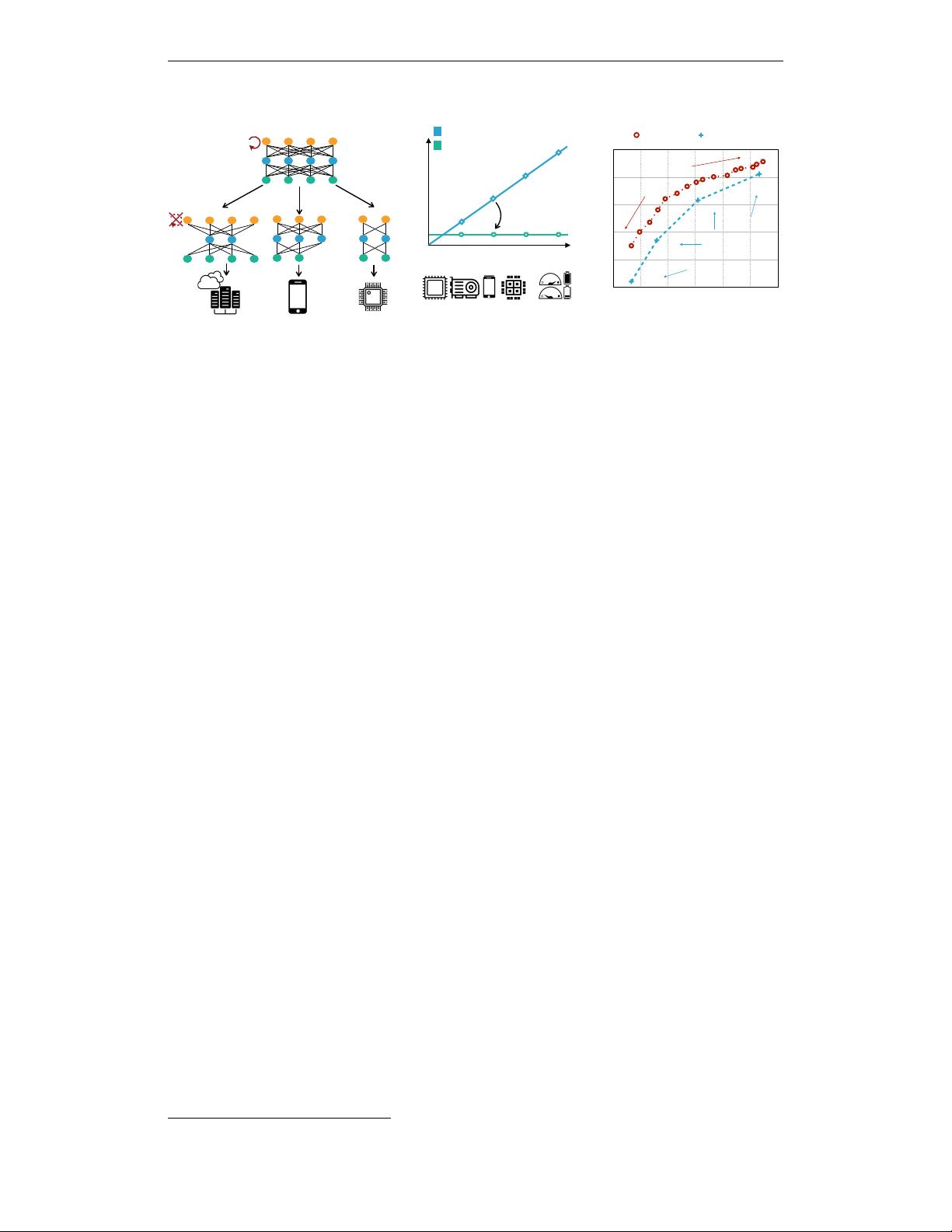

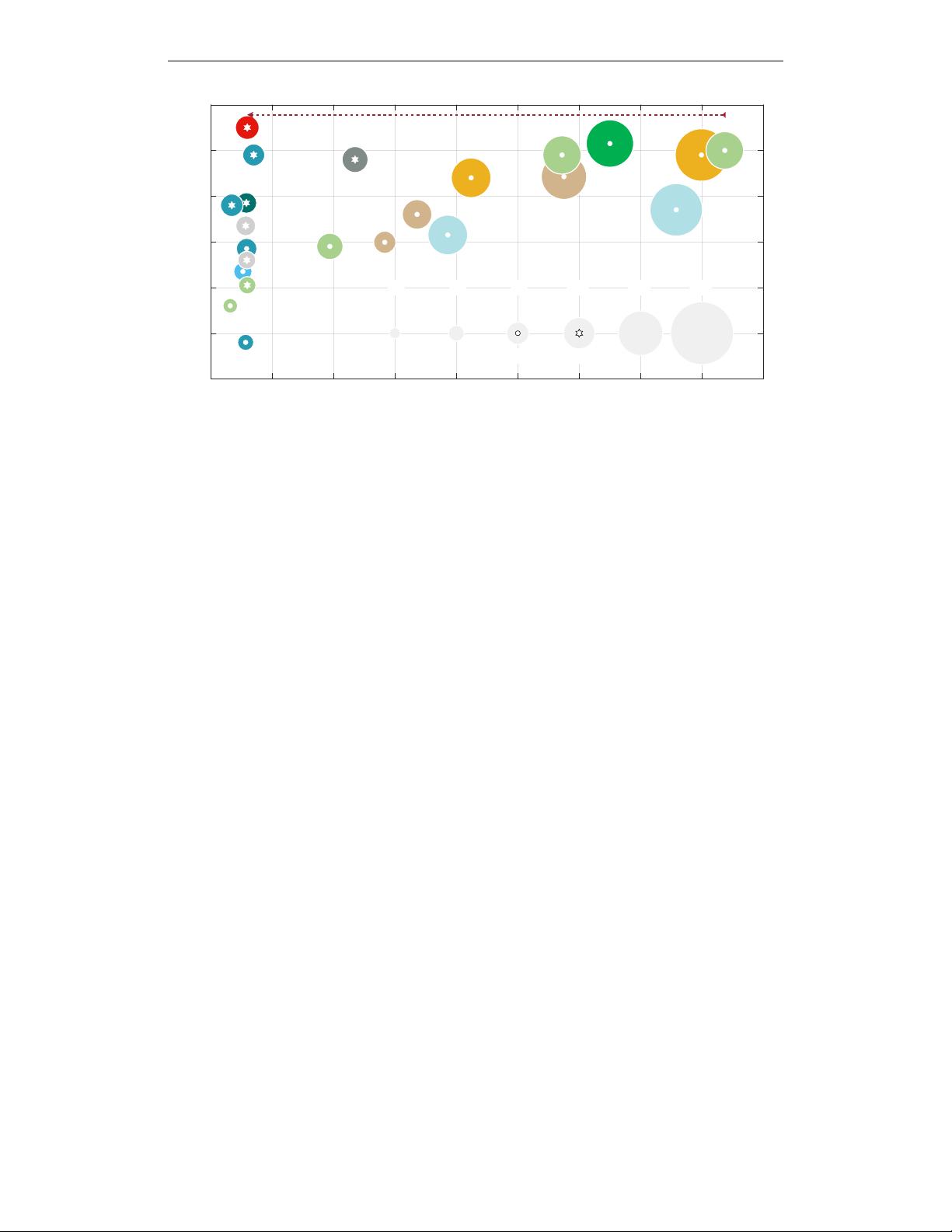

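This document introduces Once-for-All (OFA), a new strategy for training and deploying neural networks. It targets efficient inference across many devices and resource constraints, especially on edge devices. Conventional approaches either design networks by hand or use neural architecture search (NAS) to find a specialized network, which is then trained from scratch for every deployment scenario. This is computationally prohibitive, causes substantial CO2 emissions, and therefore does not scale. In this work, the authors propose training a single OFA network that supports diverse architectural settings by decoupling training from search, which reduces cost. A specialized sub-network can then be obtained quickly by selecting it from the OFA network, with no additional training. To train the OFA network efficiently, the authors also propose a novel progressive shrinking algorithm. This is a generalized pruning method that reduces model size along more dimensions than conventional pruning: depth, width, kernel size, and resolution. It yields a very large number of sub-networks (>10^19) that fit different hardware platforms and latency constraints while matching the accuracy of training each of them independently. On diverse edge devices, OFA consistently outperforms state-of-the-art NAS methods: it reaches up to 4.0% higher ImageNet top-1 accuracy than MobileNetV3, or the same accuracy while running 1.5× faster than MobileNetV3 and 2.6× faster than EfficientNet in measured latency. OFA also sets a new state of the art of 80.0% ImageNet top-1 accuracy under the mobile setting (<600M MACs), while reducing GPU hours and CO2 emissions by many orders of magnitude.

The key advantage of the OFA network is that it adapts to diverse hardware platforms and latency constraints without new training for each target environment. OFA pre-trains one full network that contains many candidate sub-networks and then selects the sub-network best suited to a given hardware environment. This removes the main limitation of conventional NAS methods, which retrain for every target, greatly reduces the compute needed to optimize for specific hardware, and makes it more practical to deploy high-performance neural networks on resource-constrained devices.

The progressive shrinking algorithm is another key contribution. Besides depth, width, and kernel size, it also varies the input image resolution, so the network's size and computational cost can be reduced along several dimensions at once. This multi-dimensional pruning gives the OFA network a high degree of flexibility: it can be fitted to different hardware limits and application requirements while preserving inference accuracy.

OFA's results were validated on ImageNet, where it reached a new state-of-the-art top-1 accuracy under the mobile setting. When tested across many devices and latency constraints, the OFA network outperformed competing methods. OFA was also the winning solution in the 3rd and 4th Low Power Computer Vision Challenge (LPCVC), which demonstrates its practical value.

Overall, OFA represents a new paradigm for efficient neural network deployment across many devices: a single full network, trained once, can be adapted quickly to different hardware and latency requirements. It brings new optimization ideas to computer vision and opens new prospects for deep learning on mobile and edge devices. The source code and pre-trained models have been released on GitHub for researchers and developers to download and use, which should further advance research on efficient deep learning deployment and its applications.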
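The >10^19 sub-network count can be reproduced with a short calculation. This sketch assumes the search space described in the OFA paper: 5 units, each with a depth chosen from {2, 3, 4}, and every layer independently choosing a kernel size from {3, 5, 7} and a width expansion ratio from {3, 4, 6}. These constants are assumptions here because the summary above does not list them.

```python
# Assumed OFA search space (constants taken as assumptions for this sketch).
NUM_UNITS = 5
DEPTH_CHOICES = (2, 3, 4)
KERNEL_CHOICES = (3, 5, 7)
EXPAND_CHOICES = (3, 4, 6)


def count_subnetworks(num_units, depth_choices, kernel_choices, expand_choices):
    """Count distinct architectures when every layer picks kernel and width independently."""
    # Options for a single layer: 3 kernel sizes * 3 expansion ratios = 9.
    per_layer = len(kernel_choices) * len(expand_choices)
    # A unit of depth d has per_layer ** d configurations; sum over allowed depths.
    per_unit = sum(per_layer ** d for d in depth_choices)  # 9^2 + 9^3 + 9^4 = 7371
    # Units are configured independently, so multiply across units.
    return per_unit ** num_units


total = count_subnetworks(NUM_UNITS, DEPTH_CHOICES, KERNEL_CHOICES, EXPAND_CHOICES)
print(f"{total:.3e}")  # 2.176e+19
```

The count covers architectures only. Input resolution is varied separately, so each architecture can additionally run at several resolutions.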
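Elastic kernel size relies on weight sharing: a smaller kernel reuses the center of the largest one, so one set of weights serves all kernel sizes. The sketch below shows only this center-cropping step on plain nested lists. The OFA paper also applies learned kernel transformation matrices to the cropped weights, and this sketch leaves that step out. The function name `center_crop_kernel` is hypothetical.

```python
def center_crop_kernel(kernel, target_size):
    """Return the central target_size x target_size window of a square 2-D kernel.

    Sketch of weight sharing for elastic kernel size: smaller kernels reuse the
    centre of the largest kernel. The learned kernel transformation is omitted.
    """
    size = len(kernel)
    if any(len(row) != size for row in kernel):
        raise ValueError("kernel must be square")
    if target_size > size or (size - target_size) % 2 != 0:
        raise ValueError("target_size must be <= size and have the same parity")
    start = (size - target_size) // 2
    return [row[start:start + target_size] for row in kernel[start:start + target_size]]


# A 7x7 kernel whose entry at (r, c) is 10 * r + c, so the crops are easy to read.
k7 = [[10 * r + c for c in range(7)] for r in range(7)]
k5 = center_crop_kernel(k7, 5)  # rows and columns 1..5 of k7
k3 = center_crop_kernel(k7, 3)  # rows and columns 2..4 of k7
print(k3)  # [[22, 23, 24], [32, 33, 34], [42, 43, 44]]
```

Because the crops are nested, cropping the 5x5 kernel down to 3x3 gives the same weights as cropping the 7x7 kernel directly. This nesting is what lets all kernel sizes share parameters.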
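Elastic width keeps only the most important channels of a layer. This sketch assumes that importance is measured by the L1 norm of each output channel's weights, which is the criterion the OFA paper describes. In a real network, the next layer's input channels would have to be reordered the same way; that step is omitted. All names and weight values here are hypothetical.

```python
def l1_norm(weights):
    """Sum of absolute values of one output channel's flattened weights."""
    return sum(abs(w) for w in weights)


def sort_channels_by_importance(channels):
    """Return channel indices ordered from most to least important (largest L1 norm first)."""
    return sorted(range(len(channels)), key=lambda i: l1_norm(channels[i]), reverse=True)


def select_width(channels, width):
    """Keep the `width` most important channels and return them with their indices.

    The importance order is shared across widths, so a narrower selection is
    always a prefix of a wider one (nested weight sharing).
    """
    if not 0 < width <= len(channels):
        raise ValueError("width must be in 1..len(channels)")
    order = sort_channels_by_importance(channels)[:width]
    return [channels[i] for i in order], order


# Four hypothetical output channels, each with three flattened weights.
channels = [
    [0.1, -0.2, 0.1],   # L1 = 0.4
    [0.9, 0.5, -0.6],   # L1 = 2.0
    [-0.3, 0.3, 0.2],   # L1 = 0.8
    [1.5, -1.0, 0.5],   # L1 = 3.0
]
kept, kept_idx = select_width(channels, 2)
print(kept_idx)  # [3, 1]
```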
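Progressive shrinking trains the full network first and then gradually unlocks smaller settings, one dimension at a time. The sketch below is a simplified schedule, assuming the stage order full network → elastic kernel → elastic depth → elastic width, with input resolution sampled at every stage. Elastic depth is modeled by keeping the first `d` layers of a unit. The stage definitions, resolution range, and names such as `sample_subnet` are hypothetical. The actual algorithm also unlocks smaller depths and widths in finer steps and trains each sampled sub-network, and neither is shown here.

```python
import random

# Hypothetical search space for this sketch (largest value first).
KERNELS = (7, 5, 3)
DEPTHS = (4, 3, 2)
EXPANDS = (6, 4, 3)
RESOLUTIONS = tuple(range(128, 225, 4))  # assumed input resolutions 128..224

# Simplified schedule: each stage unlocks one more elastic dimension.
STAGES = [
    {"kernel": KERNELS[:1], "depth": DEPTHS[:1], "expand": EXPANDS[:1]},  # full network
    {"kernel": KERNELS, "depth": DEPTHS[:1], "expand": EXPANDS[:1]},      # + elastic kernel
    {"kernel": KERNELS, "depth": DEPTHS, "expand": EXPANDS[:1]},          # + elastic depth
    {"kernel": KERNELS, "depth": DEPTHS, "expand": EXPANDS},              # + elastic width
]


def sample_subnet(stage, num_units=5, rng=random):
    """Sample one sub-network configuration that is allowed at the given stage."""
    space = STAGES[stage]
    units = []
    for _ in range(num_units):
        depth = rng.choice(space["depth"])
        # Elastic depth: a unit of depth d keeps its first d layers and skips the rest.
        layers = [
            {"kernel": rng.choice(space["kernel"]), "expand": rng.choice(space["expand"])}
            for _ in range(depth)
        ]
        units.append({"depth": depth, "layers": layers})
    return {"resolution": rng.choice(RESOLUTIONS), "units": units}


# Usage: draw one configuration per stage with a seeded generator.
demo_rng = random.Random(0)
for stage in range(len(STAGES)):
    cfg = sample_subnet(stage, rng=demo_rng)
    print(stage, cfg["resolution"], [u["depth"] for u in cfg["units"]])
```

Early stages only ever sample the largest settings. As a result, the shared weights are well trained before smaller sub-networks, which reuse them, start to be sampled.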
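Specializing OFA for a device means searching the trained network for the best sub-network that meets a latency budget, without retraining. The OFA paper combines an evolutionary search with an accuracy predictor and a latency lookup table. This sketch instead uses plain random search for brevity. Its latency table holds made-up toy numbers rather than measurements, and its accuracy proxy is a hypothetical stand-in for a learned predictor.

```python
import random

# Hypothetical per-layer latency lookup table in milliseconds, keyed by
# (kernel size, expansion ratio). Toy numbers, not measurements.
LATENCY_MS = {
    (3, 3): 0.8, (3, 4): 1.0, (3, 6): 1.4,
    (5, 3): 1.1, (5, 4): 1.4, (5, 6): 1.9,
    (7, 3): 1.5, (7, 4): 1.9, (7, 6): 2.6,
}


def estimate_latency(layers):
    """Latency estimate as the sum of per-layer lookup-table entries."""
    return sum(LATENCY_MS[layer] for layer in layers)


def proxy_accuracy(layers):
    """Toy stand-in for an accuracy predictor: larger layers score higher."""
    return sum(k * e for k, e in layers)


def sample_layers(rng, min_depth=8, max_depth=20):
    """Sample a flat list of (kernel, expand) layer choices with a random depth."""
    depth = rng.randint(min_depth, max_depth)
    return [rng.choice(list(LATENCY_MS)) for _ in range(depth)]


def search(latency_budget_ms, num_samples=2000, seed=0):
    """Random search: return the best-scoring sampled sub-network within budget, or None."""
    rng = random.Random(seed)
    best, best_score = None, float("-inf")
    for _ in range(num_samples):
        layers = sample_layers(rng)
        if estimate_latency(layers) > latency_budget_ms:
            continue  # violates the latency constraint
        score = proxy_accuracy(layers)
        if score > best_score:
            best, best_score = layers, score
    return best


# Usage: a looser budget can only admit more candidates, never fewer.
for budget in (15.0, 25.0):
    subnet = search(budget)
    print(budget, len(subnet), round(estimate_latency(subnet), 2))
```

Because the same seed yields the same sample sequence, every candidate that fits a tight budget also fits a looser one. The result therefore scores at least as well as the budget grows.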
