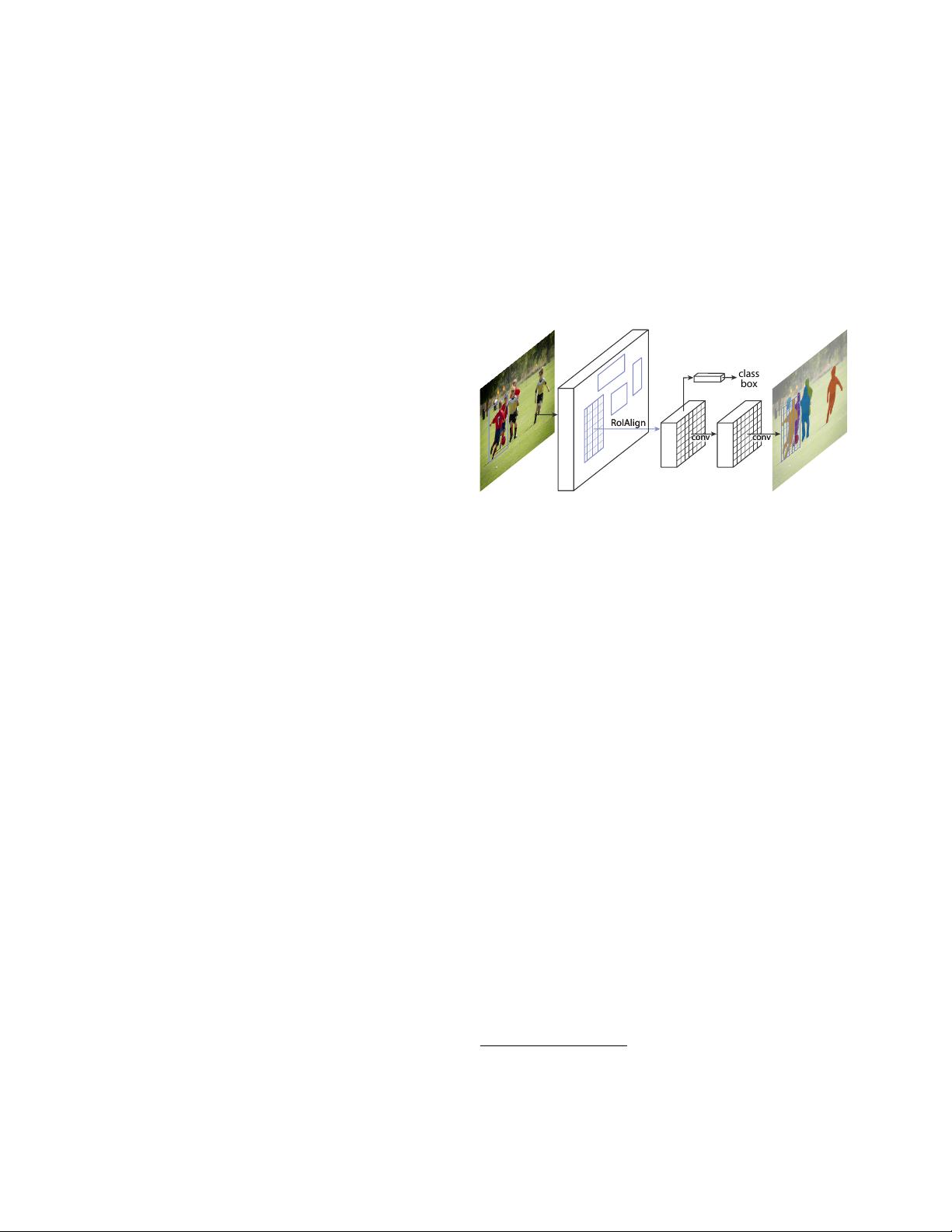

Figure 2. Mask R-CNN results on the COCO test set. These results are based on ResNet-101 [19], achieving a mask AP of 35.7 and running at 5 fps. Masks are shown in color, and bounding box, category, and confidences are also shown.

a seemingly minor change, RoIAlign has a large impact: it improves mask accuracy by a relative 10% to 50%, showing bigger gains under stricter localization metrics. Second, we found it essential to decouple mask and class prediction: we predict a binary mask for each class independently, without competition among classes, and rely on the network’s RoI classification branch to predict the category. In contrast, FCNs usually perform per-pixel multi-class categorization, which couples segmentation and classification, and based on our experiments works poorly for instance segmentation.
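
The difference between decoupled and coupled prediction can be illustrated with a minimal sketch. It is not the paper's implementation: the function names, the nested-list layout `mask_logits[k][i][j]`, and the 0.5 threshold are assumptions chosen for illustration. The decoupled variant applies a per-pixel sigmoid to one class's mask only, with the class chosen by a separate score vector standing in for the RoI classification branch; the coupled variant takes a per-pixel softmax across classes, so the classes compete at every pixel.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def softmax(xs):
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def decoupled_mask(mask_logits, class_scores, threshold=0.5):
    """Pick the class from class_scores, then binarize only that class's mask.

    mask_logits[k][i][j] is the (hypothetical) logit of class k at pixel (i, j).
    class_scores[k] stands in for the output of a separate classification branch.
    """
    k = max(range(len(class_scores)), key=lambda c: class_scores[c])
    mask = [[1 if sigmoid(v) >= threshold else 0 for v in row]
            for row in mask_logits[k]]
    return k, mask


def coupled_labels(mask_logits):
    """Per-pixel multi-class labeling: classes compete through a softmax."""
    num_classes = len(mask_logits)
    height, width = len(mask_logits[0]), len(mask_logits[0][0])
    labels = []
    for i in range(height):
        row = []
        for j in range(width):
            probs = softmax([mask_logits[c][i][j] for c in range(num_classes)])
            row.append(max(range(num_classes), key=lambda c: probs[c]))
        labels.append(row)
    return labels


# Toy 2-class, 2x2 RoI (made-up numbers).
toy_logits = [
    [[2.0, 1.0], [-1.0, 0.5]],   # class 0
    [[3.0, -2.0], [-3.0, 0.2]],  # class 1
]
toy_scores = [0.9, 0.1]          # classification branch prefers class 0

chosen_class, binary_mask = decoupled_mask(toy_logits, toy_scores)
label_map = coupled_labels(toy_logits)
```

In the toy data, the top-left pixel is claimed by class 1 under the coupled scheme even though the classification scores favor class 0, whereas the decoupled scheme reads that pixel from the class 0 mask alone.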

Without bells and whistles, Mask R-CNN surpasses all previous state-of-the-art single-model results on the COCO instance segmentation task [28], including the heavily-engineered entries from the 2016 competition winner. As a by-product, our method also excels on the COCO object detection task. In ablation experiments, we evaluate multiple basic instantiations, which allows us to demonstrate the method's robustness and analyze the effects of core factors.

Our models can run at about 200 ms per frame on a GPU, and training on COCO takes one to two days on a single 8-GPU machine. We believe the fast training and test speeds, together with the framework’s flexibility and accuracy, will benefit and ease future research on instance segmentation.

Finally, we showcase the generality of our framework via the task of human pose estimation on the COCO keypoint dataset [28]. By viewing each keypoint as a one-hot binary mask, with minimal modification Mask R-CNN can be applied to detect instance-specific poses. Without tricks, Mask R-CNN surpasses the winner of the 2016 COCO keypoint competition, and at the same time runs at 5 fps. Mask R-CNN, therefore, can be seen more broadly as a flexible framework for instance-level recognition and can be readily extended to more complex tasks.
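
As a rough illustration of treating a keypoint as a one-hot binary mask, the sketch below encodes a single keypoint location as a square mask with exactly one foreground pixel and decodes a score map back to a location by taking its argmax. The helper names, the `(x, y)` convention, and the mask size are hypothetical and chosen for illustration; they are not taken from the paper.

```python
def keypoint_to_one_hot_mask(x, y, size):
    """Return a size x size mask with a single 1 at row y, column x."""
    if not (0 <= x < size and 0 <= y < size):
        raise ValueError("keypoint lies outside the mask")
    return [[1 if (r == y and c == x) else 0 for c in range(size)]
            for r in range(size)]


def mask_to_keypoint(scores):
    """Recover (x, y) as the location of the highest score in a 2D map."""
    cells = ((r, c) for r in range(len(scores)) for c in range(len(scores[0])))
    r, c = max(cells, key=lambda rc: scores[rc[0]][rc[1]])
    return c, r


# One target mask per keypoint type, e.g. a keypoint at x=2, y=1 in a 4x4 RoI grid.
target = keypoint_to_one_hot_mask(2, 1, 4)
recovered = mask_to_keypoint(target)
```

With one such mask per keypoint type, a mask-style output head can be trained to predict a single foreground pixel per keypoint.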

We will release code to facilitate future research.

2. Related Work

R-CNN: The Region-based CNN (R-CNN) approach [13] to bounding-box object detection is to attend to a manageable number of candidate object regions [38, 20] and evaluate convolutional networks [25, 24] independently on each RoI. R-CNN was extended [18, 12] to allow attending to RoIs on feature maps using RoIPool, leading to fast speed and better accuracy. Faster R-CNN [34] advanced this stream by learning the attention mechanism with a Region Proposal Network (RPN). Faster R-CNN is flexible and robust to many follow-up improvements (e.g., [35, 27, 21]), and is the current leading framework in several benchmarks.

Instance Segmentation: Driven by the effectiveness of R-CNN, many approaches to instance segmentation are based on segment proposals. Earlier methods [13, 15, 16, 9] resorted to bottom-up segments [38, 2]. DeepMask [32] and following works [33, 8] learn to propose segment candidates, which are then classified by Fast R-CNN. In these methods, segmentation precedes recognition, which is slow and less accurate. Likewise, Dai et al. [10] proposed a complex multiple-stage cascade that predicts segment proposals from bounding-box proposals, followed by classification. Instead, our method is based on parallel prediction of masks and class labels, which is simpler and more flexible.

Most recently, Li et al. [26] combined the segment proposal system in [8] and object detection system in [11] for “fully convolutional instance segmentation” (FCIS). The common idea in [8, 11, 26] is to predict a set of position-sensitive output channels fully convolutionally. These channels simultaneously address object classes, boxes, and masks, making the system fast. But FCIS exhibits systematic errors on overlapping instances and creates spurious edges (Figure 5), showing that it is challenged by the fundamental difficulties of segmenting instances.

