1041-4347 (c) 2019 IEEE. Personal use is permitted, but republication/redistribution requires IEEE permission. See http://www.ieee.org/publications_standards/publications/rights/index.html for more information.

This article has been accepted for publication in a future issue of this journal, but has not been fully edited. Content may change prior to final publication. Citation information: DOI 10.1109/TKDE.2019.2956700, IEEE

Transactions on Knowledge and Data Engineering

JOURNAL OF L

A

T

E

X CLASS FILES, VOL. 13, NO. 9, AUGUST 2019 1

Fast Stochastic Ordinal Embedding with

Variance Reduction and Adaptive Step Size

Ke Ma, Jinshan Zeng, Jiechao Xiong, Qianqian Xu, Xiaochun Cao, Wei Liu, Yuan Yao

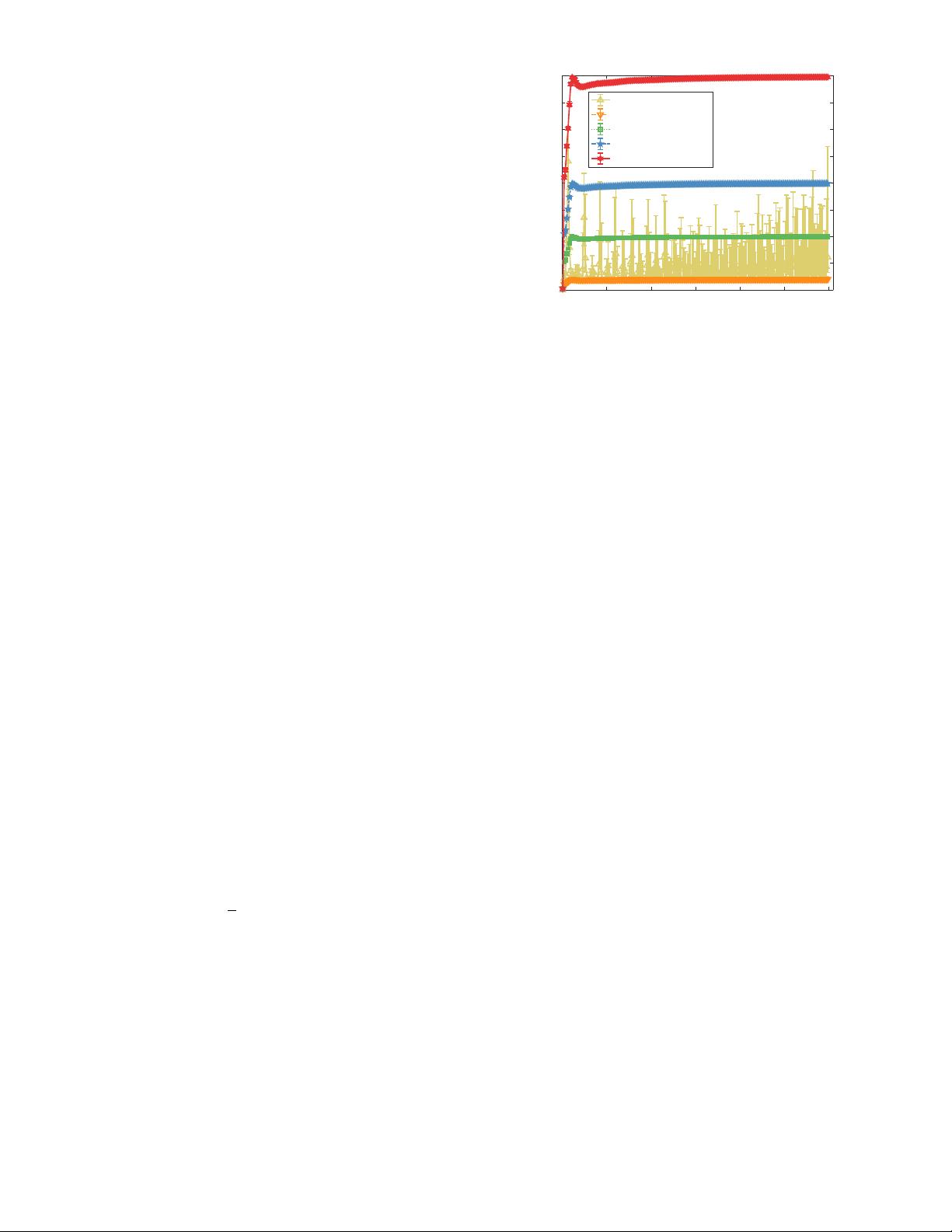

Abstract—Learning representation from relative similarity comparisons, often called ordinal embedding, gains rising attention in recent

years. Most of the existing methods are based on the semi-definite programming (SDP), which is generally time-consuming and

degrades the scalability, especially meeting the large-scale data. To overcome this challenge, we propose a stochastic algorithm called

SVRG-SBB, which has the following features: i) achieving good scalability via dropping positive semi-definite (PSD) constraints as

serving a fast algorithm, i.e., stochastic variance reduced gradient (SVRG) method, and ii) adaptive learning via introducing a new,

adaptive step size called the stabilized Barzilai-Borwein (SBB) step size. Theoretically, under some natural assumptions, we show the

O(

1

T

) rate of convergence to a stationary point of the proposed algorithm, where T is the number of total iterations. Under the further

Polyak-Łojasiewicz assumption, we can show the global linear convergence (i.e., exponentially fast converging to a global optimum) of

the proposed algorithm. Numerous simulations and real-world data experiments are conducted to show the effectiveness of the

proposed algorithm by comparing with the state-of-the-art methods, notably, much lower computational cost with good prediction

performance.

Index Terms—Ordinal Embedding, Stochastic Variance Reduction Gradient (SVRG), Non-Convex Optimization, Barzilai-Borwein (BB)

Step Size, .

F

1INTRODUCTION

O

RDINAL embedding aims to learn the representation

of data as points in a low-dimensional embedded

space. Here the “low-dimensional” means the embedding

dimension is much smaller than the number of data points.

The distances between these points agree with a set of

relative similarity comparisons. Relative comparisons are

often collected via the participators who are asked to answer

questions like:

“Is the similarity between object i and j larger than the

similarity between

l and k?”

• K. Ma is with the State Key Laboratory of Information Security (SKLOIS),

Institute of Information Engineering, Chinese Academy of Sciences, Bei-

jing, 100093, China, and with the School of Cyber Security, University of

Chinese Academy of Sciences, Beijing, 100049, China.

E-mail: make@iie.ac.cn.

• J. Zeng is with the School of Computer Information Engineering, Jiangxi

Normal University, Nanchang, Jiangxi, 330022, China, and with the

Department of Mathematics, Hong Kong University of Science and

Technology, Clear Water Bay, Kowloon, Hong Kong. Jinshan Zeng’s work

is supported in part by the National Natural Science Foundation (NNSF)

of China (No. 61977038, 61603162, 61876074), and part of this work was

performed when he was at Department of Mathematics, The Hong Kong

University of Science and Technology.(E-mail: jsh.zeng@gmail.com)

• J. Xiong is with the Tencent AI Lab, Shenzhen, Guangdong, China.

E-mail: jcxiong@tencent.com

• Q. Xu is with the Key Laboratory of Intelligent Information Processing,

Institute of Computing Technology, Chinese Academy of Sciences, Beijing

100190, China. E-mail: qianqian.xu@vipl.ict.ac.cn, xuqianqian@ict.ac.cn.

• X. Cao is with the State Key Laboratory of Information Security

(SKLOIS), Institute of Information Engineering, Chinese Academy of

Sciences, Beijing, 100093, China. E-mail: caoxiaochun@iie.ac.cn.

• W. Liu is with the Tencent AI Lab, Shenzhen, Guangdong, China.

E-mail: wliu@ee.columbia.edu

• Y. Yao is with the Department of Mathematics, and by courtesy, the De-

partment of Computer Science and Engineering, Hong Kong University

of Science and Technology, Clear Water Bay, Kowloon, Hong Kong.

E-mail: yuany@ust.hk.

Manuscript received April 19, 2005; revised September 17, 2014.

The feedback of these questions provide us with a set

of quadruplets, i.e.,

(i, j, l, k) which indicates that the sim-

ilarity between object

i and j is larger than the similarity

between

l and k. These relative similarity comparisons are

the supervision information for ordinal embedding. Without

prior knowledge, the relative similarity comparisons always

involve all objects, and the number of potential quadruplets

could be

O(n

4

). Even under the so-called “local” setting

where we restrict

l = i, the triple-wise comparisons, (i, j, k),

also have the complexity

O(n

3

).

The ordinal embedding problem was firstly studied by

[1], [2], [3], [4] in the psychometric society. In recent years, it

has drawn a lot of attention in machine learning [5], [6], [7],

[8], [9], [10], statistic ranking [11], [12], [13], artificial intel-

ligence [14], [15], information retrieval [16], and computer

vision [17], [18], etc.

Most of the ordinal embedding methods are based on the

semi-definite programming (SDP). Some typical methods

include the Generalized Non-Metric Multidimensional Scal-

ing (GNMDS) [19], Crowd Kernel Learning (CKL) [20], and

Stochastic Triplet Embedding (STE/TSTE) [21]. The main

idea of such methods is to formulate the ordinal embedding

problem into a convex, low-rank SDP problem with respect

to the Gram matrix of the embedding points. In order to

solve such a SDP problem, the traditional methods gener-

ally employ the projection gradient descent to satisfy the

positive semi-definite constraint, where the singular value

decomposition (SVD) is required at each iteration. This

inhibits the popularity of this type of methods for large-

scale and online ordinal embedding applications.

To handle the large-scale ordinal embedding problem,

we reformulate the considered problem using the embed-

ding matrix instead of its Gram matrix. By taking ad-

vantage of this new non-convex formulation, the positive

我的内容管理

展开

我的内容管理

展开

我的资源

快来上传第一个资源

我的资源

快来上传第一个资源

我的收益 登录查看自己的收益

我的收益 登录查看自己的收益 我的积分

登录查看自己的积分

我的积分

登录查看自己的积分

我的C币

登录后查看C币余额

我的C币

登录后查看C币余额

我的收藏

我的收藏  我的下载

我的下载  下载帮助

下载帮助

前往需求广场,查看用户热搜

前往需求广场,查看用户热搜

信息提交成功

信息提交成功