This article has been accepted for inclusion in a future issue of this journal. Content is final as presented, with the exception of pagination.

IEEE TRANSACTIONS ON INTELLIGENT TRANSPORTATION SYSTEMS

Traffic Sign Recognition Using Kernel Extreme Learning Machines With Deep Perceptual Features

Yujun Zeng, Xin Xu, Senior Member, IEEE, Dayong Shen, Yuqiang Fang, and Zhipeng Xiao

Abstract—Traffic sign recognition plays an important role in autonomous vehicles as well as advanced driver assistance systems. Although various methods have been developed, it is still difficult for state-of-the-art algorithms to achieve high recognition precision at low computational cost. In this paper, based on an investigation of the influence that color spaces have on the representation learning of convolutional neural networks, a novel traffic sign recognition approach called DP-KELM is proposed, which uses a kernel-based extreme learning machine (KELM) classifier with deep perceptual features. Unlike previous approaches, DP-KELM performs representation learning in the perceptual Lab color space. Based on the learned deep perceptual features, a kernel-based ELM classifier is trained with high computational efficiency and good generalization performance. Experiments on the German Traffic Sign Recognition Benchmark demonstrate that the proposed method achieves higher precision than most state-of-the-art approaches. In particular, compared with the hinge-loss stochastic gradient descent method, which has the highest precision, the proposed method achieves a comparable recognition rate at a significantly lower computational cost.

Index Terms—Traffic sign recognition, convolutional neural network, extreme learning machine, kernel, color space, Lab.

I. INTRODUCTION

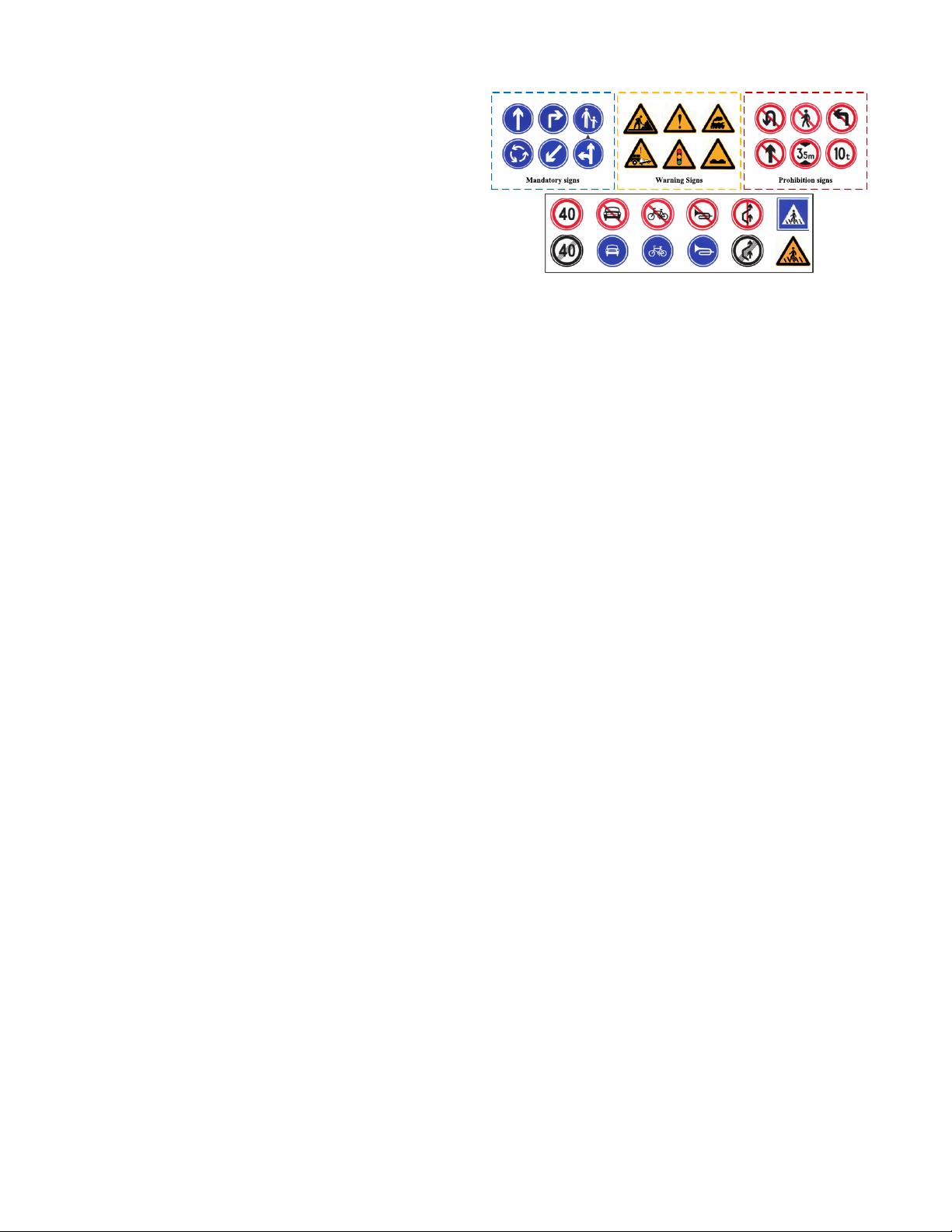

Driven by the development of driver assistance systems (DAS) and autonomous vehicles, traffic sign recognition (TSR) has received considerable attention, since timely traffic sign information must be provided automatically for safe driving [1]. TSR is also beneficial for tasks such as traffic sign monitoring and maintenance. In the past decade, TSR has become an important research topic not only in intelligent transportation systems but also in the pattern recognition community. Factors such as changing viewpoints, motion blur, partial occlusion, color distortion, and contrast degradation make TSR a challenging problem.

Manuscript received November 9, 2015; revised August 2, 2016; accepted September 17, 2016. This work was supported in part by the National Natural Science Foundation of China (NSFC) under Grant 91220301 and Grant 61375050 and in part by the Joint Innovation Foundation between NSFC and Chinese Automobile Industry under Grant U1564214. The Associate Editor for this paper was J. Zhang.

Y. Zeng, X. Xu, Y. Fang, and Z. Xiao are with the College of Mechatronics and Automation, National University of Defense Technology, Changsha 410073, China (e-mail: xinxu@nudt.edu.cn).

D. Shen is with the College of Information Systems and Management, National University of Defense Technology, Changsha 410073, China.

Color versions of one or more of the figures in this paper are available online at http://ieeexplore.ieee.org.

Digital Object Identifier 10.1109/TITS.2016.2614916

As a typical pattern recognition task, the accuracy of traffic sign recognition depends mainly on the feature extractor and the classifier. Earlier TSR methods generally share a similar pipeline consisting of hand-crafted features and conventional classifiers. Even though many hand-crafted features have been designed and combined with classifiers such as support vector machines (SVMs) [2], [3], random forests [4], and extreme learning machines (ELMs) [5], it is still difficult to handle the increasing diversity and variability of traffic signs, and recognition performance remains far from satisfactory. Vondrick et al. [6] demonstrated that hand-crafted features such as the histogram of oriented gradients (HOG) [7] are not discriminative enough: samples from different classes can often appear similar in the hand-crafted feature space.

With the growth of massive datasets and high-performance computing hardware (e.g., graphics processing units, GPUs), deep neural networks (DNNs) [8]–[10] have gradually demonstrated outstanding feature-learning capabilities. In contrast with hand-crafted features, deep features are learned automatically and can better capture the underlying structure of massive data. As a consequence, DNN-based methods have obtained state-of-the-art results in many pattern classification tasks.

As a representative DNN model, the convolutional neural network (CNN) was inspired by research on biological visual systems [11]. Representative CNN models were proposed by Fukushima [12] and LeCun [13]; both attempted to imitate the perceptual mechanism of the human visual cortex and could learn more discriminative features. Recently, CNNs have been applied to TSR, with promising results [14]–[17]. However, a CNN is a deep multi-layer neural network that is usually trained with the back-propagation (BP) algorithm, and the susceptibility of BP to local minima can limit the generalization capability of the fully connected layers in a CNN. Moreover, to obtain state-of-the-art performance, CNN-based algorithms usually incur a heavy computational burden because they require either a very deep single CNN or an ensemble of multiple CNNs. In addition, current CNN-based TSR approaches usually process images in the RGB space, where issues such as information coupling and non-uniform color distribution can negatively affect the feature learning process of the CNN.
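To make the color-space argument concrete, the following is a minimal NumPy sketch of the standard sRGB-to-CIE L*a*b* conversion. It assumes 8-bit sRGB input and the D65 reference white; this section does not specify the exact conversion or channel normalization used by DP-KELM, and the function name `srgb_to_lab` is hypothetical. In Lab, lightness (L*) is carried by a single channel separate from the two chroma axes (a*, b*), and the space is designed to be approximately perceptually uniform, whereas in RGB brightness is spread across all three channels.

```python
import numpy as np

# Linear sRGB -> CIE XYZ matrix for the D65 white point (assumed illuminant).
_RGB_TO_XYZ = np.array([
    [0.4124564, 0.3575761, 0.1804375],
    [0.2126729, 0.7151522, 0.0721750],
    [0.0193339, 0.1191920, 0.9503041],
])
# XYZ coordinates of the D65 reference white (assumption, see lead-in).
_WHITE_D65 = np.array([0.95047, 1.0, 1.08883])


def srgb_to_lab(rgb: np.ndarray) -> np.ndarray:
    """Convert an (..., 3) array of 8-bit sRGB values to CIE L*a*b*.

    Hypothetical helper: illustrates the textbook conversion only.
    """
    c = np.asarray(rgb, dtype=np.float64) / 255.0
    # Undo the sRGB transfer curve ("gamma") to obtain linear light.
    linear = np.where(c <= 0.04045, c / 12.92, ((c + 0.055) / 1.055) ** 2.4)
    # Linear RGB -> XYZ, then normalize each axis by the white point.
    xyz = linear @ _RGB_TO_XYZ.T / _WHITE_D65
    # Piecewise cube-root compression defined by CIELAB.
    delta = 6.0 / 29.0
    f = np.where(xyz > delta ** 3, np.cbrt(xyz), xyz / (3.0 * delta ** 2) + 4.0 / 29.0)
    L = 116.0 * f[..., 1] - 16.0          # lightness
    a = 500.0 * (f[..., 0] - f[..., 1])   # green-red axis
    b = 200.0 * (f[..., 1] - f[..., 2])   # blue-yellow axis
    return np.stack([L, a, b], axis=-1)
```

For an H×W×3 `uint8` traffic sign crop, `srgb_to_lab(crop)` returns an H×W×3 float array; pure red (255, 0, 0), for instance, maps to approximately (53.24, 80.09, 67.20). How the three channels are rescaled before being fed to a CNN is a separate design choice not covered by this sketch.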

In this paper, based on an investigation of the influence that color spaces have on the representation learning of CNNs, we propose a novel TSR method named DP-KELM, which uses a kernel ELM classifier with deep perceptual (DP) features.
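The classifier stage can be illustrated with a minimal NumPy sketch of a standard closed-form kernel ELM, in which the output weights are obtained as $\beta = (I/C + \Omega)^{-1}T$ with $\Omega_{ij} = K(x_i, x_j)$, and a new sample is scored as $[K(x, x_1), \ldots, K(x, x_N)]\,\beta$. The sketch assumes a Gaussian (RBF) kernel and ±1 one-hot targets; the feature matrix `X` merely stands in for the deep perceptual features, and the class name `KernelELM` and the hyperparameters `C` and `gamma` are illustrative assumptions rather than the settings used in this paper.

```python
import numpy as np


def rbf_kernel(A: np.ndarray, B: np.ndarray, gamma: float) -> np.ndarray:
    """Gaussian kernel matrix K[i, j] = exp(-gamma * ||A[i] - B[j]||^2)."""
    sq = (
        np.sum(A ** 2, axis=1)[:, None]
        + np.sum(B ** 2, axis=1)[None, :]
        - 2.0 * A @ B.T
    )
    # Clip tiny negative values caused by floating-point cancellation.
    return np.exp(-gamma * np.maximum(sq, 0.0))


class KernelELM:
    """Hypothetical kernel ELM classifier with closed-form training."""

    def __init__(self, C: float = 1.0, gamma: float = 1.0):
        self.C = C          # regularization constant (assumed value)
        self.gamma = gamma  # RBF kernel width (assumed value)

    def fit(self, X: np.ndarray, y: np.ndarray) -> "KernelELM":
        self.X_train = np.asarray(X, dtype=np.float64)
        y = np.asarray(y)
        self.classes = np.unique(y)
        # Target matrix T (N x m): +1 for the true class, -1 otherwise.
        T = np.where(y[:, None] == self.classes[None, :], 1.0, -1.0)
        omega = rbf_kernel(self.X_train, self.X_train, self.gamma)
        n = omega.shape[0]
        # Solve (I / C + Omega) beta = T rather than forming an explicit inverse.
        self.beta = np.linalg.solve(np.eye(n) / self.C + omega, T)
        return self

    def decision_function(self, X: np.ndarray) -> np.ndarray:
        # Kernel between new samples and all training samples, times beta.
        K = rbf_kernel(np.asarray(X, dtype=np.float64), self.X_train, self.gamma)
        return K @ self.beta

    def predict(self, X: np.ndarray) -> np.ndarray:
        # The predicted label is the output node with the largest score.
        return self.classes[np.argmax(self.decision_function(X), axis=1)]


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    # Two synthetic clusters standing in for deep feature vectors.
    X = np.vstack([rng.normal(0.0, 0.3, (20, 4)), rng.normal(3.0, 0.3, (20, 4))])
    y = np.array([0] * 20 + [1] * 20)
    model = KernelELM(C=10.0, gamma=0.5).fit(X, y)
    print(model.predict(np.array([[0.0] * 4, [3.0] * 4])))
```

Because training reduces to a single regularized N×N linear solve instead of iterative back-propagation, it is fast for moderate training-set sizes; note, however, that the kernel matrix needs O(N²) memory and the solve takes O(N³) time, so very large training sets may require approximations.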

1524-9050 © 2016 IEEE. Personal use is permitted, but republication/redistribution requires IEEE permission.

See http://www.ieee.org/publications_standards/publications/rights/index.html for more information.
