bankruptcy prediction. The results showed that DERBF generalized

well on bank bankruptcy datasets.

Chauhan et al. (2009) employed a differential evolution algorithm to

train a wavelet neural network (DEWNN) for predicting bankruptcy

in banks. The results on the four bankruptcy datasets revealed that

the DEWNN was clearly superior to other existing methods. Ravi

and Pramodh (2008) proposed a new architecture called the principal

component neural network (PCNN) and applied it to the bankruptcy

prediction problem in commercial banks. It was inferred that the

proposed PCNN hybrids outperformed other classifiers on the

bankruptcy dataset. A new neural network architecture, the kernel

principal component neural network (KPCNN) trained by threshold

accepting, was presented in Ravisankar and Ravi (2009). Its application

to bankruptcy prediction in banks revealed that KPCNN yields results

comparable to those of the other techniques. Vasu and Ravi (2011)

proposed a new principal component analysis-wavelet neural network

hybrid (PCATAWNN) architecture trained by the threshold accepting

algorithm to predict bankruptcy in banks. The experimental results

showed that the PCATAWNN convincingly outperformed other

techniques in terms of area under the ROC curve (AUC) in 10-fold

cross-validation. Among all of the employed methods,

ANN (Tsai and Wu, 2008; Atiya, 2001b; Zhang et al., 1999) has

become more and more popular for financial prediction, thanks to

its prominent ability to capture the nonlinear relationships that

exist between different features in real data sets. Nevertheless, it is

worth pointing out that traditional ANN learning methods, such as

the back-propagation approach, are based on the gradient descent

strategy, which may become trapped in a local optimum. Furthermore,

a fair number of network parameters generally need to be

tuned.

In order to avoid ANN's drawbacks, Huang et al. proposed a

new machine learning paradigm named the extreme learning machine

(ELM) (Huang et al., 2006). ELM is a representative learning model

for single hidden layer feedforward neural networks (SLFNs). The

hidden biases and input weights in this method can be randomly

generated, and the output weights are determined analytically using the Moore-Penrose

(MP) generalized inverse. It is well known that universal

approximation results characterize the approximation capabilities of

neural networks. The approximation capabilities of multilayer

feedforward networks were proved by Hornik (1991): with nonconstant,

bounded, and continuous activation functions, continuous

mappings can be approximated in measure by neural networks.

Leshno et al. (1993) showed that continuous functions can be

approximated by feedforward networks with a non-polynomial

activation function. Guang-Bin and Babri (1998) showed that

SLFNs with N hidden nodes and almost any nonlinear activation

function can exactly learn N distinct observations. Due to its

good classification performance, ELM has been adopted in fields such as

image classification (Cao et al., 2016a; Jun et al., 2011), disease

diagnosis (Chen et al., 2015; Zhang et al., 2007), and engineering

applications (Cao et al., 2016b, 2015). In addition, methods based on

ELM have also been widely applied in financial areas such as

bankruptcy prediction (Yu et al., 2014), corporate life cycle

prediction (Lin et al., 2013) and corporate credit ratings (Zhong et al.,

2014). One limitation of ELM, nevertheless, is that the randomly

assigned input weights can increase the variance of the accuracies

obtained by classifiers across multiple trials. In order to overcome this

limitation, Huang et al. (2012) proposed an extended version of

ELM, namely the kernel extreme learning machine (KELM), in which

the connection weights between the input and hidden layers are not

needed. Compared with ELM, KELM can achieve comparable or

better performance with faster training and much easier

implementation in applications such as hyperspectral remote-sensing

image classification (Pal et al., 2013; Chen et al., 2014),

activity recognition (Deng et al., 2014), 2-D profile reconstruction

(Liu et al., 2014), disease diagnosis (Chen et al., 2016) and fault

diagnosis (Jiang et al., 2014).
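
The ELM and KELM training steps described above can be illustrated with a minimal NumPy sketch. It is not the implementation used in the cited works: the function names, the tanh activation, the toy XOR data, and the RBF form exp(-gamma * ||a - b||^2) are all assumptions made for illustration. The KELM solve follows the commonly stated closed form in which the output weights are obtained from (I/C + Omega), with Omega the kernel matrix.

```python
import numpy as np


def elm_fit(X, T, n_hidden, rng):
    """Basic ELM: random input weights and biases, output weights by pseudo-inverse."""
    W = rng.standard_normal((X.shape[1], n_hidden))  # random input weights (never trained)
    b = rng.standard_normal(n_hidden)                # random hidden biases (never trained)
    H = np.tanh(X @ W + b)                           # hidden-layer output matrix (tanh is an assumption)
    beta = np.linalg.pinv(H) @ T                     # Moore-Penrose generalized inverse
    return W, b, beta


def elm_predict(X, W, b, beta):
    return np.tanh(X @ W + b) @ beta


def rbf_kernel(A, B, gamma):
    """Assumed RBF form: K(a, b) = exp(-gamma * ||a - b||^2)."""
    sq = (A**2).sum(axis=1)[:, None] + (B**2).sum(axis=1)[None, :] - 2.0 * A @ B.T
    return np.exp(-gamma * np.maximum(sq, 0.0))


def kelm_fit(X, T, C, gamma):
    """KELM: solve (I / C + Omega) alpha = T, where Omega is the training kernel matrix."""
    omega = rbf_kernel(X, X, gamma)
    return np.linalg.solve(np.eye(len(X)) / C + omega, T)


def kelm_predict(X_new, X_train, alpha, gamma):
    return rbf_kernel(X_new, X_train, gamma) @ alpha


# Toy two-class problem (XOR pattern) with targets coded as -1 / +1.
X = np.array([[0.0, 0.0], [0.0, 1.0], [1.0, 0.0], [1.0, 1.0]])
T = np.array([-1.0, 1.0, 1.0, -1.0])

rng = np.random.default_rng(0)
W, b, beta = elm_fit(X, T, n_hidden=4, rng=rng)
elm_labels = np.sign(elm_predict(X, W, b, beta))

alpha = kelm_fit(X, T, C=100.0, gamma=1.0)
kelm_labels = np.sign(kelm_predict(X, X, alpha, gamma=1.0))
```

Note that the ELM result depends on the random draw of `W` and `b`, whereas the KELM result depends only on `C` and `gamma`; this is the trial-to-trial variation that the kernel version is meant to remove.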

We recently applied KELM to the bankruptcy prediction problem

(Zhao et al., 2017) and obtained better performance than five

other competitive approaches, including SVM, ELM, random forest

(RF), particle swarm optimization boosted fuzzy KNN, and the Logit

model, on the same real data set. Nevertheless, it should be noted

that the two significant parameters in KELM with the RBF kernel are

the penalty parameter C and the kernel bandwidth γ. C controls the

trade-off between the model complexity and the fitting error

minimization, while γ defines the non-linear mapping from the input

space to some high-dimensional feature space. Several studies

have illustrated that these two parameters have an important

effect on KELM's performance, similar to that in SVM. Thus, these

two key parameters must be properly set prior to its application to

realistic problems. These parameters are traditionally obtained

using the grid-search method, whose main drawback, however, is

that it is easily trapped in a local optimum. Recently, it has

been shown that biologically-inspired methods (such as the genetic

algorithm (Liu et al., 2014), particle swarm optimization

(PSO) (Zhang and Yuan, 2015), and artificial bee colony (Ma et al.,

2016)) are more likely to find the global-best solution than the

grid-search method. As a new member of the nature-inspired

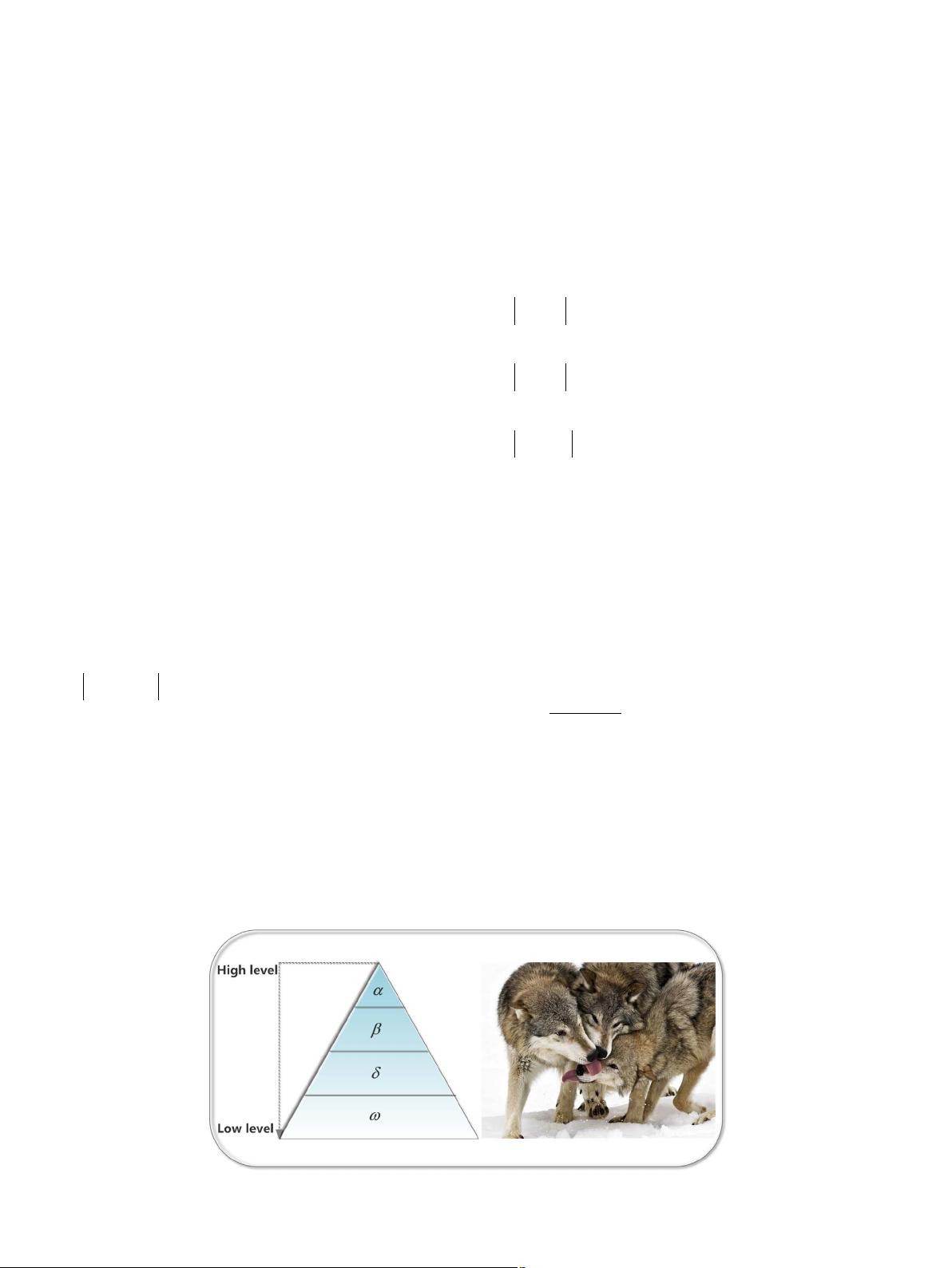

methods, the Grey Wolf Optimizer (GWO) (Mirjalili et al., 2014)

mimics the social hierarchy and hunting behavior of grey wolves in

nature. The main components of GWO are social hierarchy, encircling

prey, hunting, attacking prey (exploitation), and searching for prey

(exploration).
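
The components listed above can be sketched as a short NumPy routine. This is a minimal illustration following the update rules as they are commonly stated for GWO (Mirjalili et al., 2014), not the implementation used in this study; the function name `gwo_minimize`, its default settings, and the sphere test function are assumptions made for the example.

```python
import numpy as np


def gwo_minimize(objective, lower, upper, n_wolves=20, n_iter=100, seed=0):
    """Minimal Grey Wolf Optimizer sketch for box-constrained minimization."""
    rng = np.random.default_rng(seed)
    lower = np.asarray(lower, dtype=float)
    upper = np.asarray(upper, dtype=float)
    dim = lower.size

    wolves = rng.uniform(lower, upper, size=(n_wolves, dim))
    fitness = np.array([objective(w) for w in wolves])
    best_pos, best_fit = wolves[np.argmin(fitness)].copy(), fitness.min()

    for t in range(n_iter):
        # Social hierarchy: the three fittest wolves (alpha, beta, delta) lead the pack.
        leaders = wolves[np.argsort(fitness)[:3]].copy()

        # 'a' decreases linearly from 2 towards 0, shifting the search from
        # exploration (|A| > 1) to exploitation (|A| < 1).
        a = 2.0 * (1.0 - t / n_iter)
        r1 = rng.random((3, n_wolves, dim))
        r2 = rng.random((3, n_wolves, dim))
        A = 2.0 * a * r1 - a
        C_coef = 2.0 * r2  # named C_coef to avoid confusion with KELM's penalty C

        # Encircling prey: distance of every wolf to each leader.
        D = np.abs(C_coef * leaders[:, None, :] - wolves[None, :, :])
        # Hunting: each leader proposes a position; the wolf moves to their average.
        candidates = leaders[:, None, :] - A * D
        wolves = np.clip(candidates.mean(axis=0), lower, upper)

        fitness = np.array([objective(w) for w in wolves])
        i = np.argmin(fitness)
        if fitness[i] < best_fit:  # keep the best solution seen so far
            best_pos, best_fit = wolves[i].copy(), fitness[i]

    return best_pos, best_fit


def sphere(x):
    """Toy objective with its minimum at the origin."""
    return float(np.sum(x**2))


best_pos, best_fit = gwo_minimize(sphere, lower=[-5.0, -5.0], upper=[5.0, 5.0])
```

For model selection, `objective` would instead map a candidate parameter vector (for example, values of C and γ) to a validation error, with the bounds defining the search range.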

Due to its good search ability, GWO has been applied in various

fields. Muangkote et al. (2014) used an improved GWO to train

q-Gaussian Radial Basis Functional-link nets

neural networks. The experimental results indicated that the

proposed algorithm obtained competitive performance compared

with other meta-heuristic methods. Komaki and Kayvanfar (2015)

successfully applied GWO to the two-stage assembly flow shop

scheduling problem with release time, greatly improving the

efficiency. Sulaiman et al. (2015) used GWO to solve the optimal reactive

power dispatch problem. Mirjalili (2015) employed GWO to train

a multi-layer perceptron, and eight standard datasets, including five

classification and three function-approximation datasets, were

evaluated. The results demonstrated that the proposed trainer could

obtain a high level of accuracy in classification and approximation.

However, to the best of our knowledge, the potential

of GWO to fine-tune the parameters of KELM has not been

explored. Therefore, this study aims at exploring the

GWO technique's ability to address KELM's model selection

problem for classification, and further applying the resulting model,

GWO-KELM, to effectively predict company

bankruptcy. For verification purposes, the effectiveness and

efficiency of the proposed GWO-KELM are compared against

common methods such as grid-search optimized KELM (GS-KELM),

genetic algorithm optimized KELM (GA-KELM), particle

swarm optimization optimized KELM (PSO-KELM) and four other

advanced machine learning methods, including the original ELM, the

self-adaptive evolutionary extreme learning machine proposed by Cao

et al. (2012) (SaE-ELM), SVM and RF, on a real-life financial

dataset. All methods are compared in terms of the training accuracy,

the validation accuracy and test accuracy, Type I error, Type II error

and the area under the receiver operating characteristic curve

(AUC) criterion. For the stability of the results, the cross validation

(CV) strategy is also adopted, comprising an outer 10-fold CV and an

inner 5-fold CV. The experimental results show that our proposed

methodology, GWO-KELM, performs better than

the other well-known methods compared. The main contributions

of this study can be summarized as follows:

M. Wang et al. / Engineering Applications of Artificial Intelligence 63 (2017) 54–68 55
