没有合适的资源?快使用搜索试试~ 我知道了~

A Text Sentiment Classification Modeling Method Based on Coordin...

1 下载量 35 浏览量

2021-02-08

13:48:20

上传

评论

收藏 1.94MB PDF 举报

温馨提示

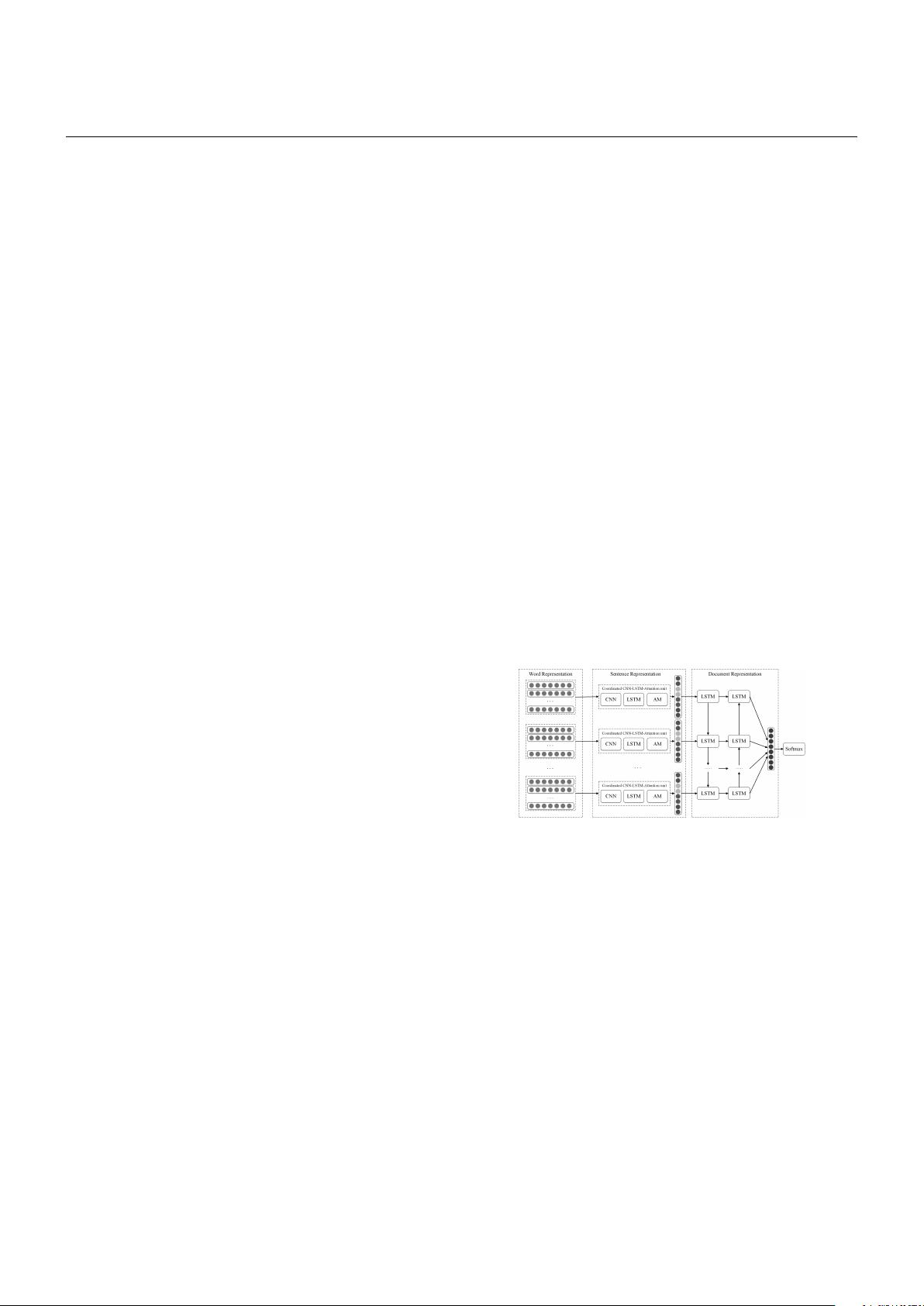

The major challenge that text sentiment classification modeling faces is how to capture the intrinsic semantic, emotional dependence information and the key part of the emotional expression of text. To solve this problem, we proposed a Coordinated CNNLSTM-Attention(CCLA) model. We learned the vector representations of sentence with CCLA unit. Semantic and emotional information of sentences and their relations are adaptively encoded to vector representations of document. We used softmax regressio

资源推荐

资源详情

资源评论

Chinese Journal of Electronics

Vol.28, No.1, Jan. 2019

A Text Sentiment Classification Modeling Method Based on Coordinated CNN-LSTM-Attention Model∗

ZHANG Yangsen¹,², ZHENG Jia¹, JIANG Yuru¹,², HUANG Gaijuan¹,² and CHEN Ruoyu¹,²

(1. Institute of Intelligent Information Processing, Beijing Information Science and Technology University, Beijing 100192, China)

(2. Beijing Laboratory of National Economic Security Early-Warning Engineering, Beijing 100192, China)

Abstract — The major challenge in text sentiment classification modeling is how to capture the intrinsic semantic and emotional dependence information and the key parts of the emotional expression of a text. To solve this problem, we propose a Coordinated CNN-LSTM-Attention (CCLA) model. We learn the vector representations of sentences with the CCLA unit. The semantic and emotional information of sentences and their relations is adaptively encoded into vector representations of documents. We use a softmax regression classifier to identify the sentiment tendencies in the text. Compared with other methods, the CCLA model captures both local and long-distance semantic and emotional information well. Experimental results demonstrate the effectiveness of the CCLA model, which shows superior performance over several state-of-the-art baseline methods.

Key words — Coordinated CNN-LSTM-Attention, Sentiment analysis, Text modeling, Semantic information.

I. Introduction

Text sentiment classification modeling is a fundamental problem in the field of Natural language processing (NLP) and is crucial to understanding user intention in product reviews or social networks[1,2]. The core of text sentiment classification modeling is to capture semantic features from variable-length text units. As a traditional method, the bag-of-words model[3] is the most common and popular vector representation method for texts because of its efficiency, simplicity and surprising accuracy. But the bag-of-words model treats a sentence or document as an unordered collection of words. Lacking word order, different sentences can have exactly the same representation, provided that the same words are used.

Until now, some machine learning algorithms have achieved good results on text sentiment classification modeling[4]. However, as deep learning models have achieved remarkable results in speech recognition and computer vision in recent years, order-sensitive models based on neural networks, such as Recursive neural networks (RNNs), Recurrent neural networks (RNN), Convolutional neural networks (CNN), Long short-term memory (LSTM) and the attention model, are becoming increasingly popular due to their ability to capture word order information and further learn the semantic and emotional information from text. Deep learning grew out of traditional neural network models. It is not just a multi-layer network; it emphasizes the extraction of hidden features and higher-level abstract features.

II. Related Work

1. Deep learning model

RNNs have been proved effective in modeling text semantics[5−7]. However, they need to construct a semantic tree, and their performance depends on the accuracy of that tree. Moreover, the semantic relationship between two sentences may not form a tree structure. RNN does not need to build a semantic tree[8], and it can capture context information over long distances. However, RNN is a biased model: the words that appear later in the text occupy a more dominant position than the earlier ones. At the same time, RNN also suffers from the exploding and vanishing gradient problem.

To address the semantic bias problem of RNN, CNN has been proposed for text semantic modeling.

∗ Manuscript Received Aug. 4, 2017; Accepted May 29, 2018. This work is supported by the National Natural Science Foundation of China (No.61772081, No.61602044) and the Science and Technology Development Project of Beijing Municipal Education Commission (No.KM201711232014).

© 2019 Chinese Institute of Electronics. DOI:10.1049/cje.2018.11.004


CNN is usually used in conjunction with pooling to find the most useful information in the text. However, existing CNN models typically use relatively simple convolutional kernels, such as fixed windows[9], which greatly limits the semantic representation of text.
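
To make the fixed-window limitation concrete, the following is a minimal sketch of one convolutional filter of fixed width followed by max-over-time pooling. The function name `conv1d_max_pool`, the ReLU activation, and all numeric values are assumptions chosen for illustration; they are not the configuration used in the cited work.

```python
def conv1d_max_pool(word_vectors, kernel, bias=0.0):
    """Slide one fixed-width filter over a sentence, then max-pool the feature map.

    word_vectors: list of n word vectors, each of length d
    kernel:       list of h weight vectors (window size h), each of length d
    Returns (pooled_value, feature_map).
    """
    h = len(kernel)
    n = len(word_vectors)
    if n < h:
        raise ValueError("sentence is shorter than the filter window")

    feature_map = []
    for start in range(n - h + 1):
        window = word_vectors[start:start + h]
        # Dot product between the filter and the h consecutive word vectors.
        activation = bias + sum(
            w * x
            for k_row, x_row in zip(kernel, window)
            for w, x in zip(k_row, x_row)
        )
        feature_map.append(max(0.0, activation))  # ReLU (an assumed choice)

    # Max-over-time pooling keeps only the strongest response.
    return max(feature_map), feature_map


# Hypothetical 4-word sentence with 2-dimensional word vectors.
sentence = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [0.0, 0.0]]
# Hypothetical filter spanning a window of 2 words.
kernel = [[1.0, -1.0], [0.5, 0.5]]

pooled, feature_map = conv1d_max_pool(sentence, kernel)
print(pooled, feature_map)
```

Because the window size is fixed by the shape of `kernel`, this filter can only respond to patterns that span exactly two adjacent words; longer dependencies are invisible to it.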

Recently, RNN with LSTM units has re-emerged as a popular architecture due to its representational ability and effectiveness at capturing long-term dependence information[10,11]. LSTM can also alleviate the exploding and vanishing gradient problem of RNN. An LSTM block may be described as an “intelligent” network unit that can remember a value, which contains some history information, for an arbitrary length of time.
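
The "remembering" behaviour can be sketched with a scalar LSTM cell in its commonly used formulation (input, forget and output gates plus a candidate value). This is an illustrative sketch under stated assumptions: the function `lstm_step`, the scalar state, and every parameter value are hypothetical and are not the variant or settings used in the paper.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def lstm_step(x, h_prev, c_prev, params):
    """One step of a scalar LSTM cell (input, hidden and cell state are floats).

    params maps each gate name to a tuple (w_x, w_h, b).
    """
    def pre(name):
        w_x, w_h, b = params[name]
        return w_x * x + w_h * h_prev + b

    i = sigmoid(pre("input"))          # how much new information to write
    f = sigmoid(pre("forget"))         # how much of the old cell state to keep
    o = sigmoid(pre("output"))         # how much of the cell state to expose
    g = math.tanh(pre("candidate"))    # candidate value to write

    c = f * c_prev + i * g             # additive update carries history forward
    h = o * math.tanh(c)
    return h, c


# Hypothetical parameters: forget gate almost fully open, input gate almost closed.
params = {
    "input": (0.0, 0.0, -10.0),
    "forget": (0.0, 0.0, 10.0),
    "output": (0.0, 0.0, 10.0),
    "candidate": (1.0, 0.0, 0.0),
}

h, c = 0.0, 0.8  # the cell starts out holding the value 0.8
for _ in range(50):
    h, c = lstm_step(0.0, h, c, params)

print(c)  # stays close to the stored value after 50 steps
```

With the forget gate near 1 and the input gate near 0, the cell state is passed along almost unchanged, which is the mechanism behind holding a value over a long span.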

The attention model has also become popular in recent years. Bahdanau et al.[12] argue that the fixed-length intermediate semantic representation generated by the traditional Encoder-Decoder model is the bottleneck in improving the performance of neural machine translation models. To solve this problem, they proposed an automatic alignment model between the output and the source sentence and achieved good performance. This alignment model is the basis of most present attention models. The attention model is good at capturing the key parts that are important to the semantic representation.
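
The idea of weighting the key parts can be sketched as follows. For brevity this sketch scores each hidden state with a plain dot product against a query vector, which is a simplification: Bahdanau et al. score alignments with a small feed-forward network instead. The names `attend` and `softmax` and all numbers are illustrative assumptions.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attend(hidden_states, query):
    """Return attention weights and the weighted sum of the hidden states.

    Scoring is a dot product (an assumed simplification of the alignment model).
    """
    scores = [sum(q * h for q, h in zip(query, state)) for state in hidden_states]
    weights = softmax(scores)
    dim = len(hidden_states[0])
    context = [
        sum(w * state[j] for w, state in zip(weights, hidden_states))
        for j in range(dim)
    ]
    return weights, context


# Hypothetical hidden states for a 3-word sentence and a hypothetical query.
hidden_states = [[0.1, 0.0], [2.0, 1.0], [0.0, 0.3]]
query = [1.0, 1.0]

weights, context = attend(hidden_states, query)
print(weights, context)
```

The weights sum to one, and the state that aligns best with the query dominates the context vector, so the representation is no longer forced through a single position-independent summary.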

2. Deep learning model for sentiment classification

Deep learning has been proved effective for text sentiment classification tasks[13,14]. For this task, the strength of deep learning is that it can learn continuous, multidimensional semantic and emotional representations at different granularities. A deep learning model for text sentiment classification usually involves two steps. The first step is learning distributed vector representations of words. The next step applies the principle of compositionality, which states that the meaning of a longer expression (e.g. a sentence or document) depends on the meanings of its constituents, to construct the vector representations of sentences and documents from the vector representations of the words.

For learning distributed vector representations of words, Maas[15] presented a model that uses a mix of unsupervised and supervised techniques to learn word vector representations that capture semantic information as well as rich emotional content. Mikolov[13,16] proposed two novel deep learning models for computing continuous vector representations of words from large corpora and built a well-known open source project dubbed word2vec. Pennington[17] introduced the GloVe model, an unsupervised global log-bilinear regression model for learning word representations.

To construct the representations of sentences and documents, inspired by work on learning vector representations of words, Le[18] proposed the paragraph vector model, an unsupervised learning algorithm that learns fixed-length feature representations of sentences. Kalchbrenner[14] designed a dynamic convolutional architecture dubbed the Dynamic convolutional neural network (DCNN) that uses dynamic k-max pooling, a global pooling operation over linear sequences, to capture the most useful information from the text. Tai[10] generalized LSTM to the Tree-LSTM architecture, where each LSTM unit combines information from its child units to improve the semantic representation of sentences. Deep learning models also work well for text sentiment classification in specific domains. Zhang[19] built a language model based on RNN to handle negative sentences and double negative sentences for Chinese Microblog sentiment classification.
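
As an illustration of the k-max pooling operation mentioned above, the sketch below keeps the k largest activations of a sequence while preserving their original order. The function name `k_max_pooling` and the input values are assumptions; the rule by which DCNN chooses k dynamically is not modeled here, so k is simply passed in.

```python
def k_max_pooling(values, k):
    """Keep the k largest values of a sequence, preserving their original order."""
    if k >= len(values):
        return list(values)
    # Indices of the k largest values.
    top = sorted(range(len(values)), key=lambda i: values[i], reverse=True)[:k]
    # Re-sort the chosen indices so the output follows the input order.
    return [values[i] for i in sorted(top)]


# Hypothetical feature map produced by one convolutional filter.
feature_map = [0.2, 0.9, 0.1, 0.7, 0.4]
print(k_max_pooling(feature_map, 3))  # [0.9, 0.7, 0.4]
```

Unlike plain max pooling, which returns a single value, this keeps several strong responses and their relative order, so some positional information survives the pooling step.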

Text modeling should take full account of the short-distance semantic relations and the long-distance semantic dependencies of text, as well as the key parts of the emotional expression. To overcome the shortcomings of individual deep learning models for text sentiment modeling, we propose a new model dubbed the Coordinated CNN-LSTM-Attention (CCLA) model, which encodes the semantic and emotional information of text for the sentiment classification task, as shown in Fig. 1.

Fig. 1. The architecture of the CCLA model

III. Model

Before introducing our approach to sentence modeling, we introduce the word vector representation model. In word modeling, each word is represented as a low-dimensional, continuous, real-valued vector and associated with a point in a vector space[20]. All the word vectors are then stacked into a word embedding matrix in $\mathbb{R}^{d \times |V|}$, where $d$ is the dimension of the word vectors and $|V|$ is the vocabulary size.
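
A minimal sketch of this layout: the embedding matrix has d rows and |V| columns, and the vector of a word is the column at its vocabulary index. The vocabulary, the values in `E`, and the helper `lookup` are hypothetical and serve only to show the indexing.

```python
# Hypothetical vocabulary mapping each word to a column index.
vocab = {"good": 0, "bad": 1, "movie": 2}

d = 2  # dimension of the word vectors

# Embedding matrix with d rows and |V| columns; the values are made up.
E = [
    [0.9, -0.8, 0.1],
    [0.2, 0.3, 0.7],
]


def lookup(word):
    """Return the d-dimensional vector stored in the word's column of E."""
    j = vocab[word]
    return [E[i][j] for i in range(d)]


# A sentence becomes a sequence of word vectors, one per token.
sentence_vectors = [lookup(w) for w in "good movie".split()]
print(sentence_vectors)  # [[0.9, 0.2], [0.1, 0.7]]
```

Sequences of this kind are the input that sentence-level models consume.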

1. Sentence vector representation model

To construct vector representations of sentences, CNN and LSTM are two state-of-the-art models. In this paper, we combine the advantages of CNN and LSTM and incorporate the attention model to construct the CCLA unit, which encodes the semantic and emotional information of sentences. The architecture of the CCLA unit is given in Fig. 2.
