COGAIN ’18, June 14–17, 2018, Warsaw, Poland Y. Zhou et al.

In this paper, we propose to alleviate the semantic gap problem in the framework of implicit relevance feedback using eye tracking data. In this framework, retrieval is still performed on low-level visual features. Because of its strength in extracting abstract features, a convolutional neural network is employed to classify the eye tracking data into relevant and irrelevant patterns. In our work, an optimal subset of low-level visual features with 70 components is first extracted and selected with the feature selection method proposed in [Zhou et al. 2017]. This subset of visual features is then used for image retrieval.

2 RELATED WORK

Relevance Feedback (RF) is an interactive and/or supervised learning process used to improve the performance of information retrieval systems [Rui et al. 1998]. Explicit RF methods initially considered global-level feedback information. In [Rahman et al. 2007], a two-level strategy was proposed for image retrieval. In the lower level, the supervised support vector machine method and the unsupervised fuzzy c-means clustering technique were first combined to prefilter images in a principal component analysis (PCA)-based eigenspace, while in the finer level, images were retrieved with a category-based statistical similarity matching technique. By employing an explicit RF scheme, the user’s semantic perception was incorporated to adjust the similarity matching function. More recently, explicit RF methods have shifted to a finer level of detail with region-based RF approaches. Methods of this kind still receive feedback information at the global level, but an estimate of the relevant local-level objects is used to refine the retrieved results. For example, an image retrieval framework based on graph-theoretic region correspondence estimation was presented in [Li and Hsu 2008]. Another SVM-based approach, proposed in [Djordjevic and Izquierdo 2007], utilizes an adaptive convolution kernel to realize object-based indexing and image retrieval. The main drawbacks of these methods are their inaccurate identification of relevant regions and the extensive effort required from the user to provide feedback in every iteration.

Over the past few years, implicit RF has received particular attention in the image retrieval community. This technology has both advantages and disadvantages. Its advantages are that it captures the user’s feedback in a non-intrusive, time-efficient way and that it is more expressive than explicitly provided feedback. Its drawback is the presence of large amounts of noise in the feedback data. Among the different types of implicit feedback data, eye tracking is of particular importance to image retrieval applications, since it can provide valuable information about which parts of the image the user has observed, as well as cues regarding the relevance of the latter to the query at hand. Most eye tracking methods related to image retrieval have focused on predicting the user’s relevance assessment at the image level. Maiorana [Maiorana 2013] proposed an enhanced CBIR system in which a relevance feedback component based on eye tracking data was integrated. In this system, the gaze points were used to infer regions of interest in the query image, thus allowing for searches based on global or local features. Gaze features such as the total fixation time and the number of fixations were used to weight the relevance of the returned images. The two images with the highest scores were then used as new queries for a new round of retrieval. In [Liang et al. 2010], a region-based image retrieval method was proposed in which the eye tracking data were used to determine the regions of interest, whose importance was weighted with the fixation time. With these importance weights, the contributions of different image regions to the image distance were updated.
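The idea of weighting region contributions by fixation time can be illustrated with a minimal sketch. It is not the formulation of [Liang et al. 2010], which is not given here. It assumes that per-region distances between the query and a candidate image are already available, and that the weights are simply fixation times normalized to sum to one. The function names `fixation_weights` and `weighted_image_distance` are hypothetical.

```python
from typing import List, Sequence


def fixation_weights(fixation_times: Sequence[float]) -> List[float]:
    """Turn per-region fixation times into weights that sum to 1.

    Assumption: plain normalization; the cited method may differ.
    """
    if len(fixation_times) == 0:
        raise ValueError("at least one region is required")
    total = sum(fixation_times)
    if total <= 0:
        # No gaze data recorded: fall back to uniform weights.
        return [1.0 / len(fixation_times)] * len(fixation_times)
    return [t / total for t in fixation_times]


def weighted_image_distance(region_distances: Sequence[float],
                            fixation_times: Sequence[float]) -> float:
    """Combine per-region distances using fixation-time weights."""
    if len(region_distances) != len(fixation_times):
        raise ValueError("one fixation time is needed per region")
    weights = fixation_weights(fixation_times)
    return sum(w * d for w, d in zip(weights, region_distances))


# Two regions: the first was fixated three times longer than the second,
# so its distance dominates the combined image distance.
distance = weighted_image_distance([0.2, 0.8], [300.0, 100.0])
```

With these inputs the first region receives weight 0.75 and the second 0.25, so a region the user looked at longer pulls the overall distance toward its own value.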

The fundamental issue of implicit RF based on eye tracking data is how to discover the user’s relevance assessment of the returned images. In most existing work, gaze features such as the number of times an image was visited, the total time the user spent on an image, the number of fixations, and the total fixation time were used to discover the user’s relevance assessment [Papadopoulos et al. 2014]. In essence, discovering the user’s relevance assessment is a two-class pattern analysis problem, i.e., partitioning the patterns of the eye tracking data into relevant and irrelevant classes. Many recent research results have shown that deep learning (DL) performs excellently, especially in pattern classification, and it has hence attracted more and more attention. LeCun first proposed a set of network models called LeNet to identify handwritten numbers [Lécun et al. 1998]. In 2012, Hinton and Krizhevsky [Krizhevsky et al. 2012] used the convolutional neural network to classify images in ImageNet. Zhang [Zhang et al.] proposed an image retrieval algorithm that used the characteristics of the CNN fully connected layer and the group cross-index. To better discover the user’s retrieval intention, and considering the strong pattern classification power of deep learning, a deep neural network is employed to classify the eye tracking data into relevant and irrelevant patterns, and the result is then used to update the retrieval strategy. Experimental results show that our method achieves results comparable to those of the explicit RF method and outperforms the implicit RF method in which the fixation time was used to re-weight the importance of different regions [Liang et al. 2010].
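As an illustration of the hand-crafted gaze features mentioned above, the following sketch aggregates a chronological fixation sequence into per-image counts. The `Fixation` record, its field names, and the millisecond unit are assumptions made for this example and are not taken from any cited system. The total time spent on an image is omitted because it would also require the time between fixations.

```python
from dataclasses import dataclass
from typing import Dict, Iterable


@dataclass
class Fixation:
    image_id: str       # hypothetical identifier of the fixated image
    duration_ms: float  # hypothetical fixation duration in milliseconds


def gaze_features(fixations: Iterable[Fixation]) -> Dict[str, Dict[str, float]]:
    """Aggregate per-image visit count, fixation count, and total fixation time.

    A visit is counted whenever the gaze enters an image that differs
    from the image of the previous fixation.
    """
    features: Dict[str, Dict[str, float]] = {}
    previous_image = None
    for fix in fixations:
        entry = features.setdefault(
            fix.image_id,
            {"visits": 0, "fixation_count": 0, "total_fixation_ms": 0.0},
        )
        if fix.image_id != previous_image:
            entry["visits"] += 1
        entry["fixation_count"] += 1
        entry["total_fixation_ms"] += fix.duration_ms
        previous_image = fix.image_id
    return features


# The gaze goes to image "a", moves to "b", then returns to "a".
sequence = [
    Fixation("a", 100.0),
    Fixation("a", 50.0),
    Fixation("b", 200.0),
    Fixation("a", 30.0),
]
features = gaze_features(sequence)
```

Feature vectors of this kind are what a two-class classifier would take as input when labeling each returned image as relevant or irrelevant.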

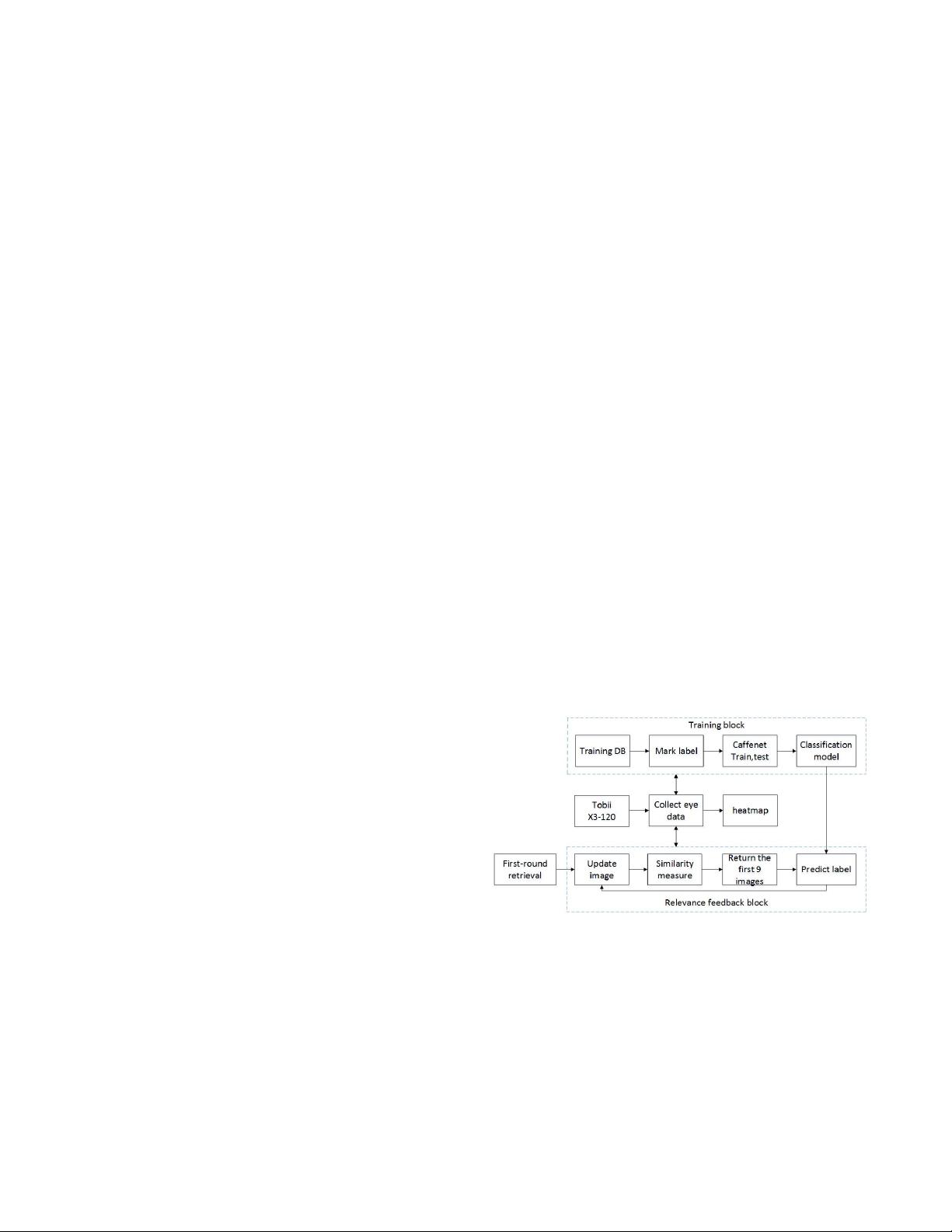

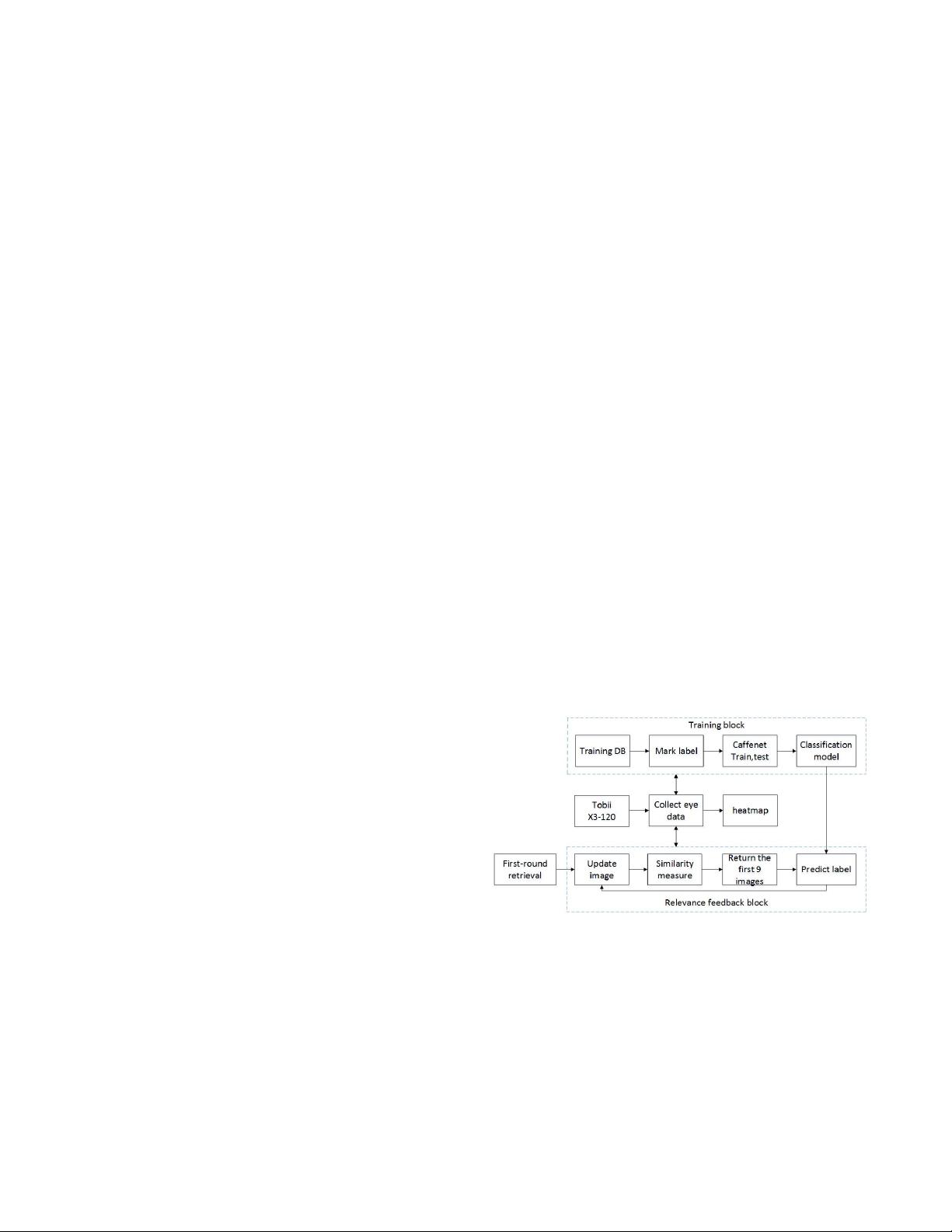

Figure 1: Block diagram of our image retrieval system

3 METHOD

3.1 System Overview

The block diagram of our proposed image retrieval system is shown in Fig. 1. The system has two sub-blocks: the RF training block and the RF-based retrieval block.
