the multi-user location correlation efficiently.

The main contributions of this paper are as follows.

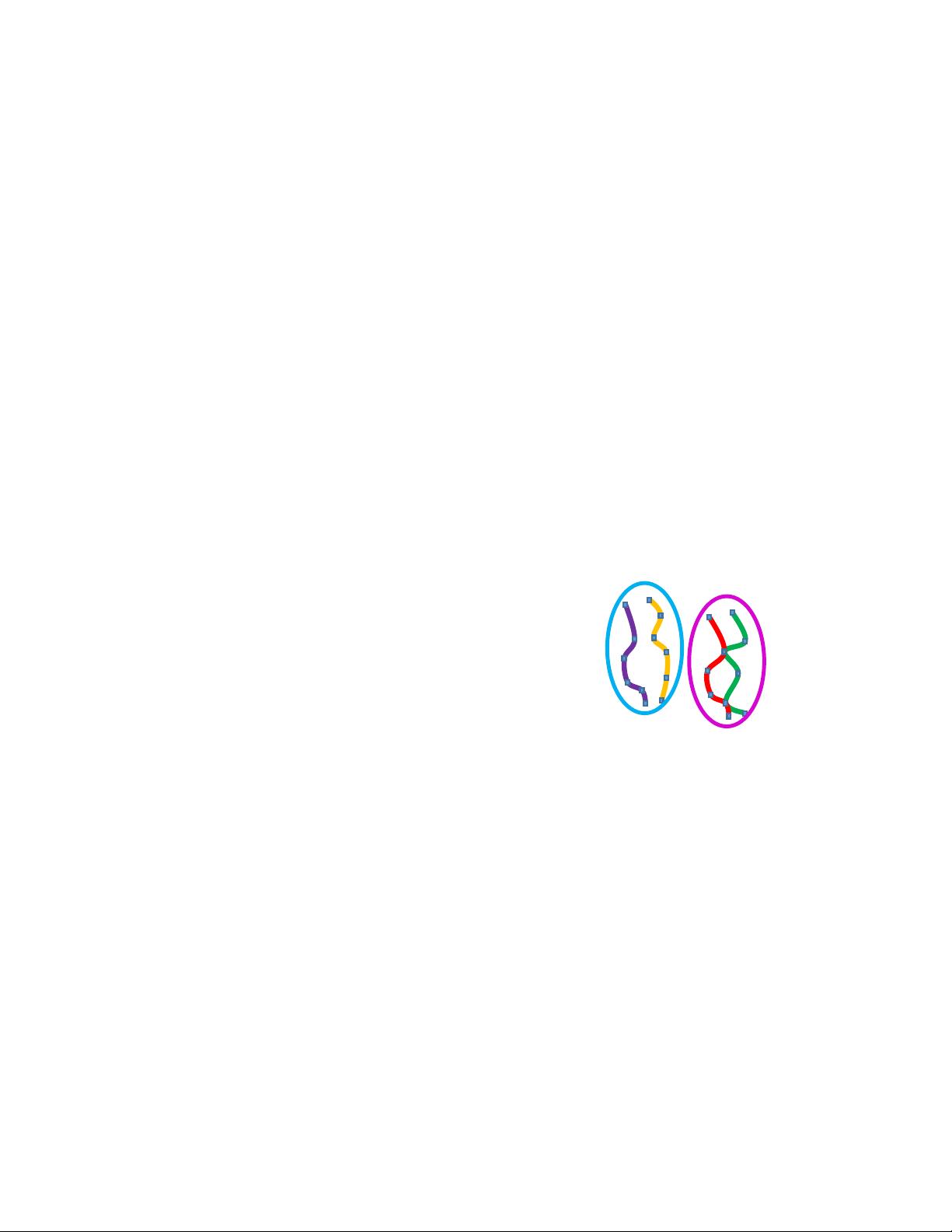

∙ By using HMMs, we obtain the probable trajectories of each user, in preparation for extending differential privacy on private candidate sets to protect the location correlations.

∙ We quantify the location correlation between two users through a similarity measure between their hidden Markov models. With this measure, we can not only analyze historical multi-user location correlations but also predict potential correlations.

∙ We propose a private trajectory releasing mechanism that satisfies differential privacy and protects the location correlations.

∙ The evaluations demonstrate that our approach can achieve differentially private location correlation releasing with high efficiency and utility.

The remainder of this paper is organized as follows. In Section II, we review the related work in the literature. In Section III, we introduce some basic concepts, namely HMMs, the similarity of HMMs, and bounded differential privacy. We then formulate an adversary model and discuss the motivation and basic idea for protecting multi-user location correlation privacy. In Section IV, we present the details of multi-user location correlation protection and a trajectory releasing mechanism. The security analysis, the utility analysis, and the analysis of the adversary’s knowledge are given in Section V. The experimental performance evaluation is presented in Section VI. Finally, Section VII concludes the paper.

II. RELATED WORK

In the literature, most research works on location privacy protection can be summarized into two categories, i.e., location privacy and correlation protection with differential privacy.

A. Location Privacy

There are many works on location privacy protection. To protect users’ privacy, early solutions remove users’ identities or replace them with pseudonyms [1]. However, such solutions cannot meet the requirements of privacy protection in the context of LBSs, because adversaries can combine users’ published locations and related data with background knowledge to de-anonymize users. As a result, pseudonymization is not sufficient to preserve users’ privacy.

Furthermore, 𝑘-anonymity is also used to protect location privacy. Its goal is that the record of one user in the context database cannot be distinguished from those of 𝑘 − 1 other users. The concept of “hiding the locations of users inside a crowd” was proposed and adapted to preserve users’ locations by enabling users to query the LBSs with a spatial area called a “cloak” instead of their precise locations. Several works adopt this solution [2], [3] and try to balance the trade-off between the resulting utility and the offered privacy guarantee, which are quantified by the cloak size and 𝑘, respectively. For example, the performance of a 𝑘-anonymity approach depends on the quadtrees that produce the smallest cloak. Moreover, researchers have extended this model to allow users to customize their desired privacy levels.
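The cloaking idea described above can be illustrated with a minimal Python sketch. It is not the algorithm of [2] or [3]; it only assumes a simple quadtree descent over a square region and returns the smallest quadtree cell that contains the querying user together with at least 𝑘 users in total. The function name `cloak`, the `bounds` default, and the `max_depth` parameter are hypothetical choices made for illustration.

```python
from typing import List, Tuple

Point = Tuple[float, float]
Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max)


def _inside(p: Point, box: Box) -> bool:
    """Half-open containment test, so each point falls in exactly one quadrant."""
    x0, y0, x1, y1 = box
    return x0 <= p[0] < x1 and y0 <= p[1] < y1


def cloak(target: Point, users: List[Point], k: int,
          bounds: Box = (0.0, 0.0, 1.0, 1.0), max_depth: int = 16) -> Box:
    """Return the smallest quadtree cell holding `target` and at least k users.

    Assumption: `users` includes the target's own location.
    """
    current = [p for p in users if _inside(p, bounds)]
    if not _inside(target, bounds) or len(current) < k:
        raise ValueError("cannot build a k-anonymous cloak inside bounds")

    box = bounds
    for _ in range(max_depth):
        x0, y0, x1, y1 = box
        mx, my = (x0 + x1) / 2, (y0 + y1) / 2
        # Pick the quadrant of the current cell that contains the target.
        child = (x0 if target[0] < mx else mx,
                 y0 if target[1] < my else my,
                 mx if target[0] < mx else x1,
                 my if target[1] < my else y1)
        members = [p for p in current if _inside(p, child)]
        if len(members) < k:
            break  # descending further would violate k-anonymity
        box, current = child, members
    return box
```

A larger 𝑘 stops the descent earlier and yields a larger cloak, which is the utility and privacy trade-off mentioned above.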

In addition, 𝑘-anonymity has been introduced to protect online distributed LBSs. For instance, Casper [4] is a privacy-aware query processing framework that processes users’ queries based on their cloaked, i.e., anonymous, locations. The critical disadvantage of the spatio-temporal variant of 𝑘-anonymity is that it requires a trusted third party to act as the anonymity server: when users request an LBS, the third party obtains their location information and obfuscates it. Moreover, if adversaries have background knowledge and prior knowledge about users, 𝑘-anonymity may not prevent them from inferring the users’ private information.

To eliminate this drawback, location privacy quantification has been proposed. Under this approach, the estimation error of adversaries performing inference attacks is measured [5], [6]. For example, the main intention of an inference attack is to infer the observed users’ true locations or to re-identify the users based on published locations. However, this approach requires a strong assumption about the knowledge available to the adversaries.

Some approaches have also been proposed for preserving location privacy with differential privacy [7], [8], [15], [16]. The first approach deals with the differentially private computation of points of interest from a geographical database populated with the mobility traces of users, based on a quadtree algorithm [7]. Another approach considers a database of commuting patterns, in which each record represents a user with an origin and a destination. Instead of releasing the original location information, synthetic location information that simulates it is generated. Recently, the concept of geo-indistinguishability [11] was proposed as an extension of differential privacy, but it does not consider correlations among multiple users. Markov models are used to model users’ mobility and to infer user locations or trajectories [12], [13]. Several works consider temporal correlations among multiple locations of a single user [10], [14], [15]. These technologies can protect location privacy to some extent, but they cannot protect it well [9], [10]: most of them do not consider the correlations among multiple users, and these correlations will be exposed by inference attacks.
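To make the geo-indistinguishability notion [11] mentioned above more concrete, the following is a minimal Python sketch of the planar Laplace mechanism that is commonly used to achieve it. It is an illustration under stated assumptions, not the mechanism proposed in this paper: locations are assumed to be planar coordinates in arbitrary distance units, and the function name `planar_laplace` and its parameters are hypothetical. The noise radius follows a Gamma distribution with shape 2 and scale 1/ε, which is the radial distribution of a two-dimensional Laplace density.

```python
import math
import random
from typing import Tuple


def planar_laplace(location: Tuple[float, float], epsilon: float,
                   rng: random.Random) -> Tuple[float, float]:
    """Perturb a planar location with two-dimensional Laplace noise.

    `epsilon` is the privacy parameter per unit of distance (assumed > 0).
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    # Direction of the noise: uniform on the circle.
    theta = rng.uniform(0.0, 2.0 * math.pi)
    # Distance of the noise: Gamma(shape=2, scale=1/epsilon).
    radius = rng.gammavariate(2.0, 1.0 / epsilon)
    return (location[0] + radius * math.cos(theta),
            location[1] + radius * math.sin(theta))
```

Because the noise is drawn independently for each reported location, a sketch like this does nothing about correlations among multiple users, which is the limitation noted above.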

B. Correlation Protection with Differential Privacy

Differential privacy has been studied for several years, and many variants and generalizations have been proposed. However, applying differential privacy to location privacy protection has not been studied in depth. Recently, several works have applied differential privacy to release aggregate information from large amounts of locations, trajectories, or spatiotemporal data [17]–[20]. In addition, an extension of differential privacy for location protection has been proposed [15]. This method defines a 𝛿-location set over the continual locations of a single user, whose locations are temporally correlated. In order to protect correlated data, there are several works about correlation protection with
