commonly, dynamic voltage and frequency scaling (DVFS)

[22], [23] is implemented at the operating system level to

manage power and to regulate the frequency and voltage of

CPUs. Generally speaking, two DVFS techniques exist for

multi-core systems: One is global DVFS, which scales the fre-

quency and voltage of all the cores simultaneously, and the

other is local DVFS, which regulates the frequency and volt-

age on a per core basis [7]. Experiments indicated that local

DVFS could achieve better performance than global DVFS

[8], [9], but it is more complicated.

The energy efficiency of embedded systems has been stud-

ied by a number of researchers. Because the architectures and

applications for embedded systems are quite diverse,

researchers have needed to establish various theories to study

the problem of energy efficiency in these different systems. In

[26], the authors investigated the tradeoff between inter-appli-

cation concurrency with performance and power consump-

tion under various system configurations. They proposed a

runtime optimization approach to achieve energy efficiency,

implemented on a real platform called Odroid XU- 3. In [27],

the minimum energy consumption was obtained based on a

running model generated through regression-based learning

of energy/performance trade-offs between different comput-

ing resources in the system. In [28], to support application

quality of service and to save energy, an energy-efficient soft

real-time CPU scheduler for mobile devices was proposed

that primarily ran multimedia applications.

In addition to embedded computing, energy efficiency

also plays an important role in cloud computing, which is

marked by huge and increasing power consumption. The

techniques for achieving energy efficiency used in multi-

core embedded systems and cloud computing systems are

similar. Therefore, they could learn from each other. In [10],

the author used DVFS and workload dependent dynamic

power management to improve system performance and to

reduce energy consumption. In [11], based on a cooperative

game-theoretical approach and DVFS technology, the

authors investigated the problem of allocating tasks onto a

computational grid, with the aim of minimizing simulta-

neously the energy consumption and the makespan. In [12],

the authors also employed a game-theoretic approach to

study the problem of minimizing energy consumption in a

distributed system.

An efficient load balancing strategy is a key component

to building out any distributed architecture. The complexi-

ties are reflected in the extensive body of literature on the

topic, as exemplified by the excellent reference collection

given in [13]. The purpose of load balancing is to assign

tasks appropriately to nodes in terms of the workload and

computing power of each node. In [15], researchers pro-

posed a fault tolerant, hybrid load balancing strategy for a

heterogeneous grid computing environment. In [16], the

authors addressed the problem of optimal load balancing of

tasks when power is constrained.

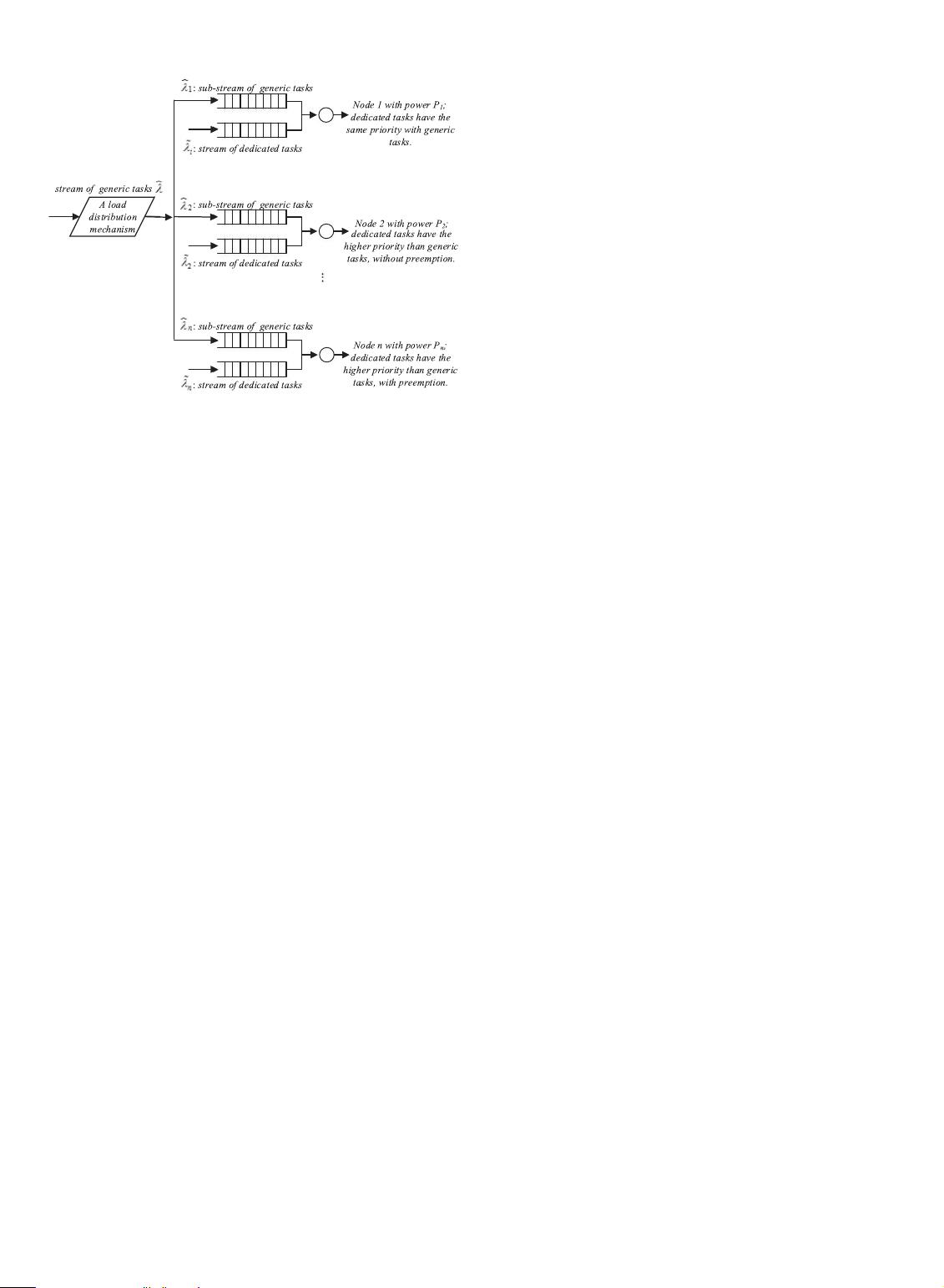

The queueing discipline has also been studied widely. In

[14], two types of cases were considered, namely, systems

with and without special tasks. The authors addressed the

problem of minimizing the average response time of generic

tasks. Both [17] and [18] studied optimal load distribution in

heterogeneous distributed computer systems with both

generic and dedicated applications. In [17], each node was

modeled as an M/G/1 non-preemptive queuing system,

and was applied to several types of dedicated tasks, while

in [18], each node was treated as an M/M/1 non-

preemptive queuing system. The authors of [19] assumed

that each node was preloaded with dedicated tasks, and

three conditions were taken into account: Dedicated tasks

without priority, and prioritized dedicated tasks with and

without preemption. Each node was treated as an M/G/1

queueing system, and the authors focused on the problem

of optimal load balancing of general tasks.

In distributed heterogeneous embedded systems, in

order to achieve energy efficiency and effective utilization

of resources, it is necessary to consider the combination of

node heterogeneity, applications urgency (priority of tasks,

which might be different for each node), energy efficiency,

and the idle CPU state. To the best of our knowledge, pres-

ent studies on load balancing and energy efficiency have

not considered fully all of these factors together.

3SYSTEM MODEL AND PROBLEM FORMULATION

3.1 Power Model

The power dissipation of an embedded processor core

mainly consists of three parts, namely, dynamic, static, and

short-circuits consumption, among which dynamic power

consumption is the dominant component. The dynamic

power consumption can be expressed by P ¼ kCV

2

f where

k is an activity factor, C is the loading capacitance, V is the

supply voltage, and f is the clock frequency. Given that

s / f and f / V , then P

i

/ s

a

i

i

, where a

i

is around 3 [21].

For ease of discussion, we model the power allocated to pro-

cessor core with speed s

i

as s

i

a

i

.

The core busy-power is different from core idle-power. There

are implied energy-frequency and frequency-performance

relations. In this paper, the performance (speed) is defined

as the number of instructions a core can perform per second

(IPS). Therefor, the dynamic power is s

i

a

i

when the core is

working at frequency f

i

and the corresponding speed is s

i

.

When a core is not working, because there are no instruc-

tions to perform, it is inappropriate to define the core speed

directly. In that case, our research focuses on the power con-

sumption rather than core speed. Therefore, when the core

is idle, we assume the speed to be s

Ii

, corresponding to a

frequency f

i

, such that s

Ii

a

i

equals the actual power of the

core, i.e., s

Ii

a

i

¼ CV

i

2

f

i

. A processor core still consumes

some amount of basic power P

i

that includes static power

dissipation, short circuit power dissipation, and other lea-

kages and wasted power. Therefore, the power model can

be formulated as

P

i

¼ðs

a

i

i

þ P

i

Þr

i

þðs

a

i

Ii

þ P

i

Þð1 r

i

Þ

¼ r

i

s

a

i

i

þ 1 r

i

ðÞs

a

i

Ii

þ P

i

¼

b

i

b

r þ

e

i

e

r

i

s

a

i

1

i

þ 1

b

i

b

r þ

e

i

e

r

i

s

a

i

i

!

s

a

i

Ii

þ P

i

:

(1)

3.2 Queueing Model

The queueing model is used to formulate and study the prob-

lem of power allocation and load balancing in a heteroge-

neous distributed embedded environments. Taking n as the

number of heterogeneous embedded computing nodes

1520 IEEE TRANSACTIONS ON COMPUTERS, VOL. 66, NO. 9, SEPTEMBER 2017

我的内容管理

展开

我的内容管理

展开

我的资源

快来上传第一个资源

我的资源

快来上传第一个资源

我的收益 登录查看自己的收益

我的收益 登录查看自己的收益 我的积分

登录查看自己的积分

我的积分

登录查看自己的积分

我的C币

登录后查看C币余额

我的C币

登录后查看C币余额

我的收藏

我的收藏  我的下载

我的下载  下载帮助

下载帮助

前往需求广场,查看用户热搜

前往需求广场,查看用户热搜

信息提交成功

信息提交成功