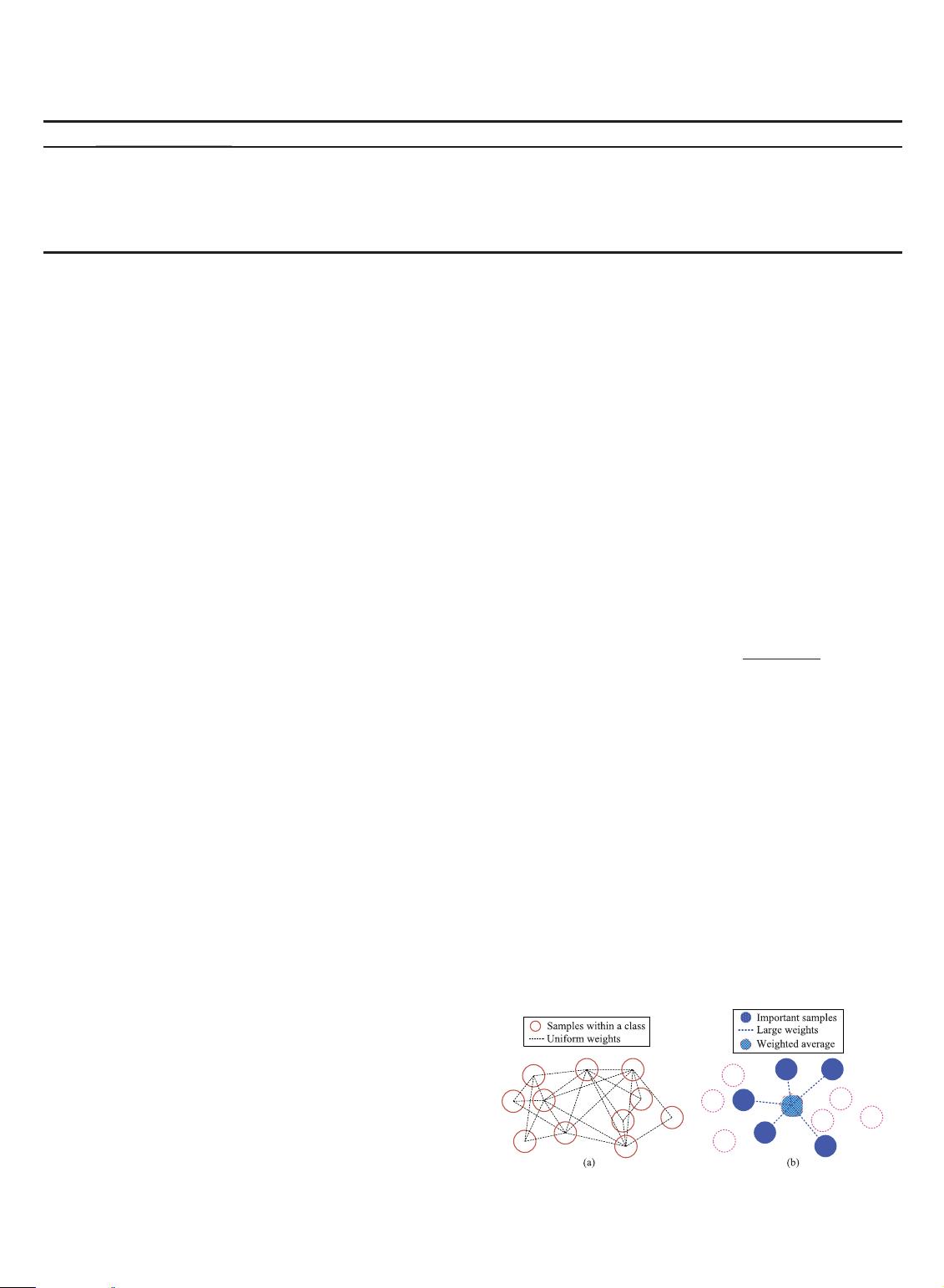

To deal with the measurement of contribution to building

the discriminant subspace for each instance, new approaches

based on sample weighting, instead of relation weighting, will

lead to high efficiency. For example, importance sam-

pling [16] plays an important role in correcting the bias intro-

duced by sampling from the wrong distribution, and hence

attract a great deal of attention these days in the learning

community [17], since it can be used for various statistical

data processing tasks such as variable selection [18] and

transfer learning [19]. However, the ground truth distribu-

tion in practice may be unknown, even though the IID is

used for simplifying the theoretical assumptions. In other

words, independence is often an assumption, rather than

observations-based deduction. Moreover, the IID assump-

tion cannot be strictly justified in the real-world problems,

and many learning applications such as time sequence analy-

sis and speech recognition are inherently temporal in nature

and, consequently, do not follow the IID process. Therefore,

learning from observations of unknown distributions is

very meaningful in both the machine learning and statistics

inference literature.

Inspired by the idea of importance sampling, we study

the sample weighting method for discriminant subspace

learning, and our proposal is formulated by an importance

weighted graph model. As shown later, the new algorithm

can treat the outlier problem well, thus it is called Discrimi-

nation with Outlier Suppressing (DOS) in this paper.

Notice that the high dimension and the relatively few

samples can usually lead to over-fitting in the training

procedure then, specially, the sample covariance matrix can

be rank-deficient and irreversible [20], [21]. It is called small

sample size (SSS) problem in data mining and pattern rec-

ognition [20], [22], [23]. Thus a new regularization method

is proposed to attain the generalized eigenvalue decomposi-

tion-based solutions and deal with the subspace of zero-

eigenvalues. Although some spectrum-based regularization

methods have been proposed to deal with the SSS problem,

such as [21], [24], [25], there are still some drawbacks in the

application practice. In [21], only the classical and linear

Fisher’s LDA is well treated, thus it has limited application

scope in solving generalized criteria. On the contrary, our

method can be applied to both linear and nonlinear objec-

tive criteria, and it can deal with more general discriminant

learning problem. Our method is also distinctive to that

of [25], which only considers the standard kernel discrimi-

nant analysis (KDA), and the zero-subspace of S

w

which

may has important discriminant information is not well

addressed. However, our method uses the estimated con-

stant eigenvalues to substitute instead of discarding the

zero-subspace, and will outperform the method of [25] espe-

cially in the noisy and outlier cases.

Several contributions of this paper are shown here:

1) Samples are assigned weights according to their

approximated contributions to building the optimal

subspace. Parts of the samples having large weights

are considered as active instances. The negative

influence of outliers will be shrunk by this method.

2) Subject-specifical averages and variances are estimated

for different classes, and then a linear discriminant

criterion is proposed for subspace learning. Notice

that the sample weighting approach can be applied to

other methods related to covariance estimation.

3) The linear discriminant criterion is extended to the

nonlinear case by using a positive semi-definite Mer-

cer kernel. To deal with the possible rank-deficient

problem, a new regularization algorithm is proposed

for the sample weighting-based discriminant models.

In summary, a two-track way for improving the robust-

ness of subspace methods is presented. Both sample weight-

ing and eigen-regularization strategies play an important

role in compressing the influences of noise and outliers.

Also notice that the sample weighting scheme has

already been applied to support vector machine (SVM) and

its variants. By weighting each instance, SVM obtains good

generalization ability and classification accuracy. However,

we stress that SVM is a classifier and thus it cannot be used

to reduce dimensions. As we know, the predictive features

of the high-dimensional data, such as facial images and

gene micro-arrays, are often embedded in a lower-dimen-

sional subspace. Therefore, direct classification without

effective dimension reduction cannot be optimal. In Sec-

tion 5, we will compare the final results of our recognition

system with those of SVM for fairness.

The rest of this paper is organized as follows. The

weighted average and the weighted covariance matrix are

calculated according to the importance sampling, and then a

linear subspace learning method and its nonlinear extension

are proposed in Section 2. The main differences from some

closely related methods are also presented. The new regulari-

zation algorithm for the kernel-based method is presented in

Section 3. Parameter selection and algorithm performance

evaluation are presented in Section 4. Extensive experiment

results of synthetic data and real data classification are shown

in Section 5, in which the new algorithm is compared with

some other related methods. Section 6 concludes this paper.

2DISCRIMINANT SUBSPACE LEARNING

We now define the contribution values to calculate the

within-class and between-class scatters, and then propose a

supervised criterion to obtain the optimal subspace. Some

important notations are summarized into Table 1 for

convenience.

2.1 Basic Statistics Estimation

Intuitively, the favorable expectation will be efficiently

estimated via their respective contribution weights to the

intrinsic discriminant structure. This motivation will give

preferences to a small proportion of samples which are

viewed as important in subspace learning, and determine a

subspace for more discriminant prediction.

To show how the statistics can be used as the basis for

our new algorithm, the density function pðxÞ is first evalu-

ated in terms of graph Laplacian. We can then express the

expectation in the form of a finite sum over the cluster.

Suppose that there are n data, and each of them repre-

sents a sample x

i

in an m-dimensional space (1 i n).

The expectation

x and covariance VðxÞ are estimated by

x ¼

Z

xpðxÞdx

X

n

i¼1

x

i

pðx

i

Þ;

(1)

REN ET AL.: SAMPLE WEIGHTING: AN INHERENT APPROACH FOR OUTLIER SUPPRESSING DISCRIMINANT ANALYSIS 3071

我的内容管理

展开

我的内容管理

展开

我的资源

快来上传第一个资源

我的资源

快来上传第一个资源

我的收益 登录查看自己的收益

我的收益 登录查看自己的收益 我的积分

登录查看自己的积分

我的积分

登录查看自己的积分

我的C币

登录后查看C币余额

我的C币

登录后查看C币余额

我的收藏

我的收藏  我的下载

我的下载  下载帮助

下载帮助

前往需求广场,查看用户热搜

前往需求广场,查看用户热搜

信息提交成功

信息提交成功