没有合适的资源?快使用搜索试试~ 我知道了~

温馨提示

2007 Conference on Analysis of Algorithms, AofA 07 DMTCS proc. AH, 2007, 127–146HyperLogLog: the analysis of a near-optimal cardinality estimation algorithmPhilippe Flajolet1 and Éric Fusy1 and Olivier Gandouet2 and Frédéric Meunier11Algorithms Project, INRIA–Rocquencourt, F78153 Le Chesnay (France) 2LIRMM, 161 rue Ada, 34392 Montpellier (France)This extended abstract describes and analyses a near-optimal probabilistic algorithm, HYPERLOGLOG, dedicated to estimating the number of distinct elemen

资源推荐

资源详情

资源评论

2007 Conference on Analysis of Algorithms, AofA 07 DMTCS proc. AH, 2007, 127–146

HyperLogLog: the analysis of a near-optimal

cardinality estimation algorithm

Philippe Flajolet

1

and Éric Fusy

1

and Olivier Gandouet

2

and Frédéric

Meunier

1

1

Algorithms Project, INRIA–Rocquencourt, F78153 Le Chesnay (France)

2

LIRMM, 161 rue Ada, 34392 Montpellier (France)

This extended abstract describes and analyses a near-optimal probabilistic algorithm, HYPERLOGLOG, dedicated to

estimating the number of distinct elements (the cardinality) of very large data ensembles. Using an auxiliary memory

of m units (typically, “short bytes”), HYPERLOGLOG performs a single pass over the data and produces an estimate

of the cardinality such that the relative accuracy (the standard error) is typically about 1.04/

√

m. This improves on

the best previously known cardinality estimator, LOGLOG, whose accuracy can be matched by consuming only 64%

of the original memory. For instance, the new algorithm makes it possible to estimate cardinalities well beyond 10

9

with a typical accuracy of 2% while using a memory of only 1.5 kilobytes. The algorithm parallelizes optimally and

adapts to the sliding window model.

Introduction

The purpose of this note is to present and analyse an efficient algorithm for estimating the number of

distinct elements, known as the cardinality, of large data ensembles, which are referred to here as multisets

and are usually massive streams (read-once sequences). This problem has received a great deal of attention

over the past two decades, finding an ever growing number of applications in networking and traffic

monitoring, such as the detection of worm propagation, of network attacks (e.g., by Denial of Service),

and of link-based spam on the web [3]. For instance, a data stream over a network consists of a sequence

of packets, each packet having a header, which contains a pair (source–destination) of addresses, followed

by a body of specific data; the number of distinct header pairs (the cardinality of the multiset) in various

time slices is an important indication for detecting attacks and monitoring traffic, as it records the number

of distinct active flows. Indeed, worms and viruses typically propagate by opening a large number of

different connections, and though they may well pass unnoticed amongst a huge traffic, their activity

becomes exposed once cardinalities are measured (see the lucid exposition by Estan and Varghese in [11]).

Other applications of cardinality estimators include data mining of massive data sets of sorts—natural

language texts [4, 5], biological data [17, 18], very large structured databases, or the internet graph, where

the authors of [22] report computational gains by a factor of 500

+

attained by probabilistic cardinality

estimators.

1365–8050

c

2007 Discrete Mathematics and Theoretical Computer Science (DMTCS), Nancy, France

128 P. Flajolet, É. Fusy, O. Gandouet, F. Meunier

Clearly, the cardinality of a multiset can be exactly determined with a storage complexity essentially

proportional to its number of elements. However, in most applications, the multiset to be treated is far too

large to be kept in core memory. A crucial idea is then to relax the constraint of computing the value n of

the cardinality exactly, and to develop probabilistic algorithms dedicated to estimating n approximately.

(In many practical applications, a tolerance of a few percents on the result is acceptable.) A whole range

of algorithms have been developed that only require a sublinear memory [2, 6, 10, 11, 15, 16], or, at worst

a linear memory, but with a small implied constant [24].

All known efficient cardinality estimators rely on randomization, which is ensured by the use of hash

functions. The elements to be counted belonging to a certain data domain D, we assume given a hash

function, h : D → {0, 1}

∞

; that is, we assimilate hashed values to infinite binary strings of {0, 1}

∞

, or

equivalently to real numbers of the unit interval. (In practice, hashing on 32 bits will suffice to estimate

cardinalities in excess of 10

9

; see Section 4 for a discussion.) We postulate that the hash function has been

designed in such a way that the hashed values closely resemble a uniform model of randomness, namely,

bits of hashed values are assumed to be independent and to have each probability

1

2

of occurring—

practical methods are known [20], which vindicate this assumption, based on cyclic redundancy codes

(CRC), modular arithmetics, or a simplified cryptographic use of boolean algebra (e.g., sha1).

The best known cardinality estimators rely on making suitable, concise enough, observations on the

hashed values h(M) of the input multiset M, then inferring a plausible estimate of the unknown cardi-

nality n. Define an observable of a multiset S ≡ h(M) of {0, 1}

∞

strings (or, equivalently, of real [0, 1]

numbers) to be a function that only depends on the set underlying S, that is, a quantity independent of

replications. Then two broad categories of cardinality observables have been studied.

— Bit-pattern observables: these are based on certain patterns of bits occurring at the beginning of

the (binary) S-values. For instance, observing in the stream S at the beginning of a string a bit-

pattern 0

ρ−1

1 is more or less a likely indication that the cardinality n of S is at least 2

ρ

. The

algorithms known as Probabilistic Counting, due to Flajolet-Martin [15], together with the more

recent LOGLOG of Durand-Flajolet [10] belong to this category.

— Order statistics observables: these are based on order statistics, like the smallest (real) values, that

appear in S. For instance, if X = min(S), we may legitimately hope that n is roughly of the order

of 1/X, since, as regards expectations, one has E(X) = 1/(n + 1). The algorithms of Bar-Yossef

et al. [2] and Giroire’s MINCOUNT [16, 18] are of this type.

The observables just described can be maintained with just one or a few registers. However, as such,

they only provide a rough indication of the sought cardinality n, via log

2

n or 1/n. One difficulty is due

to a rather high variability, so that one observation, corresponding to the maintenance of a single variable,

cannot suffice to obtain accurate predictions. An immediate idea is then to perform several experiments

in parallel: if each of a collection of m random variables has standard deviation σ, then their arithmetic

mean has standard deviation σ/

√

m, which can be made as small as we please by increasing m. That

simplistic strategy has however two major drawbacks: it is costly in terms of computation time (we would

need to compute m hashed values per element scanned), and, worse, it would necessitate a large set of

independent hashing functions, for which no construction is known [1].

The solution introduced in [15] under the name of stochastic averaging, consists in emulating the

effect of m experiments with a single hash function. Roughly speaking, we divide the input stream

h(M) into m substreams, corresponding to a partition of the unit interval of hashed values into [0,

1

m

[,

HyperLogLog: analysis of a near-optimal cardinality algorithm 129

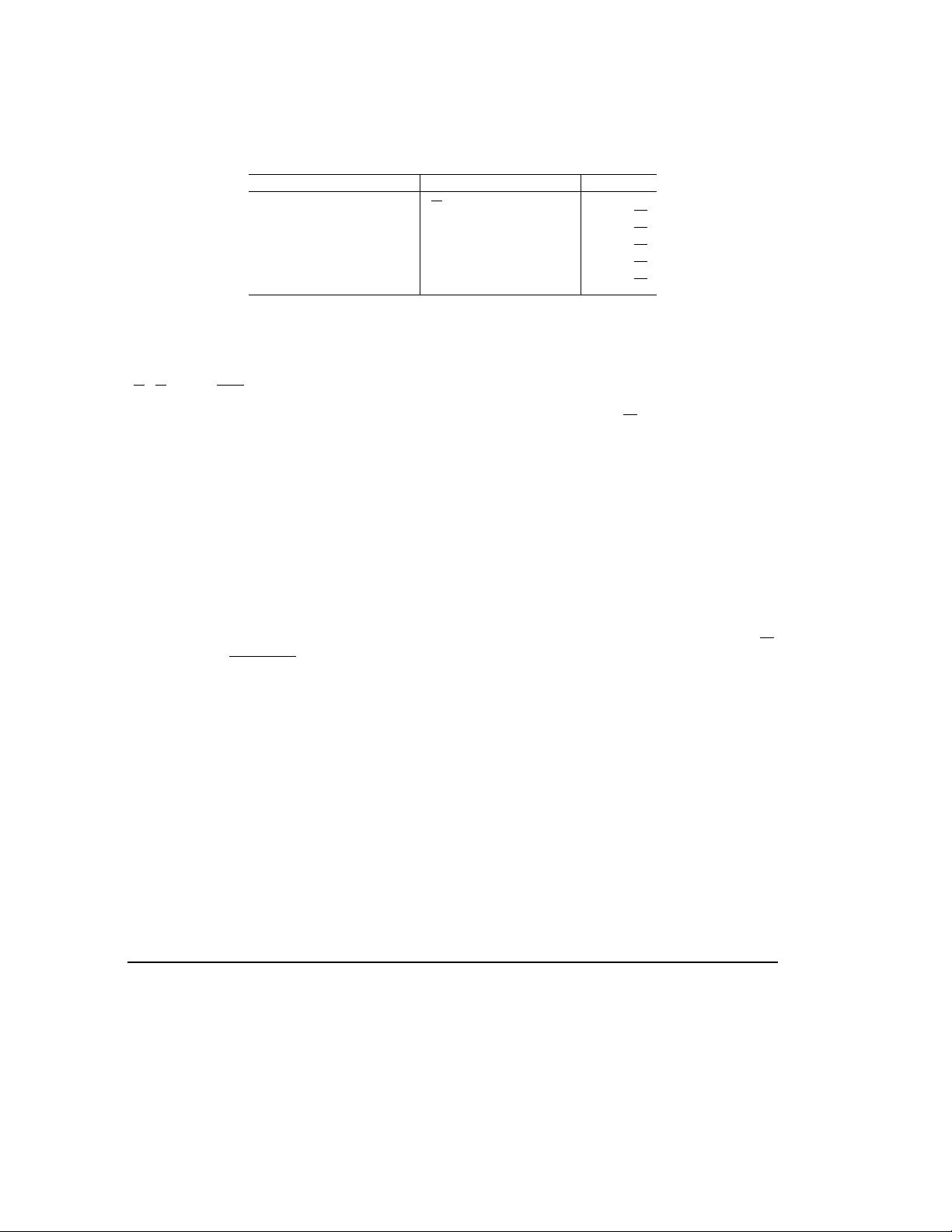

Algorithm Cost (units) Accuracy

Hit Counting [24]

1

10

N bits ≈2%

Adaptive Sampling [12] m words (≈32 bits) 1.20/

√

m

Probabilistic Counting [15] m words (≤32 bits) 0.78/

√

m

MINCOUNT [2, 6, 16, 18] m words (≤32 bits) 1.00/

√

m

LOGLOG [10] m bytes (≤5 bits) 1.30/

√

m

HYPERLOGLOG m bytes (≤5 bits) 1.04/

√

m

Fig. 1: A comparison of major cardinality estimators for cardinalities ≤ N: (i) algorithms; (ii) memory complexity

and units (for N ≤ 10

9

); (iii) relative accuracy.

[

1

m

,

2

m

[, . .. , [

m−1

m

, 1]. Then, one maintains the m observables O

1

, . . . , O

m

corresponding to each of

the m substreams. A suitable average of the {O

j

} is then expected to produce an estimate of cardinalities

whose quality should improve, due to averaging effects, in proportion to 1/

√

m, as m increases. The

benefit of this approach is that it requires only a constant number of elementary operations per element of

the multiset M (as opposed to a quantity proportional to m), while only one hash function is now needed.

The performances of several algorithms are compared in Figure 1; see also [13] for a review. HYPER-

LOGLOG, described in detail in the next section, is based on the same observable as LOGLOG, namely

the largest ρ value obtained, where ρ(x) is the position of the leftmost 1-bit in binary string x. Stochastic

averaging in the sense above is employed. However, our algorithm differs from standard LOGLOG by its

evaluation function: its is based on harmonic means, while the standard algorithm uses what amounts to

a geometric mean

1

. The idea of using harmonic means originally drew its inspiration from an insight-

ful note of Chassaing and Gérin [6]: such means have the effect of taming probability distributions with

slow-decaying right tails, and here they operate as a variance reduction device, thereby appreciably in-

creasing the quality of estimates. Theorem 1 below summarizes our main conclusions to the effect that the

relative accuracy (technically, the standard error) of HYPERLOGLOG is numerically close to β

∞

/

√

m,

where β

∞

=

√

3 log 2 − 1

.

= 1.03896. The algorithm needs to maintain a collection of registers, each

of which is at most log

2

log

2

N + O(1) bits, when cardinalities ≤ N need to be estimated. In particular,

HYPERLOGLOG achieves an accuracy matching that of standard LOGLOG by consuming only 64% of

the corresponding memory. As a consequence, using m = 2048, hashing on 32 bits, and short bytes of 5

bit length each: cardinalities till values over N = 10

9

can be estimated with a typical accuracy of 2%

using 1.5kB (kilobyte) of storage.

The proofs base themselves in part on techniques that are now standard in analysis of algorithms,

like poissonization, Mellin transforms, and saddle-point depoissonization. Some nonstandard problems

however present themselves due to the special nonlinear character of harmonic means, so that several

ingredients of the analysis are not completely routine.

1 The HYPERLOGLOG algorithm

The HYPERLOGLOG algorithm is fully specified in Figure 2, the corresponding program being discussed

later, in Section 4. The input is a multiset M of data items, that is, a stream whose elements are read

sequentially. The output is an estimate of the cardinality, defined as the number of distinct elements

1

The paper [10] also introduces a variant called SUPERLOGLOG, which attempts to achieve variance reduction by censoring

extreme data. It has however the disadvantage of not being readily amenable to analysis, as regards bias and standard error.

剩余19页未读,继续阅读

资源评论

weixin_38607088

- 粉丝: 5

- 资源: 921

上传资源 快速赚钱

我的内容管理

展开

我的内容管理

展开

我的资源

快来上传第一个资源

我的资源

快来上传第一个资源

我的收益 登录查看自己的收益

我的收益 登录查看自己的收益 我的积分

登录查看自己的积分

我的积分

登录查看自己的积分

我的C币

登录后查看C币余额

我的C币

登录后查看C币余额

我的收藏

我的收藏  我的下载

我的下载  下载帮助

下载帮助

前往需求广场,查看用户热搜

前往需求广场,查看用户热搜最新资源

- 410.基于SpringBoot的高校科研信息管理系统(含报告).zip

- 附件1.植物健康状态的影响指标数据.xlsx

- Windows 10 1507-x86 .NET Framework 3.5(包括.NET 2.0和3.0)安装包

- Image_1732500699692.png

- Windows 10 21h1-x86 .NET Framework 3.5(包括.NET 2.0和3.0)安装包

- VMware 是一款功能强大的虚拟化软件,它允许用户在一台物理计算机上同时运行多个操作系统

- 31万条全国医药价格与采购数据.xlsx

- SQL注入详解,SQL 注入是一种常见的网络安全漏洞,攻击者通过在输入数据中插入恶意的 SQL 语句,欺骗应用程序执行这些恶意语句,从而获取、修改或删除数据库中的数据,甚至控制数据库服务器

- 用C语言实现哈夫曼编码:从原理到实现的详细解析

- py爱心代码高级粒子!!

资源上传下载、课程学习等过程中有任何疑问或建议,欢迎提出宝贵意见哦~我们会及时处理!

点击此处反馈

安全验证

文档复制为VIP权益,开通VIP直接复制

信息提交成功

信息提交成功