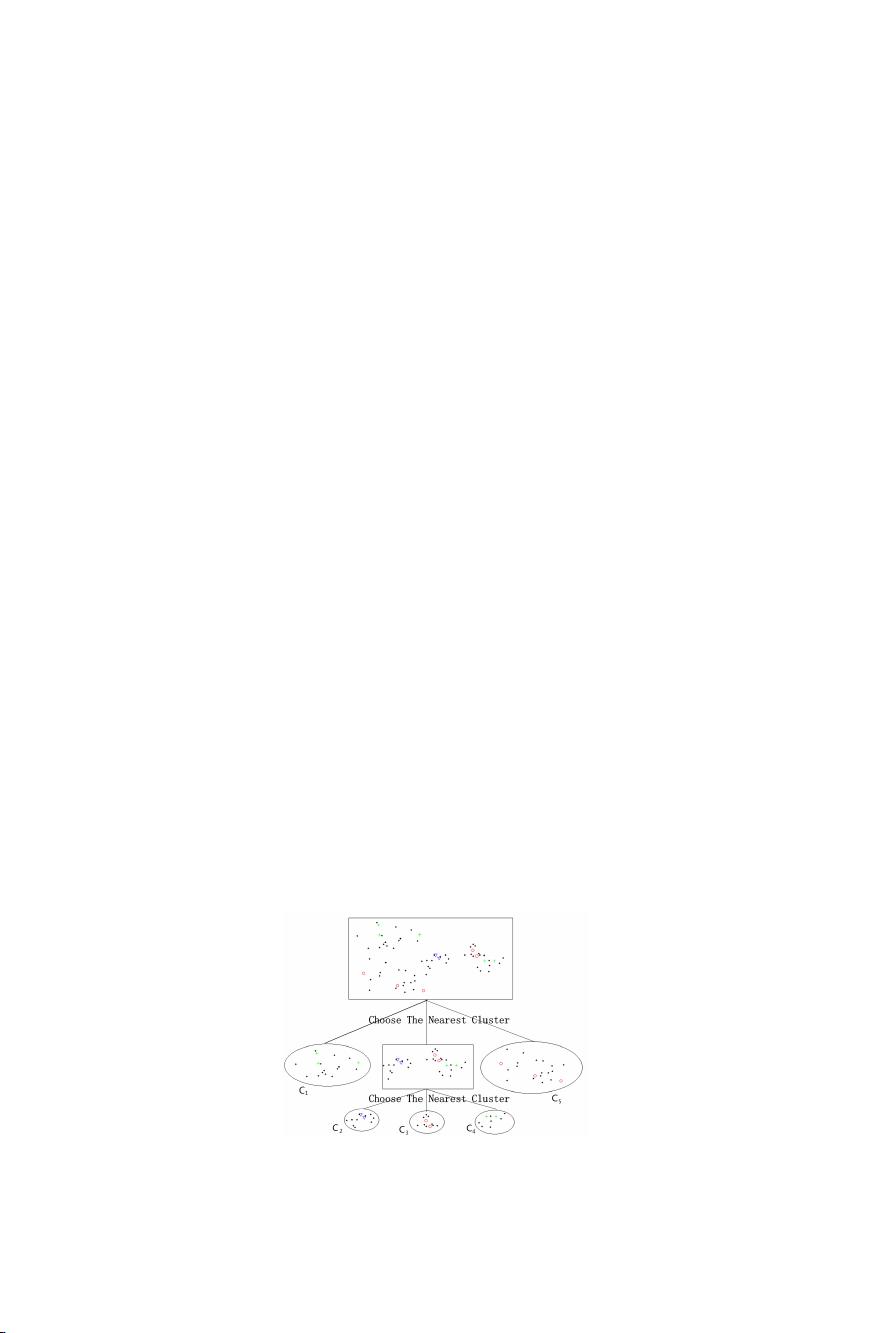

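This paper studies active learning with a semi-supervised cluster tree and a cost-driven strategy for text classification. The core idea is that a learner which actively chooses the data it is trained on can reach higher performance with less data or lower cost. The paper examines the relationship between labeling cost and model performance and proposes a criterion, called cost-performance, to balance the two. Based on this criterion, it proposes a cost-driven active semi-supervised clustering algorithm (cost-driven active SSC) that stops the active learning process automatically. Empirical results show that the method outperforms active SVM and co-EMT.

Active learning is an important branch of machine learning. It lets a model choose, during training, the most informative data points to be labeled, and then uses those points for further training. This differs from traditional passive learning, which usually depends on a large set of labels prepared in advance. The main advantage of active learning is that it can match or exceed the performance of traditional methods with fewer labeled examples, the "less is more" effect.

An active learning process generally consists of three steps: (1) select the most uncertain data points from the unlabeled set, for example those close to a class boundary; (2) have an oracle label these points and add them to the labeled set; (3) retrain the active learner on the enlarged labeled set. Repeating these three steps T times makes the learner progressively stronger.

Most existing active learning algorithms share one weakness: they have no mechanism for stopping the process automatically at the right moment. The value of T usually has to be fixed in advance (for example, T = 50). In some cases this leads to more labeling than is needed, and therefore to unnecessary cost.

To address this, the paper proposes a cost-driven active learning strategy built on the cost-performance criterion. The strategy takes the relationship between labeling cost and model performance into account and looks for the balance point between them. The resulting cost-driven active SSC algorithm decides on its own when to stop the active learning process.

The work matters mainly for the economic efficiency of active learning: a better query and stopping strategy can lower labeling cost while keeping, or even improving, classification performance. This is especially valuable where labeling resources are limited, for example in medical diagnosis, financial risk analysis, and web content filtering.

The paper also draws on semi-supervised learning and cluster trees. Semi-supervised learning combines supervised and unsupervised learning, using a small amount of labeled data together with a large amount of unlabeled data to learn better than either source allows alone. A cluster tree is a data structure commonly used in cluster analysis; it organizes data points by similarity and groups similar points into clusters. In semi-supervised learning, a cluster tree can expose the structure of the data set, which helps the learning algorithm.

Combining active learning with semi-supervised learning has broad application prospects in text classification. Text classification is a core task in natural language processing whose goal is to assign a text to one or more predefined categories. In settings such as sentiment analysis, spam detection, and news categorization, the combined strategy can substantially reduce the cost of manual labeling while preserving classification performance, which raises the practical value of the classification system.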
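The three-step loop described above (select the most uncertain point, ask the oracle, retrain) and the idea of stopping without a fixed T can be sketched roughly as follows. This is a toy illustration only. The 1-D nearest-centroid classifier stands in for the paper's semi-supervised clustering learner, and the stopping rule (stop once accuracy gains no longer exceed an assumed per-label cost for a few rounds) is a hypothetical placeholder, not the paper's cost-performance criterion, which the summary does not define. The names `active_learn`, `label_cost`, and `patience`, and the use of a labeled validation set, are all assumptions made for this example.

```python
def train_centroids(labeled):
    """Fit a toy 1-D nearest-centroid classifier: one mean per class."""
    sums, counts = {}, {}
    for x, y in labeled:
        sums[y] = sums.get(y, 0.0) + x
        counts[y] = counts.get(y, 0) + 1
    return {y: sums[y] / counts[y] for y in sums}


def predict(centroids, x):
    """Return the class whose centroid is nearest to x."""
    return min(centroids, key=lambda y: abs(x - centroids[y]))


def margin(centroids, x):
    """Gap between the two nearest centroids; a small gap means high uncertainty."""
    d = sorted(abs(x - c) for c in centroids.values())
    return d[1] - d[0]


def accuracy(centroids, data):
    return sum(predict(centroids, x) == y for x, y in data) / len(data)


def active_learn(labeled, pool, oracle, validation,
                 max_rounds=50, label_cost=0.01, patience=3):
    """Active learning loop with a hypothetical cost-aware stopping rule."""
    labeled, pool = list(labeled), list(pool)
    model = train_centroids(labeled)
    best, stale, queries = accuracy(model, validation), 0, 0
    while pool and queries < max_rounds:
        # Step 1: pick the unlabeled point closest to the class boundary.
        x = min(pool, key=lambda p: margin(model, p))
        pool.remove(x)
        # Step 2: the oracle labels it; add it to the labeled set.
        labeled.append((x, oracle(x)))
        queries += 1
        # Step 3: retrain on the enlarged labeled set.
        model = train_centroids(labeled)
        acc = accuracy(model, validation)
        # Assumed stopping rule: a label "pays off" only if the accuracy
        # gain over the best score so far exceeds label_cost.
        if acc - best > label_cost:
            best, stale = acc, 0
        else:
            stale += 1
            if stale >= patience:
                break
    return model, queries


# Toy data: the true class boundary sits at x = 3.0.
oracle = lambda x: int(x >= 3.0)
seed = [(0.0, 0), (10.0, 1)]
pool = [i * 0.5 for i in range(1, 20)]
validation = [(x, oracle(x)) for x in (1.0, 2.0, 4.0, 6.0, 8.0, 9.0)]

model, queries = active_learn(seed, pool, oracle, validation)
print("labels requested:", queries)
print("validation accuracy:", accuracy(model, validation))
```

In this sketch the loop ends well before `max_rounds` because additional labels stop improving validation accuracy. A real implementation would replace both the classifier and the stopping test with the learner and the criterion defined in the paper, and would have to account for the cost of labeling the validation set itself.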
