LOCALITY SENSITIVE DICTIONARY LEARNING FOR IMAGE CLASSIFICATION

Bao-Di Liu, Bin Shen*, Xue Li

College of Information and Control Engineering China University of Petroleum Qingdao, 266580, China

Google Research, New York, USA

Department of Electronic Engineering Tsinghua University Beijing, 100084, China

thu.liubaodi@gmail.com, bshen@google.com, xue-li11@mails.tsinghua.edu.cn

ABSTRACT

In this paper, motivated by the superior performance of sparse representation based dictionary learning in image classification, and by the use of nonlinearity to improve image representation, we propose a locality sensitive dictionary learning algorithm with global consistency and smoothness constraints that overcomes the restriction of linearity at relatively low cost. Specifically, the image features are partitioned into several groups in a locality sensitive way, and a global consistency regularizer is embedded into the locality sensitive dictionary learning algorithm. The proposed algorithm efficiently captures complex nonlinear structure. Experimental results on several benchmark data sets demonstrate the effectiveness of the proposed locality sensitive dictionary learning algorithm.

Index Terms: Dictionary Learning, Sparse Representation, Locality Sensitive, Image Classification

1. INTRODUCTION

Image classification, which aims at automatically associating images with semantic labels, has become a significant topic in computer vision. The most common framework for image classification is the discriminative model [1, 2, 3, 4, 5]. It consists of five main steps: image feature extraction, dictionary learning, image feature coding, image pooling, and SVM-based classification [1], among which dictionary learning plays a key role. A dictionary is usually composed of visual words, which encode low-level visual information of images across different classes. Early vocabularies were typically learned by k-means clustering [6]. Yang et al. [2] first introduced a sparse representation based dictionary learning algorithm and obtained state-of-the-art performance in image classification.
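The five-step pipeline above, with a k-means vocabulary as in [6], can be illustrated by a minimal sketch. It assumes toy 2-D descriptors in place of real image features, hard-assignment vector quantization as the coding step, and max pooling as the pooling step; the helper names `kmeans`, `vq_encode`, and `max_pool` are hypothetical, and the final SVM stage is omitted.

```python
import random
from typing import List

Vector = List[float]


def sq_dist(a: Vector, b: Vector) -> float:
    """Squared Euclidean distance between two local descriptors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def kmeans(data: List[Vector], k: int, iters: int = 20, seed: int = 0) -> List[Vector]:
    """Dictionary learning step (assumed): a k-word vocabulary from Lloyd's k-means."""
    rng = random.Random(seed)
    centers = [list(p) for p in rng.sample(data, k)]
    for _ in range(iters):
        groups: List[List[Vector]] = [[] for _ in range(k)]
        for p in data:
            nearest = min(range(k), key=lambda j: sq_dist(p, centers[j]))
            groups[nearest].append(p)
        for j, members in enumerate(groups):
            if members:  # keep the previous center if a cluster becomes empty
                centers[j] = [sum(col) / len(members) for col in zip(*members)]
    return centers


def vq_encode(x: Vector, vocab: List[Vector]) -> Vector:
    """Coding step (assumed hard assignment): one-hot vector of the nearest visual word."""
    nearest = min(range(len(vocab)), key=lambda j: sq_dist(x, vocab[j]))
    return [1.0 if j == nearest else 0.0 for j in range(len(vocab))]


def max_pool(codes: List[Vector]) -> Vector:
    """Pooling step: element-wise max over all local codes of one image."""
    return [max(col) for col in zip(*codes)]


# Toy 2-D "descriptors" drawn from two well-separated groups.
descriptors = [[0.0, 0.1], [0.2, 0.0], [5.0, 5.1], [5.2, 4.9], [0.1, 0.2], [4.9, 5.0]]
vocab = kmeans(descriptors, k=2)
image = [[0.1, 0.0], [0.0, 0.2]]  # local descriptors of one toy image
feature = max_pool([vq_encode(x, vocab) for x in image])
print(vocab, feature)  # `feature` would be the input to the SVM stage (not shown)
```

Hard assignment is the simplest coding choice; sparse representation based coding as in Yang et al. [2] replaces the one-hot code with a sparse coefficient vector, while the surrounding steps stay the same.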

On the other hand, various sparse representation based dictio-

nary learning algorithms were emerged. And sparse representation

based dictionary learning technique has been gradually revealed in

computer vision areas, such as image classification[7, 8, 9], im-

age inpainting [10, 11], image resolution, face recognition [12, 13]

etc. Different from other factorization methods, such as PCA, non-

negative matrix factorization [14, 15, 16, 17, 18, 19, 20], tensor fac-

This work was done when Bin was with Department of Computer Sci-

ence, Purdue University, West Lafayette.

This work was supported by the National Natural Science Foundation

of China (Grant No.61402535 No.61271407), the Natural Science Founda-

tion for Youths of Shandong Province, China (Grant No.ZR2014FQ001),

Qingdao Science and Technology Project (No.14-2-4-111-jch), and the Fun-

damental Research Funds for the Central Universities, China University of

Petroleum (East China) (Grant No. 14CX02169A).

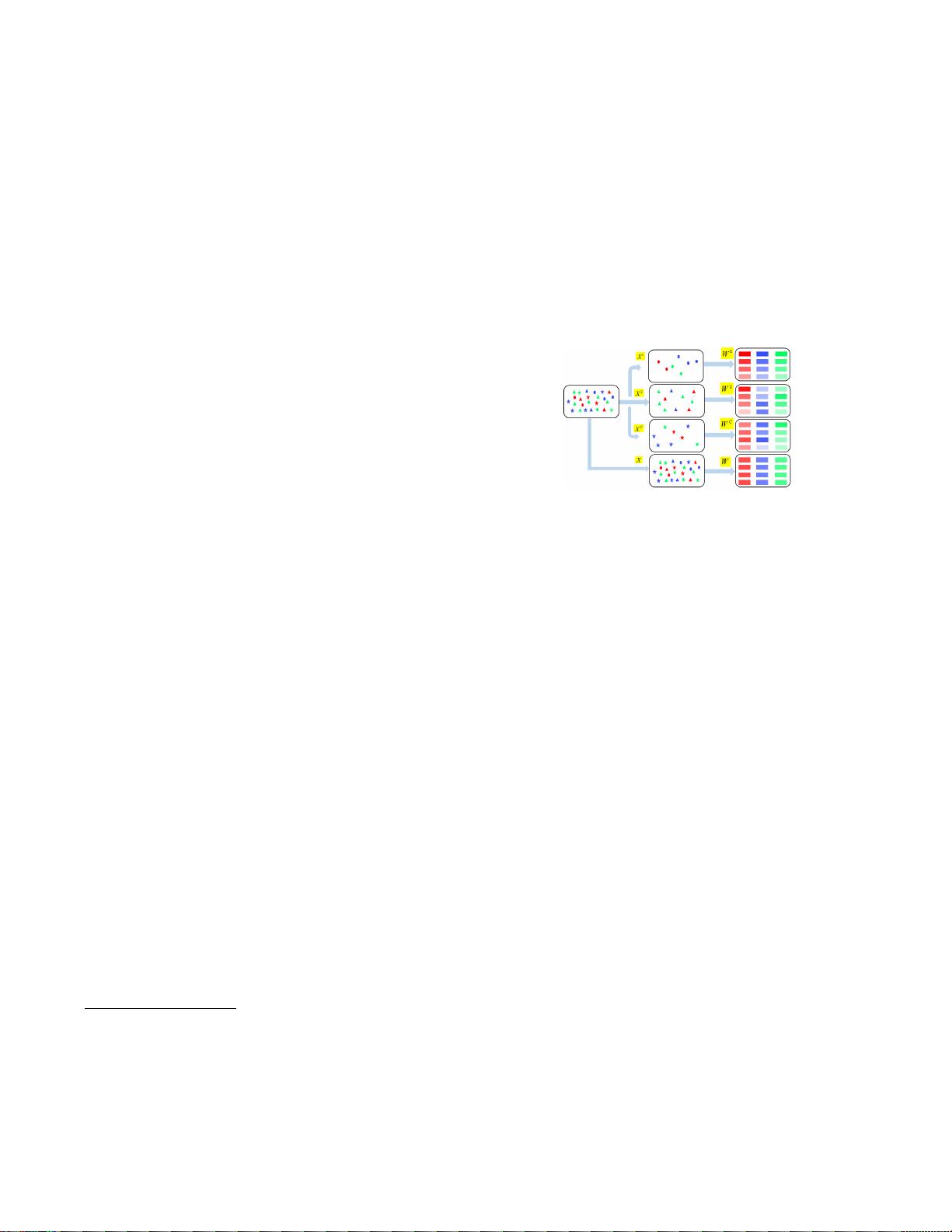

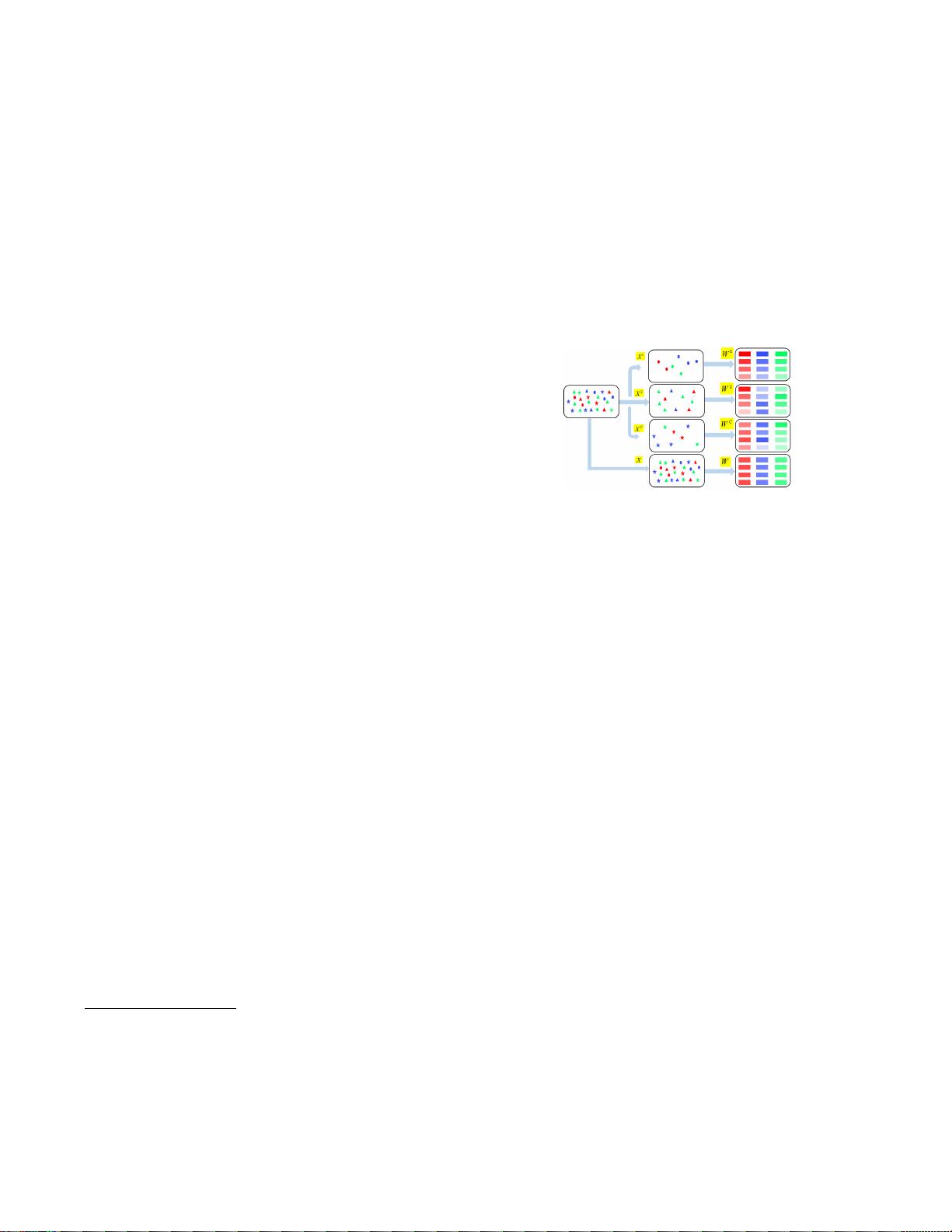

Fig. 1. Framework of the proposed LSDL. The data matrix X is

partitioned into C clusters, then a dictionary is learnt for each region.

The dictionary W learnt for the data matrix X is the smoothness and

consistency of the local dictionaries learnt for each region.

torization [21, 22, 23, 24] and low-rank factorization [25, 26], sparse

representation based dictionary learning has the ability to represent

data by sparse linear combination of bases. Recently, more and more

researchers focused on locality-preserving dictionary learning algo-

rithms due that locality was more essential than sparsity [27]. Wang

et al. [3] considered each word in the vocabulary lying on a man-

ifold to preserve the local information. Wei et al. [28] embedded

the local data structure into sparse representation based dictionary

learning algorithm. Gao et al. [29] incorporated the histogram in-

tersection kernel based laplacian matrix into the sparse representa-

tion based dictionary learning algorithm to enforce the consistence

in sparse representation of similar local features. Zheng et al. [30]

explicitly embedded the vector quantization based laplancian matrix

into dictionary learning algorithm. Liu et al. [5] proposed sparse

representation in k-nearest neighbor to construct the graph model to

improve the accuracy and robustness of image representation.
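The partition-then-learn idea of Fig. 1, under the assumption that dictionary learning is nonlinear globally but linear locally, can be illustrated with a small sketch. It is not the authors' LSDL algorithm: k-means with evenly spaced initial centers stands in for the locality sensitive partition, each region's "dictionary" is replaced by a single local principal direction (a rank-1 linear model) rather than a sparse representation based dictionary, and the global consistency and smoothness regularizer is omitted. The functions `partition`, `fit_local_model`, and `reconstruction_error` are hypothetical.

```python
import math
from typing import List, Tuple

Vec = List[float]


def sq_dist(a: Vec, b: Vec) -> float:
    return sum((x - y) ** 2 for x, y in zip(a, b))


def mean(points: List[Vec]) -> Vec:
    return [sum(col) / len(points) for col in zip(*points)]


def partition(data: List[Vec], c: int, iters: int = 20) -> List[int]:
    """Stand-in locality sensitive split: k-means labels, evenly spaced initial centers."""
    centers = [list(data[i * len(data) // c]) for i in range(c)]
    labels = [0] * len(data)
    for _ in range(iters):
        labels = [min(range(c), key=lambda j: sq_dist(p, centers[j])) for p in data]
        for j in range(c):
            members = [p for p, lab in zip(data, labels) if lab == j]
            if members:
                centers[j] = mean(members)
    return labels


def fit_local_model(points: List[Vec]) -> Tuple[Vec, Vec]:
    """Stand-in local dictionary: region mean plus its leading principal direction."""
    mu = mean(points)
    centered = [[x - m for x, m in zip(p, mu)] for p in points]
    d = len(mu)
    cov = [[sum(q[i] * q[j] for q in centered) for j in range(d)] for i in range(d)]
    v = [1.0 / (i + 1) for i in range(d)]  # asymmetric start for power iteration
    for _ in range(100):
        w = [sum(cov[i][j] * v[j] for j in range(d)) for i in range(d)]
        norm = math.sqrt(sum(x * x for x in w)) or 1.0
        v = [x / norm for x in w]
    return mu, v


def residual(p: Vec, mu: Vec, v: Vec) -> float:
    """Squared error of projecting p onto the line mu + t * v."""
    diff = [x - m for x, m in zip(p, mu)]
    t = sum(a * b for a, b in zip(diff, v))
    return sum((a - t * b) ** 2 for a, b in zip(diff, v))


def reconstruction_error(data: List[Vec], labels: List[int], c: int) -> float:
    """Total residual when every region is approximated by its own linear model."""
    total = 0.0
    for j in range(c):
        members = [p for p, lab in zip(data, labels) if lab == j]
        if members:
            mu, v = fit_local_model(members)
            total += sum(residual(p, mu, v) for p in members)
    return total


# Toy nonlinear manifold: 40 points on the unit circle.
data = [[math.cos(2 * math.pi * t / 40), math.sin(2 * math.pi * t / 40)] for t in range(40)]
global_err = reconstruction_error(data, [0] * len(data), 1)  # one global linear model
local_err = reconstruction_error(data, partition(data, 4), 4)  # C = 4 local models
print(f"global: {global_err:.3f}  local: {local_err:.3f}")
```

With a single global line, the residual is 20, half of the total energy of the 40 unit vectors, because the circle has no preferred direction; the four local lines leave a much smaller residual. This is the intuition behind approximating a globally nonlinear dictionary with locally linear ones.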

However, all these methods are restricted to linear dictionary learning or graph embedded dictionary learning, and are thus unable to capture complex nonlinear properties. Many real-world data require nonlinear dictionary learning because of their distribution. For example, handwritten digits and human faces form manifolds in the feature space, and such complex nonlinear structure is hard to capture with conventional dictionary learning methods, especially on large data sets. Moreover, the kernel trick, a common way to introduce nonlinearity, requires applying the kernel function to all pairs of samples, which is computationally expensive. To model nonlinearity while keeping computational efficiency, we propose a locality sensitive dictionary learning (LSDL) method that approximates the global nonlinear dictionary and captures complex correlation structures. The assumption is that dictionary learning is nonlinear globally but linear locally, i.e., the dictionary learning method is applicable when

978-1-4799-8339-1/15/$31.00 ©2015 IEEE
