102 IEEE/ACM TRANSACTIONS ON AUDIO, SPEECH, AND LANGUAGE PROCESSING, VOL. 25, NO. 1, JANUARY 2017

A Reverberation-Time-Aware Approach to Speech

Dereverberation Based on Deep Neural Networks

Bo Wu, Kehuang Li, Minglei Yang, and Chin-Hui Lee, Fellow, IEEE

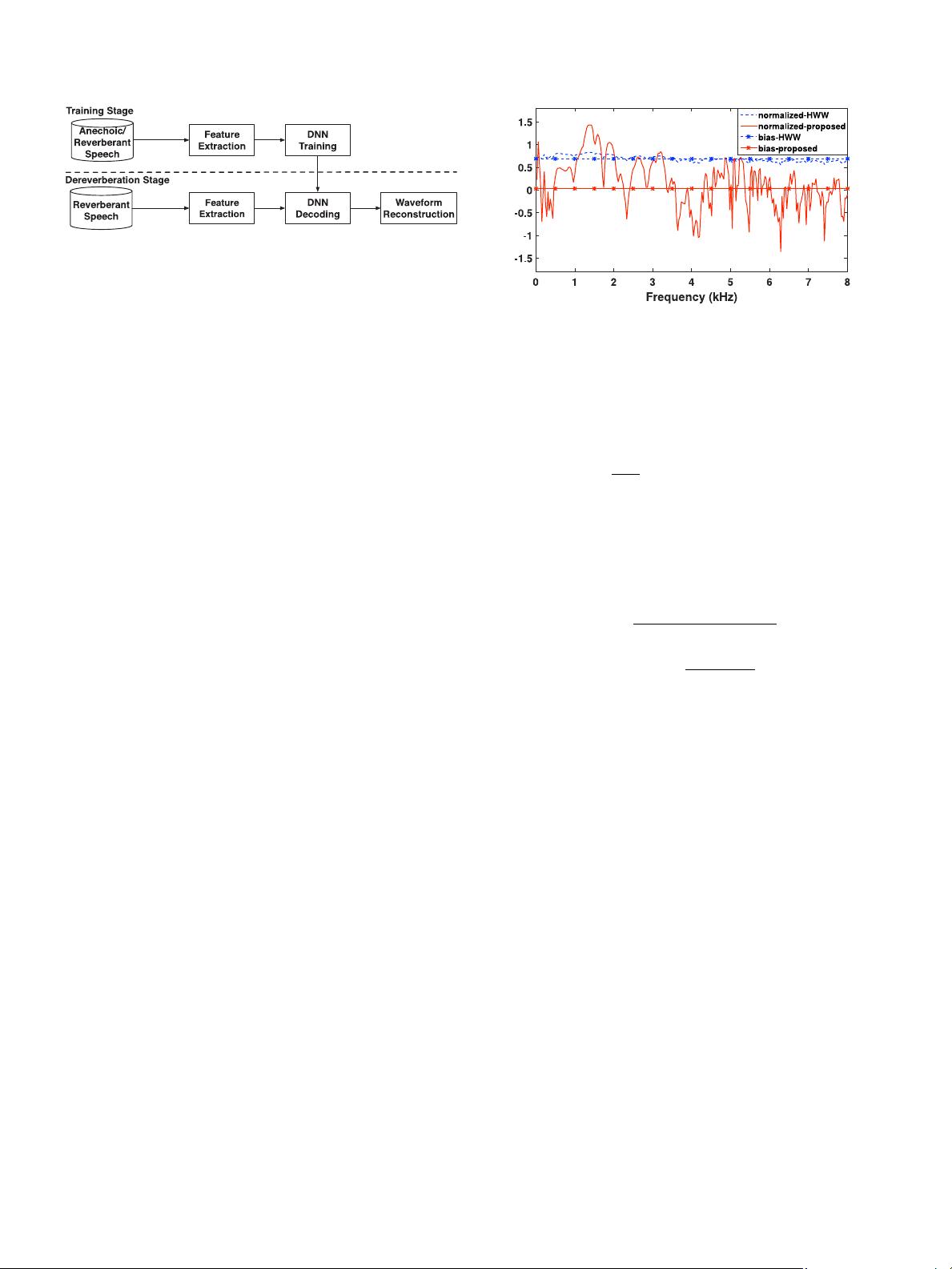

Abstract—A reverberation-time-aware deep-neural-network

(DNN)-based speech dereverberation framework is proposed to

handle a wide range of reverberation times. There are three key

steps in designing a robust system. First, in contrast to sigmoid acti-

vation and min–max normalization in state-of-the-art algorithms,

a linear activation function at the output layer and global mean-

variance normalization of target features are adopted to learn the

complicated nonlinear mapping function from reverberant to ane-

choic speech and to improve the restoration of the low-frequency

and intermediate-frequency contents. Next, two key design param-

eters, namely, frame shift size in speech framing and acoustic con-

text window size at the DNN input, are investigated to show that

RT60-dependent parameters are needed in the DNN training stage

in order to optimize the system performance in diverse reverberant

environments. Finally, the reverberation time is estimated to select

the proper frame shift and context window sizes for feature extrac-

tion before feeding the log-power spectrum features to the trained

DNNs for speech dereverberation. Our experimental results indi-

cate that the proposed framework outperforms the conventional

DNNs without taking the reverberation time into account, while

achieving a performance only slightly worse than the oracle cases

with known reverberation times even for extremely weak and se-

vere reverberant conditions. It also generalizes well to unseen room

sizes, loudspeaker and microphone positions, and recorded room

impulse responses.

Index Terms—Acoustic context, deep neural networks (DNNs),

frame shift, linear output layer, mean-variance normalization,

reverberation-time-aware (RTA), speech dereverberation.

I. INTRODUCTION

W

HEN a microphone is placed at a distance from a talker

in an enclosed space in hands-free, eyes-busy speech

applications, the received signal will be a collection of many

delayed and attenuated copies of the original speech signals,

caused by the reflections from walls, ceilings, and floors [1]. As

a result, reverberation often seriously degrades speech quality

and intelligibility. Such deteriorations can cause decreased per-

formances for automatic speech recognition, hearing aids and

Manuscript received April 29, 2016; revised August 8, 2016 and October

20, 2016; accepted October 20, 2016. Date of publication October 31, 2016;

date of current version November 28, 2016. This work was supported in part

by the National Natural Science Foundation of China (61571344). The work

of B.Wu was supported by a grant from the China Scholarship Council. The

associate editor coordinating the review of this manuscript and approving it for

publication was Prof. DeLiang Wang.

B. Wu and M. Yang are with the National Laboratory of Radar Signal Process-

ing, Xidian University, Xi’an 710126, China (e-mail: rambowu11@gmail.com;

mlyang@xidian.edu.cn).

K. Li and C.-H. Lee are with the School of Electrical and Computer En-

gineering, Georgia Institute of Technology, Atlanta, GA 30332 USA (e-mail:

kehlekernel@gmail.com; chl@ece.gatech.edu).

Color versions of one or more of the figures in this paper are available online

at http://ieeexplore.ieee.org.

Digital Object Identifier 10.1109/TASLP.2016.2623559

source localization. Thus, an effective dereverberation solution

will benefit many speech applications.

Many dereverberation techniques have been proposed in the

past (e.g., [2]–[6]). One direct way is to estimate an inverse

filter of the room impulse response (RIR) [7] to deconvolve the

reverberant signal. However, a minimum phase assumption is

often needed, which is almost never satisfied in practice [7].

The RIR can also be varying in time and hard to estimate [1].

The work presented in [2] estimated a fixed length of an inverse

filter of RIR by maximizing the kurtosis of the linear prediction

(LP) residual for the reduction of early reverberation, without

taking into account the impact of RIR in distinct reverberant

environments on the system performance. The inverse filtering

is only effective in a short reverberation time (RT60) [8] range,

from 0.2 to 0.4 s. Mosayyebpour et al. [4] presented an iterative

method to blindly determine the filter length according to the

reverberant condition, which could be used in highly reverber-

ant rooms. Nevertheless, the stopping criterion was empirically

chosen. Kinoshita et al. [3] estimated the late reverberations

using long-term multi-step linear prediction, and then reduced

the late reverberation effect by employing spectral subtraction.

Some studies attempted to separate speech and reverberation

via homomorphic transformation [9], [10]. Nevertheless, they

are not very effective when the human auditory system is the

target. Other methods dealt with dereverberation by exploiting

the essential properties of speech such as harmonic filtering

[11]. The dereverberation filter is only estimated from voiced

speech segments, therefore achieving a poor dereverberation

performance for unvoiced speech segments.

Recently, due to their strong regression capabilities, deep neu-

ral networks (DNNs) [12], [13] have also been utilized in speech

enhancement [14], [15], source separation[16], [17] and band-

width expansion [18], [19]. Han et al. [5], [20] also proposed

to dereverberate speech using DNNs, to learn a spectral map-

ping from reverberant to anechoic speech. Although the results

reflect the state-of-the-art performances, they represented the

DNN prediction of log-spectral magnitude into an unit range

and normalized the target features into the same range, pre-

venting a good dereverberation performance, especially at low

RT60s. Moreover, their system is environmentally insensitive,

not being to realize its full potential.

In this study, we utilize an improved DNN dereverberation

system we proposed recently [21] by adopting a linear output

layer and globally normalizing the target features into zero mean

and unit variance, and then investigate the effects of frame shift

and acoustic context sizes on the dereverberated speech qual-

ity using DNNs at different RT60s. We show that on the one

hand low frame shifts can not obtain good performances in

2329-9290 © 2016 IEEE. Personal use is permitted, but republication/redistribution requires IEEE permission.

See http://www.ieee.org/publications

standards/publications/rights/index.html for more information.

我的内容管理

展开

我的内容管理

展开

我的资源

快来上传第一个资源

我的资源

快来上传第一个资源

我的收益 登录查看自己的收益

我的收益 登录查看自己的收益 我的积分

登录查看自己的积分

我的积分

登录查看自己的积分

我的C币

登录后查看C币余额

我的C币

登录后查看C币余额

我的收藏

我的收藏  我的下载

我的下载  下载帮助

下载帮助

前往需求广场,查看用户热搜

前往需求广场,查看用户热搜

信息提交成功

信息提交成功