PoolingNd层(Pooling层)1

需积分: 0 53 浏览量

更新于2022-08-03

收藏 460KB PDF 举报

在TensorRT中,PoolingNd层(也称为Pooling层)是一种用于神经网络的降采样操作,主要用于减少输入特征图的维度,同时保持其主要特征。它通常用于卷积神经网络(CNNs)中,以降低计算复杂度并提高模型的泛化能力。在TensorRT 8之前的版本中,我们可以使用`add_pooling_nd`或`add_pooling`方法来添加这个层。然而,从TensorRT 9开始,这些方法已被废弃,推荐使用`add_pooling_nd`。

**参数详解:**

1. **type**: 这个参数定义了池化类型,可以是最大池化(`trt.PoolingType.MAX`)或平均池化(`trt.PoolingType.AVERAGE`)。最大池化选取每个窗口内的最大值,而平均池化则计算窗口内所有元素的平均值。

2. **window_size_nd (window_size)**: 定义了池化窗口的大小,可以是一个N维的元组。例如,对于二维池化,窗口大小可能为`(2, 2)`,表示在宽度和高度方向上进行2x2的池化。

3. **stride_nd (stride)**: 表示滑动窗口在每个维度上的步长。步长决定了池化窗口在特征图上移动的距离。步长通常等于窗口大小,但也可以根据需求设置为其他值。

4. **padding_nd (padding)**: 用于指定填充的维度。填充可以在输入特征图的边缘添加额外的零,以便保持输出尺寸与输入尺寸相同。有三种填充模式:`same`、`valid`和`explicit`。在TensorRT中,这些模式可以通过`pre_padding`和`post_padding`来手动设置。

5. **average_count_excludes_padding**: 如果在平均池化中设置为True,那么计算平均值时将忽略填充区域。如果设置为False,那么包括填充区域。

**示例代码解析:**

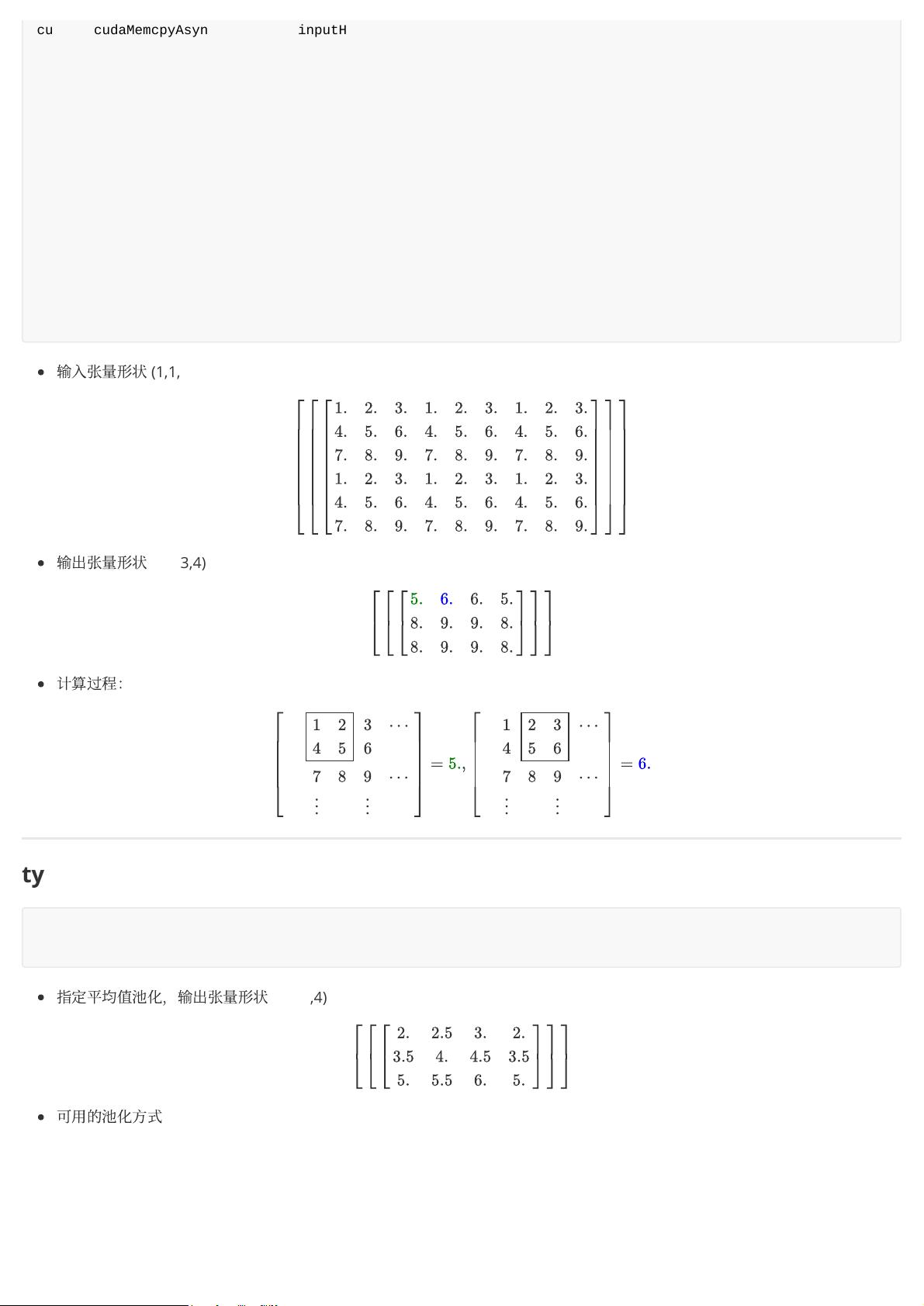

在提供的示例代码中,创建了一个三维池化层,输入张量的形状为 `(1, 1, 6, 9)`,即1个样本,1个通道,高度6和宽度9。池化窗口大小为 `(2, 2)`,没有指定步长,因此默认为 `(2, 2)`。由于未设置padding,所以没有填充。使用的是最大池化(`trt.PoolingType.MAX`)。

代码首先定义了输入张量的数据,并创建了TensorRT构建器、网络和配置。接着,通过`add_pooling_nd`方法添加了Pool Layer,并将其标记为网络的输出。然后,构建并序列化网络,创建CUDA引擎,最后分配设备内存,执行计算并将结果从设备拷贝回主机。

在计算过程中,注意到输入张量的形状从 `(1, 1, 6, 9)` 变为了 `(1, 1, 3, 4)`,这是因为池化窗口覆盖了原始特征图的一部分,导致输出的维度减小。由于没有使用填充,所以输出的形状是基于输入形状和池化窗口大小计算得出的。

PoolingNd层在TensorRT中用于执行多维池化操作,它提供了灵活性,允许用户自定义池化窗口大小、步长和填充,以适应不同的神经网络架构。在更新到TensorRT 9及以上版本时,需要将旧的API替换为`add_pooling_nd`,以保持兼容性和最佳性能。

lowsapkj

- 粉丝: 1015

- 资源: 312

最新资源

- 白色简洁风格的个人博客模板下载.rar

- 白色简洁风格的高山雪花登录注册框源码下载.zip

- 白色简洁风格的格调精品餐厅整站网站源码下载.zip

- 白色简洁风格的工业制造整站网站源码下载.zip

- 白色简洁风格的工艺品展览企业网站源码下载.zip

- 白色简洁风格的国际货运企业网站模板.rar

- 白色简洁风格的国外求职网页CSS模板.zip

- 白色简洁风格的果园水果主题整站网站源码下载.zip

- 白色简洁风格的国外设计团队CSS3网站模板下载.zip

- 白色简洁风格的韩国个人网页源码下载.zip

- 白色简洁风格的海上冲浪网页企业网站模板下载.rar

- 白色简洁风格的海边度假旅游HTML模板.zip

- 白色简洁风格的汉堡快餐外卖企业网站模板.zip

- 白色简洁风格的豪华海景酒店整站网站模板.zip

- 白色简洁风格的汉堡薯条快餐企业网站模板.zip

- 白色简洁风格的户外风筝CSS网站模板.zip